Trigram Models Estimate a distribution P ( w i | w 1 , w 2 , . . . w - PowerPoint PPT Presentation

Trigram Models Estimate a distribution P ( w i | w 1 , w 2 , . . . w i 1 ) given previous history w 1 , . . . , w i 1 = Third, the notion grammatical in Englishcannot be identified in any way with the notion high

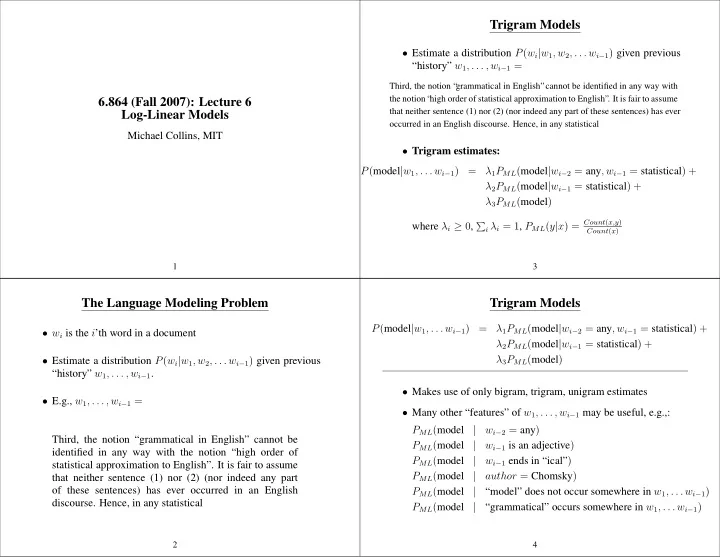

6.864 (Fall 2007): Lecture 6
Log-Linear Models
Michael Collins, MIT

The Language Modeling Problem
• $w_i$ is the $i$'th word in a document
• Estimate a distribution $P(w_i \mid w_1, w_2, \ldots, w_{i-1})$ given the previous "history" $w_1, \ldots, w_{i-1}$.
• E.g., $w_1, \ldots, w_{i-1}$ =
  Third, the notion "grammatical in English" cannot be identified in any way with the notion "high order of statistical approximation to English". It is fair to assume that neither sentence (1) nor (2) (nor indeed any part of these sentences) has ever occurred in an English discourse. Hence, in any statistical

Trigram Models
• Estimate a distribution $P(w_i \mid w_1, w_2, \ldots, w_{i-1})$ given the previous "history" $w_1, \ldots, w_{i-1}$ (the passage above, ending "... Hence, in any statistical")
• Trigram estimates:
$$P(\text{model} \mid w_1, \ldots, w_{i-1}) = \lambda_1 P_{ML}(\text{model} \mid w_{i-2} = \text{any}, w_{i-1} = \text{statistical}) + \lambda_2 P_{ML}(\text{model} \mid w_{i-1} = \text{statistical}) + \lambda_3 P_{ML}(\text{model})$$
  where $\lambda_i \ge 0$, $\sum_i \lambda_i = 1$, and $P_{ML}(y \mid x) = \dfrac{\text{Count}(x, y)}{\text{Count}(x)}$

Trigram Models (continued)
• The interpolated estimate above makes use of only bigram, trigram, and unigram estimates
• Many other "features" of $w_1, \ldots, w_{i-1}$ may be useful, e.g.:
  $P_{ML}(\text{model} \mid w_{i-2} = \text{any})$
  $P_{ML}(\text{model} \mid w_{i-1} \text{ is an adjective})$
  $P_{ML}(\text{model} \mid w_{i-1} \text{ ends in "ical"})$
  $P_{ML}(\text{model} \mid \text{author} = \text{Chomsky})$
  $P_{ML}(\text{model} \mid \text{"model" does not occur somewhere in } w_1, \ldots, w_{i-1})$
  $P_{ML}(\text{model} \mid \text{"grammatical" occurs somewhere in } w_1, \ldots, w_{i-1})$
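The following is a minimal sketch of the linearly interpolated trigram estimate described above. It computes each $P_{ML}$ term as Count(x, y) / Count(x) from raw token counts. The class name, the example weights (0.5, 0.3, 0.2), and the choice to return 0 for a term whose history was never seen are all illustrative assumptions and do not come from the slides.

```python
from collections import Counter
from typing import Sequence, Tuple


class InterpolatedTrigramModel:
    """Linearly interpolated trigram model (illustrative sketch).

    P(w | u, v) = l1 * P_ML(w | u, v) + l2 * P_ML(w | v) + l3 * P_ML(w),
    with P_ML(y | x) = Count(x, y) / Count(x). A term whose history count is
    zero is treated as 0 (an assumption; the slides do not cover this case).
    """

    def __init__(self, tokens: Sequence[str],
                 lambdas: Tuple[float, float, float] = (0.5, 0.3, 0.2)) -> None:
        # Hypothetical default weights; the slides only require lambda_i >= 0, sum = 1.
        if any(lam < 0 for lam in lambdas) or abs(sum(lambdas) - 1.0) > 1e-9:
            raise ValueError("lambdas must be non-negative and sum to 1")
        toks = list(tokens)
        self.lambdas = lambdas
        self.total = len(toks)
        self.unigrams = Counter(toks)
        self.bigrams = Counter(zip(toks, toks[1:]))
        self.trigrams = Counter(zip(toks, toks[1:], toks[2:]))
        # Count(x) for each history: how often a word / word pair is followed by another word.
        self.bigram_ctx = Counter(toks[:-1])
        self.trigram_ctx = Counter(zip(toks[:-2], toks[1:-1]))

    @staticmethod
    def _ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    def prob(self, w: str, u: str, v: str) -> float:
        """Interpolated estimate of P(w_i = w | w_{i-2} = u, w_{i-1} = v)."""
        l1, l2, l3 = self.lambdas
        p_tri = self._ratio(self.trigrams[(u, v, w)], self.trigram_ctx[(u, v)])
        p_bi = self._ratio(self.bigrams[(v, w)], self.bigram_ctx[v])
        p_uni = self._ratio(self.unigrams[w], self.total)
        return l1 * p_tri + l2 * p_bi + l3 * p_uni


tokens = "in any statistical model , any statistical model".split()
lm = InterpolatedTrigramModel(tokens)
print(lm.prob("model", "any", "statistical"))
```

Note that only three fixed kinds of history (trigram, bigram, unigram) enter the sum. Each extra "feature" from the list above would need its own count table and its own $\lambda$.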

A Naive Approach
$$\begin{aligned}
P(\text{model} \mid w_1, \ldots, w_{i-1}) = {} & \lambda_1 P_{ML}(\text{model} \mid w_{i-2} = \text{any}, w_{i-1} = \text{statistical}) \\
& + \lambda_2 P_{ML}(\text{model} \mid w_{i-1} = \text{statistical}) \\
& + \lambda_3 P_{ML}(\text{model}) \\
& + \lambda_4 P_{ML}(\text{model} \mid w_{i-2} = \text{any}) \\
& + \lambda_5 P_{ML}(\text{model} \mid w_{i-1} \text{ is an adjective}) \\
& + \lambda_6 P_{ML}(\text{model} \mid w_{i-1} \text{ ends in "ical"}) \\
& + \lambda_7 P_{ML}(\text{model} \mid \text{author} = \text{Chomsky}) \\
& + \lambda_8 P_{ML}(\text{model} \mid \text{"model" does not occur somewhere in } w_1, \ldots, w_{i-1}) \\
& + \lambda_9 P_{ML}(\text{model} \mid \text{"grammatical" occurs somewhere in } w_1, \ldots, w_{i-1})
\end{aligned}$$
This quickly becomes very unwieldy...

A Second Example: Part-of-Speech Tagging
INPUT: Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results.
OUTPUT: Profits/N soared/V at/P Boeing/N Co./N ,/, easily/ADV topping/V forecasts/N on/P Wall/N Street/N ,/, as/P their/POSS CEO/N Alan/N Mulally/N announced/V first/ADJ quarter/N results/N ./.
N = Noun, V = Verb, P = Preposition, Adv = Adverb, Adj = Adjective, ...

A Second Example: Part-of-Speech Tagging (continued)
Hispaniola/NNP quickly/RB became/VB an/DT important/JJ base/?? from which Spain expanded its empire into the rest of the Western Hemisphere .
• There are many possible tags in the position ??: {NN, NNS, Vt, Vi, IN, DT, ...}
• The task: model the distribution $P(t_i \mid t_1, \ldots, t_{i-1}, w_1 \ldots w_n)$, where $t_i$ is the $i$'th tag in the sequence and $w_i$ is the $i$'th word
• Again: many "features" of $t_1, \ldots, t_{i-1}, w_1 \ldots w_n$ may be relevant:
  $P_{ML}(\text{NN} \mid w_i = \text{base})$
  $P_{ML}(\text{NN} \mid t_{i-1} \text{ is JJ})$
  $P_{ML}(\text{NN} \mid w_i \text{ ends in "e"})$
  $P_{ML}(\text{NN} \mid w_i \text{ ends in "se"})$
  $P_{ML}(\text{NN} \mid w_{i-1} \text{ is "important"})$
  $P_{ML}(\text{NN} \mid w_{i+1} \text{ is "from"})$
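To make the point about "many features" concrete, here is a hedged sketch that computes several of the conditional ML estimates listed above from a tiny tagged corpus. The corpus, the function name `ml_estimate`, and the condition names are hypothetical and serve only as illustration. Each estimate is easy to compute on its own. The hard part, which the naive $\lambda$-weighted sum handles poorly, is combining them.

```python
from typing import Callable, List, Sequence, Tuple

TaggedSentence = List[Tuple[str, str]]
# condition(words, tags, i) -> bool, where i is a 0-based position in the sentence
Condition = Callable[[Sequence[str], Sequence[str], int], bool]

# Hypothetical toy corpus, for illustration only.
TOY_CORPUS: List[TaggedSentence] = [
    [("an", "DT"), ("important", "JJ"), ("base", "NN"), ("from", "IN"), ("which", "WDT")],
    [("we", "PRP"), ("base", "Vt"), ("prices", "NNS"), ("on", "IN"), ("costs", "NNS")],
    [("a", "DT"), ("large", "JJ"), ("house", "NN")],
]


def ml_estimate(corpus: List[TaggedSentence], condition: Condition, tag: str) -> float:
    """P_ML(tag | condition) = Count(condition, tag) / Count(condition); 0 if unseen."""
    joint = total = 0
    for sentence in corpus:
        words = [w for w, _ in sentence]
        tags = [t for _, t in sentence]
        for i in range(len(sentence)):
            if condition(words, tags, i):
                total += 1
                if tags[i] == tag:
                    joint += 1
    return joint / total if total else 0.0


# One hand-written condition per estimate listed on the slide.
CONDITIONS = {
    "w_i = base": lambda ws, ts, i: ws[i] == "base",
    "t_{i-1} is JJ": lambda ws, ts, i: i > 0 and ts[i - 1] == "JJ",
    'w_i ends in "se"': lambda ws, ts, i: ws[i].endswith("se"),
    'w_{i+1} is "from"': lambda ws, ts, i: i + 1 < len(ws) and ws[i + 1] == "from",
}

for name, cond in CONDITIONS.items():
    print(f"P_ML(NN | {name}) = {ml_estimate(TOY_CORPUS, cond, 'NN'):.3f}")
```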

Overview
• Log-linear models
• The maximum-entropy property
• Smoothing, feature selection, etc. in log-linear models

The General Problem
• We have some input domain $\mathcal{X}$
• We have a finite label set $\mathcal{Y}$
• The aim is to provide a conditional probability $P(y \mid x)$ for any $x, y$ where $x \in \mathcal{X}$, $y \in \mathcal{Y}$

Language Modeling
• $x$ is a "history" $w_1, w_2, \ldots, w_{i-1}$, e.g.,
  Third, the notion "grammatical in English" cannot be identified in any way with the notion "high order of statistical approximation to English". It is fair to assume that neither sentence (1) nor (2) (nor indeed any part of these sentences) has ever occurred in an English discourse. Hence, in any statistical
• $y$ is an "outcome" $w_i$

Feature Vector Representations
• The aim is to provide a conditional probability $P(y \mid x)$ for a "decision" $y$ given a "history" $x$
• A feature is a function $f(x, y) \in \mathbb{R}$ (often binary features or indicator functions $f(x, y) \in \{0, 1\}$).
• Say we have $m$ features $f_k$ for $k = 1 \ldots m$ ⇒ a feature vector $\mathbf{f}(x, y) \in \mathbb{R}^m$ for any $x, y$
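The feature-vector idea above can be sketched in a few lines. Each feature is a function of a history/outcome pair. Stacking $m$ such functions gives $\mathbf{f}(x, y) \in \mathbb{R}^m$. The feature functions below are hypothetical indicator features in the spirit of the slides, not a prescribed set. The history is represented as a list of the words $w_1, \ldots, w_{i-1}$, so `x[-1]` is $w_{i-1}$.

```python
from typing import Callable, List, Sequence

History = Sequence[str]                     # x: the words w_1 ... w_{i-1}
Feature = Callable[[History, str], float]   # f(x, y) -> a real number


def feature_vector(x: History, y: str, features: List[Feature]) -> List[float]:
    """Return f(x, y) = (f_1(x, y), ..., f_m(x, y)) in R^m."""
    return [f(x, y) for f in features]


# Hypothetical indicator features (values in {0, 1}).
def f_model(x: History, y: str) -> float:
    return 1.0 if y == "model" else 0.0


def f_model_after_statistical(x: History, y: str) -> float:
    return 1.0 if y == "model" and len(x) >= 1 and x[-1] == "statistical" else 0.0


def f_model_after_ical(x: History, y: str) -> float:
    return 1.0 if y == "model" and len(x) >= 1 and x[-1].endswith("ical") else 0.0


FEATURES = [f_model, f_model_after_statistical, f_model_after_ical]

history = "Hence , in any statistical".split()
for y in ("model", "approach"):
    print(y, feature_vector(history, y, FEATURES))
# model [1.0, 1.0, 1.0]
# approach [0.0, 0.0, 0.0]
```

Because every feature looks at both $x$ and $y$, the same history yields a different vector for each candidate outcome.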

Language Modeling
• $x$ is a "history" $w_1, w_2, \ldots, w_{i-1}$, e.g.,
  Third, the notion "grammatical in English" cannot be identified in any way with the notion "high order of statistical approximation to English". It is fair to assume that neither sentence (1) nor (2) (nor indeed any part of these sentences) has ever occurred in an English discourse. Hence, in any statistical
• $y$ is an "outcome" $w_i$
• Example features:
$$f_1(x, y) = \begin{cases} 1 & \text{if } y = \text{model} \\ 0 & \text{otherwise} \end{cases}$$
$$f_2(x, y) = \begin{cases} 1 & \text{if } y = \text{model and } w_{i-1} = \text{statistical} \\ 0 & \text{otherwise} \end{cases}$$
$$f_3(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, w_{i-2} = \text{any}, w_{i-1} = \text{statistical} \\ 0 & \text{otherwise} \end{cases}$$
$$f_4(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, w_{i-2} = \text{any} \\ 0 & \text{otherwise} \end{cases}$$
$$f_5(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, w_{i-1} \text{ is an adjective} \\ 0 & \text{otherwise} \end{cases}$$
$$f_6(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, w_{i-1} \text{ ends in "ical"} \\ 0 & \text{otherwise} \end{cases}$$
$$f_7(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, \text{author} = \text{Chomsky} \\ 0 & \text{otherwise} \end{cases}$$
$$f_8(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, \text{"model" is not in } w_1, \ldots, w_{i-1} \\ 0 & \text{otherwise} \end{cases}$$
$$f_9(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, \text{"grammatical" is in } w_1, \ldots, w_{i-1} \\ 0 & \text{otherwise} \end{cases}$$

Defining Features in Practice
• We had the following "trigram" feature:
$$f_3(x, y) = \begin{cases} 1 & \text{if } y = \text{model}, w_{i-2} = \text{any}, w_{i-1} = \text{statistical} \\ 0 & \text{otherwise} \end{cases}$$
• In practice, we would probably introduce one trigram feature for every trigram seen in the training data: i.e., for all trigrams $(u, v, w)$ seen in training data, create a feature
$$f_{N(u,v,w)}(x, y) = \begin{cases} 1 & \text{if } y = w, w_{i-2} = u, w_{i-1} = v \\ 0 & \text{otherwise} \end{cases}$$
  where $N(u, v, w)$ is a function that maps each $(u, v, w)$ trigram to a different integer
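A possible way to realize $N(u, v, w)$ is to number trigrams in the order they are first seen in the training data. The slides only require that distinct trigrams get distinct integers, so this ordering is one assumption among many. The helper names below are hypothetical. Since at most one trigram feature can fire for a given $(x, y)$, the sketch returns the active feature indices rather than a dense vector with one entry per trigram.

```python
from typing import Dict, List, Sequence, Tuple

Trigram = Tuple[str, str, str]


def build_trigram_index(tokens: Sequence[str]) -> Dict[Trigram, int]:
    """Assign a distinct integer N(u, v, w) to each trigram seen in training."""
    index: Dict[Trigram, int] = {}
    for trigram in zip(tokens, tokens[1:], tokens[2:]):
        if trigram not in index:
            index[trigram] = len(index)   # first-seen order (an arbitrary choice)
    return index


def active_trigram_features(history: Sequence[str], y: str,
                            index: Dict[Trigram, int]) -> List[int]:
    """Indices k with f_k(x, y) = 1 among the trigram features.

    f_{N(u,v,w)}(x, y) = 1 iff y = w, w_{i-2} = u and w_{i-1} = v, so at most
    one index is returned; trigrams unseen in training have no feature.
    """
    if len(history) < 2:
        return []
    key = (history[-2], history[-1], y)
    return [index[key]] if key in index else []


train = "in any statistical model , any statistical test".split()
index = build_trigram_index(train)
print(active_trigram_features("Hence , in any statistical".split(), "model", index))
print(active_trigram_features("Hence , in any statistical".split(), "theory", index))
```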

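The POS-Tagging Example
• Each $x$ is a "history" of the form $\langle t_1, t_2, \ldots, t_{i-1}, w_1 \ldots w_n, i \rangle$
• Each $y$ is a POS tag, such as NN, NNS, Vt, Vi, IN, DT, ...
• We have $m$ features $f_k(x, y)$ for $k = 1 \ldots m$
For example:
$$f_1(x, y) = \begin{cases} 1 & \text{if current word } w_i \text{ is base and } y = \text{Vt} \\ 0 & \text{otherwise} \end{cases}$$
$$f_2(x, y) = \begin{cases} 1 & \text{if current word } w_i \text{ ends in ing and } y = \text{VBG} \\ 0 & \text{otherwise} \end{cases}$$
...

The Full Set of Features in [Ratnaparkhi 96]
• Word/tag features for all word/tag pairs, e.g.,
$$f_{100}(x, y) = \begin{cases} 1 & \text{if current word } w_i \text{ is base and } y = \text{Vt} \\ 0 & \text{otherwise} \end{cases}$$
• Spelling features for all prefixes/suffixes of length $\le 4$, e.g.,
$$f_{101}(x, y) = \begin{cases} 1 & \text{if current word } w_i \text{ ends in ing and } y = \text{VBG} \\ 0 & \text{otherwise} \end{cases}$$
$$f_{102}(x, y) = \begin{cases} 1 & \text{if current word } w_i \text{ starts with pre and } y = \text{NN} \\ 0 & \text{otherwise} \end{cases}$$
• Contextual features, e.g.,
$$f_{103}(x, y) = \begin{cases} 1 & \text{if } \langle t_{i-2}, t_{i-1}, y \rangle = \langle \text{DT}, \text{JJ}, \text{Vt} \rangle \\ 0 & \text{otherwise} \end{cases}$$
$$f_{104}(x, y) = \begin{cases} 1 & \text{if } \langle t_{i-1}, y \rangle = \langle \text{JJ}, \text{Vt} \rangle \\ 0 & \text{otherwise} \end{cases}$$
$$f_{105}(x, y) = \begin{cases} 1 & \text{if } \langle y \rangle = \langle \text{Vt} \rangle \\ 0 & \text{otherwise} \end{cases}$$
$$f_{106}(x, y) = \begin{cases} 1 & \text{if previous word } w_{i-1} = \text{the and } y = \text{Vt} \\ 0 & \text{otherwise} \end{cases}$$
$$f_{107}(x, y) = \begin{cases} 1 & \text{if next word } w_{i+1} = \text{the and } y = \text{Vt} \\ 0 & \text{otherwise} \end{cases}$$

The Final Result
• We can come up with practically any questions (features) regarding history/tag pairs.
• For a given history $x \in \mathcal{X}$, each label in $\mathcal{Y}$ is mapped to a different feature vector:
$$\begin{aligned}
\mathbf{f}(\langle \text{JJ}, \text{DT}, \langle \text{Hispaniola}, \ldots \rangle, 6 \rangle, \text{Vt}) &= 1001011001001100110 \\
\mathbf{f}(\langle \text{JJ}, \text{DT}, \langle \text{Hispaniola}, \ldots \rangle, 6 \rangle, \text{JJ}) &= 0110010101011110010 \\
\mathbf{f}(\langle \text{JJ}, \text{DT}, \langle \text{Hispaniola}, \ldots \rangle, 6 \rangle, \text{NN}) &= 0001111101001100100 \\
\mathbf{f}(\langle \text{JJ}, \text{DT}, \langle \text{Hispaniola}, \ldots \rangle, 6 \rangle, \text{IN}) &= 0001011011000000010
\end{aligned}$$
...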
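Below is a hedged sketch of how the word/tag, spelling, and contextual templates listed above could produce a different binary vector for each candidate tag. It is loosely modeled on the templates on these slides and is not Ratnaparkhi's exact feature set. The feature-name strings, the "*" and "STOP" padding symbols, and the helper names are all hypothetical. The feature index is built from the example history itself purely for demonstration; in practice it would come from training data.

```python
from typing import Dict, List, NamedTuple, Sequence


class History(NamedTuple):
    """x = <t_1 ... t_{i-1}, w_1 ... w_n, i>; i is 1-based, as on the slides."""
    tags: Sequence[str]   # t_1 ... t_{i-1}
    words: Sequence[str]  # w_1 ... w_n
    i: int


def feature_names(x: History, y: str) -> List[str]:
    """Names of the indicator features that equal 1 for the pair (x, y)."""
    w = x.words[x.i - 1]                                      # current word w_i
    t1 = x.tags[-1] if len(x.tags) >= 1 else "*"              # t_{i-1} ("*" = padding)
    t2 = x.tags[-2] if len(x.tags) >= 2 else "*"              # t_{i-2}
    prev_w = x.words[x.i - 2] if x.i >= 2 else "*"            # w_{i-1}
    next_w = x.words[x.i] if x.i < len(x.words) else "STOP"   # w_{i+1}
    names = [f"word={w}|y={y}"]                               # word/tag feature
    for k in range(1, min(4, len(w)) + 1):                    # spelling, length <= 4
        names.append(f"prefix={w[:k]}|y={y}")
        names.append(f"suffix={w[-k:]}|y={y}")
    names += [                                                # contextual features
        f"tags={t2},{t1}|y={y}",
        f"tag={t1}|y={y}",
        f"y={y}",
        f"prev_word={prev_w}|y={y}",
        f"next_word={next_w}|y={y}",
    ]
    return names


def to_vector(names: List[str], index: Dict[str, int]) -> List[int]:
    """Binary feature vector: position index[name] is 1 for every firing feature."""
    vec = [0] * len(index)
    for name in names:
        if name in index:
            vec[index[name]] = 1
    return vec


words = "Hispaniola quickly became an important base from which Spain expanded".split()
x = History(tags=["NNP", "RB", "VB", "DT", "JJ"], words=words, i=6)  # w_6 = base
labels = ["Vt", "JJ", "NN", "IN"]

# Hypothetical feature index, built here from the example only.
index: Dict[str, int] = {}
for y in labels:
    for name in feature_names(x, y):
        index.setdefault(name, len(index))

for y in labels:
    print(y, "".join(str(b) for b in to_vector(feature_names(x, y), index)))
```

Every template conjoins a property of the history with the candidate tag $y$. That is why each label in $\mathcal{Y}$ ends up with its own feature vector for the same history.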
