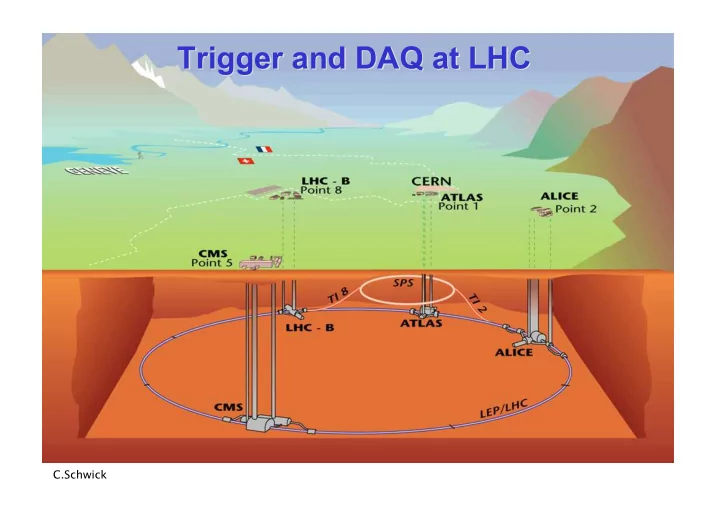

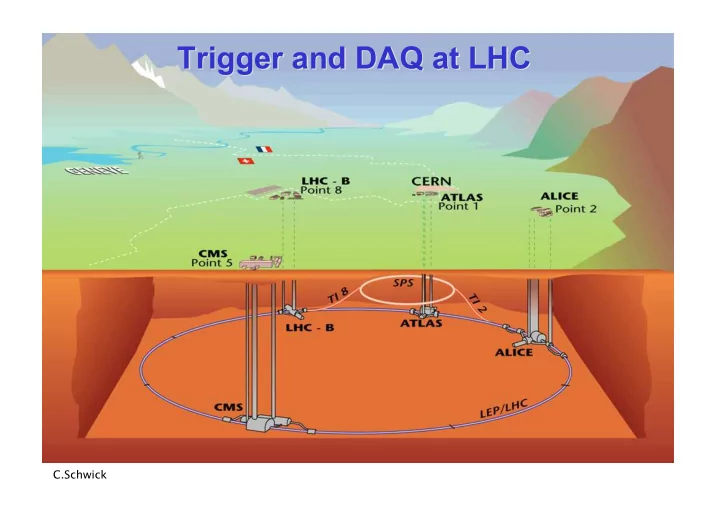

Trigger and DAQ at LHC

C. Schwick

Contents

INTRODUCTION
• The context: LHC & experiments

PART 1: Trigger at LHC
• Requirements & Concepts
• Muon and Calorimeter triggers (CMS and ATLAS)
• Specific solutions (ALICE, LHCb)
• Hardware implementation

PART 2: Data Flow, Event Building and higher trigger levels
• Data Flow of the 4 LHC experiments
• Data Readout (Interface to central DAQ systems)
• Event Building: CMS as an example
• Software: some technologies

C. Schwick (CERN/CMS)

LHC experiments: Lvl 1 rate vs size

[Figure: Lvl 1 rate vs event size. Legend: LHC experiments; previous or current experiments]

Further trigger levels

First level trigger rate still too high for permanent storage.

Example CMS, ATLAS:
• Typical event size: 1 MB (ATLAS, CMS)
• 1 MB @ 100 kHz = 100 GB/s
• “Reasonable” data rate to permanent storage: 100 MB/s (CMS & ATLAS) … 1 GB/s (ALICE)

More trigger levels are needed to further reduce the fraction of less interesting events in the selected sample.
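The arithmetic on this slide can be reproduced in a few lines. The sketch below uses only the numbers quoted above (1 MB events, 100 kHz Lvl-1 rate, 100 MB/s to storage); the function and variable names are illustrative and not part of the original material.

```python
MB = 1e6  # bytes
GB = 1e9  # bytes

def data_rate(event_size_bytes: float, trigger_rate_hz: float) -> float:
    """Data rate in bytes/s produced by events of a given size at a given rate."""
    return event_size_bytes * trigger_rate_hz

# Numbers quoted on the slide for CMS/ATLAS.
event_size = 1 * MB
lvl1_rate_hz = 100e3
storage_budget = 100 * MB  # "reasonable" rate to permanent storage

lvl1_output = data_rate(event_size, lvl1_rate_hz)
# Factor by which the higher trigger levels must reduce the data volume.
required_reduction = lvl1_output / storage_budget
# Event rate that fits into the storage budget at this event size.
storable_rate_hz = storage_budget / event_size

print(f"Lvl-1 output: {lvl1_output / GB:.0f} GB/s")
print(f"Required reduction: {required_reduction:.0f}x")
print(f"Storable event rate: {storable_rate_hz:.0f} Hz")
```

With these inputs the higher trigger levels have to reduce the data volume by about three orders of magnitude.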

Trigger/DAQ parameters

| Experiment | No. of levels | Trigger | Rate (Hz) | Event size (Byte) | Evt build. bandw. (GB/s) | HLT out MB/s (Event/s) |
|---|---|---|---|---|---|---|
| ATLAS | 3 | LV-1 | 10^5 | 1.5x10^6 | 4.5 | 300 (2x10^2) |
| | | LV-2 | 3x10^3 | | | |
| CMS | 2 | LV-1 | 10^5 | 10^6 | 100 | 100 (10^2) |
| LHCb | 2 | LV-0 | 10^6 | 3x10^4 | 30 | 60 (2x10^3) |
| ALICE | 4 | Pb-Pb | 500 | 5x10^7 | 25 | 1250 (10^2) |
| | | p-p | 10^3 | 2x10^6 | | 200 (10^2) |
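The event-building bandwidth column can be cross-checked as rate times event size. In the sketch below the experiment labels are an assumption: the extracted text has no row names, so they are inferred from the rates shown on the data flow slides, and "Pp-Pp" is read as Pb-Pb. For the 3-level row the Lvl-2 output rate is taken as the event builder input.

```python
import math

# (label, event builder input rate in Hz, event size in bytes, quoted bandwidth in GB/s)
# Labels are inferred, not present in the extracted table.
rows = [
    ("ATLAS (after LV-2)", 3e3, 1.5e6, 4.5),
    ("CMS (after LV-1)", 1e5, 1e6, 100.0),
    ("LHCb (after LV-0)", 1e6, 3e4, 30.0),
    ("ALICE Pb-Pb", 500.0, 5e7, 25.0),
]

def evb_bandwidth_gb_per_s(rate_hz: float, size_bytes: float) -> float:
    """Bandwidth the event builder must sustain, in GB/s."""
    return rate_hz * size_bytes / 1e9

# True for each row whose quoted bandwidth matches rate x size.
consistent = [
    math.isclose(evb_bandwidth_gb_per_s(rate, size), quoted)
    for _label, rate, size, quoted in rows
]

for (label, rate, size, quoted), ok in zip(rows, consistent):
    print(f"{label}: {evb_bandwidth_gb_per_s(rate, size):.1f} GB/s (quoted {quoted}) ok={ok}")
```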

Data Acquisition: main tasks

Our “Standard Model” of Data Flow:
• Data readout from Front End Electronics
• Temporary buffering of event fragments in readout buffers
• Provide higher level trigger (Lvl-2) with partial event data
• Assemble events in single location and provide to High Level Trigger (HLT)
• Write selected events to permanent storage

[Diagram labels: Lvl1 trigger, Lvl1 pipelines, Lvl-1, Lvl-2, HLT. Legend: custom hardware, PC, network switch]

Implementation of EVBs and HLTs today

Higher level triggers are implemented in software. Farms of PCs investigate event data in parallel.

Event builder and HLT farm resemble an entire “computer center”.

Data Flow: Architecture

Data Flow: ALICE

[Diagram: front end pipeline with Lvl-0,1,2 (88 µs latency); 500 Hz; readout link; readout buffer; HLT farm (HLT); 100 Hz; event builder; event buffer; 100 Hz. Legend: custom hardware, PC, network switch]

Data Flow: ATLAS

Region Of Interest (ROI): identified by Lvl1. Hint for Lvl2 to investigate further.

[Diagram: 40 MHz; front end pipeline with Lvl-1 (3 µs latency); 100 kHz; Regions Of Interest; readout link; 3 kHz; readout buffer; ROI Builder; event builder; Lvl2 farm (Lvl-2); HLT farm (HLT); 200 Hz. Legend: custom hardware, PC, network switch]

Data Flow: LHCb (original plan)

[Diagram: 10 MHz (40 MHz clock); front end pipeline with Lvl-1 (4 µs latency); 1 MHz; 40 kHz; readout buffer; readout link; readout/EVB network; Lvl1/HLT processing farm (Lvl-2, HLT); 200 Hz. Legend: custom hardware, PC, network switch]

Data Flow: LHCb (final design)

[Diagram: 10 MHz (40 MHz clock); front end pipeline with Lvl-1 (4 µs latency); 1 MHz; readout buffer; readout link; readout/EVB network; Lvl1/HLT processing farm (HLT); 2 kHz. Legend: custom hardware, PC, network switch]

Data Flow: CMS

[Diagram: 40 MHz; front end pipeline with Lvl-1 (3 µs latency); 100 kHz; readout link; readout buffer; event builder network; 100 kHz; HLT processing farm; 100 Hz. Legend: custom hardware, PC, network switch]

Data Flow: Readout Links

Data Flow: Data Readout

• Former times: Use of bus-systems
– VME or Fastbus
– Parallel data transfer (typical: 32 bit) on shared data bus
– One source at a time can use the bus
• LHC: Point to point links
– Optical or electrical
– Data serialized
– Custom or standard protocols
– All sources can send data simultaneously
• Compare trends in industry market:
– 198x: ISA, SCSI (1979), IDE, parallel port, VME (1982)
– 199x: PCI (1990, 66 MHz 1995), USB (1996), FireWire (1995)
– 200x: USB2, FireWire 800, PCI Express, InfiniBand, GbE, 10GbE

[Diagram labels: data sources, shared bus (bottleneck), buffer]
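Why the shared bus becomes the bottleneck can be seen in a toy throughput model. The sketch below is an idealised illustration, not a description of any real system: the source count, source rate, and bus and link capacities are made-up numbers, and arbitration and protocol overhead are ignored.

```python
def shared_bus_throughput(n_sources: int, source_rate: float, bus_capacity: float) -> float:
    """Only one source can use the bus at a time, so the total is capped by the bus."""
    return min(n_sources * source_rate, bus_capacity)

def point_to_point_throughput(n_sources: int, source_rate: float, link_capacity: float) -> float:
    """Every source has its own link, so the total grows with the number of sources."""
    return n_sources * min(source_rate, link_capacity)

# Hypothetical numbers (all in MB/s), chosen only for illustration.
n_sources = 16
source_rate = 50.0
bus_capacity = 80.0
link_capacity = 200.0

bus_total = shared_bus_throughput(n_sources, source_rate, bus_capacity)
links_total = point_to_point_throughput(n_sources, source_rate, link_capacity)

print(f"shared bus:     {bus_total:.0f} MB/s")
print(f"point to point: {links_total:.0f} MB/s")
```

In this model adding sources to the bus does not increase the total once the bus is saturated, while the point-to-point total scales linearly.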

Readout Links of LHC Experiments

| Experiment | Link | Technology | No. of links | Flow control | Notes |
|---|---|---|---|---|---|
| ATLAS | SLINK | Optical: 160 MB/s | ≈ 1600 | yes | Receiver card interfaces to PC. |
| CMS | SLINK 64 | LVDS: 400 MB/s (max. 15 m) | ≈ 500 | yes | FE on average: 200 MB/s to readout buffer. Receiver card interfaces to commercial NIC (Network Interface Card). |
| ALICE | DDL | Optical: 200 MB/s | ≈ 500 | yes | Half duplex: controls FE (commands, pedestals, calibration data). Receiver card interfaces to PC. |
| LHCb | TELL-1 & GbE Link | Copper quad GbE link | ≈ 400 | no | Protocol: IPv4 (direct connection to GbE switch). Forms “Multi Event Fragments”. Implements readout buffer. |

Readout Links: Interface to PC

Problem: Read data in PC with high bandwidth and low CPU load.
Note: copying data costs a lot of CPU time!

Solution: Buffer-Loaning
– Hardware shuffles data via DMA (Direct Memory Access) engines
– Software maintains tables of buffer-chains

Advantage:
– No CPU copy involved

Used for links of ATLAS, CMS, ALICE.
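The buffer-loaning idea can be sketched in software. This is a minimal illustration under stated assumptions, not the driver code of any experiment: `BufferPool` and `fake_dma_write` are hypothetical names, the "DMA engine" is simulated by an in-place write, and a simple free list stands in for the tables of buffer chains.

```python
from collections import deque

class BufferPool:
    """Pre-allocated buffers that are loaned to the hardware and later returned."""

    def __init__(self, n_buffers: int, buffer_size: int) -> None:
        self._buffers = [bytearray(buffer_size) for _ in range(n_buffers)]
        self._free = deque(range(n_buffers))  # table of buffers available for loan

    def loan(self) -> int:
        """Loan a free buffer; returns its index (raises IndexError if none is free)."""
        return self._free.popleft()

    def view(self, index: int, length: int) -> memoryview:
        """Zero-copy window onto the first `length` bytes of a buffer."""
        return memoryview(self._buffers[index])[:length]

    def give_back(self, index: int) -> None:
        """Return a buffer to the free table once the software is done with it."""
        self._free.append(index)

    def free_count(self) -> int:
        return len(self._free)

def fake_dma_write(pool: BufferPool, index: int, data: bytes) -> int:
    """Stand-in for a DMA engine: fills the loaned buffer in place."""
    pool.view(index, len(data))[:] = data
    return len(data)

pool = BufferPool(n_buffers=4, buffer_size=64)
idx = pool.loan()                            # software loans a buffer to the hardware
n = fake_dma_write(pool, idx, b"fragment-0001")
fragment = pool.view(idx, n)                 # software reads the data without copying it
payload = bytes(fragment)                    # copy made here only to keep a result for printing
free_while_loaned = pool.free_count()
fragment.release()
pool.give_back(idx)                          # buffer is available for the next fragment

print(payload, free_while_loaned, pool.free_count())
```

The point of the design is that only buffer indices change hands between hardware and software; the event data itself stays where the DMA engine put it.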

Example readout board: LHCb

Main board:
- data reception from “Front End” via optical or copper links
- detector specific processing

Readout Link:
- “highway to DAQ”
- simple interface to main board
- implemented as “plug on”

Event Building: example CMS

Data Flow: Atlas vs CMS

Readout Buffer
• Atlas: challenging
  – Concept of “Region Of Interest” (ROI)
  – Increased complexity: ROI generation (at Lvl1), ROI Builder (custom module), selective readout from buffers
• CMS: “commodity”
  – Implemented with commercial PCs

Event Builder
• Atlas: “commodity”
  – 1 kHz @ 1 MB = O(1) GB/s
• CMS: challenging
  – 100 kHz @ 1 MB = 100 GB/s
  – Increased complexity: traffic shaping, specialized (commercial) hardware

“Modern” EVB architecture

[Diagram labels: Front End; Trigger; Readout Link; Readout Buffer; EVB Control; Event builder network; Building Units; High Level Trigger Farm (some 1000 CPUs)]
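The roles of the boxes in this architecture can be illustrated with a small software model. It is a sketch under assumptions: the fragment format is invented, and the round-robin assignment of events to builder units is a stand-in for whatever policy the real EVB control uses.

```python
from collections import defaultdict

def build_events(readout_buffers: list[dict[int, bytes]], n_builder_units: int) -> dict[int, dict[int, bytes]]:
    """Assemble full events from per-source fragments.

    readout_buffers: one dict per data source, mapping event id -> fragment.
    Returns: builder unit index -> {event id -> assembled event}.
    """
    builder_units: dict[int, dict[int, bytes]] = defaultdict(dict)
    # Only events for which every source holds a fragment can be built.
    complete_ids = sorted(set.intersection(*(set(buf) for buf in readout_buffers)))
    for event_id in complete_ids:
        # "EVB control": pick the destination builder unit (round robin, an assumption).
        unit = event_id % n_builder_units
        # The builder unit collects one fragment from each readout buffer.
        builder_units[unit][event_id] = b"".join(buf[event_id] for buf in readout_buffers)
    return dict(builder_units)

# Three hypothetical sources, four events each.
buffers = [
    {evt: f"src{src}-evt{evt};".encode() for evt in range(4)}
    for src in range(3)
]
built = build_events(buffers, n_builder_units=2)

for unit, events in sorted(built.items()):
    print(unit, sorted(events))
```

Each assembled event ends up in exactly one builder unit, which can then hand it to the High Level Trigger farm.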

Intermezzo: Networking

• TCP/IP on Ethernet networks
– All data packets are surrounded by headers and a trailer

Ethernet:
- Addresses understood by hardware (NIC and switch)

IP:
- Unique addresses (world wide) known by DNS (you can search for www.google.com)

TCP:
- Provides programmer with an API.
- Establishes “connections” = logical communication channels (“socket programming”)
- Makes sure that your packet arrives: requires an acknowledge for every packet sent (retries after timeout)

[Diagram: packet layout with Ethernet header, IP header, TCP header, payload (an HTTP request from a browser), trailer]
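As a small illustration of the "socket programming" API mentioned for TCP, the sketch below opens a connection over the loopback interface and sends one message through it. It uses Python's standard `socket` module; the function name `send_over_tcp` and the payload are illustrative. Acknowledgements and retries happen inside the operating system's TCP stack and are not visible in the code.

```python
import socket

def send_over_tcp(payload: bytes) -> bytes:
    """Send payload through a TCP connection on the loopback interface and return what arrived."""
    # Port 0 asks the operating system to pick a free port.
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]
        with socket.create_connection(("127.0.0.1", port)) as client:
            conn, _addr = server.accept()       # the "connection" is now established
            with conn:
                client.sendall(payload)
                client.shutdown(socket.SHUT_WR)  # signal end of data to the receiver
                chunks = []
                while True:
                    chunk = conn.recv(4096)
                    if not chunk:                # empty read: sender has closed its side
                        break
                    chunks.append(chunk)
    return b"".join(chunks)

received = send_over_tcp(b"event fragment 42")
print(received)
```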

Intermezzo: Networking & Switches

[Diagram: hosts with addresses a1, a2, a3, a4, a5 connected to a Network Hub. Host a2 sends a packet (Dst: a4, Src: a2, Data); copies of the packet appear on the links to all hosts]

Packets are replicated to all hosts connected to Hub.

Intermezzo: Networking & Switches

[Diagram: hosts with addresses a1, a2, a3, a4, a5 connected to a Network Switch. Host a2 sends a packet (Dst: a4, Src: a2, Data); the packet appears only on the link to a4]

A switch “knows” the addresses of the hosts connected to its “ports”.
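The difference between the hub and the switch slides can be stated as two forwarding rules. The sketch below is a simplified model using the host addresses from the diagrams; the function names are illustrative, and the switch is assumed to have already learned which address sits on which port.

```python
def hub_forward(hosts: list[str], src: str, dst: str) -> list[str]:
    """A hub repeats the packet to every connected host except the sender."""
    return [h for h in hosts if h != src]

def switch_forward(hosts: list[str], src: str, dst: str) -> list[str]:
    """A switch that knows the destination's port delivers the packet there only.

    If the destination is unknown, it falls back to flooding like a hub.
    """
    if dst in hosts:
        return [dst]
    return [h for h in hosts if h != src]

hosts = ["a1", "a2", "a3", "a4", "a5"]
via_hub = hub_forward(hosts, src="a2", dst="a4")
via_switch = switch_forward(hosts, src="a2", dst="a4")

print("hub delivers to:   ", via_hub)
print("switch delivers to:", via_switch)
```

With a switch, links to hosts that are not the destination stay free for other traffic, which is what makes concurrent transfers between different host pairs possible.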

Networking: EVB traffic

[Diagram: Readout Buffers (N) connected through a Network Switch to Builder Units (M). For one Lvl1 event, every readout buffer sends its fragment to the same builder unit]

EVB traffic: all sources send to the same destination (almost) concurrently.

Result: congestion.
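The congestion on the destination port can be estimated with a simple fluid model. The sketch below is an idealisation with assumed inputs: all links run at the same speed, all sources start sending at the same instant, and the source count and fragment size are hypothetical. Only the 125 MB/s figure, the raw rate of a GbE link, is a standard value.

```python
def evb_burst(n_sources: int, fragment_bytes: float, link_bytes_per_s: float):
    """Model N sources sending one fragment each to a single builder unit at the same time.

    Returns (arrival_time_s, drain_time_s, peak_queue_bytes).
    """
    # Time each source needs to push its fragment into the switch.
    arrival_time = fragment_bytes / link_bytes_per_s
    # The single output port has to carry all N fragments one after another.
    drain_time = n_sources * fragment_bytes / link_bytes_per_s
    # While fragments arrive, N links fill the switch but only one empties it.
    peak_queue = (n_sources - 1) * fragment_bytes
    return arrival_time, drain_time, peak_queue

# Hypothetical numbers: 8 readout buffers, 2 kB fragments, GbE links.
arrival, drain, queue = evb_burst(n_sources=8, fragment_bytes=2000, link_bytes_per_s=125e6)

print(f"fragments arrive within {arrival * 1e6:.0f} µs")
print(f"output port busy for    {drain * 1e6:.0f} µs")
print(f"switch must buffer up to {queue} bytes")
```

If the switch cannot buffer that much per output port, packets are dropped or senders are stalled, which is the motivation for traffic shaping in the event builder.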
