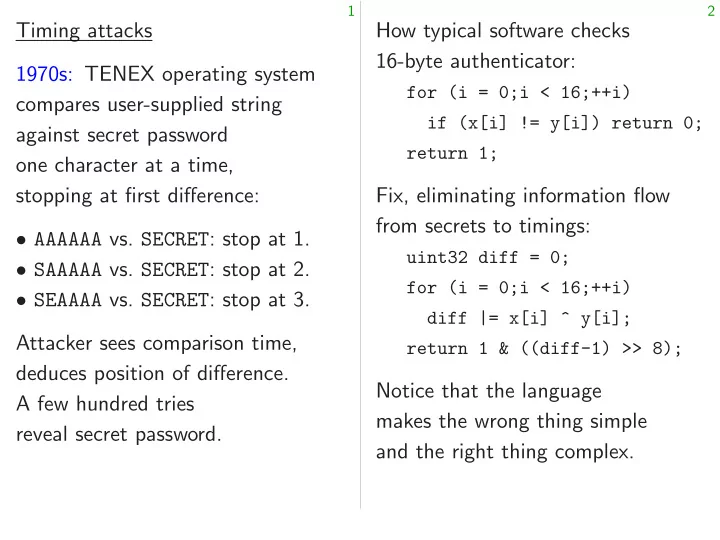

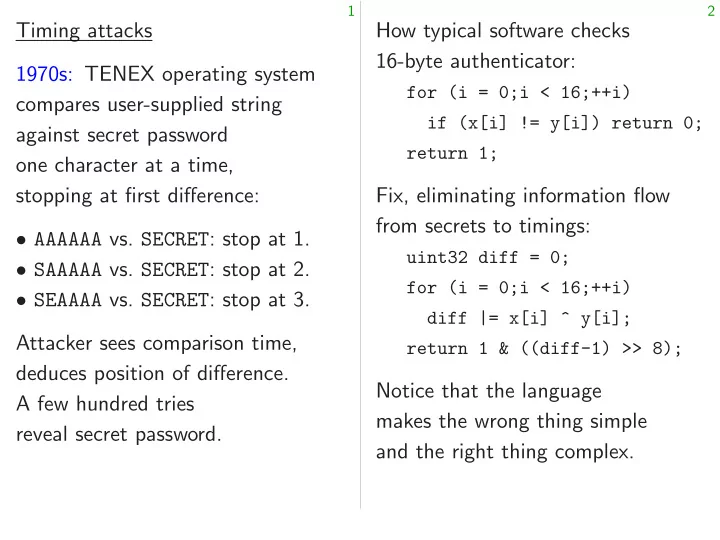

3 4 notion of Do timing attacks really work? Examples of successful attacks: security. 2005 Tromer–Osvik–Shamir: Objection: “Timings are noisy!” 65ms to steal Linux AES key happen. Answer #1: used for hard-disk encryption. examples, Does noise stop all attacks? 2013 AlFardan–Paterson “Lucky are for To guarantee security, defender Thirteen: breaking the TLS OC: must block all information flow. DTLS record protocols” steals Answer #2: Attacker uses plaintext using decryption timings. statistics to eliminate noise. 2014 van de Pol–Smart–Yarom CRYPTO_ABYTES;i++) Answer #3, what the steals Bitcoin key from timings + i]){ 1970s attackers actually did: of 25 OpenSSL signatures. RETURN_TAG_NO_MATCH; Cross page boundary, 2016 Yarom–Genkin–Heninger inducing page faults, “CacheBleed” steals RSA secret to amplify timing signal. key via timings of OpenSSL.

4 5 Do timing attacks really work? Examples of successful attacks: 2005 Tromer–Osvik–Shamir: Objection: “Timings are noisy!” 65ms to steal Linux AES key Answer #1: used for hard-disk encryption. Does noise stop all attacks? 2013 AlFardan–Paterson “Lucky To guarantee security, defender Thirteen: breaking the TLS and must block all information flow. DTLS record protocols” steals Answer #2: Attacker uses plaintext using decryption timings. statistics to eliminate noise. 2014 van de Pol–Smart–Yarom Answer #3, what the steals Bitcoin key from timings 1970s attackers actually did: of 25 OpenSSL signatures. Cross page boundary, 2016 Yarom–Genkin–Heninger inducing page faults, “CacheBleed” steals RSA secret to amplify timing signal. key via timings of OpenSSL.

4 5 timing attacks really work? Examples of successful attacks: Constant-time 2005 Tromer–Osvik–Shamir: Objection: “Timings are noisy!” ECDH com 65ms to steal Linux AES key where a er #1: used for hard-disk encryption. noise stop all attacks? Key gene 2013 AlFardan–Paterson “Lucky guarantee security, defender Signing: Thirteen: breaking the TLS and block all information flow. All of these DTLS record protocols” steals er #2: Attacker uses Does timing plaintext using decryption timings. statistics to eliminate noise. 2014 van de Pol–Smart–Yarom Are there er #3, what the steals Bitcoin key from timings ECC ops? attackers actually did: of 25 OpenSSL signatures. Do the underlying page boundary, take variable 2016 Yarom–Genkin–Heninger inducing page faults, “CacheBleed” steals RSA secret amplify timing signal. key via timings of OpenSSL.

4 5 attacks really work? Examples of successful attacks: Constant-time ECC 2005 Tromer–Osvik–Shamir: Timings are noisy!” ECDH computation: 65ms to steal Linux AES key where a is your secret used for hard-disk encryption. all attacks? Key generation: a 2013 AlFardan–Paterson “Lucky security, defender Signing: r �→ rB . Thirteen: breaking the TLS and information flow. All of these use secret DTLS record protocols” steals ttacker uses Does timing leak this plaintext using decryption timings. eliminate noise. 2014 van de Pol–Smart–Yarom Are there any branches what the steals Bitcoin key from timings ECC ops? Point ops? actually did: of 25 OpenSSL signatures. Do the underlying oundary, take variable time? 2016 Yarom–Genkin–Heninger aults, “CacheBleed” steals RSA secret timing signal. key via timings of OpenSSL.

4 5 ork? Examples of successful attacks: Constant-time ECC 2005 Tromer–Osvik–Shamir: noisy!” ECDH computation: a; P �→ 65ms to steal Linux AES key where a is your secret key . used for hard-disk encryption. attacks? Key generation: a �→ aB . 2013 AlFardan–Paterson “Lucky defender Signing: r �→ rB . Thirteen: breaking the TLS and flow. All of these use secret data. DTLS record protocols” steals Does timing leak this data? plaintext using decryption timings. noise. 2014 van de Pol–Smart–Yarom Are there any branches in steals Bitcoin key from timings ECC ops? Point ops? Field did: of 25 OpenSSL signatures. Do the underlying machine insns take variable time? 2016 Yarom–Genkin–Heninger “CacheBleed” steals RSA secret key via timings of OpenSSL.

5 6 Examples of successful attacks: Constant-time ECC 2005 Tromer–Osvik–Shamir: ECDH computation: a; P �→ aP 65ms to steal Linux AES key where a is your secret key . used for hard-disk encryption. Key generation: a �→ aB . 2013 AlFardan–Paterson “Lucky Signing: r �→ rB . Thirteen: breaking the TLS and All of these use secret data. DTLS record protocols” steals Does timing leak this data? plaintext using decryption timings. 2014 van de Pol–Smart–Yarom Are there any branches in steals Bitcoin key from timings ECC ops? Point ops? Field ops? of 25 OpenSSL signatures. Do the underlying machine insns take variable time? 2016 Yarom–Genkin–Heninger “CacheBleed” steals RSA secret key via timings of OpenSSL.

5 6 Examples of successful attacks: Constant-time ECC Recall left-to-right to compute romer–Osvik–Shamir: ECDH computation: a; P �→ aP using point to steal Linux AES key where a is your secret key . for hard-disk encryption. def scalarmult(n,P): Key generation: a �→ aB . if n == AlFardan–Paterson “Lucky Signing: r �→ rB . if n == Thirteen: breaking the TLS and R = scalarmult(n//2,P) All of these use secret data. record protocols” steals R = R Does timing leak this data? plaintext using decryption timings. if n % van de Pol–Smart–Yarom Are there any branches in return Bitcoin key from timings ECC ops? Point ops? Field ops? Many branches OpenSSL signatures. Do the underlying machine insns NAF etc. take variable time? arom–Genkin–Heninger “CacheBleed” steals RSA secret timings of OpenSSL.

5 6 successful attacks: Constant-time ECC Recall left-to-right to compute n; P �→ romer–Osvik–Shamir: ECDH computation: a; P �→ aP using point addition: Linux AES key where a is your secret key . rd-disk encryption. def scalarmult(n,P): Key generation: a �→ aB . if n == 0: return rdan–Paterson “Lucky Signing: r �→ rB . if n == 1: return reaking the TLS and R = scalarmult(n//2,P) All of these use secret data. rotocols” steals R = R + R Does timing leak this data? decryption timings. if n % 2: R = R ol–Smart–Yarom Are there any branches in return R ey from timings ECC ops? Point ops? Field ops? Many branches here. signatures. Do the underlying machine insns NAF etc. also use take variable time? rom–Genkin–Heninger steals RSA secret of OpenSSL.

5 6 attacks: Constant-time ECC Recall left-to-right binary metho to compute n; P �→ nP romer–Osvik–Shamir: ECDH computation: a; P �→ aP using point addition: ey where a is your secret key . encryption. def scalarmult(n,P): Key generation: a �→ aB . if n == 0: return 0 “Lucky Signing: r �→ rB . if n == 1: return P TLS and R = scalarmult(n//2,P) All of these use secret data. steals R = R + R Does timing leak this data? timings. if n % 2: R = R + P arom Are there any branches in return R timings ECC ops? Point ops? Field ops? Many branches here. s. Do the underlying machine insns NAF etc. also use many branches. take variable time? rom–Genkin–Heninger secret enSSL.

6 7 Constant-time ECC Recall left-to-right binary method to compute n; P �→ nP ECDH computation: a; P �→ aP using point addition: where a is your secret key . def scalarmult(n,P): Key generation: a �→ aB . if n == 0: return 0 Signing: r �→ rB . if n == 1: return P R = scalarmult(n//2,P) All of these use secret data. R = R + R Does timing leak this data? if n % 2: R = R + P Are there any branches in return R ECC ops? Point ops? Field ops? Many branches here. Do the underlying machine insns NAF etc. also use many branches. take variable time?

6 7 Constant-time ECC Recall left-to-right binary method Even if each to compute n; P �→ nP takes the computation: a; P �→ aP using point addition: (certainly a is your secret key . total time def scalarmult(n,P): generation: a �→ aB . If 2 e − 1 ≤ if n == 0: return 0 Signing: r �→ rB . n has exactly if n == 1: return P number R = scalarmult(n//2,P) these use secret data. R = R + R timing leak this data? Particula if n % 2: R = R + P usually indicates there any branches in return R “Lattice ops? Point ops? Field ops? Many branches here. compute the underlying machine insns NAF etc. also use many branches. positions variable time?

6 7 ECC Recall left-to-right binary method Even if each point to compute n; P �→ nP takes the same amount utation: a; P �→ aP using point addition: (certainly not true secret key . total time depends def scalarmult(n,P): a �→ aB . If 2 e − 1 ≤ n < 2 e and if n == 0: return 0 . n has exactly w bits if n == 1: return P number of additions R = scalarmult(n//2,P) secret data. R = R + R this data? Particularly fast total if n % 2: R = R + P usually indicates very ranches in return R “Lattice attacks” on ops? Field ops? Many branches here. compute the secret underlying machine insns NAF etc. also use many branches. positions of very small time?

6 7 Recall left-to-right binary method Even if each point addition to compute n; P �→ nP takes the same amount of time �→ aP using point addition: (certainly not true in Python), . total time depends on n . def scalarmult(n,P): If 2 e − 1 ≤ n < 2 e and if n == 0: return 0 n has exactly w bits set: if n == 1: return P number of additions is e + w R = scalarmult(n//2,P) data. R = R + R data? Particularly fast total time if n % 2: R = R + P usually indicates very small n return R “Lattice attacks” on signatures Field ops? Many branches here. compute the secret key given machine insns NAF etc. also use many branches. positions of very small nonces

7 8 Recall left-to-right binary method Even if each point addition to compute n; P �→ nP takes the same amount of time using point addition: (certainly not true in Python), total time depends on n . def scalarmult(n,P): If 2 e − 1 ≤ n < 2 e and if n == 0: return 0 n has exactly w bits set: if n == 1: return P number of additions is e + w − 2. R = scalarmult(n//2,P) R = R + R Particularly fast total time if n % 2: R = R + P usually indicates very small n . return R “Lattice attacks” on signatures Many branches here. compute the secret key given NAF etc. also use many branches. positions of very small nonces r .

7 8 left-to-right binary method Even if each point addition Even worse: compute n; P �→ nP takes the same amount of time CPUs do point addition: (certainly not true in Python), metadata total time depends on n . Actual time scalarmult(n,P): If 2 e − 1 ≤ n < 2 e and affects, and == 0: return 0 n has exactly w bits set: detailed == 1: return P number of additions is e + w − 2. branch p scalarmult(n//2,P) + R Particularly fast total time Attacker % 2: R = R + P usually indicates very small n . often sees return R “Lattice attacks” on signatures Exploited branches here. compute the secret key given etc. also use many branches. positions of very small nonces r .

7 8 left-to-right binary method Even if each point addition Even worse: �→ nP takes the same amount of time CPUs do not try to addition: (certainly not true in Python), metadata regarding total time depends on n . Actual time for a b scalarmult(n,P): If 2 e − 1 ≤ n < 2 e and affects, and is affected return 0 n has exactly w bits set: detailed state of co return P number of additions is e + w − 2. branch predictor, etc. scalarmult(n//2,P) Particularly fast total time Attacker interacts R + P usually indicates very small n . often sees pattern “Lattice attacks” on signatures Exploited in, e.g., here. compute the secret key given use many branches. positions of very small nonces r .

7 8 method Even if each point addition Even worse: takes the same amount of time CPUs do not try to protect (certainly not true in Python), metadata regarding branches. total time depends on n . Actual time for a branch If 2 e − 1 ≤ n < 2 e and affects, and is affected by, n has exactly w bits set: detailed state of code cache, number of additions is e + w − 2. branch predictor, etc. Particularly fast total time Attacker interacts with this state, usually indicates very small n . often sees pattern of branches. “Lattice attacks” on signatures Exploited in, e.g., Bitcoin attack. compute the secret key given ranches. positions of very small nonces r .

8 9 Even if each point addition Even worse: takes the same amount of time CPUs do not try to protect (certainly not true in Python), metadata regarding branches. total time depends on n . Actual time for a branch If 2 e − 1 ≤ n < 2 e and affects, and is affected by, n has exactly w bits set: detailed state of code cache, number of additions is e + w − 2. branch predictor, etc. Particularly fast total time Attacker interacts with this state, usually indicates very small n . often sees pattern of branches. “Lattice attacks” on signatures Exploited in, e.g., Bitcoin attack. compute the secret key given positions of very small nonces r .

8 9 Even if each point addition Even worse: takes the same amount of time CPUs do not try to protect (certainly not true in Python), metadata regarding branches. total time depends on n . Actual time for a branch If 2 e − 1 ≤ n < 2 e and affects, and is affected by, n has exactly w bits set: detailed state of code cache, number of additions is e + w − 2. branch predictor, etc. Particularly fast total time Attacker interacts with this state, usually indicates very small n . often sees pattern of branches. “Lattice attacks” on signatures Exploited in, e.g., Bitcoin attack. compute the secret key given Confidence-inspiring solution: positions of very small nonces r . Avoid all data flow from secrets to branch conditions.

8 9 if each point addition Even worse: Double-and-add-alw the same amount of time CPUs do not try to protect Eliminate (certainly not true in Python), metadata regarding branches. always computing time depends on n . Actual time for a branch def scalarmult(n,b,P): ≤ n < 2 e and affects, and is affected by, if b == exactly w bits set: detailed state of code cache, R = scalarmult(n//2,b-1,P) er of additions is e + w − 2. branch predictor, etc. R2 = R rticularly fast total time Attacker interacts with this state, S = [R2,R2 indicates very small n . often sees pattern of branches. return “Lattice attacks” on signatures Exploited in, e.g., Bitcoin attack. Works fo compute the secret key given Confidence-inspiring solution: Always tak ositions of very small nonces r . (including Avoid all data flow from Use public secrets to branch conditions.

8 9 oint addition Even worse: Double-and-add-alw amount of time CPUs do not try to protect Eliminate branches true in Python), metadata regarding branches. always computing ds on n . Actual time for a branch def scalarmult(n,b,P): and affects, and is affected by, if b == 0: return bits set: detailed state of code cache, R = scalarmult(n//2,b-1,P) additions is e + w − 2. branch predictor, etc. R2 = R + R total time Attacker interacts with this state, S = [R2,R2 + P] very small n . often sees pattern of branches. return S[n % 2] attacks” on signatures Exploited in, e.g., Bitcoin attack. Works for 0 ≤ n < secret key given Confidence-inspiring solution: Always takes 2 b additions small nonces r . (including b doublings). Avoid all data flow from Use public b : bits secrets to branch conditions.

8 9 addition Even worse: Double-and-add-always time CPUs do not try to protect Eliminate branches by Python), metadata regarding branches. always computing both results: Actual time for a branch def scalarmult(n,b,P): affects, and is affected by, if b == 0: return 0 detailed state of code cache, R = scalarmult(n//2,b-1,P) w − 2. branch predictor, etc. R2 = R + R Attacker interacts with this state, S = [R2,R2 + P] small n . often sees pattern of branches. return S[n % 2] signatures Exploited in, e.g., Bitcoin attack. Works for 0 ≤ n < 2 b . given Confidence-inspiring solution: Always takes 2 b additions nonces r . (including b doublings). Avoid all data flow from Use public b : bits allowed in secrets to branch conditions.

9 10 Even worse: Double-and-add-always CPUs do not try to protect Eliminate branches by metadata regarding branches. always computing both results: Actual time for a branch def scalarmult(n,b,P): affects, and is affected by, if b == 0: return 0 detailed state of code cache, R = scalarmult(n//2,b-1,P) branch predictor, etc. R2 = R + R Attacker interacts with this state, S = [R2,R2 + P] often sees pattern of branches. return S[n % 2] Exploited in, e.g., Bitcoin attack. Works for 0 ≤ n < 2 b . Confidence-inspiring solution: Always takes 2 b additions (including b doublings). Avoid all data flow from Use public b : bits allowed in n . secrets to branch conditions.

9 10 orse: Double-and-add-always Another do not try to protect CPUs do Eliminate branches by metadata regarding branches. metadata always computing both results: Actual time for a branch Actual time def scalarmult(n,b,P): affects, and is affected by, affects, and if b == 0: return 0 detailed state of code cache, detailed R = scalarmult(n//2,b-1,P) predictor, etc. store-to-load R2 = R + R er interacts with this state, Exploited S = [R2,R2 + P] sees pattern of branches. despite Intel return S[n % 2] Exploited in, e.g., Bitcoin attack. claiming Works for 0 ≤ n < 2 b . Confidence-inspiring solution: Always takes 2 b additions (including b doublings). all data flow from Use public b : bits allowed in n . secrets to branch conditions.

9 10 Double-and-add-always Another big problem: to protect CPUs do not try to Eliminate branches by ding branches. metadata regarding always computing both results: a branch Actual time for x[i] def scalarmult(n,b,P): affected by, affects, and is affected if b == 0: return 0 code cache, detailed state of data R = scalarmult(n//2,b-1,P) r, etc. store-to-load forwa R2 = R + R interacts with this state, Exploited in, e.g., S = [R2,R2 + P] pattern of branches. despite Intel and Op return S[n % 2] e.g., Bitcoin attack. claiming their code Works for 0 ≤ n < 2 b . Confidence-inspiring solution: Always takes 2 b additions (including b doublings). flow from Use public b : bits allowed in n . ranch conditions.

9 10 Double-and-add-always Another big problem: rotect CPUs do not try to protect Eliminate branches by ranches. metadata regarding array indices always computing both results: Actual time for x[i] def scalarmult(n,b,P): affects, and is affected by, if b == 0: return 0 cache, detailed state of data cache, R = scalarmult(n//2,b-1,P) store-to-load forwarder, etc. R2 = R + R this state, Exploited in, e.g., CacheBleed, S = [R2,R2 + P] ranches. despite Intel and OpenSSL return S[n % 2] attack. claiming their code was safe. Works for 0 ≤ n < 2 b . solution: Always takes 2 b additions (including b doublings). Use public b : bits allowed in n . conditions.

10 11 Double-and-add-always Another big problem: CPUs do not try to protect Eliminate branches by metadata regarding array indices . always computing both results: Actual time for x[i] def scalarmult(n,b,P): affects, and is affected by, if b == 0: return 0 detailed state of data cache, R = scalarmult(n//2,b-1,P) store-to-load forwarder, etc. R2 = R + R Exploited in, e.g., CacheBleed, S = [R2,R2 + P] despite Intel and OpenSSL return S[n % 2] claiming their code was safe. Works for 0 ≤ n < 2 b . Always takes 2 b additions (including b doublings). Use public b : bits allowed in n .

10 11 Double-and-add-always Another big problem: CPUs do not try to protect Eliminate branches by metadata regarding array indices . always computing both results: Actual time for x[i] def scalarmult(n,b,P): affects, and is affected by, if b == 0: return 0 detailed state of data cache, R = scalarmult(n//2,b-1,P) store-to-load forwarder, etc. R2 = R + R Exploited in, e.g., CacheBleed, S = [R2,R2 + P] despite Intel and OpenSSL return S[n % 2] claiming their code was safe. Works for 0 ≤ n < 2 b . Always takes 2 b additions Confidence-inspiring solution: (including b doublings). Avoid all data flow from Use public b : bits allowed in n . secrets to memory addresses.

10 11 Double-and-add-always Another big problem: Table lookups CPUs do not try to protect Eliminate branches by Always read metadata regarding array indices . computing both results: Use bit op Actual time for x[i] the desired scalarmult(n,b,P): affects, and is affected by, == 0: return 0 def scalarmult(n,b,P): detailed state of data cache, scalarmult(n//2,b-1,P) if b == store-to-load forwarder, etc. R + R R = scalarmult(n//2,b-1,P) Exploited in, e.g., CacheBleed, [R2,R2 + P] R2 = R despite Intel and OpenSSL return S[n % 2] S = [R2,R2 claiming their code was safe. mask = for 0 ≤ n < 2 b . return ys takes 2 b additions Confidence-inspiring solution: (including b doublings). Avoid all data flow from public b : bits allowed in n . secrets to memory addresses.

10 11 Double-and-add-always Another big problem: Table lookups via a CPUs do not try to protect nches by Always read all table metadata regarding array indices . computing both results: Use bit operations Actual time for x[i] the desired table entry: scalarmult(n,b,P): affects, and is affected by, return 0 def scalarmult(n,b,P): detailed state of data cache, scalarmult(n//2,b-1,P) if b == 0: return store-to-load forwarder, etc. R = scalarmult(n//2,b-1,P) Exploited in, e.g., CacheBleed, P] R2 = R + R despite Intel and OpenSSL 2] S = [R2,R2 + P] claiming their code was safe. mask = -(n % 2) < 2 b . return S[0]^(mask&(S[1]^S[0])) additions Confidence-inspiring solution: doublings). Avoid all data flow from bits allowed in n . secrets to memory addresses.

10 11 Another big problem: Table lookups via arithmetic CPUs do not try to protect Always read all table entries. metadata regarding array indices . results: Use bit operations to select Actual time for x[i] the desired table entry: affects, and is affected by, def scalarmult(n,b,P): detailed state of data cache, scalarmult(n//2,b-1,P) if b == 0: return 0 store-to-load forwarder, etc. R = scalarmult(n//2,b-1,P) Exploited in, e.g., CacheBleed, R2 = R + R despite Intel and OpenSSL S = [R2,R2 + P] claiming their code was safe. mask = -(n % 2) return S[0]^(mask&(S[1]^S[0])) Confidence-inspiring solution: Avoid all data flow from in n . secrets to memory addresses.

11 12 Another big problem: Table lookups via arithmetic CPUs do not try to protect Always read all table entries. metadata regarding array indices . Use bit operations to select Actual time for x[i] the desired table entry: affects, and is affected by, def scalarmult(n,b,P): detailed state of data cache, if b == 0: return 0 store-to-load forwarder, etc. R = scalarmult(n//2,b-1,P) Exploited in, e.g., CacheBleed, R2 = R + R despite Intel and OpenSSL S = [R2,R2 + P] claiming their code was safe. mask = -(n % 2) return S[0]^(mask&(S[1]^S[0])) Confidence-inspiring solution: Avoid all data flow from secrets to memory addresses.

11 12 Another big problem: Table lookups via arithmetic Width-2 do not try to protect Always read all table entries. def fixwin2(n,b,table): metadata regarding array indices . Use bit operations to select if b <= Actual time for x[i] the desired table entry: T = table[0] affects, and is affected by, mask = def scalarmult(n,b,P): detailed state of data cache, T ^= ~mask if b == 0: return 0 re-to-load forwarder, etc. mask = R = scalarmult(n//2,b-1,P) T ^= ~mask Exploited in, e.g., CacheBleed, R2 = R + R mask = despite Intel and OpenSSL S = [R2,R2 + P] T ^= ~mask claiming their code was safe. mask = -(n % 2) R = fixwin2(n//4,b-2,table) return S[0]^(mask&(S[1]^S[0])) Confidence-inspiring solution: R = R all data flow from R = R secrets to memory addresses. return

11 12 roblem: Table lookups via arithmetic Width-2 unsigned to protect Always read all table entries. def fixwin2(n,b,table): ding array indices . Use bit operations to select if b <= 0: return the desired table entry: x[i] T = table[0] affected by, mask = (-(1 ^ (n def scalarmult(n,b,P): data cache, T ^= ~mask & (T^table[1]) if b == 0: return 0 rwarder, etc. mask = (-(2 ^ (n R = scalarmult(n//2,b-1,P) T ^= ~mask & (T^table[2]) e.g., CacheBleed, R2 = R + R mask = (-(3 ^ (n OpenSSL S = [R2,R2 + P] T ^= ~mask & (T^table[3]) de was safe. mask = -(n % 2) R = fixwin2(n//4,b-2,table) return S[0]^(mask&(S[1]^S[0])) Confidence-inspiring solution: R = R + R flow from R = R + R memory addresses. return R + T

11 12 Table lookups via arithmetic Width-2 unsigned fixed windo rotect Always read all table entries. def fixwin2(n,b,table): indices . Use bit operations to select if b <= 0: return 0 the desired table entry: T = table[0] mask = (-(1 ^ (n % 4))) def scalarmult(n,b,P): cache, T ^= ~mask & (T^table[1]) if b == 0: return 0 tc. mask = (-(2 ^ (n % 4))) R = scalarmult(n//2,b-1,P) T ^= ~mask & (T^table[2]) CacheBleed, R2 = R + R mask = (-(3 ^ (n % 4))) S = [R2,R2 + P] T ^= ~mask & (T^table[3]) safe. mask = -(n % 2) R = fixwin2(n//4,b-2,table) return S[0]^(mask&(S[1]^S[0])) solution: R = R + R R = R + R addresses. return R + T

12 13 Table lookups via arithmetic Width-2 unsigned fixed windows Always read all table entries. def fixwin2(n,b,table): Use bit operations to select if b <= 0: return 0 the desired table entry: T = table[0] mask = (-(1 ^ (n % 4))) >> 2 def scalarmult(n,b,P): T ^= ~mask & (T^table[1]) if b == 0: return 0 mask = (-(2 ^ (n % 4))) >> 2 R = scalarmult(n//2,b-1,P) T ^= ~mask & (T^table[2]) R2 = R + R mask = (-(3 ^ (n % 4))) >> 2 S = [R2,R2 + P] T ^= ~mask & (T^table[3]) mask = -(n % 2) R = fixwin2(n//4,b-2,table) return S[0]^(mask&(S[1]^S[0])) R = R + R R = R + R return R + T

12 13 lookups via arithmetic Width-2 unsigned fixed windows def scalarmult(n,b,P): P2 = P+P ys read all table entries. def fixwin2(n,b,table): table bit operations to select if b <= 0: return 0 return desired table entry: T = table[0] Public branches, mask = (-(1 ^ (n % 4))) >> 2 scalarmult(n,b,P): T ^= ~mask & (T^table[1]) For b ∈ 2 == 0: return 0 mask = (-(2 ^ (n % 4))) >> 2 Always b scalarmult(n//2,b-1,P) T ^= ~mask & (T^table[2]) Always b R + R mask = (-(3 ^ (n % 4))) >> 2 Always 2 [R2,R2 + P] T ^= ~mask & (T^table[3]) = -(n % 2) Can simila R = fixwin2(n//4,b-2,table) return S[0]^(mask&(S[1]^S[0])) larger-width R = R + R Unsigned R = R + R Signed is return R + T

12 13 via arithmetic Width-2 unsigned fixed windows def scalarmult(n,b,P): P2 = P+P table entries. def fixwin2(n,b,table): table = [0,P,P2,P2+P] erations to select if b <= 0: return 0 return fixwin2(n,b,table) entry: T = table[0] Public branches, public mask = (-(1 ^ (n % 4))) >> 2 scalarmult(n,b,P): T ^= ~mask & (T^table[1]) For b ∈ 2 Z : return 0 mask = (-(2 ^ (n % 4))) >> 2 Always b doublings. scalarmult(n//2,b-1,P) T ^= ~mask & (T^table[2]) Always b= 2 additions mask = (-(3 ^ (n % 4))) >> 2 Always 2 additions P] T ^= ~mask & (T^table[3]) 2) Can similarly protect R = fixwin2(n//4,b-2,table) S[0]^(mask&(S[1]^S[0])) larger-width fixed windo R = R + R Unsigned is slightly R = R + R Signed is slightly faster. return R + T

12 13 rithmetic Width-2 unsigned fixed windows def scalarmult(n,b,P): P2 = P+P entries. def fixwin2(n,b,table): table = [0,P,P2,P2+P] select if b <= 0: return 0 return fixwin2(n,b,table) T = table[0] Public branches, public indices. mask = (-(1 ^ (n % 4))) >> 2 T ^= ~mask & (T^table[1]) For b ∈ 2 Z : mask = (-(2 ^ (n % 4))) >> 2 Always b doublings. scalarmult(n//2,b-1,P) T ^= ~mask & (T^table[2]) Always b= 2 additions of T . mask = (-(3 ^ (n % 4))) >> 2 Always 2 additions for table. T ^= ~mask & (T^table[3]) Can similarly protect R = fixwin2(n//4,b-2,table) S[0]^(mask&(S[1]^S[0])) larger-width fixed windows. R = R + R Unsigned is slightly easier. R = R + R Signed is slightly faster. return R + T

13 14 Width-2 unsigned fixed windows def scalarmult(n,b,P): P2 = P+P def fixwin2(n,b,table): table = [0,P,P2,P2+P] if b <= 0: return 0 return fixwin2(n,b,table) T = table[0] Public branches, public indices. mask = (-(1 ^ (n % 4))) >> 2 T ^= ~mask & (T^table[1]) For b ∈ 2 Z : mask = (-(2 ^ (n % 4))) >> 2 Always b doublings. T ^= ~mask & (T^table[2]) Always b= 2 additions of T . mask = (-(3 ^ (n % 4))) >> 2 Always 2 additions for table. T ^= ~mask & (T^table[3]) Can similarly protect R = fixwin2(n//4,b-2,table) larger-width fixed windows. R = R + R Unsigned is slightly easier. R = R + R Signed is slightly faster. return R + T

13 14 Width-2 unsigned fixed windows Fixed-base def scalarmult(n,b,P): P2 = P+P Obvious fixwin2(n,b,table): table = [0,P,P2,P2+P] a �→ aB <= 0: return 0 return fixwin2(n,b,table) reuse n; P table[0] Public branches, public indices. = (-(1 ^ (n % 4))) >> 2 ~mask & (T^table[1]) For b ∈ 2 Z : = (-(2 ^ (n % 4))) >> 2 Always b doublings. ~mask & (T^table[2]) Always b= 2 additions of T . = (-(3 ^ (n % 4))) >> 2 Always 2 additions for table. ~mask & (T^table[3]) Can similarly protect fixwin2(n//4,b-2,table) larger-width fixed windows. + R Unsigned is slightly easier. + R Signed is slightly faster. return R + T

13 14 unsigned fixed windows Fixed-base scalar multiplication def scalarmult(n,b,P): P2 = P+P Obvious way to handle fixwin2(n,b,table): table = [0,P,P2,P2+P] a �→ aB and signing return 0 return fixwin2(n,b,table) reuse n; P �→ nP from Public branches, public indices. (n % 4))) >> 2 (T^table[1]) For b ∈ 2 Z : (n % 4))) >> 2 Always b doublings. (T^table[2]) Always b= 2 additions of T . (n % 4))) >> 2 Always 2 additions for table. (T^table[3]) Can similarly protect fixwin2(n//4,b-2,table) larger-width fixed windows. Unsigned is slightly easier. Signed is slightly faster.

13 14 windows Fixed-base scalar multiplication def scalarmult(n,b,P): P2 = P+P Obvious way to handle keygen table = [0,P,P2,P2+P] a �→ aB and signing r �→ rB return fixwin2(n,b,table) reuse n; P �→ nP from ECDH. Public branches, public indices. 4))) >> 2 (T^table[1]) For b ∈ 2 Z : 4))) >> 2 Always b doublings. (T^table[2]) Always b= 2 additions of T . 4))) >> 2 Always 2 additions for table. (T^table[3]) Can similarly protect fixwin2(n//4,b-2,table) larger-width fixed windows. Unsigned is slightly easier. Signed is slightly faster.

14 15 Fixed-base scalar multiplication def scalarmult(n,b,P): P2 = P+P Obvious way to handle keygen table = [0,P,P2,P2+P] a �→ aB and signing r �→ rB : return fixwin2(n,b,table) reuse n; P �→ nP from ECDH. Public branches, public indices. For b ∈ 2 Z : Always b doublings. Always b= 2 additions of T . Always 2 additions for table. Can similarly protect larger-width fixed windows. Unsigned is slightly easier. Signed is slightly faster.

14 15 Fixed-base scalar multiplication def scalarmult(n,b,P): P2 = P+P Obvious way to handle keygen table = [0,P,P2,P2+P] a �→ aB and signing r �→ rB : return fixwin2(n,b,table) reuse n; P �→ nP from ECDH. Public branches, public indices. Can do much better since B is For b ∈ 2 Z : a constant: standard base point. Always b doublings. e.g. For b = 256: Compute Always b= 2 additions of T . (2 128 n 1 + n 0 ) B as n 1 B 1 + n 0 B Always 2 additions for table. using double-scalar fixed windows, after precomputing B 1 = 2 128 B . Can similarly protect larger-width fixed windows. Fun exercise: For each k , try to Unsigned is slightly easier. minimize number of additions Signed is slightly faster. using k precomputed points.

14 15 Fixed-base scalar multiplication Recall Chou scalarmult(n,b,P): 57164 cycles P+P Obvious way to handle keygen 63526 cycles = [0,P,P2,P2+P] a �→ aB and signing r �→ rB : 205741 cycles return fixwin2(n,b,table) reuse n; P �→ nP from ECDH. 159128 cycles branches, public indices. Can do much better since B is ECDH is ∈ 2 Z : a constant: standard base point. ys b doublings. Verification e.g. For b = 256: Compute ys b= 2 additions of T . somewhat (2 128 n 1 + n 0 ) B as n 1 B 1 + n 0 B ys 2 additions for table. (But batch using double-scalar fixed windows, after precomputing B 1 = 2 128 B . similarly protect Keygen is rger-width fixed windows. much faster Fun exercise: For each k , try to Unsigned is slightly easier. minimize number of additions Signing is is slightly faster. using k precomputed points. depending

14 15 Fixed-base scalar multiplication Recall Chou timings: scalarmult(n,b,P): 57164 cycles for ke Obvious way to handle keygen 63526 cycles for signature, [0,P,P2,P2+P] a �→ aB and signing r �→ rB : 205741 cycles for verification, fixwin2(n,b,table) reuse n; P �→ nP from ECDH. 159128 cycles for ECDH. public indices. Can do much better since B is ECDH is single-scala a constant: standard base point. doublings. Verification is double-scala e.g. For b = 256: Compute additions of T . somewhat slower than (2 128 n 1 + n 0 ) B as n 1 B 1 + n 0 B additions for table. (But batch verification using double-scalar fixed windows, after precomputing B 1 = 2 128 B . rotect Keygen is fixed-base fixed windows. much faster than ECDH. Fun exercise: For each k , try to slightly easier. minimize number of additions Signing is keygen plus faster. using k precomputed points. depending on message

14 15 Fixed-base scalar multiplication Recall Chou timings: 57164 cycles for keygen, Obvious way to handle keygen 63526 cycles for signature, a �→ aB and signing r �→ rB : 205741 cycles for verification, fixwin2(n,b,table) reuse n; P �→ nP from ECDH. 159128 cycles for ECDH. indices. Can do much better since B is ECDH is single-scalar mult. a constant: standard base point. Verification is double-scalar mult, e.g. For b = 256: Compute . somewhat slower than ECDH. (2 128 n 1 + n 0 ) B as n 1 B 1 + n 0 B table. (But batch verification is faster.) using double-scalar fixed windows, after precomputing B 1 = 2 128 B . Keygen is fixed-base scalar mult, ws. much faster than ECDH. Fun exercise: For each k , try to minimize number of additions Signing is keygen plus overhead using k precomputed points. depending on message length.

15 16 Fixed-base scalar multiplication Recall Chou timings: 57164 cycles for keygen, Obvious way to handle keygen 63526 cycles for signature, a �→ aB and signing r �→ rB : 205741 cycles for verification, reuse n; P �→ nP from ECDH. 159128 cycles for ECDH. Can do much better since B is ECDH is single-scalar mult. a constant: standard base point. Verification is double-scalar mult, e.g. For b = 256: Compute somewhat slower than ECDH. (2 128 n 1 + n 0 ) B as n 1 B 1 + n 0 B (But batch verification is faster.) using double-scalar fixed windows, after precomputing B 1 = 2 128 B . Keygen is fixed-base scalar mult, much faster than ECDH. Fun exercise: For each k , try to minimize number of additions Signing is keygen plus overhead using k precomputed points. depending on message length.

15 16 Fixed-base scalar multiplication Recall Chou timings: Let’s move 57164 cycles for keygen, ECC Obvious way to handle keygen 63526 cycles for signature, verify S B and signing r �→ rB : 205741 cycles for verification, n; P �→ nP from ECDH. 159128 cycles for ECDH. Point do much better since B is P; Q ECDH is single-scalar mult. constant: standard base point. Verification is double-scalar mult, r b = 256: Compute Field somewhat slower than ECDH. x 1 ; x 2 �→ 1 + n 0 ) B as n 1 B 1 + n 0 B (But batch verification is faster.) double-scalar fixed windows, recomputing B 1 = 2 128 B . Keygen is fixed-base scalar mult, Machine 32-bit multiplication much faster than ECDH. exercise: For each k , try to minimize number of additions Signing is keygen plus overhead Gates: k precomputed points. depending on message length. AND,

� � � � 15 16 r multiplication Recall Chou timings: Let’s move down a 57164 cycles for keygen, ECC ops: e.g., handle keygen 63526 cycles for signature, verify SB = R + h signing r �→ rB : 205741 cycles for verification, windowing from ECDH. 159128 cycles for ECDH. Point ops: e.g., etter since B is P; Q �→ P + Q ECDH is single-scalar mult. standard base point. faster doubling Verification is double-scalar mult, 256: Compute Field ops: e.g., somewhat slower than ECDH. x 1 ; x 2 �→ x 1 x 2 in F as n 1 B 1 + n 0 B (But batch verification is faster.) double-scalar fixed windows, delayed omputing B 1 = 2 128 B . Keygen is fixed-base scalar mult, Machine insns: e.g., 32-bit multiplication much faster than ECDH. r each k , try to � pipelining er of additions Signing is keygen plus overhead Gates: e.g., recomputed points. depending on message length. AND, OR, XOR

� � � � 15 16 multiplication Recall Chou timings: Let’s move down a level: 57164 cycles for keygen, ECC ops: e.g., eygen 63526 cycles for signature, verify SB = R + hA B : 205741 cycles for verification, windowing etc. ECDH. 159128 cycles for ECDH. Point ops: e.g., B is P; Q �→ P + Q ECDH is single-scalar mult. point. faster doubling etc Verification is double-scalar mult, Compute Field ops: e.g., somewhat slower than ECDH. x 1 ; x 2 �→ x 1 x 2 in F p n 0 B (But batch verification is faster.) windows, delayed carries etc. 2 128 B . Keygen is fixed-base scalar mult, Machine insns: e.g., 32-bit multiplication much faster than ECDH. try to � pipelining etc. additions Signing is keygen plus overhead Gates: e.g., oints. depending on message length. AND, OR, XOR

� � � � 16 17 Recall Chou timings: Let’s move down a level: 57164 cycles for keygen, ECC ops: e.g., 63526 cycles for signature, verify SB = R + hA 205741 cycles for verification, windowing etc. 159128 cycles for ECDH. Point ops: e.g., P; Q �→ P + Q ECDH is single-scalar mult. faster doubling etc. Verification is double-scalar mult, Field ops: e.g., somewhat slower than ECDH. x 1 ; x 2 �→ x 1 x 2 in F p (But batch verification is faster.) delayed carries etc. Keygen is fixed-base scalar mult, Machine insns: e.g., 32-bit multiplication much faster than ECDH. � pipelining etc. Signing is keygen plus overhead Gates: e.g., depending on message length. AND, OR, XOR

� � � � 16 17 Chou timings: Let’s move down a level: Eliminating cycles for keygen, ECC ops: e.g., Have to cycles for signature, verify SB = R + hA of curve 205741 cycles for verification, windowing etc. How to efficiently 159128 cycles for ECDH. Point ops: e.g., additions P; Q �→ P + Q is single-scalar mult. Addition faster doubling etc. erification is double-scalar mult, (( x 1 y 2 + Field ops: e.g., somewhat slower than ECDH. ( y 1 y 2 − x 1 ; x 2 �→ x 1 x 2 in F p batch verification is faster.) uses exp delayed carries etc. Keygen is fixed-base scalar mult, Machine insns: e.g., 32-bit multiplication faster than ECDH. � pipelining etc. Signing is keygen plus overhead Gates: e.g., ending on message length. AND, OR, XOR

� � � � 16 17 timings: Let’s move down a level: Eliminating divisions keygen, ECC ops: e.g., Have to do many additions signature, verify SB = R + hA of curve points: P; r verification, windowing etc. How to efficiently r ECDH. Point ops: e.g., additions into field P; Q �→ P + Q single-scalar mult. Addition ( x 1 ; y 1 ) + faster doubling etc. double-scalar mult, (( x 1 y 2 + y 1 x 2 ) = (1 Field ops: e.g., er than ECDH. ( y 1 y 2 − x 1 x 2 ) = (1 x 1 ; x 2 �→ x 1 x 2 in F p verification is faster.) uses expensive divisions. delayed carries etc. fixed-base scalar mult, Machine insns: e.g., 32-bit multiplication than ECDH. � pipelining etc. eygen plus overhead Gates: e.g., message length. AND, OR, XOR

� � � � 16 17 Let’s move down a level: Eliminating divisions ECC ops: e.g., Have to do many additions signature, verify SB = R + hA of curve points: P; Q �→ P + verification, windowing etc. How to efficiently decompose Point ops: e.g., additions into field ops? P; Q �→ P + Q mult. Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = faster doubling etc. r mult, (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 Field ops: e.g., ECDH. ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 x 1 ; x 2 �→ x 1 x 2 in F p faster.) uses expensive divisions. delayed carries etc. r mult, Machine insns: e.g., 32-bit multiplication � pipelining etc. overhead Gates: e.g., length. AND, OR, XOR

� � � � 17 18 Let’s move down a level: Eliminating divisions ECC ops: e.g., Have to do many additions verify SB = R + hA of curve points: P; Q �→ P + Q . windowing etc. How to efficiently decompose Point ops: e.g., additions into field ops? P; Q �→ P + Q Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = faster doubling etc. (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), Field ops: e.g., ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) x 1 ; x 2 �→ x 1 x 2 in F p uses expensive divisions. delayed carries etc. Machine insns: e.g., 32-bit multiplication � pipelining etc. Gates: e.g., AND, OR, XOR

� � � � 17 18 Let’s move down a level: Eliminating divisions ECC ops: e.g., Have to do many additions verify SB = R + hA of curve points: P; Q �→ P + Q . windowing etc. How to efficiently decompose Point ops: e.g., additions into field ops? P; Q �→ P + Q Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = faster doubling etc. (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), Field ops: e.g., ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) x 1 ; x 2 �→ x 1 x 2 in F p uses expensive divisions. delayed carries etc. Better: postpone divisions Machine insns: e.g., 32-bit multiplication and work with fractions. Represent ( x; y ) as ( X : Y : Z ) � pipelining etc. with x = X=Z , y = Y=Z , Z � = 0. Gates: e.g., AND, OR, XOR

� � � � 17 18 move down a level: Eliminating divisions Addition handle fractions ECC ops: e.g., Have to do many additions SB = R + hA „ X 1 ; Y 1 of curve points: P; Q �→ P + Q . windowing etc. Z 1 Z 1 How to efficiently decompose oint ops: e.g., X 1 Y 2 additions into field ops? Z 1 Z 2 Q �→ P + Q 1 + d X Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = faster doubling etc. Z (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), Field ops: e.g., Y 1 Y 2 ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 2 �→ x 1 x 2 in F p 1 − d X uses expensive divisions. Z delayed carries etc. Better: postpone divisions Machine insns: e.g., multiplication and work with fractions. Represent ( x; y ) as ( X : Y : Z ) � pipelining etc. with x = X=Z , y = Y=Z , Z � = 0. Gates: e.g., AND, OR, XOR

17 18 a level: Eliminating divisions Addition now has to handle fractions as e.g., Have to do many additions hA „ X 1 ; Y 1 « „ X 2 of curve points: P; Q �→ P + Q . + windowing etc. Z 1 Z 1 Z 2 How to efficiently decompose e.g., X 1 Z 2 + Y 1 Y 2 X 2 additions into field ops? Z 1 Z 1 Z 2 Q 1 + d X 1 X 2 Y 1 Y 2 Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = faster doubling etc. Z 1 Z 2 Z 1 Z 2 (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), e.g., Y 1 Z 2 − X 1 Y 2 X 2 ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 1 Z 2 in F p 1 − d X 1 X 2 Y 1 Y 2 uses expensive divisions. Z 1 Z 2 Z 1 Z 2 ed carries etc. Better: postpone divisions e.g., multiplication and work with fractions. Represent ( x; y ) as ( X : Y : Z ) elining etc. with x = X=Z , y = Y=Z , Z � = 0. e.g., OR

17 18 Eliminating divisions Addition now has to handle fractions as input: Have to do many additions „ X 1 ; Y 1 « „ X 2 ; Y 2 « of curve points: P; Q �→ P + Q . + = tc. Z 1 Z 1 Z 2 Z 2 How to efficiently decompose X 1 Z 2 + Y 1 Y 2 X 2 additions into field ops? Z 1 Z 1 Z 2 , 1 + d X 1 X 2 Y 1 Y 2 Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = etc. Z 1 Z 2 Z 1 Z 2 (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), Y 1 Z 2 − X 1 Y 2 X 2 ! ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 1 Z 2 = 1 − d X 1 X 2 Y 1 Y 2 uses expensive divisions. Z 1 Z 2 Z 1 Z 2 etc. Better: postpone divisions and work with fractions. Represent ( x; y ) as ( X : Y : Z ) with x = X=Z , y = Y=Z , Z � = 0.

18 19 Eliminating divisions Addition now has to handle fractions as input: Have to do many additions „ X 1 ; Y 1 « „ X 2 ; Y 2 « of curve points: P; Q �→ P + Q . + = Z 1 Z 1 Z 2 Z 2 How to efficiently decompose X 1 Z 2 + Y 1 Y 2 X 2 additions into field ops? Z 1 Z 1 Z 2 , 1 + d X 1 X 2 Y 1 Y 2 Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = Z 1 Z 2 Z 1 Z 2 (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), Y 1 Z 2 − X 1 Y 2 X 2 ! ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 1 Z 2 = 1 − d X 1 X 2 Y 1 Y 2 uses expensive divisions. Z 1 Z 2 Z 1 Z 2 Better: postpone divisions and work with fractions. Represent ( x; y ) as ( X : Y : Z ) with x = X=Z , y = Y=Z , Z � = 0.

18 19 Eliminating divisions Addition now has to handle fractions as input: Have to do many additions „ X 1 ; Y 1 « „ X 2 ; Y 2 « of curve points: P; Q �→ P + Q . + = Z 1 Z 1 Z 2 Z 2 How to efficiently decompose X 1 Z 2 + Y 1 Y 2 X 2 additions into field ops? Z 1 Z 1 Z 2 , 1 + d X 1 X 2 Y 1 Y 2 Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = Z 1 Z 2 Z 1 Z 2 (( x 1 y 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), Y 1 Z 2 − X 1 Y 2 X 2 ! ( y 1 y 2 − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 1 Z 2 = 1 − d X 1 X 2 Y 1 Y 2 uses expensive divisions. Z 1 Z 2 Z 1 Z 2 Better: postpone divisions Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) , and work with fractions. Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 Represent ( x; y ) as ( X : Y : Z ) ! Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) with x = X=Z , y = Y=Z , Z � = 0. Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2

18 19 „ X 1 Eliminating divisions Addition now has to i.e. Z 1 handle fractions as input: to do many additions „ X 3 „ X 1 ; Y 1 « „ X 2 ; Y 2 « curve points: P; Q �→ P + Q . = ; + = Z 3 Z 1 Z 1 Z 2 Z 2 to efficiently decompose where X 1 Z 2 + Y 1 Y 2 X 2 additions into field ops? Z 1 Z 1 Z 2 F = Z 2 1 Z , 1 + d X 1 X 2 Y 1 Y 2 Addition ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = G = Z 2 1 Z Z 1 Z 2 Z 1 Z 2 + y 1 x 2 ) = (1 + dx 1 x 2 y 1 y 2 ), X 3 = Z 1 Y 1 Z 2 − X 1 Y 2 X 2 ! − x 1 x 2 ) = (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 1 Z 2 Y 3 = Z 1 Z = 1 − d X 1 X 2 Y 1 Y 2 expensive divisions. Z 3 = FG Z 1 Z 2 Z 1 Z 2 Better: postpone divisions Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) Input to , ork with fractions. Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 X 1 ; Y 1 ; Z resent ( x; y ) as ( X : Y : Z ) Output from ! Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) = X=Z , y = Y=Z , Z � = 0. X 3 ; Y 3 ; Z Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2

18 19 „ X 1 « „ ; Y 1 divisions Addition now has to i.e. + Z 1 Z 1 handle fractions as input: many additions „ X 3 « ; Y 3 „ X 1 ; Y 1 « „ X 2 ; Y 2 « P; Q �→ P + Q . = + = Z 3 Z 3 Z 1 Z 1 Z 2 Z 2 efficiently decompose where X 1 Z 2 + Y 1 Y 2 X 2 field ops? Z 1 Z 1 Z 2 F = Z 2 1 Z 2 2 − dX 1 X , 1 + d X 1 X 2 Y 1 Y 2 + ( x 2 ; y 2 ) = G = Z 2 1 Z 2 2 + dX 1 X Z 1 Z 2 Z 1 Z 2 (1 + dx 1 x 2 y 1 y 2 ), X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 Z 2 − X 1 Y 2 X 2 ! (1 − dx 1 x 2 y 1 y 2 )) Z 1 Z 1 Z 2 Y 3 = Z 1 Z 2 ( Y 1 Y 2 − = 1 − d X 1 X 2 Y 1 Y 2 divisions. Z 3 = FG . Z 1 Z 2 Z 1 Z 2 one divisions Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) Input to addition algo , fractions. Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z as ( X : Y : Z ) Output from addition ! Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) = Y=Z , Z � = 0. X 3 ; Y 3 ; Z 3 . No divisions Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2

18 19 „ X 1 « „ X 2 « ; Y 1 ; Y 2 Addition now has to i.e. + Z 1 Z 1 Z 2 Z 2 handle fractions as input: additions „ X 3 « ; Y 3 „ X 1 ; Y 1 « „ X 2 ; Y 2 « + Q . = + = Z 3 Z 3 Z 1 Z 1 Z 2 Z 2 ose where X 1 Z 2 + Y 1 Y 2 X 2 Z 1 Z 1 Z 2 F = Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2 , , 1 + d X 1 X 2 Y 1 Y 2 ) = G = Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 , Z 1 Z 2 Z 1 Z 2 y 1 y 2 ), X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , Y 1 Z 2 − X 1 Y 2 X 2 ! y 1 y 2 )) Z 1 Z 1 Z 2 Y 3 = Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , = 1 − d X 1 X 2 Y 1 Y 2 Z 3 = FG . Z 1 Z 2 Z 1 Z 2 Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) Input to addition algorithm: , Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z 2 . : Z ) Output from addition algorithm: ! Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) Z � = 0. X 3 ; Y 3 ; Z 3 . No divisions needed! Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2

19 20 „ X 1 « „ X 2 « ; Y 1 ; Y 2 Addition now has to i.e. + Z 1 Z 1 Z 2 Z 2 handle fractions as input: „ X 3 « ; Y 3 „ X 1 ; Y 1 « „ X 2 ; Y 2 « = + = Z 3 Z 3 Z 1 Z 1 Z 2 Z 2 where X 1 Z 2 + Y 1 Y 2 X 2 Z 1 Z 1 Z 2 F = Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2 , , 1 + d X 1 X 2 Y 1 Y 2 G = Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 , Z 1 Z 2 Z 1 Z 2 X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , Y 1 Z 2 − X 1 Y 2 X 2 ! Z 1 Z 1 Z 2 Y 3 = Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , = 1 − d X 1 X 2 Y 1 Y 2 Z 3 = FG . Z 1 Z 2 Z 1 Z 2 Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) Input to addition algorithm: , Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z 2 . Output from addition algorithm: ! Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) X 3 ; Y 3 ; Z 3 . No divisions needed! Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2

19 20 „ X 1 « „ X 2 « ; Y 1 ; Y 2 Addition now has to Eliminate i.e. + Z 1 Z 1 Z 2 Z 2 fractions as input: to save multiplications: „ X 3 « ; Y 3 Y 1 « „ X 2 ; Y 2 « A = Z 1 · = + = Z 3 Z 3 Z 1 Z 2 Z 2 C = X 1 · where Z 2 + Y 1 Y 2 X 2 D = Y 1 · Z 1 Z 2 F = Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2 , , E = d · C d X 1 X 2 Y 1 Y 2 G = Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 , Z 1 Z 2 Z 1 Z 2 F = B − X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , Z 2 − X 1 Y 2 X 2 X 3 = A · ! Z 1 Z 2 Y 3 = Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , = Y 3 = A · d X 1 X 2 Y 1 Y 2 Z 3 = FG . Z 1 Z 2 Z 1 Z 2 Z 3 = F · 2 ( X 1 Y 2 + Y 1 X 2 ) Input to addition algorithm: Cost: 11 , 2 2 + dX 1 X 2 Y 1 Y 2 X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z 2 . M ; S are Output from addition algorithm: Choose small ! 2 ( Y 1 Y 2 − X 1 X 2 ) X 3 ; Y 3 ; Z 3 . No divisions needed! 2 2 − dX 1 X 2 Y 1 Y 2

19 20 „ X 1 « „ X 2 « ; Y 1 ; Y 2 has to Eliminate common i.e. + Z 1 Z 1 Z 2 Z 2 as input: to save multiplications: „ X 3 « ; Y 3 X 2 ; Y 2 « A = Z 1 · Z 2 ; B = A = = Z 3 Z 3 Z 2 Z 2 C = X 1 · X 2 ; where D = Y 1 · Y 2 ; 2 F = Z 2 1 Z 2 2 2 − dX 1 X 2 Y 1 Y 2 , , E = d · C · D ; Y 2 G = Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 , Z 2 F = B − E ; G = B X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , X 3 = A · F · ( X 1 · Y 2 ! 2 Y 3 = Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , = Y 3 = A · G · ( D − C Y 2 Z 3 = FG . Z 2 Z 3 = F · G . Y 1 X 2 ) Input to addition algorithm: Cost: 11 M + 1 S + , 2 Y 1 Y 2 X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z 2 . M ; S are costs of mult, Output from addition algorithm: Choose small d for ! X 1 X 2 ) X 3 ; Y 3 ; Z 3 . No divisions needed! 2 Y 1 Y 2

19 20 „ X 1 « „ X 2 « ; Y 1 ; Y 2 Eliminate common subexpressions i.e. + Z 1 Z 1 Z 2 Z 2 to save multiplications: „ X 3 « ; Y 3 A = Z 1 · Z 2 ; B = A 2 ; = = Z 3 Z 3 C = X 1 · X 2 ; where D = Y 1 · Y 2 ; F = Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2 , E = d · C · D ; G = Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 , F = B − E ; G = B + E ; X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , X 3 = A · F · ( X 1 · Y 2 + Y 1 · X Y 3 = Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , Y 3 = A · G · ( D − C ); Z 3 = FG . Z 3 = F · G . Input to addition algorithm: Cost: 11 M + 1 S + 1 M d where X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z 2 . M ; S are costs of mult, squa Output from addition algorithm: Choose small d for cheap M X 3 ; Y 3 ; Z 3 . No divisions needed!

20 21 „ X 1 « „ X 2 « ; Y 1 ; Y 2 Eliminate common subexpressions i.e. + Z 1 Z 1 Z 2 Z 2 to save multiplications: „ X 3 « ; Y 3 A = Z 1 · Z 2 ; B = A 2 ; = Z 3 Z 3 C = X 1 · X 2 ; where D = Y 1 · Y 2 ; F = Z 2 1 Z 2 2 − dX 1 X 2 Y 1 Y 2 , E = d · C · D ; G = Z 2 1 Z 2 2 + dX 1 X 2 Y 1 Y 2 , F = B − E ; G = B + E ; X 3 = Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , X 3 = A · F · ( X 1 · Y 2 + Y 1 · X 2 ); Y 3 = Z 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , Y 3 = A · G · ( D − C ); Z 3 = FG . Z 3 = F · G . Input to addition algorithm: Cost: 11 M + 1 S + 1 M d where X 1 ; Y 1 ; Z 1 ; X 2 ; Y 2 ; Z 2 . M ; S are costs of mult, square. Output from addition algorithm: Choose small d for cheap M d . X 3 ; Y 3 ; Z 3 . No divisions needed!

20 21 « „ X 2 « ; Y 1 ; Y 2 Eliminate common subexpressions Can do b 1 + Z 1 Z 1 Z 2 Z 2 to save multiplications: Obvious « 3 ; Y 3 A = Z 1 · Z 2 ; B = A 2 ; compute Z 3 C = X 1 · X 2 ; of polys D = Y 1 · Y 2 ; C = X 1 · 1 Z 2 2 2 − dX 1 X 2 Y 1 Y 2 , E = d · C · D ; D = Y 1 · 1 Z 2 2 2 + dX 1 X 2 Y 1 Y 2 , F = B − E ; G = B + E ; M = X 1 Z 1 Z 2 ( X 1 Y 2 + Y 1 X 2 ) F , X 3 = A · F · ( X 1 · Y 2 + Y 1 · X 2 ); 1 Z 2 ( Y 1 Y 2 − X 1 X 2 ) G , Y 3 = A · G · ( D − C ); G . Z 3 = F · G . to addition algorithm: Cost: 11 M + 1 S + 1 M d where ; Z 1 ; X 2 ; Y 2 ; Z 2 . M ; S are costs of mult, square. Output from addition algorithm: Choose small d for cheap M d . ; Z 3 . No divisions needed!

20 21 „ X 2 « ; Y 2 Eliminate common subexpressions Can do better: 10 M Z 2 Z 2 to save multiplications: Obvious 4 M metho A = Z 1 · Z 2 ; B = A 2 ; compute product C C = X 1 · X 2 ; of polys X 1 + Y 1 t , D = Y 1 · Y 2 ; C = X 1 · X 2 ; 1 X 2 Y 1 Y 2 , E = d · C · D ; D = Y 1 · Y 2 ; 1 X 2 Y 1 Y 2 , F = B − E ; G = B + E ; M = X 1 · Y 2 + Y 1 · 2 + Y 1 X 2 ) F , X 3 = A · F · ( X 1 · Y 2 + Y 1 · X 2 ); − X 1 X 2 ) G , Y 3 = A · G · ( D − C ); Z 3 = F · G . algorithm: Cost: 11 M + 1 S + 1 M d where ; Z 2 . M ; S are costs of mult, square. dition algorithm: Choose small d for cheap M d . divisions needed!

20 21 « Eliminate common subexpressions Can do better: 10 M + 1 S + to save multiplications: Obvious 4 M method to A = Z 1 · Z 2 ; B = A 2 ; compute product C + Mt + C = X 1 · X 2 ; of polys X 1 + Y 1 t , X 2 + Y 2 t : D = Y 1 · Y 2 ; C = X 1 · X 2 ; E = d · C · D ; D = Y 1 · Y 2 ; F = B − E ; G = B + E ; M = X 1 · Y 2 + Y 1 · X 2 . F , X 3 = A · F · ( X 1 · Y 2 + Y 1 · X 2 ); G , Y 3 = A · G · ( D − C ); Z 3 = F · G . rithm: Cost: 11 M + 1 S + 1 M d where M ; S are costs of mult, square. rithm: Choose small d for cheap M d . needed!

21 22 Eliminate common subexpressions Can do better: 10 M + 1 S + 1 M d . to save multiplications: Obvious 4 M method to A = Z 1 · Z 2 ; B = A 2 ; compute product C + Mt + Dt 2 C = X 1 · X 2 ; of polys X 1 + Y 1 t , X 2 + Y 2 t : D = Y 1 · Y 2 ; C = X 1 · X 2 ; E = d · C · D ; D = Y 1 · Y 2 ; F = B − E ; G = B + E ; M = X 1 · Y 2 + Y 1 · X 2 . X 3 = A · F · ( X 1 · Y 2 + Y 1 · X 2 ); Y 3 = A · G · ( D − C ); Z 3 = F · G . Cost: 11 M + 1 S + 1 M d where M ; S are costs of mult, square. Choose small d for cheap M d .

21 22 Eliminate common subexpressions Can do better: 10 M + 1 S + 1 M d . to save multiplications: Obvious 4 M method to A = Z 1 · Z 2 ; B = A 2 ; compute product C + Mt + Dt 2 C = X 1 · X 2 ; of polys X 1 + Y 1 t , X 2 + Y 2 t : D = Y 1 · Y 2 ; C = X 1 · X 2 ; E = d · C · D ; D = Y 1 · Y 2 ; F = B − E ; G = B + E ; M = X 1 · Y 2 + Y 1 · X 2 . X 3 = A · F · ( X 1 · Y 2 + Y 1 · X 2 ); Karatsuba’s 3 M method: Y 3 = A · G · ( D − C ); Z 3 = F · G . C = X 1 · X 2 ; D = Y 1 · Y 2 ; Cost: 11 M + 1 S + 1 M d where M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D . M ; S are costs of mult, square. Choose small d for cheap M d .

21 22 Eliminate common subexpressions Can do better: 10 M + 1 S + 1 M d . Faster doublin save multiplications: Obvious 4 M method to ( x 1 ; y 1 ) + 1 · Z 2 ; B = A 2 ; compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 · X 2 ; of polys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 · Y 2 ; ((2 x 1 y 1 ) = C = X 1 · X 2 ; ( y 2 1 − x 2 · C · D ; 1 D = Y 1 · Y 2 ; − E ; G = B + E ; M = X 1 · Y 2 + Y 1 · X 2 . A · F · ( X 1 · Y 2 + Y 1 · X 2 ); Karatsuba’s 3 M method: · G · ( D − C ); · G . C = X 1 · X 2 ; D = Y 1 · Y 2 ; 11 M + 1 S + 1 M d where M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D . re costs of mult, square. ose small d for cheap M d .

21 22 on subexpressions Can do better: 10 M + 1 S + 1 M d . Faster doubling multiplications: Obvious 4 M method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = = A 2 ; compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ of polys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − ((2 x 1 y 1 ) = (1 + dx 2 1 C = X 1 · X 2 ; ( y 2 1 − x 2 1 ) = (1 − dx D = Y 1 · Y 2 ; B + E ; M = X 1 · Y 2 + Y 1 · X 2 . · Y 2 + Y 1 · X 2 ); Karatsuba’s 3 M method: − C ); C = X 1 · X 2 ; D = Y 1 · Y 2 ; + 1 M d where M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D . of mult, square. for cheap M d .

21 22 ressions Can do better: 10 M + 1 S + 1 M d . Faster doubling Obvious 4 M method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 of polys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), C = X 1 · X 2 ; ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). D = Y 1 · Y 2 ; M = X 1 · Y 2 + Y 1 · X 2 . · X 2 ); Karatsuba’s 3 M method: C = X 1 · X 2 ; D = Y 1 · Y 2 ; where M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D . square. M d .

22 23 Can do better: 10 M + 1 S + 1 M d . Faster doubling Obvious 4 M method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 ), of polys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 )) = ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), C = X 1 · X 2 ; ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). D = Y 1 · Y 2 ; M = X 1 · Y 2 + Y 1 · X 2 . Karatsuba’s 3 M method: C = X 1 · X 2 ; D = Y 1 · Y 2 ; M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D .

22 23 Can do better: 10 M + 1 S + 1 M d . Faster doubling Obvious 4 M method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 ), of polys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 )) = ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), C = X 1 · X 2 ; ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). D = Y 1 · Y 2 ; x 2 1 + y 2 1 = 1 + dx 2 1 y 2 M = X 1 · Y 2 + Y 1 · X 2 . 1 so ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = Karatsuba’s 3 M method: ((2 x 1 y 1 ) = ( x 2 1 + y 2 1 ), ( y 2 1 − x 2 1 ) = (2 − x 2 1 − y 2 C = X 1 · X 2 ; 1 )). D = Y 1 · Y 2 ; M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D .

22 23 Can do better: 10 M + 1 S + 1 M d . Faster doubling Obvious 4 M method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 ), of polys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 )) = ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), C = X 1 · X 2 ; ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). D = Y 1 · Y 2 ; x 2 1 + y 2 1 = 1 + dx 2 1 y 2 M = X 1 · Y 2 + Y 1 · X 2 . 1 so ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = Karatsuba’s 3 M method: ((2 x 1 y 1 ) = ( x 2 1 + y 2 1 ), ( y 2 1 − x 2 1 ) = (2 − x 2 1 − y 2 C = X 1 · X 2 ; 1 )). D = Y 1 · Y 2 ; Again eliminate divisions M = ( X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D . using ( X : Y : Z ): only 3 M + 4 S . Much faster than addition.

22 23 do better: 10 M + 1 S + 1 M d . Faster doubling More add Obvious 4 M method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = Dual addition compute product C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 ), ( x 1 ; y 1 ) + olys X 1 + Y 1 t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 )) = (( x 1 y 1 + ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), ( x 1 y 1 − 1 · X 2 ; ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). Low degree, 1 · Y 2 ; x 2 1 + y 2 1 = 1 + dx 2 1 y 2 1 · Y 2 + Y 1 · X 2 . 1 so ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = ratsuba’s 3 M method: ((2 x 1 y 1 ) = ( x 2 1 + y 2 1 ), ( y 2 1 − x 2 1 ) = (2 − x 2 1 − y 2 1 · X 2 ; 1 )). 1 · Y 2 ; Again eliminate divisions X 1 + Y 1 ) · ( X 2 + Y 2 ) − C − D . using ( X : Y : Z ): only 3 M + 4 S . Much faster than addition.

22 23 10 M + 1 S + 1 M d . Faster doubling More addition strategies method to ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = Dual addition formula: duct C + Mt + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 ), ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = t , X 2 + Y 2 t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 )) = (( x 1 y 1 + x 2 y 2 ) = ( x 1 ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), ( x 1 y 1 − x 2 y 2 ) = ( x 1 ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). Low degree, no need x 2 1 + y 2 1 = 1 + dx 2 1 y 2 1 · X 2 . 1 so ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = method: ((2 x 1 y 1 ) = ( x 2 1 + y 2 1 ), ( y 2 1 − x 2 1 ) = (2 − x 2 1 − y 2 1 )). Again eliminate divisions ( X 2 + Y 2 ) − C − D . using ( X : Y : Z ): only 3 M + 4 S . Much faster than addition.

22 23 + 1 M d . Faster doubling More addition strategies ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = Dual addition formula: + Dt 2 (( x 1 y 1 + y 1 x 1 ) = (1+ dx 1 x 1 y 1 y 1 ), ( x 1 ; y 1 ) + ( x 2 ; y 2 ) = t : ( y 1 y 1 − x 1 x 1 ) = (1 − dx 1 x 1 y 1 y 1 )) = (( x 1 y 1 + x 2 y 2 ) = ( x 1 x 2 + y 1 y 2 ((2 x 1 y 1 ) = (1 + dx 2 1 y 2 1 ), ( x 1 y 1 − x 2 y 2 ) = ( x 1 y 2 − x 2 y 1 ( y 2 1 − x 2 1 ) = (1 − dx 2 1 y 2 1 )). Low degree, no need for d . x 2 1 + y 2 1 = 1 + dx 2 1 y 2 1 so ( x 1 ; y 1 ) + ( x 1 ; y 1 ) = ((2 x 1 y 1 ) = ( x 2 1 + y 2 1 ), ( y 2 1 − x 2 1 ) = (2 − x 2 1 − y 2 1 )). Again eliminate divisions − C − D . using ( X : Y : Z ): only 3 M + 4 S . Much faster than addition.

Recommend

More recommend