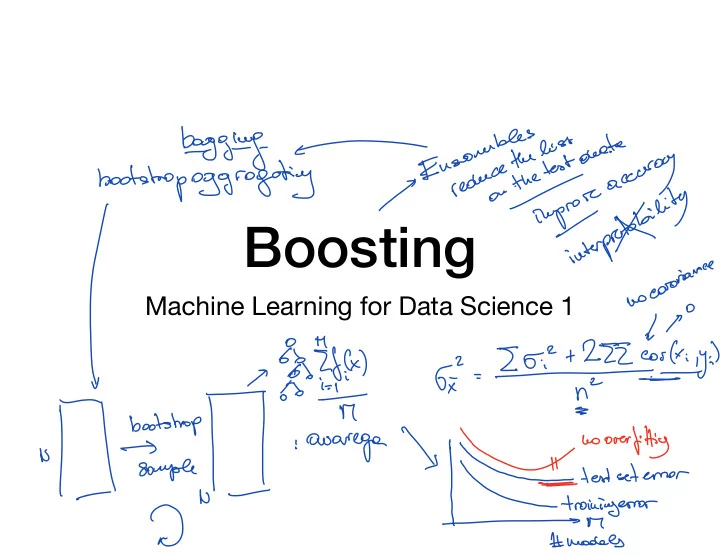

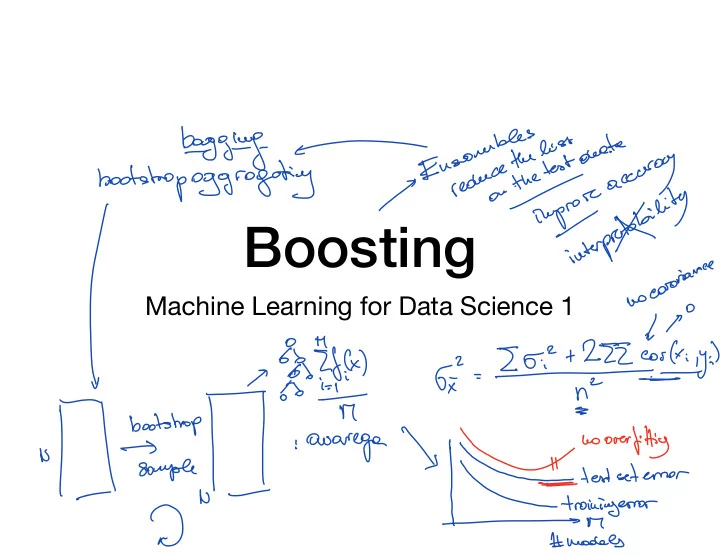

Boosting

Machine Learning for Data Science 1

[Figure: training error plotted against the number of models.]

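AdaBoost.M1 for binary classification (Schapire, 1997)

Setting: $Y \in \{-1, 1\}$, classifier $G(x)$.

1. Initialize the training data weights $w_i = 1/N$, $i = 1, \dots, N$.
2. For $m = 1$ to $M$:
   (a) Fit a classifier $G_m(x)$ to the weighted training data.
   (b) Compute the weighted error $\mathrm{err}_m = \dfrac{\sum_{i=1}^{N} w_i \, I(y_i \neq G_m(x_i))}{\sum_{i=1}^{N} w_i}$.
   (c) Compute $\alpha_m = \log\dfrac{1 - \mathrm{err}_m}{\mathrm{err}_m}$.
   (d) Set $w_i \leftarrow w_i \exp\left[\alpha_m \, I(y_i \neq G_m(x_i))\right]$. For correct predictions the weights are not changed.
3. Output the combined prediction $G(x) = \operatorname{sign}\left[\sum_{m=1}^{M} \alpha_m G_m(x)\right]$ (similar to bagging).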
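The AdaBoost.M1 steps can be sketched in pure Python. This is a minimal illustration, not a reference implementation: the weak learner is assumed to be a one-feature decision stump, and the names `fit_stump`, `stump_predict`, `adaboost_m1` and `adaboost_predict` are made up for this example. The clipping of the error before taking the logarithm is an added safeguard that is not part of the notes.

```python
import math

def fit_stump(X, y, w):
    """Return the stump (feature, threshold, sign) with the lowest weighted error."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for s in (1, -1):
                # The stump predicts s when x[j] <= t and -s otherwise.
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if (s if row[j] <= t else -s) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, s)
    return best[1:]

def stump_predict(stump, row):
    j, t, s = stump
    return s if row[j] <= t else -s

def adaboost_m1(X, y, M=10):
    n = len(X)
    w = [1.0 / n] * n                                  # step 1: uniform weights
    ensemble = []
    for _ in range(M):                                 # step 2
        stump = fit_stump(X, y, w)                     # (a) fit to weighted data
        miss = [stump_predict(stump, r) != yi for r, yi in zip(X, y)]
        err = sum(wi for wi, m in zip(w, miss) if m) / sum(w)   # (b) weighted error
        err = min(max(err, 1e-10), 1 - 1e-10)          # safeguard against log(0) (assumption)
        alpha = math.log((1 - err) / err)              # (c)
        # (d) only misclassified points are reweighted
        w = [wi * math.exp(alpha) if m else wi for wi, m in zip(w, miss)]
        ensemble.append((alpha, stump))
    return ensemble

def adaboost_predict(ensemble, row):
    """Step 3: sign of the alpha-weighted vote (ties resolved to +1)."""
    score = sum(a * stump_predict(s, row) for a, s in ensemble)
    return 1 if score >= 0 else -1
```

On a one-dimensional toy set such as `X = [[0], [1], [2], [3], [4], [5]]` with labels `[1, 1, -1, -1, 1, 1]`, no single stump separates the classes, but a weighted vote of a few stumps can.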

[Diagram: classifiers $G_1(x), G_2(x), \dots, G_M(x)$ are each fitted to a reweighted version of the training sample and combined as $G(x) = \operatorname{sign}\left[\sum_{m=1}^{M} \alpha_m G_m(x)\right]$.]

AdaBoost increases the weights of misclassified instances. The result is a dramatic increase in performance, which makes the method very powerful.

Why does it work? What error are we optimizing?

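Additive models

Boosting combines the classifiers $G_1, G_2, G_3, \dots$ into $G(x) = \operatorname{sign}\left[\sum_{m=1}^{M} \alpha_m G_m(x)\right]$. More generally, this is a basis function expansion

$$f(x) = \sum_{m=1}^{M} \beta_m \, b(x; \gamma_m),$$

where $\beta_m$ are the expansion coefficients (weights) and $b(x; \gamma_m)$ are basis functions with parameters $\gamma_m$. The model is fitted by solving

$$\min_{\{\beta_m, \gamma_m\}_{1}^{M}} \sum_{i=1}^{N} L\left(y_i, \sum_{m=1}^{M} \beta_m \, b(x_i; \gamma_m)\right).$$

This is a computationally intensive optimization: optimizing all of the parameters ALL AT ONCE is problematic.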

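Forward stagewise additive modeling

Approximates the solution to the joint minimization over all $\beta_m, \gamma_m$ by adding one new basis function to the expansion at a time, without adjusting the coefficients already inferred.

1. Initialize $f_0(x) = 0$.
2. For $m = 1$ to $M$:
   (a) Compute $(\beta_m, \gamma_m) = \arg\min_{\beta, \gamma} \sum_{i=1}^{N} L\left(y_i, f_{m-1}(x_i) + \beta \, b(x_i; \gamma)\right)$.
   (b) Set $f_m(x) = f_{m-1}(x) + \beta_m \, b(x; \gamma_m)$, which corrects the output of the previous expansion.
3. Output $f_M(x)$.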

Loss function

What is the loss function optimized by AdaBoost.M1? Can similar procedures be used for other loss functions?

AdaBoost.M1 is equivalent to forward stagewise additive modeling that uses the exponential loss

$$L(y, f(x)) = \exp(-y \, f(x)).$$

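In AdaBoost.M1 the basis functions are the classifiers $G_m(x) \in \{-1, 1\}$. For exponential loss we need to solve

$$(\beta_m, G_m) = \arg\min_{\beta, G} \sum_{i=1}^{N} \exp\left[-y_i \left(f_{m-1}(x_i) + \beta \, G(x_i)\right)\right] = \arg\min_{\beta, G} \sum_{i=1}^{N} w_i^{(m)} \exp\left(-\beta \, y_i \, G(x_i)\right),$$

where $w_i^{(m)} = \exp\left(-y_i \, f_{m-1}(x_i)\right)$ is a weight applied to each observation; it changes with every iteration. Splitting the sum into correctly and incorrectly classified observations gives

$$e^{-\beta} \sum_{y_i = G(x_i)} w_i^{(m)} + e^{\beta} \sum_{y_i \neq G(x_i)} w_i^{(m)} = \left(e^{\beta} - e^{-\beta}\right) \sum_{i=1}^{N} w_i^{(m)} I(y_i \neq G(x_i)) + e^{-\beta} \sum_{i=1}^{N} w_i^{(m)}.$$

Hence, for any $\beta > 0$,

$$G_m = \arg\min_{G} \sum_{i=1}^{N} w_i^{(m)} I(y_i \neq G(x_i)),$$

the classifier that minimizes the weighted error rate.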

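What is $\beta_m$?

$$\beta_m = \frac{1}{2} \log\frac{1 - \mathrm{err}_m}{\mathrm{err}_m}, \qquad \mathrm{err}_m = \frac{\sum_{i=1}^{N} w_i^{(m)} I(y_i \neq G_m(x_i))}{\sum_{i=1}^{N} w_i^{(m)}}.$$

The update of the approximation is $f_m(x) = f_{m-1}(x) + \beta_m G_m(x)$, so the weights for the next iteration are

$$w_i^{(m+1)} = w_i^{(m)} \, e^{-\beta_m y_i G_m(x_i)}.$$

Using $-y_i G_m(x_i) = 2 \, I(y_i \neq G_m(x_i)) - 1$, this becomes

$$w_i^{(m+1)} = w_i^{(m)} \, e^{\alpha_m I(y_i \neq G_m(x_i))} \, e^{-\beta_m}, \qquad \alpha_m = 2 \beta_m,$$

which is the AdaBoost.M1 weight update; the factor $e^{-\beta_m}$ multiplies all weights equally and has no effect.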
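The expression for $\beta_m$ follows from a one-line minimization. The sketch below fills in that step, assuming $G_m$ is already fixed and writing $w_i^{(m)}$ for the observation weights and $\mathrm{err}_m$ for the normalized weighted error of $G_m$.

```latex
% Objective in beta for a fixed classifier G_m, divided by the total weight:
\[
  J(\beta) \;=\; e^{-\beta}\,(1 - \mathrm{err}_m) \;+\; e^{\beta}\,\mathrm{err}_m ,
  \qquad
  \mathrm{err}_m = \frac{\sum_{i} w_i^{(m)} I\bigl(y_i \neq G_m(x_i)\bigr)}{\sum_{i} w_i^{(m)}} .
\]
% Set the derivative to zero:
\[
  \frac{dJ}{d\beta} \;=\; -e^{-\beta}\,(1 - \mathrm{err}_m) + e^{\beta}\,\mathrm{err}_m \;=\; 0
  \;\;\Longrightarrow\;\;
  e^{2\beta} = \frac{1 - \mathrm{err}_m}{\mathrm{err}_m}
  \;\;\Longrightarrow\;\;
  \beta_m = \frac{1}{2}\log\frac{1 - \mathrm{err}_m}{\mathrm{err}_m} .
\]
% J is convex in beta (its second derivative equals J itself, which is positive),
% so this stationary point is the minimum.
```

For example, $\mathrm{err}_m = 0.25$ gives $\beta_m = \tfrac{1}{2}\log 3 \approx 0.55$, while $\mathrm{err}_m = 0.5$ gives $\beta_m = 0$: a classifier no better than chance receives no weight.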

AdaBoost recap

At each iteration:
- choose the classifier $G_m$ that minimizes the weighted error;
- use it to estimate the error $\mathrm{err}_m$ and the coefficient $\beta_m$;
- use these to compute the weights $w_i^{(m+1)}$ for the next iteration;
- update the overall classifier with the new model, $f_m(x) = f_{m-1}(x) + \beta_m G_m(x)$.

AdaBoost is therefore a forward stagewise additive model with the exponential loss $L(y, f(x)) = \exp(-y \, f(x))$.

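Boosting trees

Formalization: trees partition the feature space into disjoint regions $R_j$, $j = 1, \dots, J$, and a constant $\gamma_j$ is assigned to each region, so that $x \in R_j \Rightarrow f(x) = \gamma_j$. Formally,

$$T(x; \Theta) = \sum_{j=1}^{J} \gamma_j \, I(x \in R_j), \qquad \Theta = \{R_j, \gamma_j\}_{1}^{J}.$$

The optimal parameters are

$$\hat{\Theta} = \arg\min_{\Theta} \sum_{j=1}^{J} \sum_{x_i \in R_j} L(y_i, \gamma_j).$$

Finding $\gamma_j$ given $R_j$ is easy. Finding the regions $R_j$ is hard and relies on heuristics: greedy, top-down recursive partitioning.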

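Boosted tree model

$$f_M(x) = \sum_{m=1}^{M} T(x; \Theta_m),$$

induced in a forward stagewise manner. At each stage we solve

$$\hat{\Theta}_m = \arg\min_{\Theta_m} \sum_{i=1}^{N} L\left(y_i, f_{m-1}(x_i) + T(x_i; \Theta_m)\right).$$

Given the regions $R_{jm}$, the constants are

$$\hat{\gamma}_{jm} = \arg\min_{\gamma_{jm}} \sum_{x_i \in R_{jm}} L\left(y_i, f_{m-1}(x_i) + \gamma_{jm}\right).$$

Finding the regions is difficult, but in some cases the problem simplifies.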

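For squared-error loss, $L(y, f(x)) = (y - f(x))^2$, we have

$$L\left(y_i, f_{m-1}(x_i) + T(x_i; \Theta_m)\right) = \left(y_i - f_{m-1}(x_i) - T(x_i; \Theta_m)\right)^2,$$

where $y_i - f_{m-1}(x_i)$ is the residual of the current model. The tree should therefore be fitted to the residuals.

[Diagram: $f_0$; tree $T_1$ fitted to the residuals of $f_0$, giving $f_1 = f_0 + T_1$; tree $T_2$ fitted to the residuals of $f_1$, giving $f_2 = f_1 + T_2$; and so on.]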
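Fitting each new tree to the residuals of the current model can be sketched as follows. This is a minimal pure-Python illustration under simplifying assumptions: inputs are one-dimensional, each "tree" is a two-leaf regression stump, and the initial model is taken to be the mean of the targets. The function names are made up for this example.

```python
def fit_regression_stump(x, r):
    """Least-squares stump on 1-D inputs: returns (threshold, left_mean, right_mean)."""
    best = None
    # Skip the largest value as a threshold so the right leaf is never empty.
    for t in sorted(set(x))[:-1]:
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((ri - lm) ** 2 for ri in left) + sum((ri - rm) ** 2 for ri in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    return best[1:]

def boost_squared_error(x, y, M=20):
    f0 = sum(y) / len(y)                    # constant initial model (assumption)
    pred = [f0] * len(y)
    stumps = []
    for _ in range(M):
        # Residuals of the current model: r_i = y_i - f_{m-1}(x_i)
        resid = [yi - pi for yi, pi in zip(y, pred)]
        t, lm, rm = fit_regression_stump(x, resid)
        stumps.append((t, lm, rm))
        # f_m = f_{m-1} + T_m
        pred = [pi + (lm if xi <= t else rm) for xi, pi in zip(x, pred)]
    return f0, stumps

def predict_boosted(model, xi):
    f0, stumps = model
    return f0 + sum(lm if xi <= t else rm for t, lm, rm in stumps)
```

Because each stump is the least-squares fit to the current residuals, the training sum of squared errors cannot increase from one stage to the next.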

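Numerical optimization

The loss of the model on the training data is

$$L(f) = \sum_{i=1}^{N} L(y_i, f(x_i)),$$

where $f$ is a sum of trees. The goal is

$$\hat{\mathbf{f}} = \arg\min_{\mathbf{f}} L(\mathbf{f}), \qquad \mathbf{f} = \left(f(x_1), \dots, f(x_N)\right) \in \mathbb{R}^N.$$

Numerical optimization writes the solution as a sum of component vectors,

$$\mathbf{f}_M = \sum_{m=0}^{M} \mathbf{h}_m, \qquad \mathbf{h}_m \in \mathbb{R}^N,$$

where $\mathbf{f}_0 = \mathbf{h}_0$ is an initial guess and each $\mathbf{f}_m$ is induced based on $\mathbf{f}_{m-1}$, the sum of the previously induced vectors.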

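Steepest descent

$$\mathbf{h}_m = -\rho_m \, \mathbf{g}_m, \qquad g_{im} = \left[\frac{\partial L(y_i, f(x_i))}{\partial f(x_i)}\right]_{f(x_i) = f_{m-1}(x_i)},$$

where $\mathbf{g}_m$ is the gradient and $\rho_m$ the step length.

Negative gradients for common loss functions:
- Regression, $\frac{1}{2}\left(y_i - f(x_i)\right)^2$: $\; y_i - f(x_i)$
- Regression, $|y_i - f(x_i)|$: $\; \operatorname{sign}\left(y_i - f(x_i)\right)$
- Classification, deviance: $k$-th component $I(y_i = G_k) - p_k(x_i)$

We fit the regression trees to the negative gradient.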

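Gradient tree boosting algorithm

1. Initialize $f_0(x) = \arg\min_{\gamma} \sum_{i=1}^{N} L(y_i, \gamma)$.
2. For $m = 1$ to $M$:
   (a) For $i = 1, \dots, N$ compute $r_{im} = -\left[\dfrac{\partial L(y_i, f(x_i))}{\partial f(x_i)}\right]_{f = f_{m-1}}$.
   (b) Fit a regression tree to the targets $r_{im}$, giving regions $R_{jm}$, $j = 1, \dots, J_m$.
   (c) For $j = 1, \dots, J_m$ compute $\gamma_{jm} = \arg\min_{\gamma} \sum_{x_i \in R_{jm}} L\left(y_i, f_{m-1}(x_i) + \gamma\right)$.
   (d) Update $f_m(x) = f_{m-1}(x) + \sum_{j=1}^{J_m} \gamma_{jm} \, I(x \in R_{jm})$.
3. Output $\hat{f}(x) = f_M(x)$.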
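The four sub-steps of gradient tree boosting can be sketched for one concrete loss. The example below assumes absolute-error loss $|y - f(x)|$, one-dimensional inputs and two-region trees. With this loss the initial constant and the per-region constants are medians, and the negative gradient is the sign of the residual. All function names are hypothetical.

```python
from statistics import median

def sign(v):
    return (v > 0) - (v < 0)

def fit_regions(x, targets):
    """Least-squares split of 1-D inputs into two regions; returns the threshold."""
    best = None
    for t in sorted(set(x))[:-1]:           # keep both regions non-empty
        left = [g for xi, g in zip(x, targets) if xi <= t]
        right = [g for xi, g in zip(x, targets) if xi > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((g - lm) ** 2 for g in left) + sum((g - rm) ** 2 for g in right)
        if best is None or sse < best[0]:
            best = (sse, t)
    return best[1]

def gradient_boost_lad(x, y, M=20):
    f0 = median(y)                           # step 1: argmin over gamma of sum |y_i - gamma|
    pred = [f0] * len(y)
    trees = []
    for _ in range(M):
        # (a) negative gradient of |y - f| at the current model
        r = [sign(yi - pi) for yi, pi in zip(y, pred)]
        # (b) fit the regions to the negative gradient
        t = fit_regions(x, r)
        # (c) per-region constant minimizing absolute loss: median of the residuals
        gl = median([yi - pi for xi, yi, pi in zip(x, y, pred) if xi <= t])
        gr = median([yi - pi for xi, yi, pi in zip(x, y, pred) if xi > t])
        trees.append((t, gl, gr))
        # (d) update the model
        pred = [pi + (gl if xi <= t else gr) for xi, pi in zip(x, pred)]
    return f0, trees

def predict_lad(model, xi):
    f0, trees = model
    return f0 + sum(gl if xi <= t else gr for t, gl, gr in trees)
```

Note the division of labor: the regions come from a least-squares fit to the gradient, while the constants inside each region are chosen by a separate one-dimensional minimization of the actual loss.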

AutoML; stacking.
