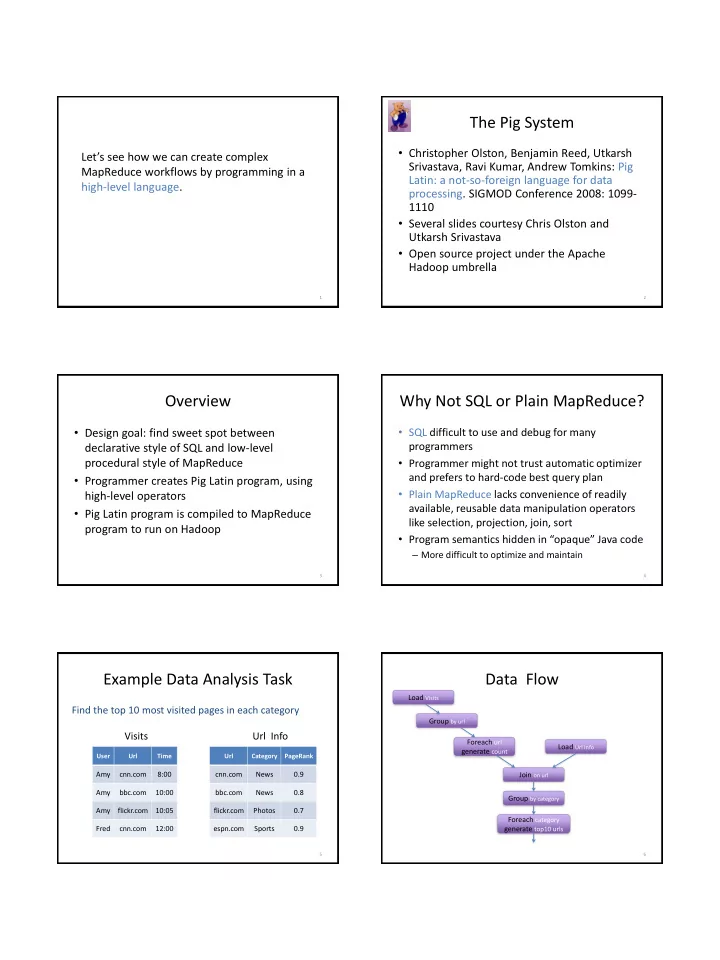

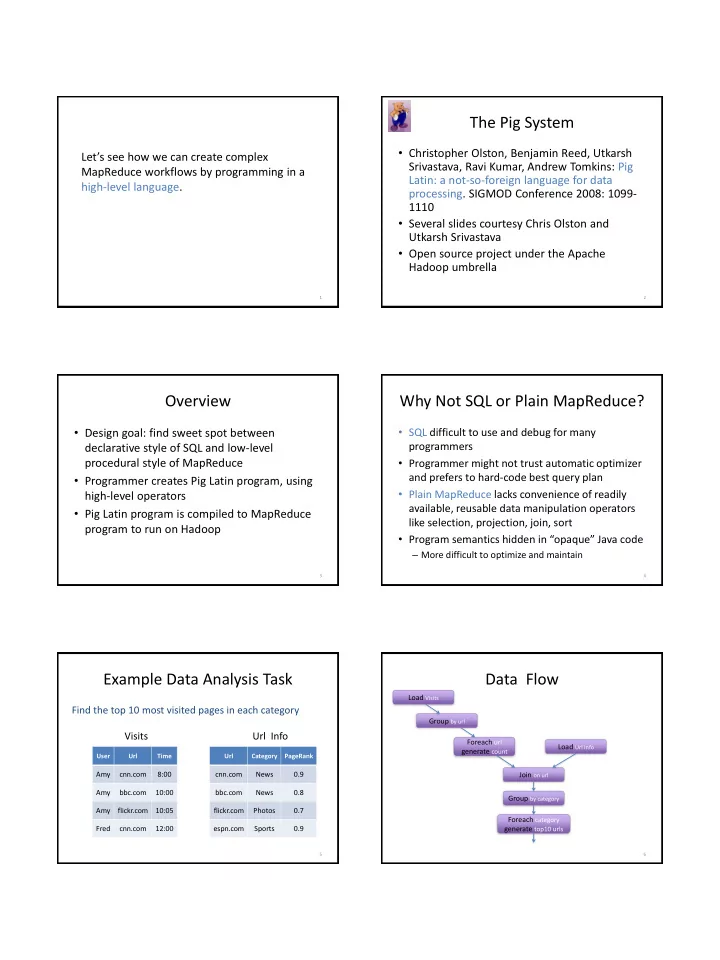

Let’s see how we can create complex MapReduce workflows by programming in a high-level language.

The Pig System
• Christopher Olston, Benjamin Reed, Utkarsh Srivastava, Ravi Kumar, Andrew Tomkins: Pig Latin: a not-so-foreign language for data processing. SIGMOD Conference 2008: 1099-1110
• Several slides courtesy Chris Olston and Utkarsh Srivastava
• Open source project under the Apache Hadoop umbrella

Overview
• Design goal: find sweet spot between declarative style of SQL and low-level procedural style of MapReduce
• Programmer creates Pig Latin program, using high-level operators
• Pig Latin program is compiled to MapReduce program to run on Hadoop

Why Not SQL or Plain MapReduce?
• SQL difficult to use and debug for many programmers
• Programmer might not trust automatic optimizer and prefers to hard-code best query plan
• Plain MapReduce lacks convenience of readily available, reusable data manipulation operators like selection, projection, join, sort
• Program semantics hidden in “opaque” Java code
– More difficult to optimize and maintain

Example Data Analysis Task
Find the top 10 most visited pages in each category

Visits

| User | Url | Time |
| --- | --- | --- |
| Amy | cnn.com | 8:00 |
| Amy | bbc.com | 10:00 |
| Amy | flickr.com | 10:05 |
| Fred | cnn.com | 12:00 |

Url Info

| Url | Category | PageRank |
| --- | --- | --- |
| cnn.com | News | 0.9 |
| bbc.com | News | 0.8 |
| flickr.com | Photos | 0.7 |
| espn.com | Sports | 0.9 |

Data Flow
• Load Visits
• Group by url
• Foreach url generate count
• Load Url Info
• Join on url
• Group by category
• Foreach category generate top10 urls
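The data flow for the example task can be traced by hand in plain Python. This is only a sketch of what each step computes on the sample rows, not how Pig executes it; the function name `top_urls_per_category` and the parameter `k` are made up for illustration.

```python
from collections import Counter, defaultdict

# Sample rows copied from the Visits and Url Info data of the example task.
visits = [
    ("Amy", "cnn.com", "8:00"),
    ("Amy", "bbc.com", "10:00"),
    ("Amy", "flickr.com", "10:05"),
    ("Fred", "cnn.com", "12:00"),
]
url_info = [
    ("cnn.com", "News", 0.9),
    ("bbc.com", "News", 0.8),
    ("flickr.com", "Photos", 0.7),
    ("espn.com", "Sports", 0.9),
]


def top_urls_per_category(visits, url_info, k=10):
    # Group by url, then Foreach url generate count.
    visit_counts = Counter(url for _user, url, _time in visits)

    # Join on url (equi-join, so URLs without any visit drop out).
    joined = [
        (url, category, visit_counts[url])
        for url, category, _rank in url_info
        if url in visit_counts
    ]

    # Group by category.
    by_category = defaultdict(list)
    for url, category, count in joined:
        by_category[category].append((url, count))

    # Foreach category generate the k most visited urls.
    return {
        category: sorted(rows, key=lambda row: row[1], reverse=True)[:k]
        for category, rows in by_category.items()
    }


result = top_urls_per_category(visits, url_info)
```

On the sample rows, espn.com has no visits, so the Sports category does not appear in `result`.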

In Pig Latin

    visits = load '/data/visits' as (user, url, time);
    gVisits = group visits by url;
    visitCounts = foreach gVisits generate url, count(visits);
    urlInfo = load '/data/urlInfo' as (url, category, pRank);
    visitCounts = join visitCounts by url, urlInfo by url;
    gCategories = group visitCounts by category;
    topUrls = foreach gCategories generate top(visitCounts,10);
    store topUrls into '/data/topUrls';

Pig Latin Notes
• No need to import data into database
– Pig Latin works directly with files
• Schemas are optional and can be assigned dynamically
– Load '/data/visits' as (user, url, time);
• Can call user-defined functions in every construct like Load, Store, Group, Filter, Foreach
– Foreach gCategories generate top(visitCounts,10);

Pig Latin Data Model
• Fully-nestable data model with:
– Atomic values, tuples, bags (lists), and maps
[Figure: example of a nested value, yahoo paired with finance, email, news]
• More natural to programmers than flat tuples
– Can flatten nested structures using FLATTEN
• Avoids expensive joins, but more complex to process

Pig Latin Operators: LOAD
• Reads data from file and optionally assigns schema to each record
• Can use custom deserializer

    queries = LOAD 'query_log.txt' USING myLoad()
              AS (userId, queryString, timestamp);

Pig Latin Operators: FOREACH
• Applies processing to each record of a data set
• No dependence between the processing of different records
– Allows efficient parallel implementation
• GENERATE creates output records for a given input record

    expanded_queries = FOREACH queries GENERATE userId, expandQuery(queryString);

Pig Latin Operators: FILTER
• Remove records that do not pass filter condition
• Can use user-defined function in filter condition

    real_queries = FILTER queries BY userId neq 'bot';
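The record-at-a-time behaviour of FILTER and FOREACH, and the effect of FLATTEN on a nested bag, can be mimicked in a few lines of Python. This is a sketch under assumptions: the query records are invented, `expand_query` is a hypothetical stand-in for the `expandQuery` UDF, and it is assumed to return a bag of expansions. For brevity the FOREACH is applied to the filtered records.

```python
# Records follow the (userId, queryString, timestamp) schema from the LOAD slide.
# The values are made up for illustration.
queries = [
    ("alice", "lakers", 1),
    ("bot", "spam spam", 2),
    ("bob", "kings", 3),
]


def expand_query(query_string):
    """Hypothetical UDF: return a bag (here a list) of query expansions."""
    return [query_string, query_string + " scores"]


# FILTER queries BY userId neq 'bot'
real_queries = [rec for rec in queries if rec[0] != "bot"]

# FOREACH real_queries GENERATE userId, expandQuery(queryString)
# Each output record depends only on its own input record, so the
# records could be processed in parallel in any order.
expanded_queries = [
    (user_id, expand_query(query)) for user_id, query, _ts in real_queries
]

# FLATTEN un-nests the bag: one flat record per (userId, expansion) pair.
flattened = [
    (user_id, expansion)
    for user_id, bag in expanded_queries
    for expansion in bag
]
```

Before flattening, each record carries a nested bag; afterwards every expansion sits in its own flat tuple.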

Pig Latin Operators: COGROUP
• Group together records from one or more data sets

results

| queryString | url | rank |
| --- | --- | --- |
| Lakers | nba.com | 1 |
| Lakers | espn.com | 2 |
| Kings | nhl.com | 1 |
| Kings | nba.com | 2 |

revenue

| queryString | adSlot | amount |
| --- | --- | --- |
| Lakers | top | 50 |
| Lakers | side | 20 |
| Kings | top | 30 |
| Kings | side | 10 |

COGROUP results BY queryString, revenue BY queryString

    (Lakers, {(Lakers, nba.com, 1), (Lakers, espn.com, 2)}, {(Lakers, top, 50), (Lakers, side, 20)})
    (Kings, {(Kings, nhl.com, 1), (Kings, nba.com, 2)}, {(Kings, top, 30), (Kings, side, 10)})

Pig Latin Operators: GROUP
• Special case of COGROUP, to group single data set by selected fields
• Similar to GROUP BY in SQL, but does not need to apply aggregate function to records in each group

    grouped_revenue = GROUP revenue BY queryString;

Pig Latin Operators: JOIN
• Computes equi-join

    join_result = JOIN results BY queryString, revenue BY queryString;

• Just a syntactic shorthand for COGROUP followed by flattening

    temp_var = COGROUP results BY queryString, revenue BY queryString;
    join_result = FOREACH temp_var GENERATE FLATTEN(results), FLATTEN(revenue);

Other Pig Latin Operators
• UNION: union of two or more bags
• CROSS: cross product of two or more bags
• ORDER: orders a bag by the specified field(s)
• DISTINCT: eliminates duplicate records in bag
• STORE: saves results to a file
• Nested bags within records can be processed by nesting operators within a FOREACH operator

MapReduce in Pig Latin

    map_result = FOREACH input GENERATE FLATTEN(map(*));
    key_groups = GROUP map_result BY $0;
    output = FOREACH key_groups GENERATE reduce(*);

• Map() is a UDF, where * indicates that the entire input record is passed to map()
• $0 refers to first field, i.e., the intermediate key here
• Reduce() is another UDF

Pig Latin workflow and example records
• Load Visits(user, url, time)
– (Amy, cnn.com, 8am)
– (Amy, http://www.snails.com, 9am)
– (Fred, www.snails.com/index.html, 11am)
• Load Pages(url, pagerank)
– (www.cnn.com, 0.9)
– (www.snails.com, 0.4)
• Transform to (user, Canonicalize(url), time)
– (Amy, www.cnn.com, 8am)
– (Amy, www.snails.com, 9am)
– (Fred, www.snails.com, 11am)
• Join url = url
– (Amy, www.cnn.com, 8am, 0.9)
– (Amy, www.snails.com, 9am, 0.4)
– (Fred, www.snails.com, 11am, 0.4)
• Group by user
– (Amy, { (Amy, www.cnn.com, 8am, 0.9), (Amy, www.snails.com, 9am, 0.4) })
– (Fred, { (Fred, www.snails.com, 11am, 0.4) })
• Transform to (user, Average(pagerank) as avgPR)
– (Amy, 0.65)
– (Fred, 0.4)
• Filter avgPR > 0.5
– (Amy, 0.65)
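The claim on the JOIN slide, that JOIN is shorthand for COGROUP followed by flattening, can be checked on the results and revenue sample records. The Python below is a sketch of the semantics only; the helper names `cogroup` and `join_via_cogroup` are made up for illustration and are not Pig APIs.

```python
from itertools import product

# Sample records from the COGROUP slide.
results = [
    ("Lakers", "nba.com", 1),
    ("Lakers", "espn.com", 2),
    ("Kings", "nhl.com", 1),
    ("Kings", "nba.com", 2),
]
revenue = [
    ("Lakers", "top", 50),
    ("Lakers", "side", 20),
    ("Kings", "top", 30),
    ("Kings", "side", 10),
]


def cogroup(left, right, key=lambda rec: rec[0]):
    """Return {key: (bag of left records, bag of right records)}."""
    groups = {}
    for rec in left:
        groups.setdefault(key(rec), ([], []))[0].append(rec)
    for rec in right:
        groups.setdefault(key(rec), ([], []))[1].append(rec)
    return groups


def join_via_cogroup(left, right):
    """Flatten both bags of every group: a cross product within each key."""
    return [
        left_rec + right_rec
        for left_bag, right_bag in cogroup(left, right).values()
        for left_rec, right_rec in product(left_bag, right_bag)
    ]


grouped = cogroup(results, revenue)
joined = join_via_cogroup(results, revenue)
```

A key that occurs in only one input gets an empty bag on the other side, so flattening produces no output for it, which is the equi-join behaviour.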

Implementation
[Figure labels: user; SQL; automatic rewrite + optimize; or; Pig; Hadoop Map-Reduce; cluster]

Pig System
[Figure labels: user; Pig Latin program; Parser; parsed program; Pig Compiler; cross-job optimizer; execution plan; MR Compiler; map-red. jobs; Map-Reduce cluster; output. Example plan nodes: join, f( ), filter, X, Y]

Compilation into Map-Reduce
• Every group or join operation forms a map-reduce boundary
• Other operations pipelined into map and reduce phases

Plan for the example task, split at the boundaries:
• Map 1: Load Visits
• Group by url
• Reduce 1: Foreach url generate count
• Map 2: Load Url Info
• Join on url
• Reduce 2
• Map 3
• Group by category
• Reduce 3: Foreach category generate top10(urls)

Is Pig a DBMS?

| | DBMS | Pig |
| --- | --- | --- |
| workload | Bulk and random reads & writes; indexes, transactions | Bulk reads & writes only |
| data representation | System controls data format; must pre-declare schema | Pigs eat anything |
| programming style | System of constraints | Sequence of steps |
| customizable processing | Custom functions second-class to logic expressions | Easy to incorporate custom functions |
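The boundary rule from the Compilation into Map-Reduce slide can be sketched as a small Python function that cuts an operator sequence into jobs. This is a deliberate simplification and not Pig's compiler: the plan is treated as a linear list (so the second input, Load Url Info, is left out), and the names `BLOCKING` and `split_into_jobs` are hypothetical.

```python
# Operators that force a shuffle, i.e. a map-reduce boundary.
BLOCKING = {"group", "cogroup", "join"}


def split_into_jobs(operators):
    """Split a linear operator list into map-reduce jobs.

    Each job is a dict with the operators pipelined into its map phase,
    the blocking operator that forms the boundary, and the operators
    pipelined into its reduce phase.
    """
    jobs = []
    current = {"map": [], "shuffle": None, "reduce": []}
    for op in operators:
        kind = op.split()[0].lower()
        if kind in BLOCKING:
            if current["shuffle"] is not None:
                # A second blocking operator starts a new job.
                jobs.append(current)
                current = {"map": [], "shuffle": None, "reduce": []}
            current["shuffle"] = op
        elif current["shuffle"] is None:
            current["map"].append(op)
        else:
            current["reduce"].append(op)
    if current["map"] or current["shuffle"] or current["reduce"]:
        jobs.append(current)
    return jobs


plan = [
    "Load Visits",
    "Group by url",
    "Foreach url generate count",
    "Join on url",
    "Group by category",
    "Foreach category generate top10(urls)",
]
jobs = split_into_jobs(plan)
```

With two groups and one join, the example plan yields three jobs, matching the three map/reduce pairs on the slide.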
