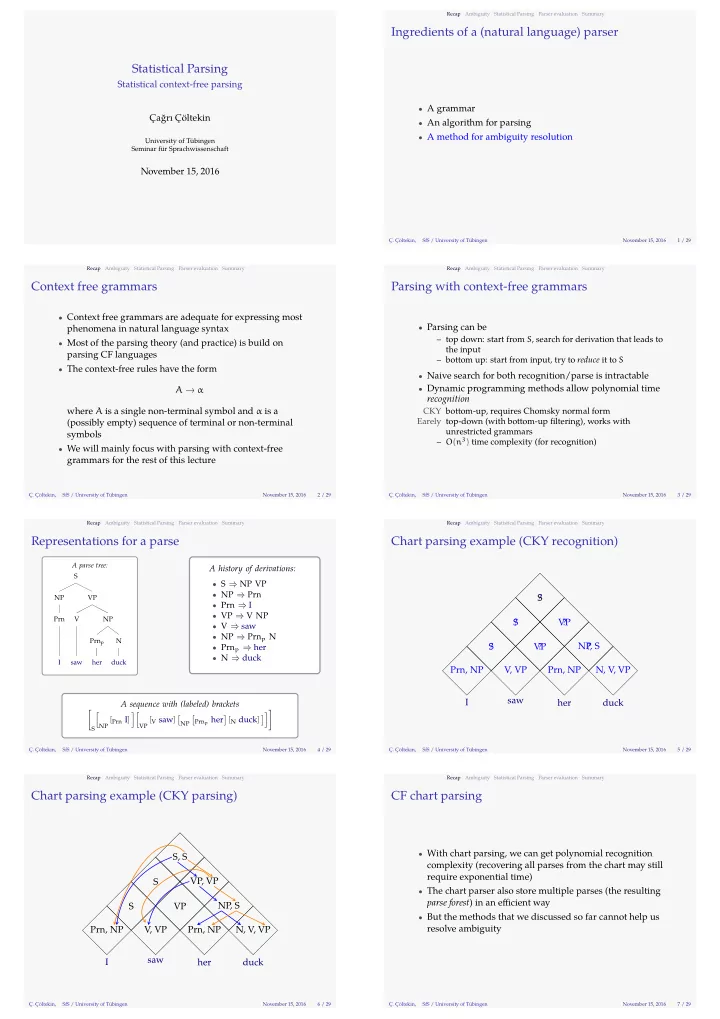

Statistical Parsing

Çağrı Çöltekin
University of Tübingen, Seminar für Sprachwissenschaft
November 15, 2016

Ingredients of a (natural language) parser

• A grammar
• An algorithm for parsing
• A method for ambiguity resolution

Recap: Context-free grammars

• Context-free grammars are adequate for expressing most phenomena in natural language syntax
• Most of the parsing theory (and practice) is built on parsing CF languages
• The context-free rules have the form

  A → α

  where A is a single non-terminal symbol and α is a (possibly empty) sequence of terminal or non-terminal symbols
• We will mainly focus on parsing with context-free grammars for the rest of this lecture

Parsing with context-free grammars

• Parsing can be
  – top down: start from S, search for a derivation that leads to the input
  – bottom up: start from the input, try to reduce it to S
• Naive search for both recognition and parsing is intractable
• Dynamic programming methods allow polynomial-time recognition
  – $O(n^3)$ time complexity (for recognition)

Representations for a parse

• A parse tree: [Figure: parse tree for "I saw her duck"]
• A history of derivations:
  – S ⇒ NP VP
  – NP ⇒ Prn
  – Prn ⇒ I
  – VP ⇒ V NP
  – V ⇒ saw
  – NP ⇒ Prn_p N
  – Prn_p ⇒ her
  – N ⇒ duck
• A sequence with (labeled) brackets:
  [S [NP [Prn I]] [VP [V saw] [NP [Prn_p her] [N duck]]]]

Chart parsing example (CKY recognition)

[Figure: CKY recognition chart for the input "I saw her duck"]

Chart parsing example (CKY parsing)

[Figure: CKY parsing chart for the input "I saw her duck"]

CF chart parsing

• CKY: bottom-up, requires Chomsky normal form
• Earley: top-down (with bottom-up filtering), works with unrestricted context-free grammars
• With chart parsing, we get polynomial recognition complexity (recovering all parses from the chart may still require exponential time)
• The chart parser also stores multiple parses (the resulting parse forest) in an efficient way
• But the methods that we discussed so far cannot help us resolve ambiguity
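The three representations listed under "Representations for a parse" encode the same structure. As a minimal sketch, assuming a tree is written as nested `(label, children)` tuples (an encoding chosen here for illustration, not taken from the lecture), both the labeled bracket string and the derivation history can be read off the tree. The helper names `brackets` and `derivation` are hypothetical; a pre-order traversal yields the rules in leftmost-derivation order, which is the order of the derivation history given for "I saw her duck".

```python
from typing import List, Tuple, Union

# Hypothetical encoding: a tree is either a word (str) or a (label, children) pair.
Tree = Union[str, Tuple[str, list]]

def brackets(tree: Tree) -> str:
    """Render a tree as a labeled bracket string, e.g. [NP [Prn I]]."""
    if isinstance(tree, str):
        return tree
    label, children = tree
    return "[" + label + " " + " ".join(brackets(c) for c in children) + "]"

def derivation(tree: Tree) -> List[str]:
    """List the rules used, in pre-order (i.e. leftmost-derivation) order."""
    if isinstance(tree, str):
        return []
    label, children = tree
    # Right-hand side: words stay as they are, subtrees contribute their label.
    rhs = " ".join(c if isinstance(c, str) else c[0] for c in children)
    steps = [f"{label} ⇒ {rhs}"]
    for child in children:
        steps.extend(derivation(child))
    return steps

tree = ("S", [("NP", [("Prn", ["I"])]),
              ("VP", [("V", ["saw"]),
                      ("NP", [("Prn_p", ["her"]), ("N", ["duck"])])])])

print(brackets(tree))
for step in derivation(tree):
    print(step)
```

Since each representation can be computed from the tree, choosing one over another is a matter of convenience for the task at hand.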

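The CKY chart parsing described above can be sketched in a few lines. This is a minimal recognizer under stated assumptions: the toy grammar below is hypothetical and written for this example only (it is not the grammar from the lecture), and unary chains such as NP → Prn → her are folded into each word's category set so that every remaining rule is binary, as Chomsky normal form requires. Besides recording which labels span each cell, every cell counts the distinct subtrees per label, which shows how a chart stores a whole parse forest compactly.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical toy grammar in Chomsky normal form (illustration only).
# Binary rules: (left child, right child) -> set of parent labels.
BINARY: Dict[Tuple[str, str], Set[str]] = {
    ("NP", "VP"): {"S"},
    ("V", "NP"): {"VP"},
    ("V", "S"): {"VP"},      # small-clause reading: saw [her duck]_S
    ("Prn_p", "N"): {"NP"},
}
# Lexical entries, with unary chains (e.g. NP -> Prn -> I) folded in.
LEXICON: Dict[str, Set[str]] = {
    "I": {"Prn", "NP"},
    "saw": {"V"},
    "her": {"Prn", "Prn_p", "NP"},
    "duck": {"N", "V", "VP"},
}

def cky(words: List[str]) -> Dict[Tuple[int, int], Dict[str, int]]:
    """Fill a CKY chart bottom-up. Cell (i, j) maps each label to the
    number of distinct subtrees with that label spanning words[i:j]."""
    n = len(words)
    chart = defaultdict(lambda: defaultdict(int))
    for i, w in enumerate(words):
        for label in LEXICON.get(w, set()):
            chart[(i, i + 1)][label] += 1
    for length in range(2, n + 1):          # span length
        for i in range(0, n - length + 1):  # span start
            j = i + length
            for k in range(i + 1, j):       # split point
                for left, lc in chart[(i, k)].items():
                    for right, rc in chart[(k, j)].items():
                        for parent in BINARY.get((left, right), set()):
                            # Each left/right subtree pair yields one new subtree.
                            chart[(i, j)][parent] += lc * rc
    return chart

words = "I saw her duck".split()
chart = cky(words)
top = chart[(0, len(words))]
print("recognized:", top["S"] > 0, "| number of S parses:", top["S"])
```

The three nested loops over span length, start, and split point give the cubic dependence on sentence length. With this toy grammar the input is recognized with two S analyses ("her duck" as an NP object, or as a small clause), and nothing in the chart says which one to prefer.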
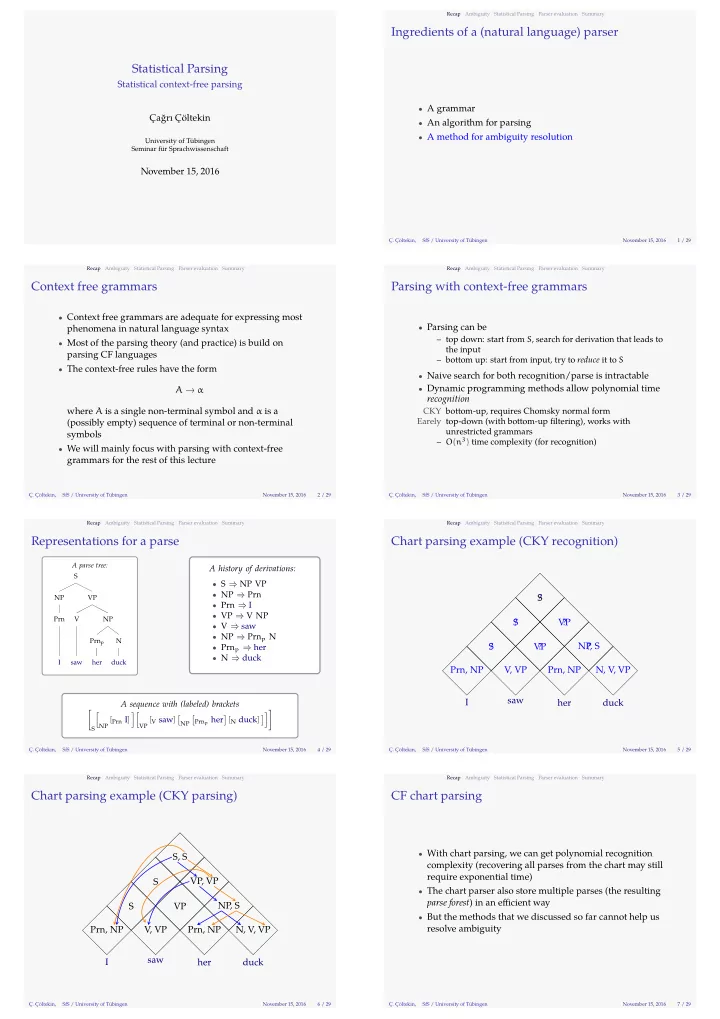

Ambiguity: Pretty little girl's school (again)

[Figure: cartoon from Cartoon Theories of Linguistics, SpecGram Vol CLIII, No 4, 2008. http://specgram.com/CLIII.4/school.gif]

Some more examples

• Lexical ambiguity
  – She is looking for a match
  – We saw her duck
• Attachment ambiguity
  – I saw the man with a telescope
  – Panda eats bamboo shoots and leaves
• Local ambiguity (garden path sentences)
  – The horse raced past the barn fell
  – The old man the boats
  – Fat people eat accumulates
• Anaphora resolution
  – Every farmer who owns a donkey beats it.

Even more examples (newspaper headlines)

• FARMER BILL DIES IN HOUSE
• TEACHER STRIKES IDLE KIDS
• SQUAD HELPS DOG BITE VICTIM
• BAN ON NUDE DANCING ON GOVERNOR'S DESK
• PROSTITUTES APPEAL TO POPE
• KIDS MAKE NUTRITIOUS SNACKS
• DRUNK GETS NINE MONTHS IN VIOLIN CASE
• MINERS REFUSE TO WORK AFTER DEATH

But humans do not recognize many ambiguities

• Time flies like an arrow; fruit flies like a banana
• Outside of a dog, a book is a man's best friend; inside it's too hard to read
• One morning I shot an elephant in my pajamas. How he got in my pajamas, I don't know.
• Don't eat the pizza with a knife and fork

The task: choosing the most plausible parse

[Figure: two parse trees for "We saw the man with a hat"; in one, the PP "with a hat" attaches to the VP, in the other it attaches to the NP "the man"]

Statistical parsing

• Find the most plausible parse of an input string, given all possible parses
• We need a scoring function that scores each parse, given the input
• We typically use probabilities for scoring; the task becomes finding the parse (or tree) $t$, given the input string $x$:

  $$t_{\text{best}} = \arg\max_t P(t \mid x)$$

• Note that some ambiguities need a larger context than the sentence to be resolved correctly

Probability refresher (1)

• Probability is a measure of (un)certainty of an event
• We quantify the probability of an event with a number between 0 and 1
  – 0: the event is impossible
  – 0.5: the event is as likely to happen (or to have happened) as not
  – 1: the event is certain
• The set of all possible outcomes of a trial (experiment or observation) is called the sample space ($\Omega$)
• The axioms of probability state that
  1. $P(E) \in \mathbb{R}$, $P(E) \ge 0$
  2. $P(\Omega) = 1$
  3. For disjoint events $E_1$ and $E_2$, $P(E_1 \cup E_2) = P(E_1) + P(E_2)$

Probability refresher (2): joint and conditional probabilities, chain rule

• The joint probability of two events is denoted $P(x, y)$
• The conditional probability is defined as

  $$P(x \mid y) = \frac{P(x, y)}{P(y)} \quad\text{or}\quad P(x, y) = P(x \mid y)\,P(y)$$

• If the events $x$ and $y$ are independent: $P(x \mid y) = P(x)$, $P(y \mid x) = P(y)$, $P(x, y) = P(x)\,P(y)$
• For more than two variables (chain rule):

  $$P(x, y, z) = P(z \mid x, y)\,P(y \mid x)\,P(x) = P(x \mid y, z)\,P(y \mid z)\,P(z) = \ldots$$

• If all are independent: $P(x, y, z) = P(x)\,P(y)\,P(z)$
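The selection rule $t_{\text{best}} = \arg\max_t P(t \mid x)$ follows directly from the definition of conditional probability. This sketch uses made-up joint probabilities for the two attachments of "We saw the man with a hat"; the tree labels and the numbers are assumptions for illustration, the two trees are assumed to be the only analyses of $x$, and `fractions.Fraction` is used only to keep the arithmetic exact. Because $P(x)$ is the same for every candidate tree of a fixed input, maximizing the joint $P(t, x)$ selects the same tree as maximizing $P(t \mid x)$.

```python
from fractions import Fraction

# Hypothetical joint probabilities P(t, x) for one input x and its two
# candidate trees t (made-up numbers, for illustration only).
x = "We saw the man with a hat"
joint = {
    ("PP attached to VP", x): Fraction(3, 100),
    ("PP attached to NP", x): Fraction(5, 100),
}

# Candidate trees for x, and the marginal P(x) = sum over t of P(t, x)
# (assuming these are all the trees that yield x).
candidates = [t for (t, s) in joint if s == x]
p_x = sum(joint[(t, x)] for t in candidates)

# Conditional probability P(t | x) = P(t, x) / P(x).
cond = {t: joint[(t, x)] / p_x for t in candidates}

# t_best = argmax_t P(t | x)
t_best = max(candidates, key=lambda t: cond[t])

# P(x) is constant across candidates, so argmax_t P(t, x) agrees.
t_best_joint = max(candidates, key=lambda t: joint[(t, x)])

print(t_best, cond[t_best])
```

Running it prints `PP attached to NP 5/8`. In practice a parser rarely computes $P(x)$ at all, since the argmax does not depend on it.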

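The chain rule holds for any joint distribution, which is easy to confirm numerically. A small sketch, assuming a hypothetical joint distribution over three binary variables (outcome weights 1 to 8, normalized by their sum 36, chosen arbitrarily), computes every conditional as a ratio of marginals, $P(a \mid b) = P(a, b) / P(b)$, and checks both factorizations of $P(x, y, z)$. The helpers `marginal`, `chain_rule`, and `chain_rule_alt` are names introduced for this illustration.

```python
from fractions import Fraction
from itertools import product

NAMES = ("x", "y", "z")
# Hypothetical joint distribution P(x, y, z) over binary variables:
# outcome number i (in product order) gets weight i + 1, normalized by 36.
outcomes = list(product([0, 1], repeat=3))
joint = {o: Fraction(i + 1, 36) for i, o in enumerate(outcomes)}

def marginal(**fixed):
    """Sum P(x, y, z) over all outcomes that agree with the fixed values."""
    return sum(p for o, p in joint.items()
               if all(o[NAMES.index(k)] == v for k, v in fixed.items()))

def chain_rule(x, y, z):
    """P(z | x, y) * P(y | x) * P(x), with P(a | b) = P(a, b) / P(b)."""
    p_z_given_xy = marginal(x=x, y=y, z=z) / marginal(x=x, y=y)
    p_y_given_x = marginal(x=x, y=y) / marginal(x=x)
    return p_z_given_xy * p_y_given_x * marginal(x=x)

def chain_rule_alt(x, y, z):
    """P(x | y, z) * P(y | z) * P(z): the other factorization."""
    p_x_given_yz = marginal(x=x, y=y, z=z) / marginal(y=y, z=z)
    p_y_given_z = marginal(y=y, z=z) / marginal(z=z)
    return p_x_given_yz * p_y_given_z * marginal(z=z)

# Both factorizations reproduce the joint exactly for every outcome.
assert all(chain_rule(*o) == joint[o] for o in outcomes)
assert all(chain_rule_alt(*o) == joint[o] for o in outcomes)

# These variables are not independent, so P(x)P(y)P(z) differs from the joint.
o = (0, 0, 0)
print(joint[o], marginal(x=0) * marginal(y=0) * marginal(z=0))
```

The independent factorization is a simplifying assumption that happens to fail for this distribution, whereas the chain rule itself makes no assumption at all.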