STAT 113 Analytic Inference for Regression, Colin Reimer Dawson

STAT 113
Analytic Inference for Regression
Colin Reimer Dawson
Oberlin College
21-24 April 2017


Outline
• Linear Models
• Inference for Regression Slope
• Confidence and Prediction Intervals for Regression

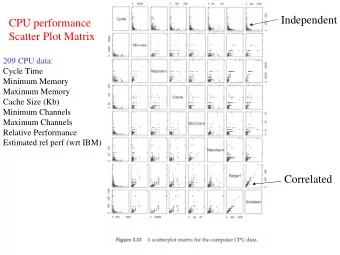

Prediction
• Correlation describes the strength and direction of the linear relationship between two numeric variables.
• When two variables are related, we can go further and use knowledge of one to make predictions about the other.
• Examples:
  • Use SAT scores to predict college GPA
  • Use economic indicators to predict stock prices
  • Use biomarkers to predict disease progression
  • Use bill total to predict percent tip

What’s a Good Prediction?
[Figure: scatterplot of Y against X]
• Pretty much the simplest model we can have is a straight line.
• Two things determine what line we have:
  • The intercept
  • The slope

Review: Slope-Intercept Form
• The intercept and slope are the parameters of our regression model.
• The general equation for a line is: $f(x) = a + bx$
• In statistics notation, we write $\hat{y}$ (“y hat”) to represent a predicted (or fitted) value.
• Given a value $x_i$, we predict using: $\hat{y}_i = a + b x_i$

The Simple Linear Model
Prediction Function
$\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x$
The Population Model
$Y = \beta_0 + \beta_1 X + \varepsilon$
where $\varepsilon$ is a residual, specific to each case.

Sample vs Population “Best-Fit” Line
• For a sample: choose the intercept and slope that minimize the sum of squared errors.
• But this does not yield the “correct” (or even “best”) model for the population, due to sampling error.
[Figure: Y against X; legend: Population, Sample 1, Sample 2, Sample 3, Sample 4]
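To see sampling error in action, here is a minimal Python sketch (standard library only). It assumes a hypothetical population that follows $Y = \beta_0 + \beta_1 X + \varepsilon$ with made-up values $\beta_0 = 10$, $\beta_1 = 0.6$ and $\varepsilon \sim N(0, 5)$, draws four samples of 30 cases, and fits each by least squares. The helpers `draw_sample` and `fit_line` are illustrative names, not part of any course software.

```python
import random

# Hypothetical population model with made-up values (illustration only):
# Y = BETA0 + BETA1 * X + eps, where eps ~ N(0, SIGMA).
BETA0, BETA1, SIGMA = 10.0, 0.6, 5.0


def draw_sample(rng, n):
    """Draw n (x, y) cases from the hypothetical population model."""
    xs = [rng.uniform(20, 70) for _ in range(n)]
    ys = [BETA0 + BETA1 * x + rng.gauss(0, SIGMA) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Intercept a and slope b that minimize the sum of squared errors."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    return y_bar - b * x_bar, b


rng = random.Random(113)  # fixed seed so reruns give the same samples
fits = [fit_line(*draw_sample(rng, 30)) for _ in range(4)]
for i, (a, b) in enumerate(fits, start=1):
    # Each sample gives its own "best-fit" line, hence its own predictions.
    print(f"Sample {i}: y-hat = {a:.2f} + {b:.3f} x; y-hat at x = 50: {a + b * 50:.2f}")
print(f"Population: y = {BETA0} + {BETA1} x")
```

Each sample's slope lands near 0.6 but not exactly on it, which is what the figure shows: a sample's best-fit line estimates the population line rather than reproducing it.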

The Sample Linear Model
Prediction Function
$\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x$
The Sample Model
$Y = \hat{\beta}_0 + \hat{\beta}_1 X + \hat{\varepsilon}$
where $\hat{\varepsilon}$ is the estimated residual for the case, used in fitting.
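As a small check on the sample model, the Python sketch below uses hypothetical toy data (not from the slides) to compute the estimated residuals $\hat{\varepsilon}_i = y_i - \hat{y}_i$ for the least-squares line. For a least-squares line with an intercept, the residuals sum to zero and are uncorrelated with $x$, and adding each residual back to its fitted value recovers the observed $y_i$.

```python
def fit_line(xs, ys):
    """Least-squares estimates (beta0_hat, beta1_hat)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def residuals(xs, ys, b0, b1):
    """Estimated residuals: observed y minus fitted y-hat = b0 + b1 * x."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]


# Hypothetical toy data, not from the slides.
xs = [4, 6, 7, 9, 10, 12, 13, 14]
ys = [3, 5, 4, 7, 8, 8, 10, 11]

b0, b1 = fit_line(xs, ys)
res = residuals(xs, ys, b0, b1)
print("residuals:", [round(e, 3) for e in res])
print("sum of residuals:", round(sum(res), 10))
print("sum of x * residual:", round(sum(x * e for x, e in zip(xs, res)), 10))
```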

Tests and Intervals for the Slope
• We can bootstrap sample the $(x, y)$ points to get a CI for the slope.
• We can randomly re-pair the $x$s and $y$s, refitting the regression line after each re-pairing, to get a randomization distribution.
• StatKey
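Here is a minimal Python sketch of both resampling ideas, using hypothetical toy data. `bootstrap_ci` resamples $(x, y)$ cases with replacement and keeps the middle 95% of the refitted slopes (a percentile interval, one of several bootstrap CI methods); `randomization_p_value` shuffles the $y$ values against the $x$ values to simulate $H_0: \beta_1 = 0$. The function names, seeds, and number of repetitions are illustrative choices, not StatKey's internals.

```python
import random


def slope(xs, ys):
    """Least-squares slope: Sxy / Sxx."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


def bootstrap_ci(xs, ys, reps=2000, level=0.95, seed=1):
    """Percentile bootstrap CI for the slope, resampling (x, y) cases."""
    rng = random.Random(seed)
    pairs = list(zip(xs, ys))
    slopes = []
    while len(slopes) < reps:
        sample = [rng.choice(pairs) for _ in pairs]
        bx = [x for x, _ in sample]
        if len(set(bx)) < 2:
            continue  # every x identical: slope undefined, so redraw
        slopes.append(slope(bx, [y for _, y in sample]))
    slopes.sort()
    tail = (1 - level) / 2
    return slopes[round(tail * reps)], slopes[round((1 - tail) * reps) - 1]


def randomization_p_value(xs, ys, reps=2000, seed=2):
    """Two-sided p-value for H0: beta1 = 0 by randomly re-pairing xs and ys."""
    rng = random.Random(seed)
    observed = abs(slope(xs, ys))
    shuffled = list(ys)
    extreme = 0
    for _ in range(reps):
        rng.shuffle(shuffled)
        if abs(slope(xs, shuffled)) >= observed:
            extreme += 1
    return extreme / reps


# Hypothetical toy data, not from the slides.
xs = [4, 6, 7, 9, 10, 12, 13, 14]
ys = [3, 5, 4, 7, 8, 8, 10, 11]
print("observed slope:", round(slope(xs, ys), 4))
print("95% bootstrap CI:", bootstrap_ci(xs, ys))
print("randomization p-value:", randomization_p_value(xs, ys))
```

With this strongly linear toy data, few or no shuffled slopes are as extreme as the observed one, so the printed p-value may be 0; that only means it is below roughly 1/reps.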

Analytic Tests and Intervals for the Slope
The bootstrap and randomization distributions are well-modeled using a $t$-distribution under certain conditions:
1. The linear model is appropriate
2. The residuals have constant variance across all $x$
3. The residuals are Normally distributed, or the sample size is large enough
Refining the Linear Model
$Y = \beta_0 + \beta_1 X + \varepsilon$
where the $\varepsilon$ are distributed as $N(0, \sigma_\varepsilon)$ for a $\sigma_\varepsilon$ that does not depend on $x$.
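One way to see the claim about the $t$-distribution is to simulate data that meets the conditions and compare the spread of the bootstrap slopes with the analytic standard error. This Python sketch assumes hypothetical values ($\beta_0 = 2$, $\beta_1 = 1.5$, $\sigma_\varepsilon = 2$, $n = 50$); the analytic SE uses the common form $\sqrt{SSE/(n-2)}/\sqrt{S_{xx}}$ with $S_{xx} = \sum_i (x_i - \bar{x})^2$.

```python
import math
import random
import statistics


def fit_line(xs, ys):
    """Least-squares (intercept, slope)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def analytic_se(xs, ys):
    """SE of the slope: sqrt(SSE / (n - 2)) / sqrt(Sxx)."""
    b0, b1 = fit_line(xs, ys)
    n = len(xs)
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    x_bar = sum(xs) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return math.sqrt(sse / (n - 2) / sxx)


def bootstrap_se(xs, ys, reps, rng):
    """Standard deviation of slopes refit on bootstrap resamples of the cases."""
    pairs = list(zip(xs, ys))
    slopes = []
    while len(slopes) < reps:
        sample = [rng.choice(pairs) for _ in pairs]
        bx = [x for x, _ in sample]
        if len(set(bx)) < 2:
            continue  # slope undefined if every x is the same; redraw
        slopes.append(fit_line(bx, [y for _, y in sample])[1])
    return statistics.stdev(slopes)


rng = random.Random(2017)  # fixed seed for reproducibility
# Hypothetical data built to meet the conditions: linear mean and
# eps ~ N(0, sigma_eps) with the same sigma_eps = 2 for every x.
xs = [rng.uniform(0, 10) for _ in range(50)]
ys = [2.0 + 1.5 * x + rng.gauss(0, 2.0) for x in xs]

se_formula = analytic_se(xs, ys)
se_boot = bootstrap_se(xs, ys, 1000, rng)
print(f"analytic SE = {se_formula:.4f}, bootstrap SE = {se_boot:.4f}")
```

When the conditions hold, the two SEs should be close; with curved, fan-shaped, or heavily skewed residuals they can drift apart.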

Ways the Conditions Can Be Violated
[Figure: from the textbook (Fig. 9.5)]

Analytic Tests and Intervals for the Slope
• The SE expression is not given in the book, but when the conditions are met, it is:
$$SE = \sqrt{\frac{s_\varepsilon^2 / s_x^2}{n - 2}}$$
where $s_\varepsilon^2$ is the variance of the residuals and $s_x^2$ is the variance of the $X$ variable.
• We will not need to use this by hand, but there is insight to be gained by examining it.
• Then, use a $t$-distribution with $n - 2$ df (why?) for either the CI or the test.
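The slide's SE formula can be checked numerically against the more common textbook form $s/\sqrt{S_{xx}}$ with $s^2 = SSE/(n-2)$. The two agree when $s_\varepsilon^2$ and $s_x^2$ are ordinary sample variances (denominator $n - 1$), because the $(n - 1)$ factors cancel. A Python sketch with hypothetical toy data:

```python
import math
import statistics


def fit_line(xs, ys):
    """Least-squares (intercept, slope)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def se_slide(xs, ys):
    """SE = sqrt((s_eps^2 / s_x^2) / (n - 2)) with sample variances."""
    b0, b1 = fit_line(xs, ys)
    resid = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
    s2_eps = statistics.variance(resid)  # variance of the residuals
    s2_x = statistics.variance(xs)       # variance of the X variable
    return math.sqrt(s2_eps / s2_x / (len(xs) - 2))


def se_usual(xs, ys):
    """Common form: s / sqrt(Sxx), where s^2 = SSE / (n - 2)."""
    b0, b1 = fit_line(xs, ys)
    n = len(xs)
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    x_bar = sum(xs) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return math.sqrt(sse / (n - 2)) / math.sqrt(sxx)


# Hypothetical toy data, not from the slides.
xs = [4, 6, 7, 9, 10, 12, 13, 14]
ys = [3, 5, 4, 7, 8, 8, 10, 11]
print("slide formula:", round(se_slide(xs, ys), 5))
print("usual formula:", round(se_usual(xs, ys), 5))
```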

Test of $H_0: \beta_1 = 0$: Restaurant Tips
Demo

Testing Correlation vs. Slope
• The correlation and the slope are different quantities, but their tests yield exactly the same results.
• This is not a coincidence. The slope is zero if and only if the correlation is zero, so the two null hypotheses are equivalent.
• In fact, $\text{Slope} = r \cdot \frac{s_y}{s_x}$
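A quick numerical check of both claims, in Python with hypothetical toy data: the least-squares slope equals $r \cdot s_y / s_x$, and the $t$-statistic for the slope, $\hat{\beta}_1 / SE$, equals the usual $t$-statistic for a correlation, $r\sqrt{n-2}/\sqrt{1-r^2}$, so with the same $n - 2$ df both tests give the same p-value.

```python
import math
import statistics

# Hypothetical toy data, not from the slides.
xs = [4, 6, 7, 9, 10, 12, 13, 14]
ys = [3, 5, 4, 7, 8, 8, 10, 11]
n = len(xs)

x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
sxx = sum((x - x_bar) ** 2 for x in xs)
syy = sum((y - y_bar) ** 2 for y in ys)

b1 = sxy / sxx                   # least-squares slope
b0 = y_bar - b1 * x_bar          # least-squares intercept
r = sxy / math.sqrt(sxx * syy)   # Pearson correlation

# Slope = r * s_y / s_x
slope_from_r = r * statistics.stdev(ys) / statistics.stdev(xs)

# t for the slope: estimate / SE, with SE = sqrt(SSE / (n - 2)) / sqrt(Sxx)
sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
t_slope = b1 / math.sqrt(sse / (n - 2) / sxx)
# t for the correlation
t_corr = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

print(f"slope = {b1:.4f}, r * s_y / s_x = {slope_from_r:.4f}")
print(f"t (slope) = {t_slope:.3f}, t (correlation) = {t_corr:.3f}")
```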

A 95% CI for the Slope
Demo
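For the restaurant tips data, the interval is $\hat{\beta}_1 \pm t^* \cdot SE$, using the estimate and standard error that R reports for `Bill` in the `summary(tip.model)` output of the restaurant tips example (0.182215 and 0.006451, with 155 df). Python's standard library has no $t$ quantile function, so this sketch takes $t^*$ as an input; $t^* \approx 1.975$ is the 97.5th percentile of a $t$-distribution with 155 df (for example `qt(0.975, 155)` in R). `slope_ci` is an illustrative helper name.

```python
def slope_ci(estimate, std_error, t_star):
    """Confidence interval for the slope: estimate +/- t* x SE."""
    margin = t_star * std_error
    return estimate - margin, estimate + margin


# Estimate and SE for Bill from summary(lm(Tip ~ Bill, data = RestaurantTips)).
b1, se_b1 = 0.182215, 0.006451
# Approximate t* for 95% confidence with n - 2 = 155 df.
t_star = 1.975

lo, hi = slope_ci(b1, se_b1, t_star)
print(f"95% CI for the slope: ({lo:.4f}, {hi:.4f})")
```

The interval works out to roughly 0.169 to 0.195: each extra dollar on the bill is associated with about 17 to 19.5 cents more in tip.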

Measuring the Predictive Power of the Model
• How can we measure how useful it is to know $x$ when trying to predict $y$, based on a slope of 0.049 (or whatever it is)? The slope's size depends on the units of $x$ and $y$, so on its own it doesn't answer this.
• Correlation measures the strength of association, which is close to what we want.
• We can use the coefficient of determination: “How much better does our prediction for $y$ get when we know $x$?”

The Coefficient of Determination ($R^2$)
The coefficient of determination, or $R^2$ value, associated with a linear model, is the proportion (often reported as a percent) by which prediction uncertainty shrinks when we use the predictor instead of just predicting the mean of $y$. That is, what fraction of the variation (variance) in $y$ is predictable via $x$?
$$R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}$$
For a simple linear regression (one predictor), this turns out to be just the square of the correlation: $R^2 = r^2$.
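A Python sketch with hypothetical toy data, computing $R^2$ as the proportional reduction in squared prediction error, $1 - SSE/SST$, where $SST$ is the squared error from always predicting $\bar{y}$, and checking that it matches $r^2$ for a one-predictor model.

```python
import math

# Hypothetical toy data, not from the slides.
xs = [4, 6, 7, 9, 10, 12, 13, 14]
ys = [3, 5, 4, 7, 8, 8, 10, 11]
n = len(xs)

x_bar = sum(xs) / n
y_bar = sum(ys) / n
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
sxx = sum((x - x_bar) ** 2 for x in xs)
syy = sum((y - y_bar) ** 2 for y in ys)

b1 = sxy / sxx
b0 = y_bar - b1 * x_bar

sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))  # error using x
sst = syy                                                     # error using only y-bar
r_squared = 1 - sse / sst
r = sxy / math.sqrt(sxx * syy)

print(f"R^2 = {r_squared:.4f}, r^2 = {r ** 2:.4f}")
```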

Example: Restaurant Tips
[Figure: scatterplot of Tip ($) against Total Bill ($); legend: null.tip.model, tip.model.using.bill]

Example: Restaurant Tips

```r
library(Lock5Data)  # textbook data package; provides RestaurantTips
data(RestaurantTips)
tip.model <- lm(Tip ~ Bill, data = RestaurantTips)
summary(tip.model)
```

```
Call:
lm(formula = Tip ~ Bill, data = RestaurantTips)

Residuals:
    Min      1Q  Median      3Q     Max
-2.3911 -0.4891 -0.1108  0.2839  5.9738

Coefficients:
             Estimate Std. Error t value Pr(>|t|)
(Intercept) -0.292267   0.166160  -1.759   0.0806 .
Bill         0.182215   0.006451  28.247   <2e-16 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.9795 on 155 degrees of freedom
Multiple R-squared:  0.8373,  Adjusted R-squared:  0.8363
F-statistic: 797.9 on 1 and 155 DF,  p-value: < 2.2e-16
```
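Several numbers in this output are linked by simple arithmetic. The Python sketch below reuses only values printed above: $t = \text{Estimate}/\text{Std. Error}$; with one predictor the residual df is $n - 2$, so $n = 157$; and the residual standard error is $\sqrt{SSE/(n-2)}$. Rescaling $SSE$ by $n - 1$ instead gives about 0.953, which matches the residual variance shown for the Bill model in the next figure, assuming that figure uses the usual $n - 1$ sample variance.

```python
# Values copied from the summary(tip.model) output.
estimate, std_error = 0.182215, 0.006451
residual_se, residual_df = 0.9795, 155

t_value = estimate / std_error         # t = Estimate / Std. Error
n = residual_df + 2                    # residual df = n - 2 for one predictor
sse = residual_se ** 2 * residual_df   # residual SE = sqrt(SSE / (n - 2))
resid_var = sse / (n - 1)              # sample variance of the residuals

print(f"t = {t_value:.2f}, n = {n}, residual variance = {resid_var:.3f}")
```

The small gap between this $t$ and the printed 28.247 comes from R computing $t$ before rounding the estimate and standard error for display.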

Example: Restaurant Tips
[Figure: histograms (Frequency) of residual tips. Residual Tip (Null Model): $\hat{\sigma}_\varepsilon^2 = 5.861$. Residual Tip (Bill Model): $\hat{\sigma}_\varepsilon^2 = 0.953$.]
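Because the null model predicts $\bar{y}$ for every case, its residual variance measures the total variation in tips, and one minus the ratio of the two residual variances gives $R^2$ directly. A short Python check using only the two variances shown:

```python
var_null = 5.861  # residual variance, null model (predict the mean tip)
var_bill = 0.953  # residual variance, model using Bill

# Proportional reduction in residual variance from using Bill as a predictor.
r_squared = 1 - var_bill / var_null
print(f"R^2 = {r_squared:.4f}")
```

The result, about 0.837, agrees with the Multiple R-squared of 0.8373 in the `summary(tip.model)` output up to rounding.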
