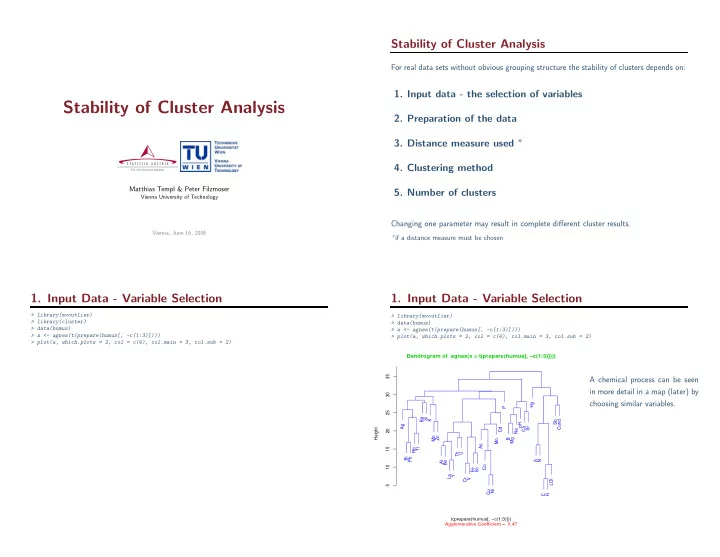

Stability of Cluster Analysis For real data sets without obvious grouping structure the stability of clusters depends on: 1. Input data - the selection of variables Stability of Cluster Analysis 2. Preparation of the data 3. Distance measure used ∗ S T A T I S T I K A U S T R I A 4. Clustering method D i e I n f o r m a t i o n s m a n a g e r Matthias Templ & Peter Filzmoser 5. Number of clusters Vienna University of Technology Changing one parameter may result in complete different cluster results. Vienna, June 16, 2006 ∗ if a distance measure must be chosen 1. Input Data - Variable Selection 1. Input Data - Variable Selection > library(mvoutlier) > library(mvoutlier) > library(cluster) > data(humus) > data(humus) > a <- agnes(t(prepare(humus[, -c(1:3)]))) > a <- agnes(t(prepare(humus[, -c(1:3)]))) > plot(a, which.plots = 2, col = c(4), col.main = 3, col.sub = 2) > plot(a, which.plots = 2, col = c(4), col.main = 3, col.sub = 2) Dendrogram of agnes(x = t(prepare(humus[, −c(1:3)]))) 35 A chemical process can be seen in more detail in a map (later) by 30 choosing similar variables. Hg P 25 Ba Si K Sb Cond pH Ag Ca Sr Cd Height 20 Na Mn Zn B Mg Mo As 15 Rb Tl Th U Bi Pb S N Al Be Co 10 Fe Sc La Y Cr V LOI 5 Cu Ni C H t(prepare(humus[, −c(1:3)])) Agglomerative Coefficient = 0.47

1. Input Data - Variable Selection 2. Data Preparation Most of real data in practice can have some or all of these properties: A selection of variables may be useful when clustering high-dimensional data because . . . • neither normal nor log-normal • clustering with all variables may hide underlying processes • strongly skewed • we want to see some processes in more detail • often multi-modal distributions • the inclusion of one irrelevant variable may hide the real clusters in the data • dependencies between observations • weak clustering structures One easy way (amongst others) for variable selection can be done by graphical inspection of a dendrogram which results from hierarchical clustering of variables. • data includes outliers • variables show a striking difference in the amount of variability 3. Distance Measure 2. Data Preparation Rand Index 0.2 0.4 0.6 0.8 1.0 Comparing clustered data and If a good clustering structure for a variable exists we expect a distribution with two or more clustered subsets of the data with modes. A transformation (e.g with a box-cox transformation) will preserve the modes pam.euclidean Rand Index. but remove large skewness. Distance measures which results pam.manhattan in high Rand Indices should be Standardisation of the variables is needed if the variables show a striking difference in Rand Index for 500 bootstrap samples pam.gower chosen. the amount of variability. pam.rf Outliers can influence the clustering (depends on which clustering algorithms is chosen) kmeans.euclidean Removing outliers before clustering may be useful. kmeans.manhattan kmeans.gower Finding outliers is not a trivial task, especially in high dimensions. (you can do this e.g. with Package mvoutlier from Filzmoser et al. (2005)) kmeans.rf

4. Clustering Method 5. Number of Clusters Data: Pollution Rand Index euclidean gower 0.2 0.4 0.6 0.8 1.0 HumusCoNiCuAsMo Comparing clustered data and manhattan none clustered subsets of the data with rf pam.euclidean 2 4 6 8 Rand Index. kccaKmedians Mclust speccPolydot Algorithms which results in high pam.manhattan Rand Indices may be chosen. Rand Index for 500 bootstrap samples pam.gower pam.rf wb.ratio bclust clara cmeans kmeans.euclidean 0.8 kmeans.manhattan 0.6 0.4 kmeans.gower 2 4 6 8 2 4 6 8 kmeans.rf number of clusters 5. Number of Clusters Example Data: Pollution euclidean gower HumusCoNiCuAsMo manhattan separation = 2.02 separation = 2.67 separation = 3.81 1 2 3 none obs = 27 obs = 35 obs = 126 rf 2 4 6 8 kccaKmedians Mclust speccPolydot 4 separation = 2.46 5 separation = 2.55 6 separation = 2.36 obs = 22 obs = 74 obs = 34 wb.ratio bclust clara cmeans 0.8 separation = 2.02 separation = 2.11 separation = 2.4 7 8 9 obs = 166 obs = 100 obs = 33 0.6 0.4 2 4 6 8 2 4 6 8 number of clusters − → − →

separation = 2.02 separation = 2.67 separation = 3.81 1 2 3 Mclust on scaled and transformed humus data separation = 2.02 separation = 2.67 separation = 3.81 1 2 3 obs = 27 obs = 35 obs = 126 obs = 27 obs = 35 obs = 126 Validity measure on each cluster cluster size Visualising all clusters each in an own map 4 separation = 2.46 5 separation = 2.55 6 separation = 2.36 Seaspray separation = 2.46 separation = 2.55 separation = 2.36 4 5 6 obs = 22 obs = 74 obs = 34 obs = 22 obs = 74 obs = 34 Cluster 5 (Greyscale depends on validity measure in each cluster) separation = 2.02 separation = 2.11 separation = 2.4 1 2 3 4 5 6 7 8 9 7 8 9 separation = 2.02 separation = 2.11 separation = 2.4 1 2 3 4 5 6 7 8 9 7 8 9 obs = 166 obs = 100 obs = 33 obs = 166 obs = 100 obs = 33 As Mo Cu As Mo Cu Ni Co Ni Cu Co Ni Cd Bi Cr Ni B V Cu P Th Sr Bi Cd Al Sc Co La Y Cr V Mg Be Fe B Pb Cr P Th Co Sr Na Bi U Al Sc Fe La Y Fe Al Fe V Al Cr Mg Be U P Pb Y As Ba Al V Na Bi U Ba Be N La Ag Pb Fe Fe Al Tl Y Be Mo Th pH U As P Th Zn Be N Y Ag Ba S U pH S Tl Hg Mn Sc Tl Ba Y Be La Pb pH As Sb Na Rb Sc pH B Th Tl Mo Th Zn Cd La Fe Rb Si S Sc Rb Ba Cr Ni Co Na S U Na Rb pH Tl Hg Mn Tl U Si pH H N Hg Mn Ca As Cd Sb Sc pH B Ag Hg Th LO Bi Sr V Cu S Rb La Ba Fe Ni Rb Si Be Mg Pb Ca C Ag Cd La Zn Co Bi Si pH Cr Co Na La Si Co Co Cd Ca Sc Zn Sr Ca K Sb C Co Ag Pb Rb Sb U Ag Hg H N Hg V Mn Ca S Zn H LO C H LO N K Mn LO H Co LO C H Cr Sb Si Mg Be Th LO Ag Bi La Sr Zn Co Cu Cr Al Bi Hg S Si Ca Mo Sb S Sr N Si Co Ca Zn Mg Pb Ca Sr C Cd Ag Bi Pb Rb Sb Cr V Y C Ag Cd Hg Sb P S Si K Mn U B Na Ag Mn K Na Zn K K N La Zn Co Cd H Sc K Ca K Mn Sb C C LO Co Cr Si Mo Ba Be Fe Mn Pb Tl Y pH Co B Co Pb Sc Tl Y B Cd Mg Hg Th Zn V C LO LO C LO N H Hg LO H Co N H Sb Mg Ag Al Sc As Rb V Mg Rb Sr V Y Mg P Th U N As pH Ba H Y Cr C Ag Al Bi P S Si Mn K U S Si Ag Ca K Na Mo Sb S Sr K Co Th As Tl Be Ba Rb Hg La Rb P V Al Cd P Sc Cr V Ba Cd Hg Mn Pb Sb Co B Na Y B Mg Mn Zn Th K N Fe Hg Mn Mo Pb As Cr La U Si Sr Al Pb Si Tl As Fe Al Mo Sc As Be Fe Mg Tl Y Co pH B Pb Sc Th Tl U Cd pH Hg V Zn LO C H Na Tl K Ni Be P Th pH Ba Be Bi Cr Ca La Mo Na U Ag Rb V Rb Sr V Y Ba Mg P N As Rb P V Ba Sc K Na C Bi Ba Bi Cu Al Fe Y U Hg Co Th U As Si Sr Tl Be Rb Hg La Al Cd P Sr B Mo Ag Mo Sc Th Be Cu Mg Ni Y Fe Mn Mo Na Pb Tl As Cr La Ni Al pH Bi Pb Si Tl As Ca Fe Mo LO H Sr Zn La Mo Sb B N Co Na C Bi K Cu Be P Th Ba Be Cr Fe La Na U Ca Cu K Ni Cu Ni Zn Co pH H K Ba Bi Al U Y Ni Y P Mg Co Cu Sc Co S C LO Sr B Mo Ag Mo Zn Mo Sc Th Be Cu Mg N Sb Al Fe Si Sr H LO K Sr Cu Ni La Sb B Co Co B Mn Ca P Cu Ni Cu Zn Co S pH H Cu Na Mg Co Sc Si Co Sr C LO N Cd Cd Co Cr Pb B Sb Al Fe B Ni S As Ba Ni Rb V Co Mn pH Mn Cu Na Cd Cr Pb Ca Tl N Cd Co Ni Mg B Ni S pH Ba As Rb V Pollution Zn Ag Mn Tl Ca Bi Ca Hg P Mg Ca Zn Ag Bi Hg P Sb Co Sb Co Highest pollution visualised by cluster 9 N S N S This can be seen in the graphic on the right H e.g. Co, Cu, Ni typical elements for reflecting pollution C LO H LO C Conclusions • Applying cluster analysis on real data results in highly non-stable results for many reasons • The selection of variables and the selection of the optimal number of clusters on real data is a non-trivial task. • Cluster analysis can be seen as explorative data analysis to get ideas about your data • Interactive tools which allow for various methods are very helpful

Recommend

More recommend