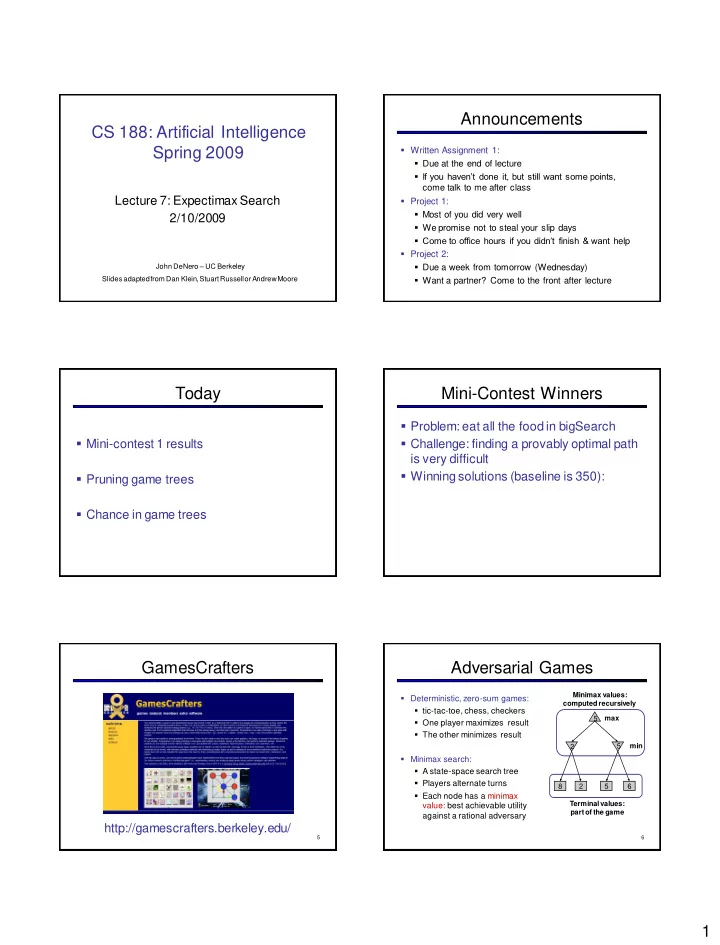

CS 188: Artificial Intelligence, Spring 2009
Lecture 7: Expectimax Search
2/10/2009
John DeNero, UC Berkeley
Slides adapted from Dan Klein, Stuart Russell or Andrew Moore

Announcements
- Written Assignment 1: due at the end of lecture
  - If you haven't done it but still want some points, come talk to me after class
- Project 1: most of you did very well
  - We promise not to steal your slip days
  - Come to office hours if you didn't finish and want help
- Project 2: due a week from tomorrow (Wednesday)
  - Want a partner? Come to the front after lecture

Today
- Mini-contest 1 results
- Pruning game trees
- Chance in game trees

Mini-Contest Winners
- Problem: eat all the food in bigSearch
- Challenge: finding a provably optimal path is very difficult
- Winning solutions (baseline is 350):
  - 5th: Greedy hill-climbing, Jeremy Cowles: 314
  - 4th: Local choices, Jon Hirschberg and Nam Do: 292
  - 3rd: Local choices, Richard Guo and Shendy Kurnia: 290
  - 2nd: Local choices, Tim Swift: 286
  - 1st: A* with inadmissible heuristic, Nikita Mikhaylin: 284

GamesCrafters
- http://gamescrafters.berkeley.edu/

Adversarial Games
- Deterministic, zero-sum games: tic-tac-toe, chess, checkers
  - One player maximizes the result
  - The other minimizes the result
- Minimax search:
  - A state-space search tree
  - Players alternate turns
  - Each node has a minimax value: the best achievable utility against a rational adversary
[Figure: minimax values are computed recursively. A MAX root (value 5) sits over two MIN nodes (values 2 and 5); the terminal values 8, 2, 5, 6 are part of the game.]
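As a minimal sketch (not the course's project code), minimax values can be computed recursively over a tree written as nested Python lists. The list encoding and the name `minimax` are assumptions made for illustration: leaves are terminal utilities, and levels alternate MAX and MIN starting with MAX at the root. The grouping of the figure's leaves into (8, 2) and (5, 6) is read off the MIN values 2 and 5.

```python
def minimax(node, maximizing=True):
    """Return the minimax value of `node`, computed recursively.

    Hypothetical encoding: a leaf is a number (its terminal utility) and an
    internal node is a list of children. Levels alternate MAX and MIN,
    starting with MAX at the root.
    """
    if not isinstance(node, list):   # terminal state: utility is part of the game
        return node
    child_values = [minimax(child, not maximizing) for child in node]
    return max(child_values) if maximizing else min(child_values)

# MAX root over two MIN nodes, with the figure's terminal values 8, 2, 5, 6.
tree = [[8, 2], [5, 6]]
print(minimax(tree))  # → 5
```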

Computing Minimax Values
- Two recursive functions:
  - max-value maxes the values of successors
  - min-value mins the values of successors

    def value(state):
        If the state is a terminal state: return the state's utility
        If the next agent is MAX: return max-value(state)
        If the next agent is MIN: return min-value(state)

    def max-value(state):
        Initialize max = -∞
        For each successor of state:
            Compute value(successor)
            Update max accordingly
        Return max

- min-value is symmetric: initialize min = +∞ and keep the smallest successor value

Pruning in Minimax Search
[Figure: minimax tree with leaves 3, 12, 8 | 2 | 14, 5, 2, each node annotated with the interval its value is known to lie in. The root's interval narrows from [-∞,+∞] to [3,+∞], [3,14], [3,5], and finally [3,3]; the three MIN nodes end at [3,3], [-∞,2], and [2,2] (the last via [-∞,14] and [-∞,5]).]

Alpha-Beta Pruning
- General configuration:
  - α is the best value that MAX can get at any choice point along the current path
  - If n becomes worse than α, MAX will avoid it, so MAX can stop considering n's other children
  - Define β similarly for MIN
[Figure: alternating MAX and MIN levels along a path, with α at a MAX choice point above a MIN node n.]

Alpha-Beta Pseudocode
[Pseudocode shown as an image; not recoverable from this copy.]

Alpha-Beta Pruning Example
[Figure: alpha-beta search on a tree with leaf values 3, 12, 8, 2, 14, 5, 1. Nodes are annotated with their (α, β) windows, starting at α = -∞, β = +∞, moving to α = 3 after the first branch, then narrowing to β = 14 and β = 5; pruned nodes show the bounds ≥8, ≤2, and ≤1; the root's value is 3.]
- α is MAX's best alternative in the branch
- β is MIN's best alternative in the branch

Alpha-Beta Pruning Properties
- This pruning has no effect on the final result at the root
- Values of intermediate nodes might be wrong!
- Good move ordering improves the effectiveness of pruning
- With "perfect ordering":
  - Time complexity drops from $O(b^m)$ to $O(b^{m/2})$
  - This doubles the solvable depth: in the time plain minimax needs to reach depth m/2, alpha-beta can reach depth m
  - Full search of, e.g., chess is still hopeless!
- This is a simple example of metareasoning, and the only one you need to know in detail
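The pruning idea can be sketched in Python as below. This is a minimal illustration under assumptions, not the slide's missing pseudocode: trees are nested lists (a leaf is a number, levels alternate MAX/MIN from a MAX root), `alphabeta` and `minimax` are hypothetical names, and children are searched left to right. The tree reuses the leaves 3, 12, 8 | 2 | 14, 5, 2 from the pruning figure; the second MIN node's other two leaves (4 and 6) are invented placeholders, since the search never examines them.

```python
import math

def minimax(node, maximizing=True):
    """Plain minimax value, for comparison."""
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def alphabeta(node, alpha=-math.inf, beta=math.inf, maximizing=True, visited=None):
    """Minimax value of `node` computed with alpha-beta pruning.

    alpha: best value MAX is already guaranteed along the current path.
    beta:  best value MIN is already guaranteed along the current path.
    visited: optional list that records every leaf actually evaluated.
    """
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, visited))
            if value >= beta:   # MIN above already has an option <= beta: stop
                return value
            alpha = max(alpha, value)
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, visited))
        if value <= alpha:      # MAX above already has an option >= alpha: stop
            return value
        beta = min(beta, value)
    return value

tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]  # 4 and 6 are placeholder leaves
seen = []
print(alphabeta(tree, visited=seen), minimax(tree))  # → 3 3
print(seen)                                          # → [3, 12, 8, 2, 14, 5, 2]
```

Because the second MIN node is cut off after its first leaf, only 7 of the 9 leaves are evaluated, yet the root value matches plain minimax, consistent with the property that pruning has no effect on the result at the root.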

Expectimax Search Trees
- What if we don't know what the result of an action will be? E.g.:
  - In solitaire, the next card is unknown
  - In Monopoly, the dice are random
  - In Pacman, the ghosts act randomly
- We can do expectimax search:
  - Chance nodes are like min nodes, except the outcome is uncertain
  - Calculate expected utilities
  - Max nodes as in minimax search
  - Chance nodes take the average (expectation) of the values of their children
- Later, we'll learn how to formalize the underlying problem as a Markov Decision Process
[Figure: expectimax tree with a max root above chance nodes; leaf values 10, 4, 5, 7.]

Maximum Expected Utility
- Why should we average utilities? Why not minimax?
- Principle of maximum expected utility: an agent should choose the action which maximizes its expected utility, given its knowledge
  - General principle for decision making
  - Often taken as the definition of rationality
  - We'll see this idea over and over in this course!
- Let's decompress this definition…

Reminder: Probabilities
- A random variable represents an event whose outcome is unknown
- A probability distribution is an assignment of weights to outcomes
- Example: traffic on the freeway?
  - Random variable: T = how much traffic there is
  - Outcomes: T in {none, light, heavy}
  - Distribution: P(T=none) = 0.25, P(T=light) = 0.5, P(T=heavy) = 0.25
- Common abbreviation: P(light) = 0.5
- Some laws of probability (more later):
  - Probabilities are always non-negative
  - Probabilities over all possible outcomes sum to one
- As we get more evidence, probabilities may change:
  - P(T=heavy) = 0.25, P(T=heavy | Hour=8am) = 0.60
  - We'll talk about methods for reasoning about and updating probabilities later

What are Probabilities?
- Objectivist / frequentist answer:
  - Averages over repeated experiments
  - E.g. empirically estimating P(rain) from historical observation
  - Assertion about how future experiments will go (in the limit)
  - New evidence changes the reference class
  - Makes one think of inherently random events, like rolling dice
- Subjectivist / Bayesian answer:
  - Degrees of belief about unobserved variables
  - E.g. an agent's belief that it's raining, given the temperature
  - E.g. Pacman's belief that the ghost will turn left, given the state
  - Often learn probabilities from past experiences (more later)
  - New evidence updates beliefs (more later)

Uncertainty Everywhere
- Not just for games of chance!
  - I'm sick: will I sneeze this minute?
  - Email contains "FREE!": is it spam?
  - Tooth hurts: have cavity?
  - 60 min enough to get to the airport?
  - Robot rotated wheel three times: how far did it advance?
  - Safe to cross street? (Look both ways!)
- Sources of uncertainty in random variables:
  - Inherently random process (dice, etc.)
  - Insufficient or weak evidence
  - Ignorance of underlying processes
  - Unmodeled variables
  - The world's just noisy: it doesn't behave according to plan!
- Compare to fuzzy logic, which has degrees of truth, rather than just degrees of belief

Reminder: Expectations
- We can define a function f(X) of a random variable X
- The expected value of a function is its average value, weighted by the probability distribution over inputs
- Example: How long to get to the airport?
  - Length of driving time as a function of traffic: L(none) = 20, L(light) = 30, L(heavy) = 60
  - What is my expected driving time? Notation: E[L(T)]
  - Remember, P(T) = {none: 0.25, light: 0.5, heavy: 0.25}
  - E[L(T)] = L(none) * P(none) + L(light) * P(light) + L(heavy) * P(heavy)
  - E[L(T)] = (20 * 0.25) + (30 * 0.5) + (60 * 0.25) = 35
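The airport calculation can be reproduced directly. In this small sketch, `expectation` is a hypothetical helper that takes f as a Python callable and P(T) as a dict; it checks the two laws of probability (non-negativity, summing to one) before taking the weighted average.

```python
def expectation(f, dist):
    """Return E[f(X)] = sum over outcomes x of f(x) * P(x).

    f: any callable mapping an outcome to a number (here, a dict's .get).
    dist: dict mapping each outcome to its probability.
    """
    if any(p < 0 for p in dist.values()):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(dist.values()) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to one")
    return sum(f(x) * p for x, p in dist.items())

# Driving time L(T) and traffic distribution P(T) from the airport example.
driving_time = {"none": 20, "light": 30, "heavy": 60}
p_traffic = {"none": 0.25, "light": 0.5, "heavy": 0.25}

print(expectation(driving_time.get, p_traffic))  # → 35.0
```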

Expectimax Search
- In expectimax search, we have a probabilistic model of how the opponent (or environment) will behave in any state
  - The model could be a simple uniform distribution (roll a die)
  - The model could be sophisticated and require a great deal of computation
  - We have a node for every outcome out of our control: opponent or environment
  - The model might say that adversarial actions are likely!
- For now, assume that for any state we have a distribution to assign probabilities to opponent actions / environment outcomes
- Having a probabilistic belief about an agent's action does not mean that agent is flipping any coins!
[Figure: expectimax tree with leaf values 8, 4, 5, 6.]

Expectimax Pseudocode

    def value(s):
        if s is a max node: return maxValue(s)
        if s is an exp node: return expValue(s)
        if s is a terminal node: return evaluation(s)

    def maxValue(s):
        values = [value(s') for s' in successors(s)]
        return max(values)

    def expValue(s):
        values = [value(s') for s' in successors(s)]
        weights = [probability(s, s') for s' in successors(s)]
        return expectation(values, weights)

Expectimax for Pacman
- Notice that we've gotten away from thinking that the ghosts are trying to minimize Pacman's score
- Instead, they are now a part of the environment
- Pacman has a belief (distribution) over how they will act
- Questions:
  - Is minimax a special case of expectimax?
  - What happens if we think ghosts move randomly, but they really do try to minimize Pacman's score?

Expectimax for Pacman: results from playing 5 games

|                   | Minimizing Ghost         | Random Ghost             |
|-------------------|--------------------------|--------------------------|
| Minimax Pacman    | Won 5/5, Avg. Score: 493 | Won 5/5, Avg. Score: 483 |
| Expectimax Pacman | Won 1/5, Avg. Score: -303 | Won 5/5, Avg. Score: 503 |

- Pacman used depth 4 search with an eval function that avoids trouble
- Ghost used depth 2 search with an eval function that seeks Pacman
[Demo]

Expectimax Pruning?
[Figure not recoverable from this copy.]
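A runnable Python version of the expectimax pseudocode is sketched below. It is an illustration under assumptions: the `State` dataclass, its `kind`/`score`/`children`/`probs` fields, and the `leaf` helper are hypothetical, and `max_value`/`exp_value` stand in for the pseudocode's maxValue/expValue. Since the figure's exact tree shape is not recoverable, the example assumes a max root over two chance nodes, each with a uniform (50/50) distribution over two of the leaves 8, 4, 5, 6.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class State:
    """Hypothetical game-tree node (not from the original slides)."""
    kind: str                                            # "max", "exp", or "terminal"
    score: float = 0.0                                   # evaluation of a terminal node
    children: List["State"] = field(default_factory=list)
    probs: List[float] = field(default_factory=list)     # P(child) for "exp" nodes

def value(s: State) -> float:
    if s.kind == "terminal":
        return s.score              # plays the role of evaluation(s)
    if s.kind == "max":
        return max_value(s)
    if s.kind == "exp":
        return exp_value(s)
    raise ValueError(f"unknown node kind: {s.kind!r}")

def max_value(s: State) -> float:
    values = [value(child) for child in s.children]
    return max(values)

def exp_value(s: State) -> float:
    values = [value(child) for child in s.children]
    weights = s.probs               # probability(s, s') for each successor s'
    return expectation(values, weights)

def expectation(values: List[float], weights: List[float]) -> float:
    """Probability-weighted average of the children's values."""
    return sum(v * w for v, w in zip(values, weights))

def leaf(x: float) -> State:
    return State("terminal", score=x)

# Assumed shape: max root over two 50/50 chance nodes.
root = State("max", children=[
    State("exp", children=[leaf(8), leaf(4)], probs=[0.5, 0.5]),
    State("exp", children=[leaf(5), leaf(6)], probs=[0.5, 0.5]),
])
print(value(root))  # → 6.0
```

Here the chance nodes are worth 6.0 and 5.5, so the max root takes the left branch. Under minimax the same leaves would give MIN values 4 and 5 and the right branch would be preferred, which shows how the opponent model changes the decision.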
Expectimax Evaluation
- For minimax search, evaluation function scale doesn't matter
  - We just want better states to have higher evaluations (get the ordering right)
  - We call this property insensitivity to monotonic transformations
- For expectimax, we need the magnitudes to be meaningful as well
  - E.g. we must know whether a 50% / 50% lottery between A and B is better than a 100% chance of C
  - A 50/50 lottery between 100 and -10, versus a sure 0, is a different decision from a 50/50 lottery between 10 and -100, versus a sure 0: the outcomes are ordered the same way, but the first lottery has expected value 45 and the second -45
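To see why magnitudes matter, the sketch below compares a worst-case (minimax-style) choice with an expected-value (expectimax-style) choice before and after an order-preserving transformation of the utilities. The option names, the `squash` function, and the two choice helpers are hypothetical; `squash` maps 100 to 10, -10 to -100, and 0 to 0, so it turns the first lottery above into the second without changing the ordering of any outcomes.

```python
def squash(x):
    """Hypothetical monotonic (order-preserving) transformation of utilities:
    positive values shrink by 10x, negative values stretch by 10x."""
    return x / 10 if x >= 0 else x * 10

# Each option is a list of (probability, utility) outcomes.
options = {
    "lottery": [(0.5, 100), (0.5, -10)],
    "sure thing": [(1.0, 0)],
}

def minimax_choice(opts, f=lambda u: u):
    """Pick the option whose worst transformed outcome is best
    (treats outcomes as if an adversary chose them)."""
    return max(opts, key=lambda name: min(f(u) for _, u in opts[name]))

def expectimax_choice(opts, f=lambda u: u):
    """Pick the option with the highest expected transformed utility."""
    return max(opts, key=lambda name: sum(p * f(u) for p, u in opts[name]))

print(minimax_choice(options), "|", minimax_choice(options, squash))
# → sure thing | sure thing
print(expectimax_choice(options), "|", expectimax_choice(options, squash))
# → lottery | sure thing
```

Both calls to `minimax_choice` pick the sure thing, whereas `expectimax_choice` switches from the lottery (expected value 45) to the sure thing once the transformed lottery is worth -45.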
