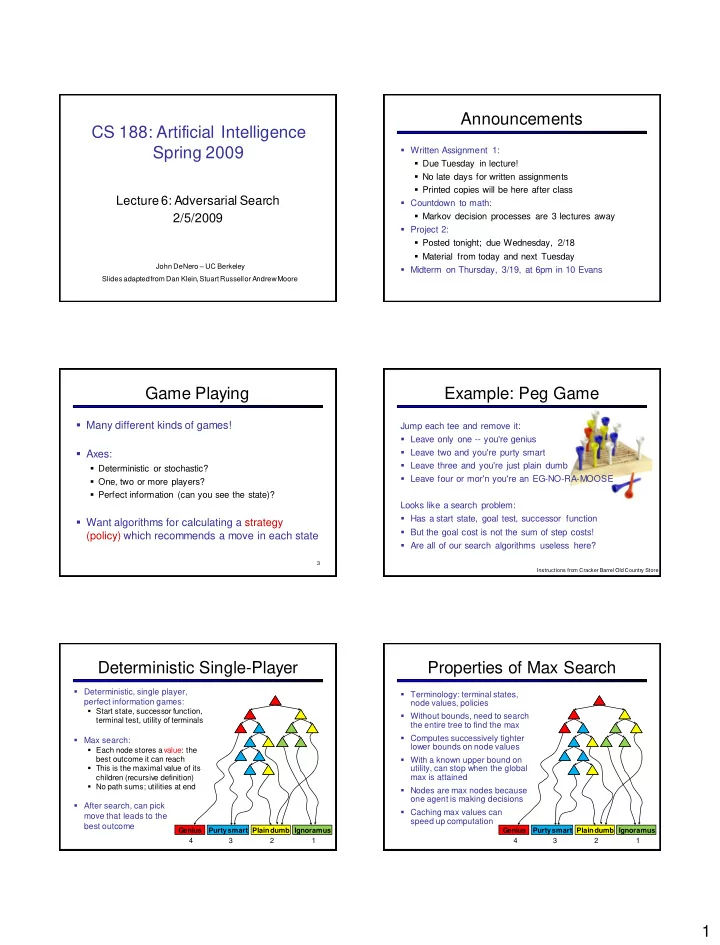

CS 188: Artificial Intelligence, Spring 2009
Lecture 6: Adversarial Search
2/5/2009
John DeNero – UC Berkeley
Slides adapted from Dan Klein, Stuart Russell or Andrew Moore

Announcements
- Written Assignment 1: Due Tuesday in lecture!
  - No late days for written assignments
  - Printed copies will be here after class
- Countdown to math: Markov decision processes are 3 lectures away
- Project 2: Posted tonight; due Wednesday, 2/18
  - Material from today and next Tuesday
- Midterm on Thursday, 3/19, at 6pm in 10 Evans

Game Playing
- Many different kinds of games!
- Axes:
  - Deterministic or stochastic?
  - One, two or more players?
  - Perfect information (can you see the state)?
- Want algorithms for calculating a strategy (policy) which recommends a move in each state

Example: Peg Game
- Jump each tee and remove it:
  - Leave only one -- you're genius
  - Leave two and you're purty smart
  - Leave three and you're just plain dumb
  - Leave four or mor'n you're an EG-NO-RA-MOOSE
- Looks like a search problem:
  - Has a start state, goal test, successor function
- But the goal cost is not the sum of step costs!
- Are all of our search algorithms useless here?
- Source: instructions from Cracker Barrel Old Country Store

Deterministic Single-Player
- Deterministic, single player, perfect information games:
  - Start state, successor function, terminal test, utility of terminals
- Max search:
  - Each node stores a value: the best outcome it can reach
  - This is the maximal value of its children (recursive definition)
  - No path sums; utilities at end
- After search, can pick the move that leads to the best outcome
- [Figure: max tree with terminal utilities Genius = 4, Purty smart = 3, Plain dumb = 2, Ignoramus = 1]

Properties of Max Search
- Terminology: terminal states, node values, policies
- Without bounds, need to search the entire tree to find the max
- Computes successively tighter lower bounds on node values
- With a known upper bound on utility, can stop when the global max is attained
- Nodes are max nodes because one agent is making decisions
- Caching max values can speed up computation
- [Figure: max tree with terminal utilities Genius = 4, Purty smart = 3, Plain dumb = 2, Ignoramus = 1]
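
The recursive definition of max search can be sketched in a few lines of Python. This is a minimal illustration, not the course's implementation: the integer states, the `SUCCESSORS` and `UTILITY` tables, and the function names are all hypothetical, and the toy graph stands in for the much larger peg board. Caching is done with `functools.lru_cache`, since state 4 is reachable along two paths.

```python
from functools import lru_cache

# Hypothetical toy game (NOT the real peg board): a state is an integer,
# SUCCESSORS lists the states reachable in one move, and UTILITY scores
# terminal states on the slide's scale (4 = genius ... 1 = ignoramus).
SUCCESSORS = {0: (1, 2), 1: (3, 4), 2: (4, 5), 3: (), 4: (), 5: ()}
UTILITY = {3: 2, 4: 3, 5: 4}


@lru_cache(maxsize=None)  # cache max values so shared states are solved once
def max_value(state):
    """Best outcome reachable from `state`: the max over its children."""
    children = SUCCESSORS[state]
    if not children:  # terminal test: no moves left
        return UTILITY[state]
    return max(max_value(child) for child in children)


def best_move(state):
    """Pick the successor whose max value is largest."""
    return max(SUCCESSORS[state], key=max_value)


print(max_value(0))  # value of the start state
print(best_move(0))  # successor to move to from the start state
print(best_move(1))  # best recovery after the weaker first move
```

Because every node stores its own value, the same table answers "what now?" from any state, which is what lets the agent recover optimally after a bad move.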

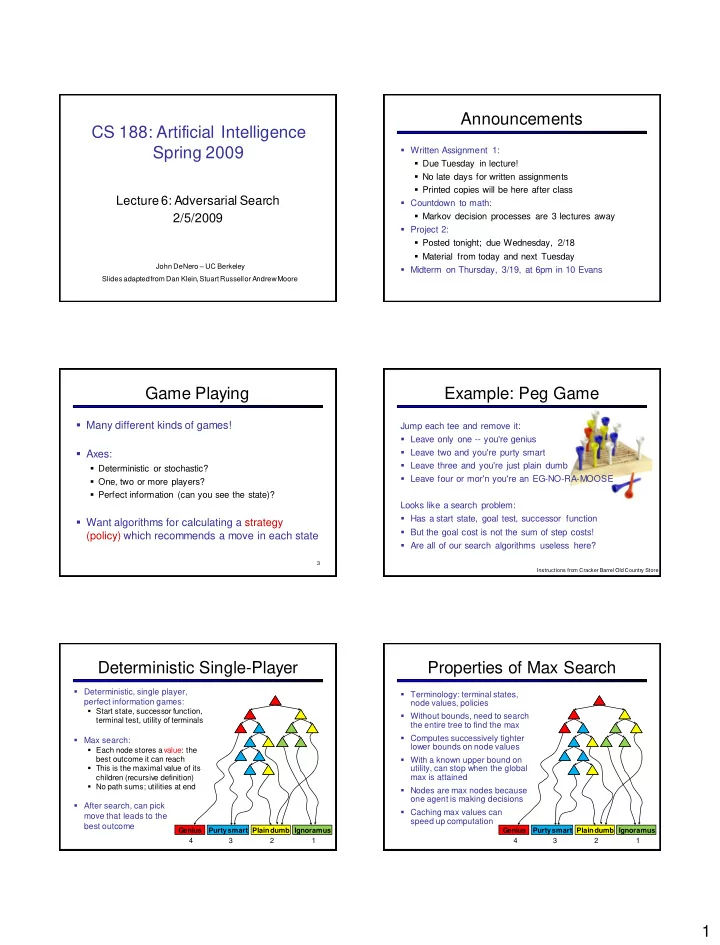

Uses of a Max Tree
- Can select a sequence of moves that maximizes utility
- Can recover optimally from bad moves
- Can compute values for certain scenarios easily
- [Figure: max tree with terminal utilities Genius = 4, Purty smart = 3, Plain dumb = 2, Ignoramus = 1]

Adversarial Search
- [DEMO: mystery pacman]

Deterministic Two-Player
- Deterministic, zero-sum games: tic-tac-toe, chess, checkers
  - One player maximizes result
  - The other minimizes result
- Minimax search:
  - A state-space search tree
  - Players alternate
  - Each layer, or ply, consists of a round of moves
  - Choose move to position with highest minimax value: best achievable utility against a rational adversary
- [Figure: two-ply tree, max node over min nodes, leaves 8, 2, 5, 6]

Tic-tac-toe Game Tree
- [Figure]

Minimax Example
- [Figure]

Minimax Search
- [Figure]
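
The minimax rule above can be written as a short recursion. The sketch below is an illustration under stated assumptions: trees are nested Python lists with numeric leaves, the name `minimax` is hypothetical, and the grouping of the leaves 8, 2, 5, 6 into two min nodes is assumed, since the original figure is not recoverable.

```python
def minimax(node, maximizing=True):
    """Minimax value of a game tree given as nested lists with numeric leaves.

    Layers alternate between the maximizing and the minimizing player.
    """
    if isinstance(node, (int, float)):  # terminal state: return its utility
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


# Assumed shape: a max root over two min nodes with leaves (8, 2) and (5, 6).
tree = [[8, 2], [5, 6]]
print(minimax(tree))  # min(8, 2) = 2, min(5, 6) = 5, so the root value is 5
```

Note that the max player prefers the right branch even though the largest leaf (8) is on the left, because a rational adversary would never let play reach it.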

Minimax Properties
- Optimal against a perfect player. Otherwise?
- Time complexity? O(b^m)
- Space complexity? O(bm)
- For chess, b ≈ 35, m ≈ 100
  - Exact solution is completely infeasible
  - Lots of approximations and pruning
- [DEMO: minVsExp]
- [Figure: max root over min nodes, leaves 10, 10, 9, 100]

Resource Limits
- Cannot search to leaves
- Depth-limited search
  - Instead, search a limited depth of tree
  - Replace terminal utilities with an eval function for non-terminal positions
- Guarantee of optimal play is gone
- More plies make a BIG difference [DEMO: limitedDepth]
- Example:
  - Suppose we have 100 seconds, can explore 10K nodes / sec
  - So can check 1M nodes per move
  - α-β reaches about depth 8: a decent chess program
- Deep Blue sometimes reached depth 40+
- [Figure: depth-limited tree; max root value 4, min values -2 and 4, evaluated positions -1, -2, 4, 9, unexplored nodes marked ?]

Evaluation Functions
- Function which scores non-terminals
- Ideal function: returns the utility of the position
- In practice: typically weighted linear sum of features:
  - Eval(s) = w1 f1(s) + w2 f2(s) + … + wn fn(s)
  - e.g. f1(s) = (num white queens – num black queens), etc.

Evaluation for Pacman
- [DEMO: thrashing, smart ghosts]

Why Pacman Starves
- He knows his score will go up by eating the dot now
- He knows his score will go up just as much by eating the dot later on
- There are no point-scoring opportunities after eating the dot
- Therefore, waiting seems just as good as eating

Iterative Deepening
- Iterative deepening uses DFS as a subroutine:
  1. Do a DFS which only searches for paths of length 1 or less. (DFS gives up on any path of length 2)
  2. If "1" failed, do a DFS which only searches paths of length 2 or less.
  3. If "2" failed, do a DFS which only searches paths of length 3 or less.
  - ….and so on.
- This works for single-agent search as well!
- Why do we want to do this for multiplayer games?
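
Depth-limited search, evaluation functions, and iterative deepening fit together as in the following sketch. Everything here is an assumption made for illustration: the game is a toy version of Nim (take 1 to 3 stones, whoever takes the last stone wins), and the names `depth_limited_minimax`, `zero_eval`, `linear_eval`, and `values_by_depth` are hypothetical. The single feature in `linear_eval` is hand-picked for this toy game.

```python
def depth_limited_minimax(stones, depth, maximizing, evaluate):
    """Value of a toy Nim position for MAX, searching at most `depth` plies."""
    if stones == 0:
        # The player who just moved took the last stone and wins.
        return -1.0 if maximizing else 1.0
    if depth == 0:
        # Cutoff: replace the unknown true utility with an estimate.
        return evaluate(stones, maximizing)
    values = [
        depth_limited_minimax(stones - take, depth - 1, not maximizing, evaluate)
        for take in (1, 2, 3)
        if take <= stones
    ]
    return max(values) if maximizing else min(values)


def zero_eval(stones, maximizing):
    """Uninformed evaluation: every non-terminal position looks like a draw."""
    return 0.0


def linear_eval(stones, maximizing, weights=(0.5,)):
    """Weighted linear sum of features (one hand-picked feature here).

    f1 is +1 when the position favors MAX and -1 otherwise; in this toy game
    the player to move is in trouble when the pile is a multiple of 4.
    """
    mover_ok = 1.0 if stones % 4 != 0 else -1.0
    features = (mover_ok if maximizing else -mover_ok,)
    return sum(w * f for w, f in zip(weights, features))


def values_by_depth(stones, max_depth, evaluate):
    """Iterative deepening: rerun the search with depth limits 1, 2, ..."""
    return [
        depth_limited_minimax(stones, depth, True, evaluate)
        for depth in range(1, max_depth + 1)
    ]


print(values_by_depth(5, 3, zero_eval))    # the win only shows up at depth 3
print(values_by_depth(5, 3, linear_eval))  # a better eval sees it at depth 1
```

With the uninformed evaluation, the root value for 5 stones stays at 0 until the search is deep enough to see the forced win. Iterative deepening is useful under a time limit because the answer from the deepest completed iteration is always available when the clock runs out.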

α-β Pruning Example
- [Figure]

α-β Pruning
- General configuration
  - α is the best value that MAX can get at any choice point along the current path
  - If n becomes worse than α, MAX will avoid it, so can stop considering n's other children
  - Define β similarly for MIN
- [Figure: alternating Player and Opponent layers along the current path, ending at node n]

α-β Pruning Pseudocode
- [Figure: pseudocode, with node value v]

α-β Pruning Properties
- This pruning has no effect on final result at the root
- Values of intermediate nodes might be wrong
- Good move ordering improves effectiveness of pruning
- With "perfect ordering":
  - Time complexity drops to O(b^(m/2))
  - Doubles solvable depth
  - Full search of, e.g. chess, is still hopeless!
- This is a simple example of metareasoning

More Metareasoning Ideas
- Forward pruning: prune a node immediately without recursive evaluation
- Singular extensions: explore only one action that is clearly better than others. Can alleviate horizon effects
- Cutoff test: a decision function about when to apply evaluation
- Quiescence search: expand the tree until positions are reached that are quiescent (i.e., not volatile)

Game Playing State-of-the-Art
- Checkers: Chinook ended the 40-year reign of human world champion Marion Tinsley in 1994. Used an endgame database defining perfect play for all positions involving 8 or fewer pieces on the board, a total of 443,748,401,247 positions. Checkers is now solved!
- Chess: Deep Blue defeated human world champion Garry Kasparov in a six-game match in 1997. Deep Blue examined 200 million positions per second, used very sophisticated evaluation and undisclosed methods for extending some lines of search up to 40 ply.
- Othello: human champions refuse to compete against computers, which are too good.
- Go: human champions refuse to compete against computers, which are too bad. In go, b > 300, so most programs use pattern knowledge bases to suggest plausible moves.
- Pacman: unknown
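
Since the pseudocode slide survives only as a figure, here is a minimal sketch of α-β pruning on nested-list trees. It is one common formulation, not necessarily the one on the slide: the function name `alphabeta`, the `visited` list used to count leaf evaluations, and the example tree are all assumptions made for illustration.

```python
import math


def alphabeta(node, alpha=-math.inf, beta=math.inf, maximizing=True, visited=None):
    """Minimax value of a nested-list tree, skipping branches that cannot matter.

    alpha: best value MAX can already guarantee along the current path.
    beta:  best value MIN can already guarantee along the current path.
    visited: optional list that records each leaf actually evaluated.
    """
    if isinstance(node, (int, float)):
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, visited))
            if value >= beta:  # MIN above will never allow this node
                return value
            alpha = max(alpha, value)
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, visited))
        if value <= alpha:  # MAX above already has something at least as good
            return value
        beta = min(beta, value)
    return value


tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]       # hypothetical example tree
reordered = [[3, 12, 8], [2, 4, 6], [2, 5, 14]]  # same leaves, better ordering

seen, seen_reordered = [], []
print(alphabeta(tree, visited=seen), len(seen))
print(alphabeta(reordered, visited=seen_reordered), len(seen_reordered))
```

Both calls return the same root value, but the reordered tree evaluates fewer leaves, which is the move-ordering effect described above. As a rough check on the depth-8 figure quoted earlier: with b ≈ 35 and perfect ordering, a depth-8 search costs on the order of 35^4 ≈ 1.5 million nodes, close to the 1M-node budget.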

GamesCrafters
- http://gamescrafters.berkeley.edu/
