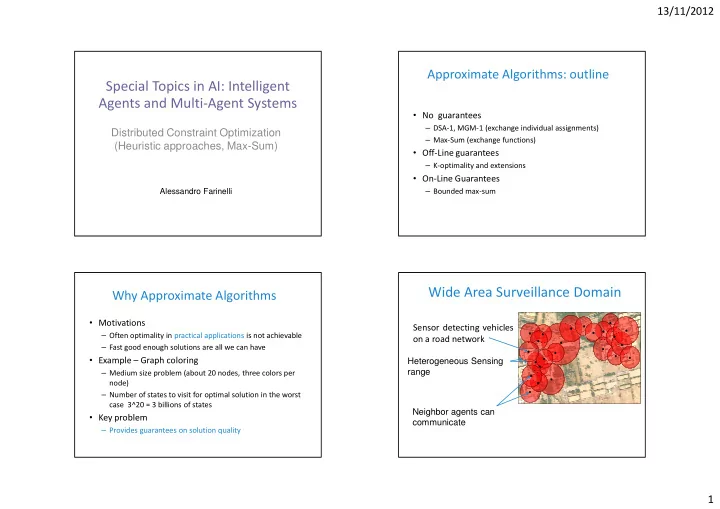

13/11/2012

Special Topics in AI: Intelligent Agents and Multi-Agent Systems
Distributed Constraint Optimization (Heuristic approaches, Max-Sum)
Alessandro Farinelli

Approximate Algorithms: outline
• No guarantees
  – DSA-1, MGM-1 (exchange individual assignments)
  – Max-Sum (exchange functions)
• Off-line guarantees
  – K-optimality and extensions
• On-line guarantees
  – Bounded Max-Sum

Why Approximate Algorithms
• Motivations
  – Optimality is often not achievable in practical applications
  – Fast, good-enough solutions are all we can have
• Example: graph coloring
  – Medium-size problem (about 20 nodes, three colors per node)
  – Number of states to visit for an optimal solution in the worst case: 3^20, about 3.5 billion states
• Key problem
  – Providing guarantees on solution quality

Wide Area Surveillance Domain
• Sensors detecting vehicles on a road network
• Heterogeneous sensing range
• Neighbor agents can communicate
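The worst-case count in the graph coloring example can be checked directly. The snippet below is only a worked calculation; the helper name `search_space_size` is hypothetical and not part of the slides.

```python
def search_space_size(num_nodes: int, num_colors: int) -> int:
    """Number of complete colorings an exhaustive search may have to visit."""
    return num_colors ** num_nodes


# "About 20 nodes, three colors per node", as in the example above.
states = search_space_size(20, 3)
print(f"{states:,}")  # 3,486,784,401
```

Each additional node multiplies the count by the number of colors, which is why exhaustive search stops being practical even for medium-size problems.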

WAS: Model
• Energy constraints
  – Sense/Sleep modes
  – Recharge when sleeping
  – Energy neutral operation
  – Constrained on duty cycle
• Sensor model
  – Activity can be detected by a single sensor
• Environment
  – Roads have different traffic loads
[Figure: duty cycle over time]

WAS: Goal
Coordinate sensors' duty cycles:
• Achieve energy neutral operations
• Minimize probability of missing vehicles
[Figure: good schedule vs. bad schedule of duty cycles over time, for a small road and a heavy traffic road]

WAS: system wide utility
• Weighted probability of event detection for each possible joint schedule {x1, x2, x3}
[Equation not recoverable; surviving annotations: traffic load in the area; assume Poisson process for event duration]

WAS: Interactions among sensors
• System wide utility decomposition in individual utilities
[Figure: sensors S1, S2, S3 with schedules x1, x2 over time; regions K={1}, K={1,2}, K={2,3}, K={1,2,3}; G(x1, x2)]

Surveillance demo

Heuristic approaches
• Local Greedy Search
  – DSA (Distributed Stochastic Algorithm)
  – MGM (Maximum Gain Message)
• Inference-based approaches
  – Max-Sum (GDL family)

Centralized Local Greedy approaches
• Greedy local search
  – Start from random solution
  – Do local changes if global solution improves
  – Local: change the value of a subset of variables, usually one
[Figure: example graph annotated with utility values]

Centralized Local Greedy approaches
• Problems
  – Local minima
  – Standard solutions: RandomWalk, Simulated Annealing
[Figure: example graph annotated with utility values]
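The centralized greedy local search described above can be sketched as follows. This is a minimal illustration, assuming a graph coloring problem where each conflicting edge costs -1; the function names, the triangle instance, and the step budget are hypothetical choices, not taken from the slides.

```python
import random


def utility(edges, colors):
    """Global utility: -1 for every edge whose endpoints share a color."""
    return -sum(1 for u, v in edges if colors[u] == colors[v])


def greedy_local_search(num_nodes, edges, num_colors, max_steps=1000, seed=0):
    rng = random.Random(seed)
    # Start from a random solution.
    colors = [rng.randrange(num_colors) for _ in range(num_nodes)]
    best = utility(edges, colors)
    for _ in range(max_steps):
        node = rng.randrange(num_nodes)      # local change: a single variable
        old = colors[node]
        colors[node] = rng.randrange(num_colors)
        new = utility(edges, colors)
        if new > best:                       # keep the change only if the global solution improves
            best = new
        else:
            colors[node] = old               # otherwise undo it
    return colors, best


# Hypothetical instance: a triangle with three colors.
edges = [(0, 1), (1, 2), (0, 2)]
colors, best = greedy_local_search(3, edges, 3)
print(colors, best)
```

Because only improving moves are accepted, the search can stall in a local minimum on harder instances, which is the problem that RandomWalk and Simulated Annealing address.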

Distributed Local Greedy approaches
• Local knowledge
• Parallel execution:
  – A greedy local move might be harmful/useless
  – Need coordination
[Figure: example graph annotated with utility values]

Distributed Stochastic Algorithm
• Greedy local search with activation probability to mitigate issues with parallel executions
• DSA-1: change the value of one variable at a time
• Initialize agents with a random assignment and communicate values to neighbors
• Each agent:
  – Generates a random number and executes only if rnd is less than the activation probability
  – When executing, changes its value to the one maximizing local gain
  – Communicates the possible variable change to neighbors

DSA-1: Execution Example
[Figure: each agent tests "rnd > ¼ ?" with P = 1/4 on the example graph]

DSA-1: discussion
• Extremely "cheap" (computation/communication)
• Good performance in various domains
  – e.g. target tracking [Fitzpatrick Meertens 03, Zhang et al. 03]
  – Shows an anytime property (not guaranteed)
  – Benchmarking technique for coordination
• Problems
  – Activation probability must be tuned [Zhang et al. 03]
  – No general rule, hard to characterise results across domains
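The DSA-1 steps above can be sketched as a synchronous simulation. This is a rough illustration under stated assumptions: graph coloring with -1 per conflicting neighbor, a move only when the local gain is strictly positive, and rounds in which all agents see the same snapshot of neighbor values. The names `dsa1`, `local_utility`, and the ring instance are hypothetical.

```python
import random


def local_utility(agent, value, colors, neighbors):
    """-1 for every neighbor currently holding the same color."""
    return -sum(1 for n in neighbors[agent] if colors[n] == value)


def dsa1(neighbors, num_colors, p=0.25, rounds=200, seed=0):
    rng = random.Random(seed)
    agents = sorted(neighbors)
    # Random initial assignment, assumed to be communicated to neighbors.
    colors = {a: rng.randrange(num_colors) for a in agents}
    for _ in range(rounds):
        snapshot = dict(colors)              # neighbor values known in this round
        for a in agents:
            if rng.random() >= p:            # execute only if rnd < activation probability
                continue
            scores = [local_utility(a, c, snapshot, neighbors)
                      for c in range(num_colors)]
            best = scores.index(max(scores))
            if scores[best] > scores[snapshot[a]]:
                colors[a] = best             # the new value reaches neighbors next round
    return colors


# Hypothetical instance: a ring of four agents, three colors.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
result = dsa1(ring, num_colors=3)
print(result)
```

With `p=1.0` every agent moves in every round, so neighbors can keep switching into each other's colors; lowering `p` is what mitigates this, at the cost of slower progress.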

Maximum Gain Message (MGM-1)
• Coordinate to decide who is going to move
  – Compute and exchange possible gains
  – Agent with maximum (positive) gain executes
• Analysis [Maheswaran et al. 04]
  – Empirically, similar to DSA
  – More communication (but still linear)
  – No threshold to set
  – Guaranteed to be monotonic (anytime behavior)

MGM-1: Example
[Figure: example graph with agent gains G = -2, G = 0, G = 2, G = 0]

Local greedy approaches
• Exchange local values for variables
  – Similar to search based methods (e.g. ADOPT)
• Consider only local information when maximizing
  – Values of neighbors
• Anytime behaviors
• Could result in very bad solutions

Max-sum
Agents iteratively compute local functions that depend only on the variable they control
[Figure: variables X1, X2, X3, X4; each agent chooses the arg max of its local function]
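The MGM-1 coordination rule can be sketched as below. It is an illustrative simulation, assuming graph coloring with -1 per conflicting neighbor, integer agent ids, and ties between equal gains broken in favor of the higher id; these choices and all names are hypothetical rather than taken from the slides.

```python
import random


def local_utility(agent, value, colors, neighbors):
    """-1 for every neighbor currently holding the same color."""
    return -sum(1 for n in neighbors[agent] if colors[n] == value)


def global_utility(colors, neighbors):
    # Every conflicting edge is counted by both endpoints, hence the division by 2.
    return sum(local_utility(a, colors[a], colors, neighbors) for a in neighbors) // 2


def mgm1(neighbors, num_colors, rounds=20, seed=0):
    rng = random.Random(seed)
    agents = sorted(neighbors)
    colors = {a: rng.randrange(num_colors) for a in agents}
    history = [global_utility(colors, neighbors)]
    for _ in range(rounds):
        # Phase 1: every agent computes its best move and the corresponding gain.
        gain, move = {}, {}
        for a in agents:
            scores = [local_utility(a, c, colors, neighbors) for c in range(num_colors)]
            move[a] = scores.index(max(scores))
            gain[a] = scores[move[a]] - scores[colors[a]]
        # Phase 2: gains are exchanged; only the agent with the maximum positive
        # gain in its neighborhood moves (assumed tie-break: higher agent id).
        movers = [a for a in agents
                  if gain[a] > 0
                  and all((gain[a], a) > (gain[n], n) for n in neighbors[a])]
        for a in movers:
            colors[a] = move[a]
        history.append(global_utility(colors, neighbors))
    return colors, history


# Hypothetical instance: a ring of five agents, three colors.
ring = {i: [(i - 1) % 5, (i + 1) % 5] for i in range(5)}
final_colors, history = mgm1(ring, num_colors=3)
print(history)
```

Since two neighbors never move in the same round, each move raises the global utility by exactly the mover's gain, so `history` never decreases. This is the monotonic behavior noted in the analysis.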

Factor Graph
• Computational framework to represent factored computation
• Bipartite graph, Variable - Factor

$$H(X_1, X_2, X_3) = H(X_1) + H(X_2 \mid X_1) + H(X_3 \mid X_1)$$

[Figure: variable nodes x1, x2, x3 connected to factor nodes H(X1), H(X2|X1), H(X3|X1)]

Max-Sum Messages
• From variable i to function j: the sum of all incoming messages except the one from function j

$$q_{i \to j}(x_i) = \sum_{k \in \mathrm{adj}(i) \setminus j} r_{k \to i}(x_i)$$

• From function j to variable i: the local function plus all incoming messages except the one from variable i, maximized over all variables except x_i

$$r_{j \to i}(x_i) = \max_{\mathbf{x}_j \setminus x_i} \left[ U_j(\mathbf{x}_j) + \sum_{k \in \mathrm{adj}(j) \setminus i} q_{k \to j}(x_k) \right]$$

Agents and the Factor Graph
• Variables and functions must be allocated to agents
• Allocation does not impact solution quality
• Allocation does impact load distribution/communication
[Figure: the factor graph above partitioned among agents A1, A2, A3]

Max-Sum Assignments
• At each iteration, each agent
  – Computes its local function from all incoming messages
  – Sets its assignment as the value that maximizes its local function
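The message updates above can be tried on the three-variable factor graph H(X1) + H(X2|X1) + H(X3|X1). The sketch below is a centralized simulation with synchronous updates, assuming binary variables and made-up factor tables; the local function is taken to be the sum of all incoming r messages, and every name (`max_sum`, `domains`, `factors`) is hypothetical.

```python
import itertools


def max_sum(domains, factors, iterations=10):
    """domains: {var: values}; factors: {name: (scope, function over the scope)}."""
    links = [(f, v) for f, (scope, _) in factors.items() for v in scope]
    q = {(v, f): {x: 0.0 for x in domains[v]} for f, v in links}  # variable -> function
    r = {(f, v): {x: 0.0 for x in domains[v]} for f, v in links}  # function -> variable
    for _ in range(iterations):
        # r_{j->i}(x_i): local function plus incoming q messages, maximized over the others.
        new_r = {}
        for f, (scope, fn) in factors.items():
            for v in scope:
                others = [u for u in scope if u != v]
                msg = {}
                for x in domains[v]:
                    best = float("-inf")
                    for combo in itertools.product(*(domains[u] for u in others)):
                        assign = dict(zip(others, combo))
                        assign[v] = x
                        value = fn(*(assign[u] for u in scope))
                        value += sum(q[(u, f)][assign[u]] for u in others)
                        best = max(best, value)
                    msg[x] = best
                new_r[(f, v)] = msg
        r = new_r
        # q_{i->j}(x_i): sum of incoming r messages except the one from j.
        for f, v in links:
            q[(v, f)] = {x: sum(r[(g, w)][x] for g, w in links if w == v and g != f)
                         for x in domains[v]}
    # Local function z_i(x_i): sum of all incoming r messages.
    z = {v: {x: sum(r[(g, w)][x] for g, w in links if w == v) for x in domains[v]}
         for v in domains}
    return {v: max(domains[v], key=z[v].get) for v in domains}, z


# Made-up tables for illustration only.
domains = {"x1": [0, 1], "x2": [0, 1], "x3": [0, 1]}
factors = {
    "H1": (("x1",), lambda x1: 1.0 if x1 == 1 else 0.0),
    "H2": (("x2", "x1"), lambda x2, x1: 2.0 if x2 != x1 else 0.0),
    "H3": (("x3", "x1"), lambda x3, x1: 3.0 if x3 == x1 else 0.0),
}
assignment, z = max_sum(domains, factors)
print(assignment)  # {'x1': 1, 'x2': 0, 'x3': 1}
```

On this acyclic graph the messages stop changing after two iterations, and each local function peaks at the optimal total of 6.0, so every variable can pick its value independently.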

Max-Sum on Acyclic Graphs
• Convergence guaranteed in a polynomial number of cycles
• Optimal
  – Different branches are independent
  – Z functions provide correct estimation
  – Need value propagation to break symmetries
[Figure: factor graph with variables x1, x2, x3 and factors H(X1), H(X2|X1), H(X3|X1)]

Max-Sum on Cyclic Graphs
• Convergence NOT guaranteed
• When it converges, it converges to a neighbourhood maximum
• Neighbourhood maximum:
  – greater than all other maxima within a particular region of the search space
[Figure: factor graph with variables x1, x2, x3 and factors H(X1), H(X2|X1), H(X3|X1,X2)]

(Loopy) Max-sum Performance
• Good performance on loopy networks [Farinelli et al. 08]
  – When it converges, very good results
• Interesting results when only one cycle [Weiss 00]
  – We could remove cycles but pay an exponential price (see DPOP)
  – Java Library for max-sum http://code.google.com/p/jmaxsum/

Max-Sum for low power devices
• Low overhead
  – Number and size of messages
• Asynchronous computation
  – Agents take decisions whenever new messages arrive
• Robust to message loss

Max-sum on hardware

GDL and Max-Sum
• Generalized Distributive Law (GDL)
  – Unifying framework for inference in Graphical Models
  – Based on mathematical properties of semi-rings
  – Widely used in
    • Information theory (turbo codes)
    • Probabilistic Graphical models (belief propagation)

GDL basic ideas
• Max-Sum semi-ring
  – Sum distributes over Max
  – We can efficiently maximise a summation
• Distributive Law
  – Max-Sum: $a + \max(b, c) = \max(a + b, a + c)$
  – Generalized Distributive Law: $\otimes(a, \oplus(b, c)) = \oplus(\otimes(a, b), \otimes(a, c))$
• Objective
  – Max-Sum: $\max_{\mathbf{x}} \sum_i f_i(\mathbf{x}_i)$
  – Generalized Distributive Law: $\bigoplus_{\mathbf{x}} \bigotimes_i f_i(\mathbf{x}_i)$

Max-Sum for UAVs
Task Assignment for UAVs [Delle Fave et al 12]
[Figure: UAVs providing video streaming of interest points]
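A small numeric check makes the point about maximising a summation concrete. The sketch assumes the simplest case, where each function depends on its own separate variable; the tables and function names are made up for illustration.

```python
import itertools


def brute_force_max(tables):
    """max over joint assignments of sum_i f_i(x_i); visits every joint state."""
    return max(sum(values) for values in itertools.product(*tables))


def distributed_max(tables):
    """Same quantity, pushing the max inside the sum via distributivity."""
    return sum(max(table) for table in tables)


# Distributive law on the max-sum semi-ring.
a, b, c = 4, -2, 7
assert a + max(b, c) == max(a + b, a + c)

# Hypothetical tables: tables[i][x] is f_i(x) for a three-valued variable x_i.
tables = [[1, 5, 2], [0, -3, 4], [7, 7, 1]]
print(brute_force_max(tables), distributed_max(tables))  # 16 16
```

The brute-force version enumerates 3^3 joint states, while the distributed version inspects only 3 × 3 table entries. Max-Sum messages apply the same idea when functions share variables.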

Task utility
[Equation not recoverable; surviving annotations: task completion; priority; urgency; first assigned UAV reaches task; last assigned UAV leaves task (consider battery life)]

Factor Graph Representation
[Figure: UAV 1 and UAV 2 with variables X1, X2; tasks T1, T2, T3 requested from PDA 1, PDA 2, PDA 3; utility functions U1, U2, U3]

UAVs Demo

Quality guarantees for approx. techniques
• Key area of research
• Address trade-off between guarantees and computational effort
• Particularly important for many real world applications
  – Critical (e.g. search and rescue)
  – Constrained resources (e.g. embedded devices)
  – Dynamic settings

Terminology and notation
• Assume a maximization problem
• An optimal solution and a (candidate) solution
• Percentage of optimality
  – In [0,1]
  – The higher the better
• Approximation ratio
  – >= 1
  – The lower the better
• The bound

Instance-generic guarantees
Characterise solution quality without running the algorithm
[Figure: techniques placed by accuracy (high alpha) against generality (less use of instance-specific knowledge)]
• Instance-specific: Bounded Max-Sum, DaCSA
• Instance-generic: K-optimality, T-optimality, Region Opt.
• No guarantees: MGM-1, DSA-1, Max-Sum

K-Optimality framework
• Given a characterization of the solution, gives a bound on solution quality [Pearce and Tambe 07]
• Characterization of solution: k-optimal
• K-optimal solution:
  – The corresponding value of the objective function cannot be improved by changing the assignment of k or fewer variables.

K-Optimal solutions
[Figure: example assignment with reward tables; 2-optimal? Yes. 3-optimal? No.]
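The definition of a k-optimal solution can be checked by brute force on small problems. The sketch below is illustrative only: the triangle instance and its reward table are hypothetical (chosen so that the answer is "2-optimal yes, 3-optimal no"), and `is_k_optimal` is not a function from any library.

```python
import itertools


def is_k_optimal(assignment, domains, objective, k):
    """True if changing at most k variables never strictly improves the objective."""
    base = objective(assignment)
    for size in range(1, k + 1):
        for subset in itertools.combinations(list(assignment), size):
            for values in itertools.product(*(domains[v] for v in subset)):
                candidate = dict(assignment)
                candidate.update(zip(subset, values))
                if objective(candidate) > base:
                    return False
    return True


# Hypothetical instance: three binary variables on a triangle.
# Assumed edge reward: 2 if both ends are 0, 1 if both are 1, 0 otherwise.
edges = [("a", "b"), ("b", "c"), ("a", "c")]
reward = {(0, 0): 2, (1, 1): 1, (0, 1): 0, (1, 0): 0}
domains = {v: [0, 1] for v in "abc"}


def objective(assignment):
    return sum(reward[(assignment[u], assignment[v])] for u, v in edges)


all_ones = {"a": 1, "b": 1, "c": 1}
print(is_k_optimal(all_ones, domains, objective, 2))  # True
print(is_k_optimal(all_ones, domains, objective, 3))  # False
```

The all-ones assignment scores 3. Flipping one or two variables drops the score to 1 or 2, and only flipping all three reaches the optimum of 6, so the assignment is 2-optimal but not 3-optimal.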
