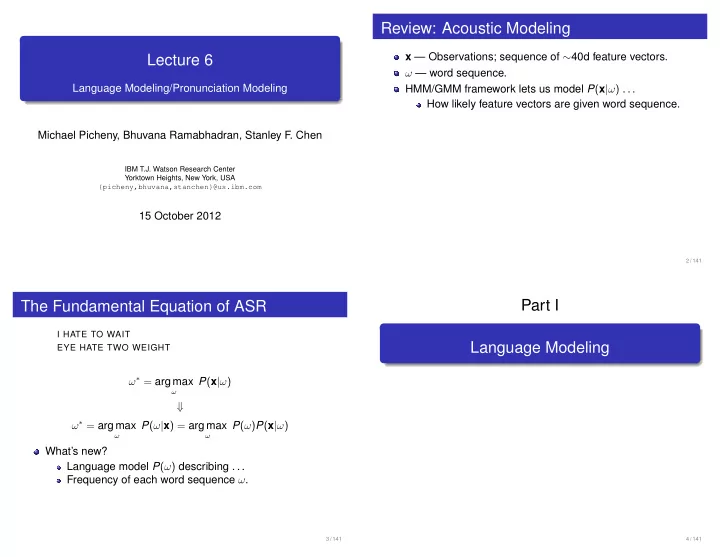

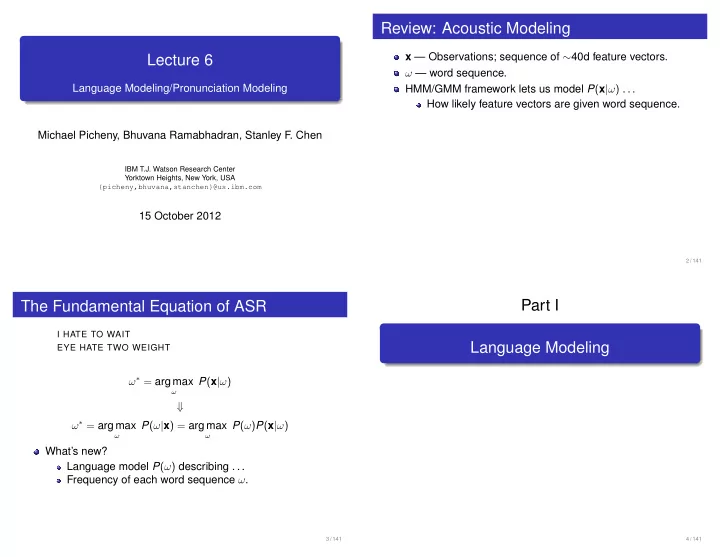

Lecture 6: Language Modeling/Pronunciation Modeling

Michael Picheny, Bhuvana Ramabhadran, Stanley F. Chen
IBM T.J. Watson Research Center, Yorktown Heights, New York, USA
{picheny,bhuvana,stanchen}@us.ibm.com
15 October 2012

Review: Acoustic Modeling

- $x$: observations; a sequence of ∼40-dimensional feature vectors.
- $\omega$: word sequence.
- The HMM/GMM framework lets us model $P(x \mid \omega)$, i.e., how likely the feature vectors are given the word sequence.

Part I: Language Modeling

The Fundamental Equation of ASR

Example: I HATE TO WAIT vs. EYE HATE TWO WEIGHT.

$$\omega^* = \arg\max_{\omega} P(x \mid \omega)$$

$$\Downarrow$$

$$\omega^* = \arg\max_{\omega} P(\omega \mid x) = \arg\max_{\omega} P(\omega)\, P(x \mid \omega)$$

What's new? The language model $P(\omega)$, which describes the frequency of each word sequence $\omega$.

Language Modeling: Goals

- Describe which word sequences are likely, e.g., BRITNEY SPEARS vs. BRIT KNEE SPEARS.
- Analogy: a multiple-choice test. The LM restricts the choices given to the acoustic model; the fewer choices, the better you do.

What Type of Model?

- We want a probability distribution over sequences of symbols.
- A (hidden) Markov model!
- Hidden or non-hidden? For a hidden model, it is too hard to come up with a topology.

Where Are We?

1. N-Gram Models
2. Technical Details
3. Smoothing
4. Discussion

What's an n-Gram Model?

- A Markov model of order $n-1$.
- To predict the next word, we only need to remember the last $n-1$ words.

What's a Markov Model?

- Decompose the probability of a sequence into a product of conditional probabilities.
- E.g., trigram model ⇒ Markov order 2 ⇒ remember the last 2 words.

$$P(w_1 \cdots w_L) = \prod_{i=1}^{L} P(w_i \mid w_1 \cdots w_{i-1}) = \prod_{i=1}^{L} P(w_i \mid w_{i-2} w_{i-1})$$

$$P(\text{I HATE TO WAIT}) = P(\text{I})\, P(\text{HATE} \mid \text{I})\, P(\text{TO} \mid \text{I HATE})\, P(\text{WAIT} \mid \text{HATE TO})$$

Sentence Begins and Ends

- Pad on the left with beginning-of-sentence tokens, e.g., $w_{-1} = w_0 = \lhd$, so that we always condition on two words to the left, even at the start.
- Predict an end-of-sentence token $\rhd$ at the end (as $w_{L+1}$).
- This makes the model a true probability distribution, i.e., $\sum_{\omega} P(\omega) = 1$.

$$P(w_1 \cdots w_L) = \prod_{i=1}^{L+1} P(w_i \mid w_{i-2} w_{i-1})$$

$$P(\text{I HATE TO WAIT}) = P(\text{I} \mid \lhd\,\lhd) \times P(\text{HATE} \mid \lhd\,\text{I}) \times P(\text{TO} \mid \text{I HATE}) \times P(\text{WAIT} \mid \text{HATE TO}) \times P(\rhd \mid \text{TO WAIT})$$

How to Set Probabilities?

- For each history $w_{i-2} w_{i-1}$, $P(w_i \mid w_{i-2} w_{i-1})$ is a multinomial distribution.
- Use maximum likelihood estimation for multinomials: count and normalize!

$$P_{\text{MLE}}(w_i \mid w_{i-2} w_{i-1}) = \frac{c(w_{i-2} w_{i-1} w_i)}{\sum_{w} c(w_{i-2} w_{i-1} w)} = \frac{c(w_{i-2} w_{i-1} w_i)}{c(w_{i-2} w_{i-1})}$$

Example: Maximum Likelihood Estimation

23M words of Wall Street Journal text:

FEDERAL HOME LOAN MORTGAGE CORPORATION –DASH ONE .POINT FIVE BILLION DOLLARS OF REALESTATE MORTGAGE -HYPHEN INVESTMENT CONDUIT SECURITIES OFFERED BY MERRILL LYNCH &AMPERSAND COMPANY

NONCOMPETITIVE TENDERS MUST BE RECEIVED BY NOON EASTERN TIME THURSDAY AT THE TREASURY OR AT FEDERAL RESERVE BANKS OR BRANCHES

. . .

$$P(\text{TO} \mid \text{I HATE}) = \frac{c(\text{I HATE TO})}{c(\text{I HATE})} = \frac{17}{45} = 0.378$$
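The count-and-normalize recipe, together with the sentence padding, fits in a few lines. The sketch below is a minimal illustration, assuming sentences arrive as lists of words; the token names `<s>` and `</s>` are hypothetical stand-ins for the slides' ⊲ and ⊳, and the two-sentence corpus is made up. Note that the history count is accumulated as $\sum_w c(w_{i-2} w_{i-1} w)$, which is exactly the denominator of the MLE formula.

```python
from collections import Counter

# Hypothetical token names standing in for the slides' begin (⊲) and end (⊳) symbols.
BOS, EOS = "<s>", "</s>"


def pad(words):
    """Left-pad with two begin tokens and append one end token (trigram padding)."""
    return [BOS, BOS] + list(words) + [EOS]


def count_trigrams(sentences):
    """Return c(u v w) and the history counts c(u v) = sum_w c(u v w)."""
    tri, hist = Counter(), Counter()
    for words in sentences:
        p = pad(words)
        for i in range(2, len(p)):
            tri[(p[i - 2], p[i - 1], p[i])] += 1
            hist[(p[i - 2], p[i - 1])] += 1
    return tri, hist


def p_mle(w, u, v, tri, hist):
    """Count and normalize: P_MLE(w | u v) = c(u v w) / c(u v); 0.0 for an unseen history."""
    if hist[(u, v)] == 0:
        return 0.0
    return tri[(u, v, w)] / hist[(u, v)]


def sentence_prob(words, tri, hist):
    """Product of P(w_i | w_{i-2} w_{i-1}) for i = 1 .. L+1, including the end token."""
    p = pad(words)
    prob = 1.0
    for i in range(2, len(p)):
        prob *= p_mle(p[i], p[i - 2], p[i - 1], tri, hist)
    return prob


corpus = [["I", "HATE", "TO", "WAIT"], ["I", "HATE", "IT"]]
tri, hist = count_trigrams(corpus)
print(p_mle("TO", "I", "HATE", tri, hist))                    # 0.5
print(sentence_prob(["I", "HATE", "TO", "WAIT"], tri, hist))  # 0.5
print(sentence_prob(["I", "HATE", "TO"], tri, hist))          # 0.0
```

Because the end token is predicted, the probabilities of the two training sentences sum to 1, while the unseen sentence I HATE TO gets probability zero under pure MLE.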

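Example: Bigram Model

What are $P(\text{I HATE TO WAIT})$ and $P(\text{EYE HATE TWO WEIGHT})$?

Step 1: Collect all bigram counts and unigram history counts. Each row is a history word $w_{i-1}$, each column a predicted word $w_i$, and the ∗ column is the total count of the history.

| history | EYE | I | HATE | TO | TWO | WAIT | WEIGHT | ⊳ | ∗ |
|---|---|---|---|---|---|---|---|---|---|
| ⊲ | 3 | 3234 | 5 | 4064 | 1339 | 8 | 22 | 0 | 892669 |
| EYE | 0 | 0 | 0 | 26 | 1 | 0 | 0 | 52 | 735 |
| I | 0 | 0 | 45 | 2 | 1 | 1 | 0 | 8 | 21891 |
| HATE | 0 | 0 | 0 | 40 | 0 | 0 | 0 | 9 | 246 |
| TO | 8 | 6 | 19 | 21 | 5341 | 324 | 4 | 221 | 510508 |
| TWO | 0 | 5 | 0 | 1617 | 652 | 0 | 0 | 4213 | 132914 |
| WAIT | 0 | 0 | 0 | 71 | 2 | 0 | 0 | 35 | 882 |
| WEIGHT | 0 | 0 | 0 | 38 | 0 | 0 | 0 | 45 | 643 |

Example: Bigram Model

$$\begin{aligned}
P(\text{I HATE TO WAIT}) &= P(\text{I} \mid \lhd)\, P(\text{HATE} \mid \text{I})\, P(\text{TO} \mid \text{HATE})\, P(\text{WAIT} \mid \text{TO})\, P(\rhd \mid \text{WAIT}) \\
&= \frac{3234}{892669} \times \frac{45}{21891} \times \frac{40}{246} \times \frac{324}{510508} \times \frac{35}{882} = 3.05 \times 10^{-11}
\end{aligned}$$

$$\begin{aligned}
P(\text{EYE HATE TWO WEIGHT}) &= P(\text{EYE} \mid \lhd)\, P(\text{HATE} \mid \text{EYE})\, P(\text{TWO} \mid \text{HATE})\, P(\text{WEIGHT} \mid \text{TWO})\, P(\rhd \mid \text{WEIGHT}) \\
&= \frac{3}{892669} \times \frac{0}{735} \times \frac{0}{246} \times \frac{0}{132914} \times \frac{45}{643} = 0
\end{aligned}$$

Recap: N-Gram Models

- Simple formalism, yet effective: it discriminates between wheat and chaff.
- Easy to train: count and normalize.
- Generalizes: it assigns nonzero probabilities to sentences not seen in the training data, e.g., I HATE TO WAIT.

Where Are We?

1. N-Gram Models
2. Technical Details
3. Smoothing
4. Discussion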
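The bigram example can be checked numerically from the count table. The sketch below transcribes the table as given on the slide; `<s>` and `</s>` are hypothetical names for ⊲ and ⊳, and the row totals (the ∗ column) exceed the sum of the displayed counts because other vocabulary words are not shown.

```python
# Bigram counts transcribed from the count table. Each row is a history word w_{i-1}:
# (counts for the columns in COLS, total history count c(w_{i-1}) from the * column).
# "<s>" and "</s>" are stand-in names for the slides' begin and end tokens.
COLS = ["EYE", "I", "HATE", "TO", "TWO", "WAIT", "WEIGHT", "</s>"]
ROWS = {
    "<s>":    ([3, 3234, 5, 4064, 1339, 8, 22, 0], 892669),
    "EYE":    ([0, 0, 0, 26, 1, 0, 0, 52], 735),
    "I":      ([0, 0, 45, 2, 1, 1, 0, 8], 21891),
    "HATE":   ([0, 0, 0, 40, 0, 0, 0, 9], 246),
    "TO":     ([8, 6, 19, 21, 5341, 324, 4, 221], 510508),
    "TWO":    ([0, 5, 0, 1617, 652, 0, 0, 4213], 132914),
    "WAIT":   ([0, 0, 0, 71, 2, 0, 0, 35], 882),
    "WEIGHT": ([0, 0, 0, 38, 0, 0, 0, 45], 643),
}


def p_bigram(word, prev):
    """MLE bigram probability c(prev word) / c(prev)."""
    counts, total = ROWS[prev]
    return counts[COLS.index(word)] / total


def sentence_prob(words):
    """P(w_1 ... w_L) with one begin token on the left and the end token predicted last."""
    padded = ["<s>"] + list(words) + ["</s>"]
    prob = 1.0
    for prev, word in zip(padded, padded[1:]):
        prob *= p_bigram(word, prev)
    return prob


print(f"{sentence_prob(['I', 'HATE', 'TO', 'WAIT']):.3g}")  # 3.05e-11
print(sentence_prob(["EYE", "HATE", "TWO", "WEIGHT"]))       # 0.0
```

A single zero count (here c(EYE HATE) = 0) is enough to zero out the whole sentence.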

LM's and Training and Decoding

Decoding without LM's:

- Build a word HMM encoding the allowable word sequences.
- Replace each word with its HMM.

[Figure: a word graph with parallel arcs ONE, TWO, THREE, …, and the same graph with each word arc replaced by its word HMM (HMMone, HMMtwo, HMMthree, …).]

LM's and Training and Decoding

- Point: an n-gram model is a (hidden) Markov model, so it can be expressed as a word HMM.
- Replace each word with its HMM, leaving in the language model probabilities.

[Figure: the word graph with arcs labeled ONE/P(ONE), TWO/P(TWO), THREE/P(THREE), …, and its expansion with arcs HMMone/P(ONE), HMMtwo/P(TWO), HMMthree/P(THREE), ….]

- Lots more details in lectures 7 and 8.
- How do LM's impact acoustic model training?

One Puny Prob versus Many?

[Figure: word loop over the digits one, two, three, four, five, six, seven, eight, nine, zero.]

Not a fair fight: each word gets a single LM probability, but many acoustic probabilities, one per frame.

The Acoustic Model Weight

Solution: an acoustic model weight $\alpha$.

$$\omega^* = \arg\max_{\omega} P(\omega)\, P(x \mid \omega)^{\alpha}$$

- $\alpha$ is usually somewhere between 0.05 and 0.1.
- It is important to tune it for each LM and AM.
- Theoretically inelegant, but empirical performance trumps theory any day of the week.
- Is it an LM weight or an AM weight?
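In the log domain the weighted objective becomes $\log P(\omega) + \alpha \log P(x \mid \omega)$. The sketch below uses made-up log-probabilities for two competing hypotheses (none of these numbers come from the lecture): the acoustic scores differ by 50 nats, the LM scores by about 10, so with $\alpha = 1$ the acoustics swamp the LM, while $\alpha = 0.1$ lets the LM decide.

```python
def weighted_log_score(log_p_lm, log_p_am, alpha):
    """log[P(w) * P(x|w)^alpha] = log P(w) + alpha * log P(x|w)."""
    return log_p_lm + alpha * log_p_am


# Made-up natural-log scores for two competing hypotheses (illustration only).
HYPS = {
    "I HATE TO WAIT":      {"lm": -24.2, "am": -1200.0},
    "EYE HATE TWO WEIGHT": {"lm": -34.5, "am": -1150.0},
}


def decode(hyps, alpha):
    """Pick the hypothesis maximizing the weighted score (an argmax over a tiny list)."""
    return max(hyps, key=lambda h: weighted_log_score(hyps[h]["lm"], hyps[h]["am"], alpha))


print(decode(HYPS, alpha=1.0))  # EYE HATE TWO WEIGHT
print(decode(HYPS, alpha=0.1))  # I HATE TO WAIT
```

Scaling the acoustic term by $\alpha$ is equivalent (for the argmax) to scaling the LM term by $1/\alpha$, which is one way to see why it can be called either an AM weight or an LM weight.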

Real World Toy Example

- Test set: continuous digit strings.
- Unigram language model: $P(\omega) = \prod_{i=1}^{L+1} P(w_i)$.

[Figure: bar chart of WER (axis 0 to 15) for AM weight = 1 vs. AM weight = 0.1.]

What is This Word Error Rate Thing?

- The most popular evaluation measure for ASR systems.
- Divide the total number of errors in the test set by the total number of words.

$$\text{WER} \equiv \frac{\sum_{\text{utts } u} (\#\ \text{errors in } u)}{\sum_{\text{utts } u} (\#\ \text{words in reference for } u)}$$

- What is the "number of errors" in an utterance? The minimum number of word insertions, deletions, and substitutions needed to transform the reference into the hypothesis.

Example: Word Error Rate

What is the WER?

reference: THE DOG IS HERE NOW

hypothesis: THE UH BOG IS NOW

- Can WER be above 100%?
- What algorithm computes WER?
- How many ways are there to transform the reference into the hypothesis?

Evaluating Language Models

- Best way: plug the LM into an ASR system and measure WER.
- But this needs an ASR system, it is expensive to compute (especially in the old days), and the results depend on the acoustic model.
- Is there something cheaper that predicts WER well?
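The "minimum number of insertions, deletions, and substitutions" is a word-level Levenshtein distance, which dynamic programming computes in $O(|\text{ref}| \cdot |\text{hyp}|)$ time. The sketch below is one standard formulation (not necessarily the scoring tool used in the lecture); the second test pair is a made-up example showing that insertions can push WER above 100%.

```python
def edit_distance(ref, hyp):
    """Minimum number of word substitutions, deletions, and insertions turning ref into hyp."""
    # prev[j] = distance between the first i-1 reference words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            sub = prev[j - 1] + (ref[i - 1] != hyp[j - 1])  # match (cost 0) or substitution
            dele = prev[j] + 1                               # delete ref[i-1]
            ins = cur[j - 1] + 1                             # insert hyp[j-1]
            cur[j] = min(sub, dele, ins)
        prev = cur
    return prev[-1]


def wer(pairs):
    """Corpus WER: total errors over all utterances / total reference words."""
    errors = sum(edit_distance(r.split(), h.split()) for r, h in pairs)
    words = sum(len(r.split()) for r, _ in pairs)
    return errors / words


print(wer([("THE DOG IS HERE NOW", "THE UH BOG IS NOW")]))  # 0.6
print(wer([("HELLO", "UH HELLO THERE")]))                    # 2.0 (above 100%)
```

For the slide's example the minimum is 3 errors over 5 reference words. Several different alignments achieve that minimum (e.g., insert UH and substitute DOG→BOG, or substitute DOG→UH and insert BOG), but the count is the same.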

Perplexity

- Basic idea: test set likelihood, normalized so it is easy to interpret.
- Take the (geometric) average probability $p_{\text{avg}}$ assigned to each word in the test data:

$$p_{\text{avg}} = \left[\prod_{i=1}^{L+1} P(w_i \mid w_{i-2} w_{i-1})\right]^{\frac{1}{L+1}}$$

- Invert it: $\text{PP} = \dfrac{1}{p_{\text{avg}}}$.
- Interpretation: given the history, how many possible next words are there (for the acoustic model to choose from)? E.g., a uniform unigram LM over $V$ words ⇒ $\text{PP} = V$.

Example: Perplexity

$$\begin{aligned}
P(\text{I HATE TO WAIT}) &= P(\text{I} \mid \lhd)\, P(\text{HATE} \mid \text{I})\, P(\text{TO} \mid \text{HATE})\, P(\text{WAIT} \mid \text{TO})\, P(\rhd \mid \text{WAIT}) \\
&= \frac{3234}{892669} \times \frac{45}{21891} \times \frac{40}{246} \times \frac{324}{510508} \times \frac{35}{882} = 3.05 \times 10^{-11}
\end{aligned}$$

$$p_{\text{avg}} = \left[\prod_{i=1}^{L+1} P(w_i \mid w_{i-1})\right]^{\frac{1}{L+1}} = \left(3.05 \times 10^{-11}\right)^{\frac{1}{5}} = 0.00789$$

$$\text{PP} = \frac{1}{p_{\text{avg}}} = 126.8$$

Perplexity: Example Values

| type | domain | training data | case + punct | PP |
|---|---|---|---|---|
| human¹ | biography | | | 142 |
| machine² | Brown | 600MW | √ | 790 |
| ASR³ | WSJ | 23MW | | 120 |

¹ Jefferson the Virginian; Shannon game (Shannon, 1951).
² Trigram model (Brown et al., 1992).
³ Trigram model; 20kw vocabulary.

Perplexity varies highly across domains and languages. Why?

Does Perplexity Predict Word-Error Rate?

- Not across different LM types, e.g., word n-gram model vs. class n-gram model vs. ….
- OK within an LM type, e.g., when varying the training set, model order, pruning, ….
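The perplexity computation can be sketched directly from the definition. This minimal version assumes the per-token probabilities (including the end-of-sentence prediction) are already available; it averages log-probabilities rather than multiplying raw probabilities, which gives the same result but avoids underflow on a long test set. The vocabulary size `V = 20000` in the uniform check is an arbitrary illustrative choice.

```python
import math


def perplexity(token_probs):
    """PP = 1 / p_avg, with p_avg the geometric mean of the per-token probabilities.

    Computed in the log domain to avoid underflow on long test sets.
    """
    avg_log = sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(-avg_log)


# The five bigram probabilities for I HATE TO WAIT (the last one predicts the end token),
# taken from the bigram count table.
probs = [3234 / 892669, 45 / 21891, 40 / 246, 324 / 510508, 35 / 882]
print(round(perplexity(probs), 1))  # 126.8

# Uniform unigram LM over V words: every token gets probability 1/V, so PP = V.
V = 20000
print(round(perplexity([1 / V] * 10)))  # 20000
```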

Perplexity and Word-Error Rate

[Figure: scatter plot of WER (axis 20 to 35) against log PP (axis 4.5 to 6.5).]

Recap

- An LM describes the allowable word sequences and is used to build the decoding graph.
- An AM weight is needed for the LM to have its full effect.
- It is best to evaluate LM's using WER, but perplexity can be informative.
- Can you think of any problems with word error rate? What do we really care about in applications?

Where Are We?

1. N-Gram Models
2. Technical Details
3. Smoothing
4. Discussion

An Experiment

Take 50M words of WSJ, shuffle the sentences, and split them in two.

"Training" set: 25M words.

NONCOMPETITIVE TENDERS MUST BE RECEIVED BY NOON EASTERN TIME THURSDAY AT THE TREASURY OR AT FEDERAL RESERVE BANKS OR BRANCHES .PERIOD

NOT EVERYONE AGREED WITH THAT STRATEGY .PERIOD

. . .

"Test" set: 25M words.

NATIONAL PICTURE &AMPERSAND FRAME –DASH INITIAL TWO MILLION ,COMMA TWO HUNDRED FIFTY THOUSAND SHARES ,COMMA VIA WILLIAM BLAIR .PERIOD

THERE WILL EVEN BE AN EIGHTEEN -HYPHEN HOLE GOLF COURSE .PERIOD

. . .
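The slide does not say exactly how the 50M words were divided, so the following is only one plausible way to do a shuffle-and-split: shuffle whole sentences with a fixed seed (a hypothetical choice, for reproducibility), then cut where half the words have been used. Sentences stay intact, so the halves are only approximately equal in word count.

```python
import random


def shuffle_and_split(sentences, seed=0):
    """Shuffle whole sentences, then cut the list where half the words have been used.

    Sentences are kept intact; the two halves have roughly (not exactly) equal word counts.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    half = sum(len(s) for s in shuffled) / 2
    train, test, seen = [], [], 0
    for s in shuffled:
        (train if seen < half else test).append(s)
        seen += len(s)
    return train, test


corpus = [["W"] * k for k in range(1, 11)]  # toy "sentences" of 1..10 words
train, test = shuffle_and_split(corpus)
print(sum(map(len, train)), sum(map(len, test)))
```

Shuffling before splitting makes the training and test halves come from the same distribution, so differences between them reflect sampling rather than, say, topic drift over time.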
