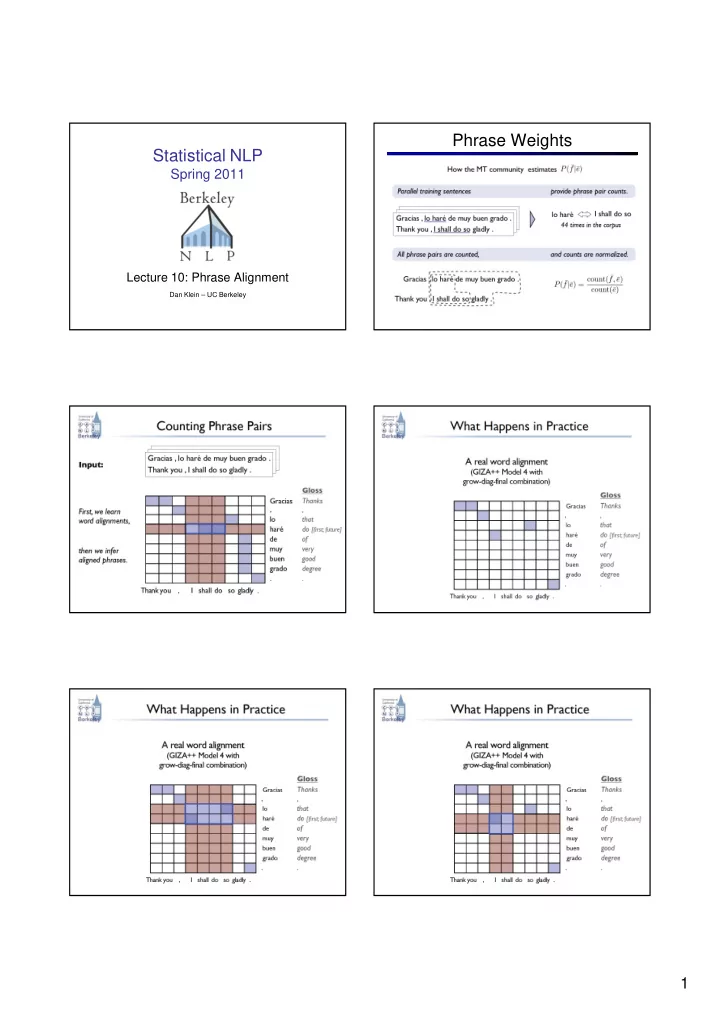

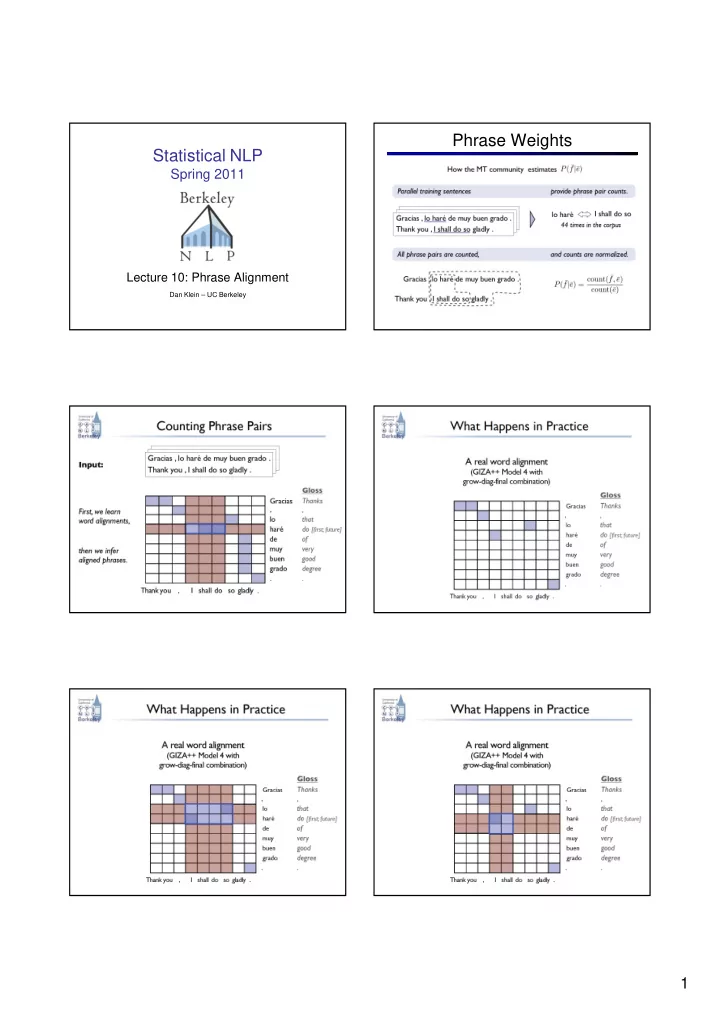

Statistical NLP, Spring 2011

Lecture 10: Phrase Alignment

Dan Klein – UC Berkeley

Phrase Weights

Phrase Scoring

- Learning weights has been tried, several times:
  - [Marcu and Wong, 02]
  - [DeNero et al, 06]
  - … and others
- Seems not to work well, for a variety of partially understood reasons
- Main issue: big chunks get all the weight, obvious priors don't help
  - Though, [DeNero et al 08]

[Figure: phrase pairs for "les chats aiment le poisson frais ." / "cats like fresh fish ."]

Phrase Size

- Phrases do help
  - But they don't need to be long
  - Why should this be?

Lexical Weighting

Phrase Alignment


MOTIVATION

Identifying Phrasal Translations

    In the past two years , a number of US citizens …
    过去 两 年 中 , 一 批 美国 公民 …
    past two year in , one lots US citizen

(过去 is also glossed as "Past" / "Go over".)

- Phrase alignment models: Choose a segmentation and a one-to-one phrase alignment
- Underlying assumption: There is a correct phrasal segmentation

Unique Segmentations?

    In the past two years , a number of US citizens …
    过去 两 年 中 , 一 批 美国 公民 …
    past two year in , one lots US citizen

- Problem 1: Overlapping phrases can be useful (and complementary)
- Problem 2: Phrases and their sub-phrases can both be useful
- Hypothesis: This is why models of phrase alignment don't work well

Identifying Phrasal Translations

    In the past two years , a number of US citizens …
    过去 两 年 中 , 一 批 美国 公民 …
    past two year in , one lots US citizen

- This talk: Modeling sets of overlapping, multi-scale phrase pairs
- Input: sentence pairs
- Output: extracted phrases

Our Task: Predict Extraction Sets

- Conditional model of extraction sets given sentence pairs
- Sentence Pair → Extracted Phrases + "Word Alignments"

[Figure: alignment grid for 过去 (past), 两 (two), 年 (year), 中 (in) against "In the past two years"]

… But the Standard Pipeline has Overlap!

- Sentence Pair → Word Alignment → Extracted Phrases

[Figure: the same alignment grid, shown at each stage of the pipeline]

MODEL

Alignments Imply Extraction Sets

- Word-level alignment links → Word-to-span alignments → Extraction set of bispans

[Figure: alignment grid for 过去 (past), 两 (two), 年 (year), 中 (in) against "In the past two years"]

Incorporating Possible Alignments

- Sure and possible word links → Word-to-span alignments → Extraction set of bispans

[Figure: the same alignment grid with sure and possible links]

Linear Model for Extraction Sets

- Features on sure links
- Features on all bispans

[Figure: alignment grid for 过去 两 年 中 / "In the past two years"; a second example glosses 过 as "go over" and 地球 as "Earth" ("over the Earth")]

FEATURES

Features on Bispans and Sure Links

- Some features on sure links
  - HMM posteriors
  - Presence in dictionary
  - Numbers & punctuation
- Features on bispans
  - HMM phrase table features: e.g., phrase relative frequencies
  - Lexical indicator features for phrases with common words
  - Shape features: e.g., Chinese character counts
  - Monolingual phrase features: e.g., "the _____"

TRAINING

Getting Gold Extraction Sets

- Hand Aligned: Sure and possible word links
- Deterministic: Find min and max alignment index for each word → Word-to-span alignments
- Deterministic: A bispan is included iff every word within the bispan aligns within the bispan → Extraction set of bispans

Discriminative Training with MIRA

- Guess (arg max w · ɸ) vs. Gold (annotated)
- Loss function: F-score of bispan errors (precision & recall)
- Training Criterion: Minimal change to w such that the gold is preferred to the guess by a loss-scaled margin
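The two deterministic steps on the "Getting Gold Extraction Sets" slide can be sketched roughly as follows. This is a minimal illustration, not the lecture's implementation: it uses sure links only, treats unaligned words as unconstrained, requires at least one link inside each bispan, and caps phrase length with a hypothetical `max_len` parameter. The alignment links in the usage example are assumed from the slide's glosses, not taken from annotated data.

```python
from typing import List, Optional, Set, Tuple

Link = Tuple[int, int]              # (source index, target index)
Span = Tuple[int, int]              # inclusive (min, max) index on the other side
Bispan = Tuple[int, int, int, int]  # (src_start, src_end, tgt_start, tgt_end), ends exclusive


def word_to_span(links: Set[Link], length: int, side: int) -> List[Optional[Span]]:
    """Step 1: for each word on `side` (0 = source, 1 = target), record the
    min and max index it aligns to on the other side (None if unaligned)."""
    spans: List[Optional[Span]] = [None] * length
    for link in links:
        word, other = link[side], link[1 - side]
        if spans[word] is None:
            spans[word] = (other, other)
        else:
            lo, hi = spans[word]
            spans[word] = (min(lo, other), max(hi, other))
    return spans


def extraction_set(links: Set[Link], src_len: int, tgt_len: int,
                   max_len: int = 3) -> Set[Bispan]:
    """Step 2: keep a bispan iff every aligned word inside it aligns inside it.
    Assumptions (not from the slides): unaligned words are free, each bispan
    must contain at least one link, and phrases are at most `max_len` long."""
    src_spans = word_to_span(links, src_len, 0)
    tgt_spans = word_to_span(links, tgt_len, 1)
    result: Set[Bispan] = set()
    for g in range(src_len):
        for h in range(g + 1, min(src_len, g + max_len) + 1):
            for k in range(tgt_len):
                for l in range(k + 1, min(tgt_len, k + max_len) + 1):
                    src_ok = all(s is None or (k <= s[0] and s[1] < l)
                                 for s in src_spans[g:h])
                    tgt_ok = all(s is None or (g <= s[0] and s[1] < h)
                                 for s in tgt_spans[k:l])
                    has_link = any(s is not None for s in src_spans[g:h])
                    if src_ok and tgt_ok and has_link:
                        result.add((g, h, k, l))
    return result


# Source: 过去(0) 两(1) 年(2) 中(3); target: In(0) the(1) past(2) two(3) years(4).
# Links are an assumption read off the glosses past/two/year/in.
links = {(0, 2), (1, 3), (2, 4), (3, 0)}
bispans = extraction_set(links, src_len=4, tgt_len=5)
for b in sorted(bispans):
    print(b)
```

The resulting set contains both 过去 ⇔ "past" and the larger 过去 两 ⇔ "past two", so overlapping and nested phrase pairs coexist, which is the property the extraction-set view is meant to capture.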
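The training criterion on the MIRA slide (smallest change to w that makes the gold outscore the guess by a loss-scaled margin) has a well-known closed form when there is a single guess. The sketch below uses that 1-best form; the slides do not give the exact update, so the function name `mira_update`, the sparse-dict feature representation, and the optional step-size cap `C` are all assumptions made for illustration.

```python
from typing import Dict


def mira_update(w: Dict[str, float],
                gold_feats: Dict[str, float],
                guess_feats: Dict[str, float],
                loss: float,
                C: float = float("inf")) -> Dict[str, float]:
    """Return new weights closest to `w` (in L2) such that
    score(gold) - score(guess) >= loss, with the step size capped at C."""
    # Feature difference between the gold and the guessed extraction set.
    keys = set(gold_feats) | set(guess_feats)
    delta = {f: gold_feats.get(f, 0.0) - guess_feats.get(f, 0.0) for f in keys}
    norm_sq = sum(v * v for v in delta.values())
    if norm_sq == 0.0:
        return dict(w)  # identical feature vectors: nothing to update
    # Current margin of gold over guess under w.
    margin = sum(w.get(f, 0.0) * v for f, v in delta.items())
    # Step size: zero if the margin already exceeds the loss.
    tau = min(C, max(0.0, loss - margin) / norm_sq)
    new_w = dict(w)
    for f, v in delta.items():
        new_w[f] = new_w.get(f, 0.0) + tau * v
    return new_w


# Toy usage with hypothetical feature names.
w0: Dict[str, float] = {}
w1 = mira_update(w0, {"in_dictionary": 1.0}, {"crosses_link": 1.0}, loss=0.5)
print(w1)
```

After the update, the gold's score exceeds the guess's by exactly the loss whenever the cap `C` is not active. In the setting described on the slide, the loss would be derived from the bispan F-score of the guess against the gold.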

INFERENCE

Inference: An ITG Parser

- ITG captures some bispans

RESULTS

Experimental Setup

- Chinese-to-English newswire
- Parallel corpus: 11.3 million words; sentences length ≤ 40
- Supervised data: 150 training & 191 test sentences (NIST '02)
- Unsupervised Model: Jointly trained HMM (Berkeley Aligner)
- MT systems: Tuned and tested on NIST '04 and '05

Baselines and Limited Systems

- HMM: Joint training & competitive posterior decoding
  - Source of many features for supervised models
  - State-of-the-art unsupervised baseline
- ITG: Supervised ITG aligner with block terminals
  - Re-implementation of Haghighi et al., 2009
  - State-of-the-art supervised baseline
- Coarse: Supervised block ITG + possible alignments
  - Coarse pass of full extraction set model

Word Alignment Performance

| System | Precision | Recall | 1 - AER |
|--------|-----------|--------|---------|
| HMM    | 84.0      | 76.9   | 80.4    |
| ITG    | 83.4      | 83.8   | 83.6    |
| Coarse | 82.2      | 84.2   | 83.1    |
| Full   | 84.7      | 84.0   | 84.4    |

Extracted Bispan Performance

| System | Precision | Recall | F1   | F5   |
|--------|-----------|--------|------|------|
| HMM    | 69.5      | 59.5   | 64.1 | 59.9 |
| ITG    | 75.8      | 62.3   | 68.4 | 62.8 |
| Coarse | 70.0      | 72.9   | 71.4 | 72.8 |
| Full   | 69.0      | 74.2   | 71.6 | 74.0 |

Translation Performance (BLEU)

| System | Moses | Joshua |
|--------|-------|--------|
| HMM    | 33.2  | 34.5   |
| ITG    | 33.6  | 34.7   |
| Coarse | 34.2  | 35.7   |
| Full   | 34.4  | 35.9   |

Supervised conditions also included HMM alignments
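The bispan results report F1 and F5 alongside precision and recall. The slides do not define F5; the sketch below assumes the standard F-beta measure with beta = 5, which weights recall much more heavily than precision. The precision and recall values used are the HMM bispan figures as read from the chart, so treat them as an assumption about how the chart's numbers line up.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Standard F-beta: weighted harmonic mean of precision and recall.
    beta > 1 favors recall; beta = 1 gives the usual F1."""
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1.0 + b2) * precision * recall / (b2 * precision + recall)


# HMM baseline on extracted bispans (values read from the slide's chart).
hmm_precision, hmm_recall = 69.5, 59.5
hmm_f1 = f_beta(hmm_precision, hmm_recall, beta=1.0)
hmm_f5 = f_beta(hmm_precision, hmm_recall, beta=5.0)
print(f"F1 = {hmm_f1:.2f}, F5 = {hmm_f5:.2f}")
```

Because F5 sits close to recall, a system can gain several F5 points from higher recall even when its precision drops, which matches the pattern between the ITG and Full rows. Small differences from the reported figures are expected, since the chart's precision and recall are themselves rounded.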
