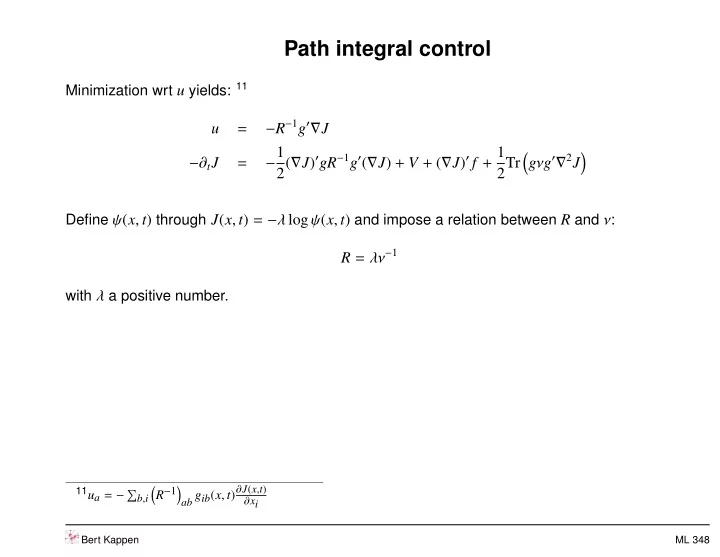

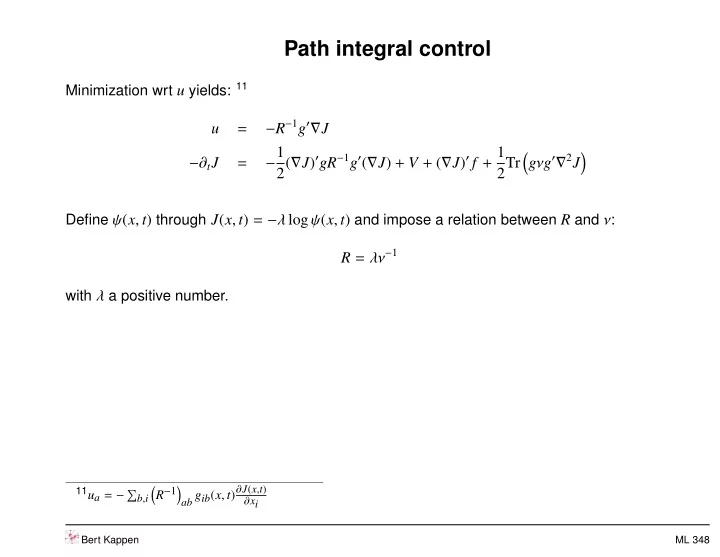

Path integral control

Minimization with respect to $u$ yields (see footnote 11):

$$u = -R^{-1} g' \nabla J$$

$$-\partial_t J = -\frac{1}{2} (\nabla J)' g R^{-1} g' (\nabla J) + V + (\nabla J)' f + \frac{1}{2} \mathrm{Tr}\left( g \nu g' \nabla^2 J \right)$$

Define $\psi(x,t)$ through $J(x,t) = -\lambda \log \psi(x,t)$ and impose a relation between $R$ and $\nu$: $R = \lambda \nu^{-1}$, with $\lambda$ a positive number.

Footnote 11: $u_a = -\sum_{b,i} \left(R^{-1}\right)_{ab} g_{ib}(x,t) \frac{\partial J(x,t)}{\partial x_i}$

Bert Kappen ML 348

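Path integral control

Then the HJB becomes linear in $\psi$ (see footnote 12):

$$-\partial_t \psi = \left( -\frac{V}{\lambda} + f' \nabla + \frac{1}{2} \mathrm{Tr}\left( g \nu g' \nabla^2 \right) \right) \psi$$

with end condition $\psi(x,T) = \exp(-\phi(x)/\lambda)$.

Footnote 12: We sketch the derivation for $g = 1$.

$$-\frac{1}{2} (\nabla J)' R^{-1} (\nabla J) + \frac{1}{2} \mathrm{Tr}\left( \nu \nabla^2 J \right) = -\frac{1}{2} \sum_{ij} \nabla_i J \, R^{-1}_{ij} \nabla_j J + \frac{1}{2} \lambda \sum_{ij} R^{-1}_{ij} \nabla_{ij} J$$

$$= \frac{1}{2} \sum_{ij} R^{-1}_{ij} \left( -\nabla_i J \nabla_j J + \lambda \nabla_{ij} J \right) = \frac{1}{2} \sum_{ij} R^{-1}_{ij} \left( -\lambda^2 \frac{1}{\psi} \nabla_{ij} \psi \right)$$

since

$$-\nabla_i J \nabla_j J = -\lambda^2 \frac{1}{\psi^2} \nabla_i \psi \nabla_j \psi$$

$$\nabla_{ij} J = -\lambda \nabla_i \nabla_j \log \psi = -\lambda \nabla_i \left( \frac{1}{\psi} \nabla_j \psi \right) = \lambda \frac{1}{\psi^2} \nabla_i \psi \nabla_j \psi - \lambda \frac{1}{\psi} \nabla_{ij} \psi$$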

Path integral control

We identify $\psi(x,t) \propto p(z,T|x,t)$. Then the linear Bellman equation

$$-\partial_t \psi = \left( -\frac{V}{\lambda} + f' \nabla + \frac{1}{2} \mathrm{Tr}\left( g \nu g' \nabla^2 \right) \right) \psi$$

can be interpreted as a Kolmogorov backward equation for the process

$$dx_i = f_i(x,t)\,dt + \sum_a g_{ia}(x,t)\,d\xi_a$$

$x(t) = \dagger$ with probability $V(x,t)\,dt/\lambda$

$x(T) = \dagger$ with probability $\phi(x)/\lambda$

The corresponding forward equation is

$$\partial_t \rho = -\frac{V}{\lambda} \rho - \nabla (f \rho) + \frac{1}{2} \mathrm{Tr}\left( \nabla^2 g \nu g' \rho \right)$$

with $\rho(x,t) = p(x,t|z,0)$ and $\rho(x,0) = \delta(x - z)$.

Feynman-Kac formula

Denote by $Q(\tau|x,t)$ the distribution over uncontrolled trajectories that start at $x, t$:

$$dx = f(x,t)\,dt + g(x,t)\,d\xi$$

with $\tau$ a trajectory $x(t \to T)$. Then

$$\psi(x,t) = \int dQ(\tau|x,t) \exp\left( -\frac{S(\tau)}{\lambda} \right)$$

$$S(\tau) = \phi(x(T)) + \int_t^T ds\, V(x(s), s)$$

$\psi$ can be computed by forward sampling the uncontrolled process.
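The forward-sampling statement can be illustrated with a minimal Monte Carlo sketch. It assumes a scalar state, an Euler-Maruyama discretisation, and noise variance `nu * dt` per step. The function name `estimate_psi` and all parameter names are hypothetical, not from the slides. The usage example picks $f = 0$, $g = 1$, $V = 0$, $\phi(x) = x^2/2$, because the Gaussian integral then gives a closed form to compare against.

```python
import math
import random


def estimate_psi(x, t, T, f, g, V, phi, nu=1.0, lam=1.0,
                 n_samples=5000, n_steps=20, seed=0):
    """Monte Carlo estimate of psi(x,t) = E_Q[exp(-S(tau)/lam)], scalar state.

    Hypothetical helper: trajectories follow the uncontrolled dynamics
    dx = f dt + g dxi, discretised with Euler-Maruyama.
    """
    rng = random.Random(seed)
    dt = (T - t) / n_steps
    total = 0.0
    for _ in range(n_samples):
        xs, s, path_cost = x, t, 0.0
        for _ in range(n_steps):
            path_cost += V(xs, s) * dt                # left-point rule for the integral of V
            dxi = rng.gauss(0.0, math.sqrt(nu * dt))  # noise increment with variance nu*dt
            xs += f(xs, s) * dt + g(xs, s) * dxi      # uncontrolled step (u = 0)
            s += dt
        total += math.exp(-(path_cost + phi(xs)) / lam)  # exp(-S/lam), S = phi(x_T) + int V
    return total / n_samples


# Usage: free diffusion with quadratic end cost, started at x = 0.5, t = 0, T = 1.
psi_hat = estimate_psi(0.5, 0.0, 1.0,
                       f=lambda x, s: 0.0, g=lambda x, s: 1.0,
                       V=lambda x, s: 0.0, phi=lambda x: 0.5 * x * x)
# Closed form for this special case: X_T ~ N(x, 1), so
# psi = exp(-x^2 / 4) / sqrt(2) with x = 0.5.
psi_exact = math.exp(-0.0625) / math.sqrt(2.0)
print(psi_hat, psi_exact)
```

The estimate carries Monte Carlo error of order $1/\sqrt{N}$, so the two printed numbers should agree only approximately.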

Alternative derivation

Uncontrolled dynamics specifies a distribution $q(\tau|x,t)$ over trajectories $\tau$ from $x, t$.

Cost for trajectory $\tau$ is $S(\tau|x,t) = \phi(x_T) + \int_t^T ds\, V(x_s, s)$.

Find the optimal distribution $p(\tau|x,t)$ that minimizes $E_p S$ and is 'close' to $q(\tau|x,t)$.

KL control

Find $p^*$ that minimizes

$$C(p) = KL(p|q) + E_p S, \qquad KL(p|q) = \int d\tau\, p(\tau|x,t) \log \frac{p(\tau|x,t)}{q(\tau|x,t)}$$

The optimal solution is given by

$$p^*(\tau|x,t) = \frac{1}{\psi(x,t)} q(\tau|x,t) \exp(-S(\tau|x,t))$$

$$\psi(x,t) = \int d\tau\, q(\tau|x,t) \exp(-S(\tau|x,t)) = E_q e^{-S}$$

The optimal cost is:

$$C(p^*) = -\log \psi(x,t)$$
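One way to see why this $p^*$ is optimal is to rewrite the cost as a single KL divergence. The short derivation below is a sketch that drops the conditioning on $x, t$ for brevity and uses only the definitions on this slide.

```latex
\begin{align*}
C(p) &= \int d\tau\, p(\tau) \left[ \log \frac{p(\tau)}{q(\tau)} + S(\tau) \right]
      = \int d\tau\, p(\tau) \log \frac{p(\tau)}{q(\tau)\, e^{-S(\tau)}} \\
     &= \int d\tau\, p(\tau) \log \frac{p(\tau)}{\psi\, p^*(\tau)}
      = KL(p \,|\, p^*) - \log \psi .
\end{align*}
```

Since $KL(p|p^*) \geq 0$ with equality only for $p = p^*$, the minimum is attained at $p^*$ and equals $-\log \psi$.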

Controlled diffusions

In the case of controlled diffusions, $p(\tau|x,t)$ is parametrised by functions $u(x,t)$, and $q(\tau|x,t)$ corresponds to $u(x,t) = 0$:

$$dX_t = f(X_t,t)\,dt + g(X_t,t)\left( u(X_t,t)\,dt + dW_t \right), \qquad E(dW_i\, dW_j) = \nu_{ij}\,dt$$

$$C(p) = E_p \left( \int dt\, \frac{1}{2} u(X_t,t)' \nu^{-1} u(X_t,t) + S(\tau|x,t) \right)$$

$J(x,t) = -\log \psi(x,t)$ is the solution of the Bellman equation.

$p^*$ is generated by the optimal control $u^*(x,t)$:

$$u^*(x,t)\,dt = E_{p^*}(dW_t) = \frac{E_q\left( dW\, e^{-S} \right)}{E_q\left( e^{-S} \right)}$$

$\psi$ and $u^*$ can be computed by forward sampling from $q$.
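The ratio formula for $u^*$ can be tried out numerically. The sketch below assumes the simplest scalar case, $f = 0$, $g = 1$, $V = 0$, so that $S = \phi(X_T)$, and weights the first noise increment of each uncontrolled trajectory by $e^{-S}$. The function name `estimate_u_star` and its parameters are hypothetical. The usage example takes $\phi(x) = x^2/2$, for which the Gaussian integral gives $\psi$ in closed form and hence $u^* = -\nu\,\partial_x J = -\nu x / (1 + \nu (T - t))$.

```python
import math
import random


def estimate_u_star(x, t, T, phi, nu=1.0, n_samples=20000, n_steps=10, seed=0):
    """Estimate u*(x,t) = E_q[dW e^{-S}] / (dt E_q[e^{-S}]).

    Hypothetical helper for dX = u dt + dW with V = 0, so S = phi(X_T).
    Trajectories are sampled from the uncontrolled process (u = 0).
    """
    rng = random.Random(seed)
    dt = (T - t) / n_steps
    sd = math.sqrt(nu * dt)              # E[dW^2] = nu * dt
    num = 0.0
    den = 0.0
    for _ in range(n_samples):
        first_dw = rng.gauss(0.0, sd)    # noise increment at the current time t
        xs = x + first_dw
        for _ in range(n_steps - 1):     # remaining uncontrolled steps up to T
            xs += rng.gauss(0.0, sd)
        w = math.exp(-phi(xs))           # trajectory weight e^{-S}
        num += first_dw * w
        den += w
    return num / (den * dt)


# Usage: quadratic end cost, x = 1, t = 0, T = 1, nu = 1.
u_hat = estimate_u_star(1.0, 0.0, 1.0, phi=lambda z: 0.5 * z * z)
u_exact = -1.0 / (1.0 + 1.0)             # -nu * x / (1 + nu * (T - t))
print(u_hat, u_exact)
```

The estimator divides a noisy average by `dt`, so its variance grows as the time step shrinks; the sample size here is chosen only to keep the illustration fast.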

Recap of the main idea

[Figure: plot over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]

Consider a stochastic dynamical system

$$dX_t = f(X_t, u)\,dt + dW_t, \qquad E(dW_{t,i}\, dW_{t,j}) = \nu_{ij}\,dt$$

Given $X_0$, find the control function $u(x,t)$ that minimizes the expected future cost

$$C = E \left( \phi(X_T) + \int_0^T dt\, R(X_t, u(X_t,t)) \right)$$

Control theory

[Figure: two panels, each plotted over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]

Standard approach: define $J(x,t)$ as the optimal cost-to-go from $x, t$.

$$J(x,t) = \min_{u_{t:T}} E_u \left( \phi(X_T) + \int_t^T dt\, R(X_t, u(X_t,t)) \,\Big|\, X_t = x \right)$$

$J$ satisfies a partial differential equation

$$-\partial_t J(x,t) = \min_u \left( R(x,u) + f(x,u) \nabla_x J(x,t) + \frac{1}{2} \nu \nabla_x^2 J(x,t) \right), \qquad J(x,T) = \phi(x)$$

with $u = u(x,t)$. This is the HJB equation. The optimal control $u^*(x,t)$ defines a distribution over trajectories $p^*(\tau)$ $(= p(\tau|x_0, 0))$.

Path integral control theory

[Figure: plot over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]

$$dX_t = f(X_t)\,dt + g(X_t)\left( u(X_t,t)\,dt + dW_t \right), \qquad X_0 = x_0$$

Here the drift $f(X_t)\,dt + g(X_t) u(X_t,t)\,dt$ plays the role of $f(X_t, u)\,dt$.

The goal is to find the function $u(x,t)$ that minimizes

$$C = E \left( \phi(X_T) + \int_0^T dt\, \underbrace{V(X_t,t) + \frac{1}{2} u(X_t,t)^2}_{R(X_t, u(X_t,t))} \right) = E \left( S(\tau) + \int_0^T dt\, \frac{1}{2} u(X_t,t)^2 \right)$$

$$S(\tau) = \phi(X_T) + \int_0^T dt\, V(X_t,t)$$

Path integral control theory

[Figure: two panels, each plotted over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]

Equivalent formulation: find the distribution over trajectories $p$ that minimizes (see footnote 13)

$$C(p) = \int d\tau\, p(\tau) \left( S(\tau) + \log \frac{p(\tau)}{q(\tau)} \right)$$

$q(\tau|x_0, 0)$ is the distribution over uncontrolled trajectories.

The optimal solution is given by $p^*(\tau) = \frac{1}{\psi} q(\tau) e^{-S(\tau)}$.

Footnote 13: $\int d\tau\, p(\tau) \log \frac{p(\tau)}{q(\tau)} = E_u \int_0^T dt\, \frac{1}{2} u(X_t,t)^2$.

Path integral control theory

[Figure: two panels, each plotted over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]

Equivalent formulation: find the distribution over trajectories $p$ that minimizes

$$C(p) = \int d\tau\, p(\tau) \left( S(\tau) + \log \frac{p(\tau)}{q(\tau)} \right)$$

$q(\tau|x_0, 0)$ is the distribution over uncontrolled trajectories.

The optimal solution is given by $p^*(\tau) = \frac{1}{\psi} q(\tau) e^{-S(\tau)} = p(\tau|u^*)$.

Equivalence of optimal control and discounted cost (Girsanov)

Path integral control theory

[Figure: two panels, each plotted over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]

The optimal control cost is $C(p^*) = -\log \psi = J(x_0, 0)$ with

$$\psi = \int d\tau\, q(\tau) e^{-S(\tau)} = E_q e^{-S}$$

$J(x,t)$ can be computed by forward sampling from $q$.

Delayed choice

Time-to-go $T = 2 - t$.

[Figure, left panel: $J(x,t)$ versus $x$ for time-to-go $T = 2$, $T = 1$ and $T = 0.5$. Right panel: plot over $t \in [0, 2]$ with vertical axis from $-3$ to $3$.]

$$J(x,t) = -\nu \log E_q \exp(-\phi(X_2)/\nu)$$

The decision is made at time-to-go $T = \frac{1}{\nu}$.

Delayed choice

Time-to-go $T = 2 - t$.

[Figure, left panel: $J(x,t)$ versus $x$ for time-to-go $T = 2$, $T = 1$ and $T = 0.5$. Right panel: plot over $t \in [0, 2]$ with vertical axis from $-3$ to $3$.]

$$J(x,t) = -\nu \log E_q \exp(-\phi(X_2)/\nu)$$

"When the future is uncertain, delay your decisions."


Delayed choice (details)

$$dX_t = u\,dt + dW_t, \qquad E\, dW_t^2 = \nu\,dt$$

$V = 0$, the path cost is $\frac{1}{2} u^2$, and the end cost $\phi(z = \pm 1) = 0$, $\phi(z) = \infty$ otherwise, encodes two targets at $z = \pm 1$ at $t = T$.

PI recipe:

1. Compute

$$\psi(x,t) = \int dQ(\tau|x,t) \exp(-S(\tau)/\lambda), \qquad S(\tau) = \phi(x(T))$$

$$\psi(x,t) = \int dz\, q(z,T|x,t) \exp(-\phi(z)/\lambda) = q(1,T|x,t) + q(-1,T|x,t)$$

$$q(z,T|x,t) = \mathcal{N}(z \,|\, x, \nu(T-t))$$

2. Compute (with $\lambda = \nu$, since $R = 1$, and up to terms that do not depend on $x$)

$$J(x,t) = -\lambda \log \psi(x,t) = \frac{1}{T-t} \left( \frac{1}{2} x^2 - \nu (T-t) \log 2 \cosh \frac{x}{\nu (T-t)} \right)$$

3. Compute

$$u(x,t) = -\nabla J(x,t) = \frac{1}{T-t} \left( \tanh \frac{x}{\nu (T-t)} - x \right)$$

[Figure: 2 x 2 grid of panels with column titles "stochastic" and "deterministic", each plotted over $t \in [0, 2]$ with vertical axis from $-2$ to $2$]
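The closed-form results of the recipe are easy to evaluate directly. The sketch below assumes $T = 2$ and $\nu = 1$ as default values (the slides do not fix $\nu$), and the function names are hypothetical. It also evaluates the curvature of $J$ at $x = 0$, which by differentiating the expression for $J$ twice is $\frac{1}{T-t}\left(1 - \frac{1}{\nu (T-t)}\right)$. This changes sign at time-to-go $1/\nu$, which is where the single minimum at $x = 0$ splits into two.

```python
import math


def cost_to_go(x, t, T=2.0, nu=1.0):
    """J(x,t) for the two-target problem, up to x-independent terms."""
    tau = T - t  # time-to-go
    return (0.5 * x * x - nu * tau * math.log(2.0 * math.cosh(x / (nu * tau)))) / tau


def control(x, t, T=2.0, nu=1.0):
    """Optimal control u(x,t) = -dJ/dx = (tanh(x / (nu*tau)) - x) / tau."""
    tau = T - t
    return (math.tanh(x / (nu * tau)) - x) / tau


def curvature_at_origin(t, T=2.0, nu=1.0):
    """Second derivative of J at x = 0: positive means x = 0 is a minimum."""
    tau = T - t
    return (1.0 - 1.0 / (nu * tau)) / tau


# Early (time-to-go 2 > 1/nu): control pushes a small positive x back toward 0.
print(control(0.1, 0.0), curvature_at_origin(0.0))
# Late (time-to-go 0.5 < 1/nu): control pushes the same x toward the target at +1.
print(control(0.1, 1.5), curvature_at_origin(1.5))
```

With these defaults the control at $x = 0.1$ is negative at $t = 0$ and positive at $t = 1.5$, which is the delayed-choice behaviour: steer toward the middle while the time-to-go exceeds $1/\nu$, commit to a target afterwards.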

Coordination of UAVs

(AAMAS 2015.mp4)

Approximately 10,000 trajectories per iteration, 3 iterations per second.

Video at: http://www.snn.ru.nl/~bertk/control_theory/PI_quadrotors.mp4

Gomez et al. 2015

Coordination of UAVs

Chao Xu, ACC 2017

Importance sampling and control

[Figure: two panels, each plotted over $t \in [0, 2]$ with vertical axis from $-10$ to $10$]

$$\psi(x,t) = E_q e^{-S}, \qquad S(\tau|x,t) = \phi(x_T) + \int_t^T ds\, V(x_s, s)$$

Sampling is 'correct' but inefficient.
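One common way to quantify this inefficiency is the effective sample size of the weights $e^{-S/\lambda}$. This diagnostic is not taken from the slides; it is a standard importance-sampling quantity used here as an assumption. The hypothetical helper below returns the fraction $(\sum_k w_k)^2 / (N \sum_k w_k^2)$, which is 1 when all trajectories contribute equally and close to $1/N$ when a single low-cost trajectory dominates.

```python
import math


def effective_sample_fraction(costs, lam=1.0):
    """Effective sample size divided by N for weights w_k = exp(-S_k / lam).

    Hypothetical diagnostic: `costs` holds the trajectory costs S_k of
    samples drawn from the uncontrolled process q.
    """
    s_min = min(costs)  # subtract the minimum cost to avoid underflow; the ratio is unchanged
    w = [math.exp(-(s - s_min) / lam) for s in costs]
    return sum(w) ** 2 / (len(w) * sum(wk * wk for wk in w))


# Equal costs: every sample counts.
print(effective_sample_fraction([2.0, 2.0, 2.0]))
# One trajectory is far cheaper than the rest: it carries almost all the weight.
print(effective_sample_fraction([0.0, 100.0, 100.0, 100.0]))
```

A small fraction means the estimate of $\psi$ rests on very few trajectories, even though the estimator is unbiased.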

"To compute or not to compute, that is the question"

There are two extreme approaches to compute actions:

• precompute the appropriate action $u(x)$ for any possible situation $x$. Complex to learn and to store. Fast to execute.
• compute the appropriate action $u(x)$ for the current situation $x$. Low learning and storage cost. Slow execution.

Intuitively, one can imagine that the most efficient approach is to combine both ideas (like 'just-in-time' manufacturing):

• precompute 'basic motor skills', the 'halffabrikaat' (Dutch for semi-finished product)
• compute the appropriate action $u(x)$ from the basic motor skills
