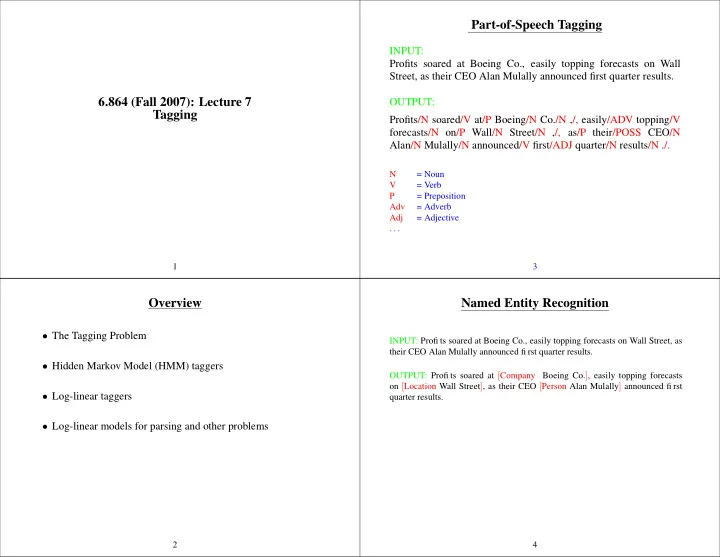

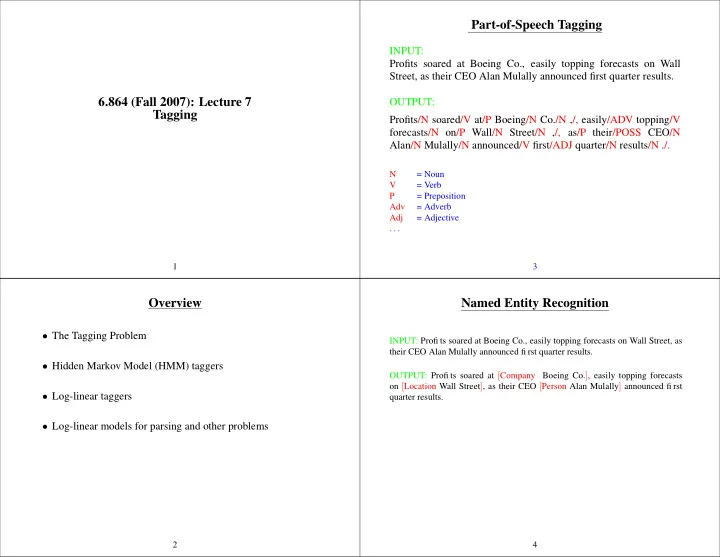

Part-of-Speech Tagging INPUT: Profits soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced first quarter results. 6.864 (Fall 2007): Lecture 7 OUTPUT: Tagging Profits/N soared/V at/P Boeing/N Co./N ,/, easily/ADV topping/V forecasts/N on/P Wall/N Street/N ,/, as/P their/POSS CEO/N Alan/N Mulally/N announced/V first/ADJ quarter/N results/N ./. N = Noun V = Verb P = Preposition Adv = Adverb Adj = Adjective . . . 1 3 Overview Named Entity Recognition • The Tagging Problem INPUT: Profi ts soared at Boeing Co., easily topping forecasts on Wall Street, as their CEO Alan Mulally announced fi rst quarter results. • Hidden Markov Model (HMM) taggers OUTPUT: Profi ts soared at [ Company Boeing Co. ] , easily topping forecasts on [ Location Wall Street ] , as their CEO [ Person Alan Mulally ] announced fi rst • Log-linear taggers quarter results. • Log-linear models for parsing and other problems 2 4

Named Entity Extraction as Tagging Two Types of Constraints INPUT: Influential/JJ members/NNS of/IN the/DT House/NNP Ways/NNP and/CC Means/NNP Profits soared at Boeing Co., easily topping forecasts on Wall Committee/NNP introduced/VBD legislation/NN that/WDT would/MD restrict/VB Street, as their CEO Alan Mulally announced first quarter results. how/WRB the/DT new/JJ savings-and-loan/NN bailout/NN agency/NN can/MD raise/VB capital/NN ./. OUTPUT: • “Local”: e.g., can is more likely to be a modal verb MD rather Profits/NA soared/NA at/NA Boeing/SC Co./CC ,/NA easily/NA than a noun NN topping/NA forecasts/NA on/NA Wall/SL Street/CL ,/NA as/NA their/NA CEO/NA Alan/SP Mulally/CP announced/NA first/NA • “Contextual”: e.g., a noun is much more likely than a verb to quarter/NA results/NA ./NA follow a determiner NA = No entity • Sometimes these preferences are in conflict: SC = Start Company The trash can is in the garage CC = Continue Company SL = Start Location CL = Continue Location . . . 5 7 Our Goal A Naive Approach Training set: • Use a machine learning method to build a “classifier” that 1 Pierre/NNP Vinken/NNP ,/, 61/CD years/NNS old/JJ ,/, will/MD join/VB the/DT maps each word individually to its tag board/NN as/IN a/DT nonexecutive/JJ director/NN Nov./NNP 29/CD ./. 2 Mr./NNP Vinken/NNP is/VBZ chairman/NN of/IN Elsevier/NNP N.V./NNP ,/, the/DT • A problem: does not take contextual constraints into account Dutch/NNP publishing/VBG group/NN ./. 3 Rudolph/NNP Agnew/NNP ,/, 55/CD years/NNS old/JJ and/CC chairman/NN of/IN Consolidated/NNP Gold/NNP Fields/NNP PLC/NNP ,/, was/VBD named/VBN a/DT nonexecutive/JJ director/NN of/IN this/DT British/JJ industrial/JJ conglomerate/NN ./. . . . 38,219 It/PRP is/VBZ also/RB pulling/VBG 20/CD people/NNS out/IN of/IN Puerto/NNP Rico/NNP ,/, who/WP were/VBD helping/VBG Huricane/NNP Hugo/NNP victims/NNS ,/, and/CC sending/VBG them/PRP to/TO San/NNP Francisco/NNP instead/RB ./. • From the training set, induce a function/algorithm that maps new sentences to their tag sequences. 6 8

How to model P ( T, S ) ? Overview • The Tagging Problem A Trigram HMM Tagger: • Hidden Markov Model (HMM) taggers P ( T, S ) = P ( END | w 1 . . . w n , t 1 . . . t n ) × � n j =1 [ P ( t j | w 1 . . . w j − 1 , t 1 . . . t j − 1 ) × – Basic definitions P ( w j | w 1 . . . w j − 1 , t 1 . . . t j )] Chain rule = P ( END | t n − 1 , t n ) × – Parameter estimation � n j =1 [ P ( t j | t j − 2 , t j − 1 ) × P ( w j | t j )] Independence assumptions – The Viterbi Algorithm • END is a special tag that terminates the sequence • Log-linear taggers • We take t 0 = t − 1 = *, where * is a special “padding” symbol • Log-linear models for parsing and other problems 9 11 Hidden Markov Models Independence Assumptions in the Trigram HMM Tagger • We have an input sentence S = w 1 , w 2 , . . . , w n • 1st independence assumption: each tag only depends on ( w i is the i ’th word in the sentence) previous two tags • We have a tag sequence T = t 1 , t 2 , . . . , t n P ( t j | w 1 . . . w j − 1 , t 1 . . . t j − 1 ) = P ( t j | t j − 2 , t j − 1 ) ( t i is the i ’th tag in the sentence) • 2nd independence assumption: each word only depends on • We’ll use an HMM to define underlying tag P ( t 1 , t 2 , . . . , t n , w 1 , w 2 , . . . , w n ) P ( w j | w 1 . . . w j − 1 , t 1 . . . t j ) = P ( w j | t j ) for any sentence S and tag sequence T of the same length. • Then the most likely tag sequence for S is T ∗ = argmax T P ( T, S ) 10 12

How to model P ( T, S ) ? An Example • S = the boy laughed Hispaniola/NNP quickly/RB became/VB an/DT important/JJ base/Vt • T = DT NN VBD Probability of generating base/Vt : P ( Vt | DT, JJ ) × P ( base | Vt ) P ( T, S ) = P ( DT | START, START ) × P ( NN | START, DT ) × P ( VBD | DT, NN ) × P ( END | NN, VBD ) × P ( the | DT ) × P ( boy | NN ) × P ( laughed | VBD ) 13 15 Why the Name? Overview n n • The Tagging Problem � � P ( T, S ) = P ( END | t n − 1 , t n ) P ( t j | t j − 2 , t j − 1 ) × P ( w j | t j ) j =1 j =1 • Hidden Markov Model (HMM) taggers � �� � � �� � w j ’s are observed Hidden Markov Chain – Basic definitions – Parameter estimation – The Viterbi Algorithm • Log-linear taggers • Log-linear models for parsing and other problems 14 16

Smoothed Estimation Dealing with Low-Frequency Words: An Example [ Bikel et. al 1999 ] (named-entity recognition) λ 1 × Count ( Dt, JJ, Vt ) P ( Vt | DT, JJ ) = Word class Example Intuition Count ( Dt, JJ ) + λ 2 × Count ( JJ, Vt ) twoDigitNum 90 Two digit year fourDigitNum 1990 Four digit year Count ( JJ ) containsDigitAndAlpha A8956-67 Product code + λ 3 × Count ( Vt ) containsDigitAndDash 09-96 Date Count () containsDigitAndSlash 11/9/89 Date containsDigitAndComma 23,000.00 Monetary amount containsDigitAndPeriod 1.00 Monetary amount,percentage othernum 456789 Other number λ 1 + λ 2 + λ 3 = 1 , and for all i , λ i ≥ 0 allCaps BBN Organization capPeriod M. Person name initial fi rstWord fi rst word of sentence no useful capitalization information initCap Sally Capitalized word lowercase can Uncapitalized word Count ( Vt, base ) P ( base | Vt ) = other , Punctuation marks, all other words Count ( Vt ) 17 19 Dealing with Low-Frequency Words: An Example Dealing with Low-Frequency Words Profi ts/NA soared/NA at/NA Boeing/SC Co./CC ,/NA easily/NA topping/NA A common method is as follows: forecasts/NA on/NA Wall/SL Street/CL ,/NA as/NA their/NA CEO/NA Alan/SP Mulally/CP announced/NA fi rst/NA quarter/NA results/NA ./NA • Step 1 : Split vocabulary into two sets ⇓ firstword /NA soared/NA at/NA initCap /SC Co./CC ,/NA easily/NA = words occurring ≥ 5 times in training Frequent words lowercase /NA forecasts/NA on/NA initCap /SL Street/CL ,/NA as/NA Low frequency words = all other words their/NA CEO/NA Alan/SP initCap /CP announced/NA fi rst/NA quarter/NA results/NA ./NA • Step 2 : Map low frequency words into a small, finite set, NA = No entity depending on prefixes, suffixes etc. SC = Start Company CC = Continue Company SL = Start Location CL = Continue Location . . . 18 20

The Viterbi Algorithm: Recursive Definitions Overview • Base case: • The Tagging Problem π [0 , ∗ , ∗ ] = log 1 = 0 π [0 , u, v ] = log 0 = −∞ for all other u, v • Hidden Markov Model (HMM) taggers here ∗ is a special tag padding the beginning of the sentence. – Basic definitions • Recursive case: for i = 1 . . . n , for all u, v , π [ i, u, v ] = t ∈T ∪{∗} { π [ i − 1 , t, u ] + Score ( S, i, t, u, v ) } max – Parameter estimation Backpointers allow us to recover the max probability sequence: – The Viterbi Algorithm BP [ i, u, v ] = argmax t ∈T ∪{∗} { π [ i − 1 , t, u ] + Score ( S, i, t, u, v ) } • Log-linear taggers Where Score ( S, i, t, u, v ) = log P ( v | t, u ) + log P ( w i | v ) Complexity is O ( nk 3 ) , where n = length of sentence, k is number • Log-linear models for parsing and other problems of possible tags 21 23 The Viterbi Algorithm The Viterbi Algorithm: Running Time • Question: how do we calculate the following?: • O ( n |T | 3 ) time to calculate Score ( S, i, t, u, v ) for all i , t , u , v . T ∗ = argmax T log P ( T, S ) • n |T | 2 entries in π to be filled in. • Define n to be the length of the sentence • O ( T ) time to fill in one entry • Define a dynamic programming table • ⇒ O ( n |T | 3 ) time π [ i, u, v ] = maximum log probability of a tag sequence ending in tags u, v at position i • Our goal is to calculate u,v ∈T π [ n, u, v ] max 22 24

Recommend

More recommend