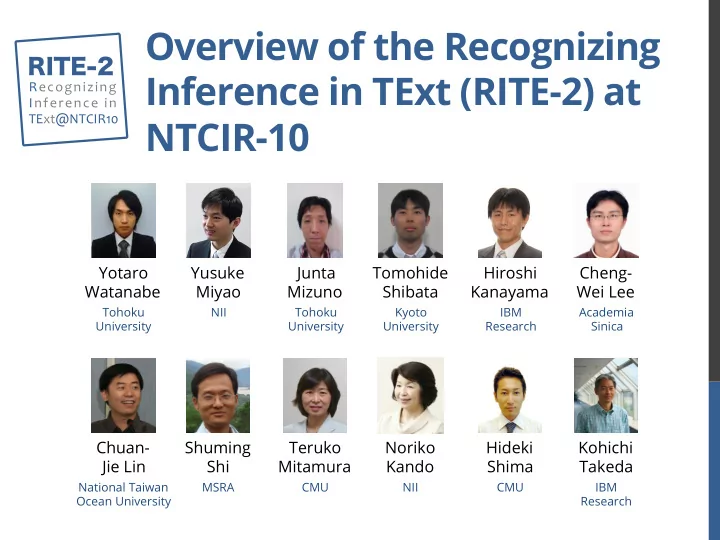

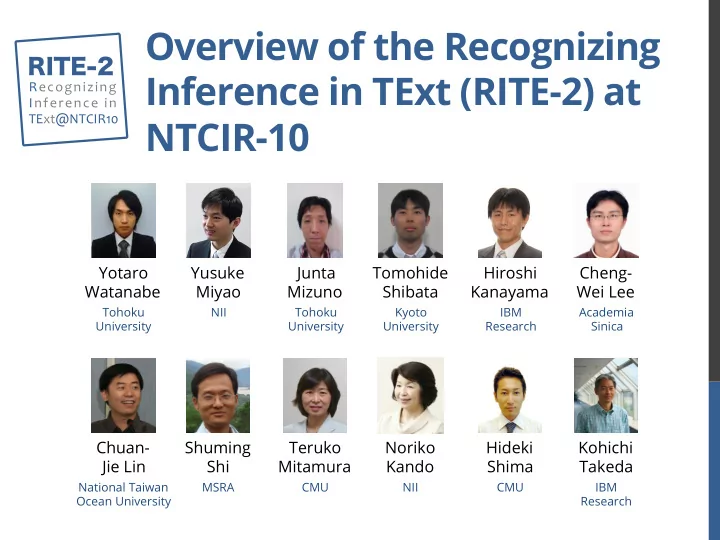

Overview of the Recognizing Inference in TExt (RITE-2) at NTCIR-10

Yotaro Watanabe (Tohoku University), Yusuke Miyao (NII), Junta Mizuno (Tohoku University), Tomohide Shibata (Kyoto University), Hiroshi Kanayama (IBM Research), Cheng-Wei Lee (Academia Sinica), Chuan-Jie Lin (National Taiwan Ocean University), Shuming Shi (MSRA), Teruko Mitamura (CMU), Noriko Kando (NII), Hideki Shima (CMU), Kohichi Takeda (IBM Research)

Overview of RITE-2
• RITE-2 is a generic benchmark task that addresses the kind of semantic inference commonly required in various NLP/IA applications
  t1: The Kamakura Shogunate was considered to have begun in 1192, but the current leading theory is that it was effectively formed in 1185.
  t2: The Kamakura Shogunate began in Japan in the 12th century.
  Can t2 be inferred from t1? (entailment?)
2 The 10th NTCIR Conference

Motivation
• Semantic inference is needed in many Natural Language Processing (NLP) / Information Access (IA) applications
  ➢ Question Answering, Information Retrieval, Information Extraction, Text Summarization, Automatic Evaluation for Machine Translation, Complex Question Answering
• Current entailment recognition systems are not yet mature
  ➢ The highest accuracy on the Japanese BC subtask in NTCIR-9 RITE was only 58%
  ➢ There is still ample room to advance entailment recognition technologies

RITE vs. RITE-2
[Figure: "Pyramid of entailment recognition technology", running from foundation-oriented (bottom) to application-oriented (top). Levels: linguistic phenomenon-level inference (Unit Test: case alternation, quantification, coordination, modification, phrase relations, lexical relations, negation, world knowledge, …); sentence-level inference (BC and MC: entailment? paraphrase? contradiction?); multiple-sentence-level inference over search documents (entailment? contradiction?); applications (QA, IR, biomedical). The figure marks the levels covered by RITE and by RITE-2.]

RITE-2 Subtasks

BC and MC subtasks
  t1: The Kamakura Shogunate was considered to have begun in 1192, but the current leading theory is that it was effectively formed in 1185.
  t2: The Kamakura Shogunate began in Japan in the 12th century.
  BC: Y or N; MC: B, F, C or I
• BC subtask
  ➢ Entailment (t1 entails t2) or Non-Entailment (otherwise)
• MC subtask
  ➢ Bi-directional Entailment (t1 entails t2 & t2 entails t1)
  ➢ Forward Entailment (t1 entails t2 & t2 does not entail t1)
  ➢ Contradiction (t1 contradicts t2, i.e., they cannot both be true at the same time)
  ➢ Independence (otherwise)
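The MC labels are defined through directional entailment plus contradiction, and every MC label determines a BC label: both B and F require t1 to entail t2, so they map to Y, while C and I map to N. The sketch below makes both relationships explicit. The three boolean judgments it takes as input are assumed to come from some entailment recognizer, and the names `MCLabel`, `mc_label` and `mc_to_bc` are hypothetical, not part of any RITE-2 tool.

```python
from enum import Enum


class MCLabel(Enum):
    BIDIRECTIONAL = "B"
    FORWARD = "F"
    CONTRADICTION = "C"
    INDEPENDENCE = "I"


def mc_label(t1_entails_t2: bool, t2_entails_t1: bool, contradicts: bool) -> MCLabel:
    """Combine hypothetical directional judgments into an MC label, per the slide's definitions."""
    if t1_entails_t2 and t2_entails_t1:
        return MCLabel.BIDIRECTIONAL
    if t1_entails_t2:
        return MCLabel.FORWARD
    if contradicts:
        return MCLabel.CONTRADICTION
    # "Otherwise", including the case where only t2 entails t1
    return MCLabel.INDEPENDENCE


def mc_to_bc(label: MCLabel) -> str:
    """B and F both include 't1 entails t2', so they collapse to the BC label Y."""
    return "Y" if label in (MCLabel.BIDIRECTIONAL, MCLabel.FORWARD) else "N"
```

MC has no backward-entailment label, so a pair in which only t2 entails t1 falls under Independence.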

Development of BC and MC data
• Retrieve pairs of sentences <t1, t2>
• Edit the pairs if needed
• For each example, 5 annotators assign its semantic label
• Accept an example if 4 or more annotators assigned the same label to it
→ RITE-2 BC and MC data
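The acceptance rule amounts to a small agreement filter. This is a minimal sketch: `accepted_label` is a hypothetical helper, and its input is assumed to be the list of labels assigned by the five annotators.

```python
from collections import Counter
from typing import Optional, Sequence

MIN_AGREEMENT = 4  # from the slide: 4 or more of the 5 annotators must agree


def accepted_label(labels: Sequence[str], min_agreement: int = MIN_AGREEMENT) -> Optional[str]:
    """Return the most frequent label if enough annotators chose it; None means the example is discarded."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count >= min_agreement else None
```

With five annotators and a threshold of four, at most one label can reach the threshold, so the result is never ambiguous.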

Entrance Exam subtasks (Japanese only)
• Source: entrance exam problems of the National Center Test for University Admission (Daigaku Nyushi Center Shiken)
  t1: スレイマン1世は数多くの軍事的成功を収めてオスマン帝国を最盛期に導いた.
      (Suleiman I achieved many military successes and led the Ottoman Empire to its peak.)
  t2: オスマン帝国ではスレイマン1世の時代が最盛期であった.
      (The Ottoman Empire's peak was during the reign of Suleiman I.)

Entrance Exam subtasks: BC and Search
• Entrance Exam BC
  ➢ Binary classification problem (Entailment or Non-entailment)
  ➢ t1 and t2 are given
• Entrance Exam Search
  ➢ Binary classification problem (Entailment or Non-entailment)
  ➢ Only t2 and a set of documents are given
    ❖ Systems must retrieve relevant sentences from Wikipedia and textbooks to decide the semantic label
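One simple way to approach Entrance Exam Search is to score each retrieved sentence against t2 and answer Y when the best match is strong enough. The sketch below uses word overlap as a stand-in for a real entailment recognizer; the overlap heuristic, the 0.8 threshold and whitespace tokenization are all assumptions for illustration, not the task's prescribed method. Japanese text would first need morphological analysis (e.g., MeCab or Juman) to produce tokens.

```python
from typing import Iterable


def overlap_score(sentence: str, t2: str) -> float:
    """Fraction of t2's tokens that also occur in the sentence (hypothetical whitespace tokenization)."""
    t2_tokens = set(t2.lower().split())
    if not t2_tokens:
        return 0.0
    return len(t2_tokens & set(sentence.lower().split())) / len(t2_tokens)


def exam_search_label(t2: str, retrieved: Iterable[str], threshold: float = 0.8) -> str:
    """Answer Y if any retrieved sentence covers enough of t2; the threshold is an arbitrary assumption."""
    best = max((overlap_score(s, t2) for s in retrieved), default=0.0)
    return "Y" if best >= threshold else "N"
```

Taking the maximum over retrieved sentences reflects the idea that a single supporting sentence from Wikipedia or a textbook can suffice to infer t2.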

UnitTest (Japanese only)
• Motivation
  ➢ Evaluate how well systems handle the linguistic phenomena that affect entailment relations
• Task definition
  ➢ Binary classification problem (same as the BC subtask)
Category: modifier
  t1: In the Meiji Constitution, a clear legal distinction between the Imperial Family and Japan had been allowed.
  t2: In the Meiji Constitution, a distinction between the Imperial Family and Japan had been allowed.
Category: meronymy
  t1: In the Meiji Constitution, a distinction between the Imperial Family and Japan had been allowed.
  t2: In the Meiji Constitution, a distinction between the Emperor and Japan had been allowed.

Development of the UnitTest data
[Figure: BC subtask data → sampling → sampled sentence pairs → break down → UnitTest data; a sampled pair 1: <t1, t2> becomes pairs 1.1: <t1, t2>, 1.2: <t1, t2>, …]
• Procedure
  ➢ Sentence pairs {<t1, t2>} were sampled from the BC subtask data
  ➢ For each sampled pair, an annotator broke down the transformation from t1 to t2 into a set of linguistic phenomena, producing one pair per phenomenon
• [Kaneko+ 13] (to appear in ACL 2013)

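Distribution of the linguistic phenomena in UnitTest data

| Category | Phenomenon | dev | test |
|---|---|---|---|
| lexical | synonymy | 10 | 10 |
| lexical | hypernymy | 6 | 3 |
| lexical | meronymy | 1 | 1 |
| lexical | entailment | 1 | 0 |
| phrase | synonymy | 45 | 35 |
| phrase | hypernymy | 3 | 0 |
| phrase | entailment | 28 | 45 |
| case alternation | | 9 | 7 |
| modifier | | 30 | 42 |
| nominalization | | 2 | 1 |
| coreference | | 12 | 4 |
| clause | | 29 | 14 |
| relative clause | | 10 | 8 |
| transparent head | | 2 | 1 |
| list | | 11 | 3 |
| quantity | | 1 | 0 |
| scrambling | | 16 | 15 |
| inference | | 4 | 2 |
| implicit relation | | 10 | 18 |
| apposition | | 3 | 1 |
| temporal | | 2 | 1 |
| spatial | | 4 | 1 |
| disagree | lexical | 5 | 2 |
| disagree | phrase | 25 | 25 |
| disagree | modality | 2 | 1 |
| disagree | spatial | 1 | 1 |
| disagree | temporal | 0 | 1 |
| Total | | 272 | 241 |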

RITE4QA (Chinese only)
• Motivation
  ➢ Can an entailment recognition system rank a set of unordered answer candidates in QA?
• Dataset
  ➢ Developed from the NTCIR-7 and NTCIR-8 CLQA data
    ❖ t1: a sentence containing an answer candidate
    ❖ t2: the question rewritten in affirmative form
• Requirements
  ➢ Systems must output confidence scores, which are used to rank the candidates
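Ranking then amounts to sorting answer candidates by the confidence that their t1 entails t2. A minimal sketch, assuming each candidate already carries a confidence score from some recognizer; the `Candidate` class and `rank_candidates` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    answer: str
    t1: str            # sentence containing the answer candidate
    confidence: float  # assumed recognizer confidence that t1 entails t2 (the affirmative question)


def rank_candidates(candidates: List[Candidate]) -> List[Candidate]:
    """Sort by descending confidence; Python's sort is stable, so ties keep their input order."""
    return sorted(candidates, key=lambda c: c.confidence, reverse=True)
```

The position of the first correct answer in this ranked list is what Top1 and MRR evaluate.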

Evaluation Metrics
• Macro F1 and Accuracy (BC, MC, ExamBC, ExamSearch and UnitTest)
$$\mathrm{Accuracy} = 100 \times \frac{N_{\mathrm{correct}}}{N_{\mathrm{examples}}}, \qquad \mathrm{MacroF1} = \frac{1}{|C|} \sum_{c \in C} F1_c$$
• Correct Answer Ratio (Entrance Exam)
  ➢ Y/N labels are mapped to answer selections, and the accuracy of the selected answers is calculated
• Top1 and MRR (RITE4QA)
$$\mathrm{Top1} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} [\text{top answer for question } i \text{ is correct}], \qquad \mathrm{MRR} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} \frac{1}{\mathrm{rank}_i}$$
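The formulas translate directly into code. This sketch is not the official RITE-2 evaluator; two conventions in it are assumptions: C is taken to be the set of labels seen in the gold data, and a question with no correct answer gets rank 0 and contributes 0 to MRR. Function names are illustrative.

```python
from typing import Sequence


def accuracy(gold: Sequence[str], system: Sequence[str]) -> float:
    """Accuracy = 100 * N_correct / N_examples."""
    n_correct = sum(g == s for g, s in zip(gold, system))
    return 100.0 * n_correct / len(gold)


def macro_f1(gold: Sequence[str], system: Sequence[str]) -> float:
    """Unweighted mean of per-label F1 over C (assumed: labels seen in gold), in percent."""
    labels = sorted(set(gold))
    total = 0.0
    for c in labels:
        tp = sum(g == c and s == c for g, s in zip(gold, system))
        n_pred = sum(s == c for s in system)
        n_gold = sum(g == c for g in gold)
        p = tp / n_pred if n_pred else 0.0
        r = tp / n_gold if n_gold else 0.0
        total += 2 * p * r / (p + r) if p + r else 0.0
    return 100.0 * total / len(labels)


def top1(ranks: Sequence[int]) -> float:
    """ranks[i] is the rank of the first correct answer for question i; 0 means none was found."""
    return sum(r == 1 for r in ranks) / len(ranks)


def mrr(ranks: Sequence[int]) -> float:
    """Mean reciprocal rank; questions without a correct answer (rank 0) contribute 0."""
    return sum(1.0 / r for r in ranks if r > 0) / len(ranks)
```

For example, ranks `[1, 2, 0, 1]` give Top1 = 0.5 and MRR = (1 + 1/2 + 0 + 1) / 4 = 0.625.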

Organization Effort

Generic Framework
• We provided pre-processed data and tools to lower the barriers to entry
  (1) Pre-processed data
  (2) A fundamental entailment recognition tool
  (3) RITE-2 evaluators
[Figure: sentences and documents → Linguistic Analyzer → Entailment Recognizer → outputs → Evaluator → evaluation results (accuracies, F1 values, …)]

(1) Pre-processed data
• Morphological and syntactic analysis
  ➢ MeCab [Kudo+ 05] + CaboCha [Kudo+ 02]
  ➢ Juman + KNP
  ➢ Provided as XML data
• Search results for the Exam Search subtask
  ➢ Used TSUBAKI [Shinzato+ 11] to provide search results
  ➢ Provided at most five search results extracted from Wikipedia and textbooks

(2) A fundamental entailment recognition tool (baseline tool)
[Figure: instance (XML) → Feature Extractor → Machine Learning → relation (BC: Y or N; MC: B, F, C or I)]
• Characteristics
  ➢ A machine learning-based entailment recognition system
  ➢ Simple features are implemented in the Feature Extractor
    ❖ Bag-of-{content words, aligned chunks, head words}
    ❖ Ratio of aligned {content words, chunks}
  ➢ New features can easily be added
  ➢ Outputs files compatible with the RITE-2 formal run format
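To show how "ratio of aligned content words" and an extensible feature set can fit together, here is a minimal sketch. It is not the baseline tool's code: alignment by exact surface match, the feature names and the decorator-based registry are all assumptions, and the token lists are assumed to already contain only content words (e.g., extracted from the pre-processed MeCab/CaboCha or Juman/KNP output).

```python
from typing import Callable, Dict, List

Features = Dict[str, float]
FeatureFn = Callable[[List[str], List[str]], Features]

FEATURE_FUNCTIONS: List[FeatureFn] = []


def feature(fn: FeatureFn) -> FeatureFn:
    """Register a feature function; adding a new feature means defining one decorated function."""
    FEATURE_FUNCTIONS.append(fn)
    return fn


@feature
def aligned_content_word_ratio(t1: List[str], t2: List[str]) -> Features:
    # Hypothetical alignment: a t2 content word is "aligned" if the same word occurs in t1
    if not t2:
        return {"aligned_cw_ratio": 0.0}
    t1_words = set(t1)
    aligned = sum(w in t1_words for w in t2)
    return {"aligned_cw_ratio": aligned / len(t2)}


@feature
def bag_of_content_words(t1: List[str], t2: List[str]) -> Features:
    # One indicator per t2 content word, marked by whether it is aligned to t1
    t1_words = set(t1)
    return {f"cw:{w}:{'aligned' if w in t1_words else 'unaligned'}": 1.0 for w in t2}


def extract_features(t1: List[str], t2: List[str]) -> Features:
    """Run every registered feature function and merge the results into one feature dict."""
    feats: Features = {}
    for fn in FEATURE_FUNCTIONS:
        feats.update(fn(t1, t2))
    return feats
```

The resulting dictionaries could be fed to any classifier that accepts sparse features; the choice of learner is left open here, as on the slide.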

(3) RITE-2 Evaluators
• Generic Evaluator (all subtasks)

    $ java -jar rite2eval.jar -g RITE2_JA_test_bc.xml -s output_bc.txt
    ------------------------------------------------------------
    |Label|   #|        Precision|           Recall|     F1|
    |    N| 354| 60.18( 204/ 339)| 57.63( 204/ 354)|  58.87|
    |    Y| 256| 44.65( 121/ 271)| 47.27( 121/ 256)|  45.92|
    ------------------------------------------------------------
    Accuracy:   53.28( 325/ 610)
    Macro F1:   52.40
    Confusion Matrix
    ---------------------
    |gold \ sys|   N   Y|
    ---------------------
    |         N| 204 150|
    |         Y| 135 121|
    ---------------------

• Additional Evaluator (Entrance Exam)
  ➢ Calculates the correct answer ratio
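All figures in the sample evaluator report can be derived from its confusion matrix. The sketch below recomputes them in plain Python as a cross-check. It does not reimplement `rite2eval.jar`; the function and variable names are illustrative.

```python
from typing import Dict, Tuple

# Confusion matrix from the evaluator output: rows = gold label, columns = system label.
CONFUSION: Dict[str, Dict[str, int]] = {
    "N": {"N": 204, "Y": 150},
    "Y": {"N": 135, "Y": 121},
}


def per_label_scores(cm: Dict[str, Dict[str, int]]) -> Dict[str, Tuple[float, float, float]]:
    """Return (precision, recall, F1) in percent for each label."""
    scores = {}
    for label in cm:
        tp = cm[label][label]
        gold_total = sum(cm[label].values())                # row sum
        sys_total = sum(row[label] for row in cm.values())  # column sum
        precision = 100.0 * tp / sys_total if sys_total else 0.0
        recall = 100.0 * tp / gold_total if gold_total else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[label] = (precision, recall, f1)
    return scores


scores = per_label_scores(CONFUSION)
n_examples = sum(sum(row.values()) for row in CONFUSION.values())
n_correct = sum(CONFUSION[label][label] for label in CONFUSION)
accuracy_pct = 100.0 * n_correct / n_examples
macro_f1_pct = sum(f1 for _, _, f1 in scores.values()) / len(scores)

for label, (p, r, f1) in scores.items():
    print(f"{label}: P={p:.2f} R={r:.2f} F1={f1:.2f}")
print(f"Accuracy: {accuracy_pct:.2f}  Macro F1: {macro_f1_pct:.2f}")
```

Running it reproduces the evaluator's per-label precision, recall and F1, accuracy 53.28 and Macro F1 52.40. This is consistent with Macro F1 being the unweighted mean of the two per-label F1 values, (58.87 + 45.92) / 2.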

