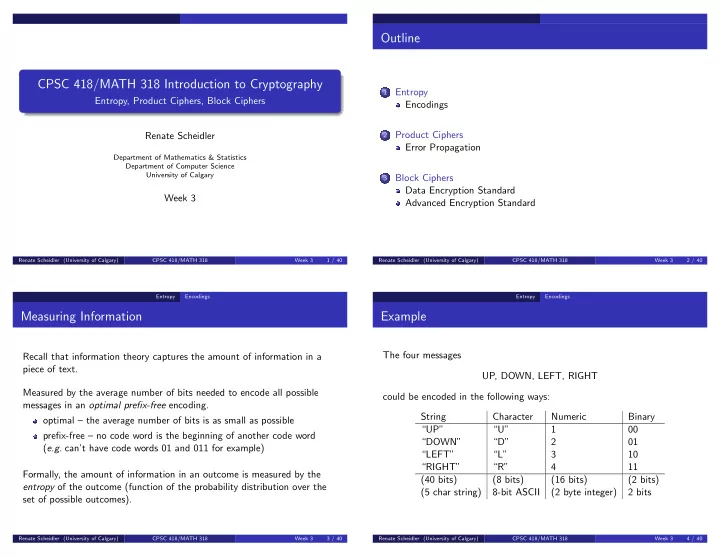

CPSC 418/MATH 318 Introduction to Cryptography

Entropy, Product Ciphers, Block Ciphers

Renate Scheidler
Department of Mathematics & Statistics
Department of Computer Science
University of Calgary

Week 3

Outline

1. Entropy
   - Encodings
2. Product Ciphers
   - Error Propagation
3. Block Ciphers
   - Data Encryption Standard
   - Advanced Encryption Standard

Entropy: Encodings

Measuring Information

Recall that information theory captures the amount of information in a piece of text.

Measured by the average number of bits needed to encode all possible messages in an optimal prefix-free encoding.

- optimal: the average number of bits is as small as possible
- prefix-free: no code word is the beginning of another code word (e.g. can't have both 01 and 011 as code words)

Formally, the amount of information in an outcome is measured by the entropy of the outcome (a function of the probability distribution over the set of possible outcomes).

Example

The four messages UP, DOWN, LEFT, RIGHT could be encoded in the following ways:

| | String | Character | Numeric | Binary |
|---|---|---|---|---|
| | "UP" | "U" | 1 | 00 |
| | "DOWN" | "D" | 2 | 01 |
| | "LEFT" | "L" | 3 | 10 |
| | "RIGHT" | "R" | 4 | 11 |
| Size | 40 bits | 8 bits | 16 bits | 2 bits |
| Storage | 5 char string | 8-bit ASCII | 2 byte integer | 2 bits |
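The prefix-free condition described above can be checked mechanically. The following is a minimal sketch in Python; the function name `is_prefix_free` is a hypothetical helper chosen for illustration and is not part of the course material.

```python
from itertools import permutations


def is_prefix_free(codewords):
    """Return True if no code word is the beginning of another code word.

    Hypothetical helper for illustration. Every ordered pair (a, b) of
    distinct positions is compared, so a duplicated code word also fails.
    """
    return not any(b.startswith(a) for a, b in permutations(codewords, 2))


# The 2-bit binary encoding of UP, DOWN, LEFT, RIGHT from the example.
binary_encoding = ["00", "01", "10", "11"]
print(is_prefix_free(binary_encoding))

# The pair ruled out on the slide: 01 is the beginning of 011.
print(is_prefix_free(["01", "011"]))
```

Any fixed-length encoding with distinct code words, such as the 2-bit binary column of the table, is automatically prefix-free; the check only becomes interesting for variable-length codes.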

Coding Theory

In the example, all encodings carry the same information (which we will be able to measure), but some are more efficient (in terms of the number of bits required) than others.

Note: Huffman encoding can be used to improve on the above example if the directions occur with different probabilities.

This branch of mathematics is called coding theory (and has nothing to do with the term "code" defined previously).

Entropy

Definition 1. Let $X$ be a random variable taking on the values $X_1, X_2, \ldots, X_n$ with a probability distribution

$$p(X_1), p(X_2), \ldots, p(X_n) \quad \text{where} \quad \sum_{i=1}^{n} p(X_i) = 1 .$$

The entropy of $X$ is defined by the weighted average

$$H(X) = \sum_{\substack{i=1 \\ p(X_i) \neq 0}}^{n} p(X_i) \log_2\!\left(\frac{1}{p(X_i)}\right) = - \sum_{\substack{i=1 \\ p(X_i) \neq 0}}^{n} p(X_i) \log_2(p(X_i)) .$$

Intuition

- An event occurring with probability $1/2^n$ can be optimally encoded with $n$ bits.
- An event occurring with probability $p$ can be optimally encoded with $\log_2(1/p) = -\log_2(p)$ bits.
- The weighted sum $H(X)$ is the expected number of bits (i.e. the amount of information) in an optimal encoding of $X$ (i.e. one that minimizes the number of bits required).
- If $X_1, X_2, \ldots, X_n$ are outcomes (e.g. plaintexts, ciphertexts, keys) occurring with respective probabilities $p(X_1), p(X_2), \ldots, p(X_n)$, then $H(X)$ is the average amount of information required to represent an outcome.

Example 1

Suppose $n = 1$ (only one outcome). Then

$$p(X_1) = 1 \iff \frac{1}{p(X_1)} = 1 \iff \log_2\!\left(\frac{1}{p(X_1)}\right) = 0 \iff H(X) = 0 .$$

No information is needed to represent the outcome (you already know with certainty what it's going to be).

In fact, for arbitrary $n$, $H(X) = 0$ if and only if $p(X_i) = 1$ for exactly one $i$ and $p(X_j) = 0$ for all $j \neq i$.
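Definition 1 can be evaluated directly. The sketch below is one possible Python rendering, assuming the distribution is given as a plain list of probabilities; the function name `entropy` and the tolerance `1e-9` are choices made here for illustration.

```python
from math import log2


def entropy(probs):
    """Entropy H(X) in bits of a distribution given as a list of probabilities.

    Follows Definition 1: outcomes with probability 0 are skipped, and each
    remaining outcome contributes p * log2(1/p).
    """
    # Assumed sanity check: the probabilities should sum to 1.
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * log2(1 / p) for p in probs if p > 0)


print(entropy([1.0]))             # single certain outcome, as in Example 1
print(entropy([1.0, 0.0, 0.0]))   # still exactly one possible outcome
print(entropy([0.25] * 4))        # four equally likely outcomes
```

The first two calls give 0 bits, matching Example 1, and the last gives 2 bits, matching the 2-bit binary encoding of UP, DOWN, LEFT, RIGHT when all four are equally likely.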

Example 2

Suppose $n > 1$ and $p(X_i) > 0$ for all $i$. Then

$$0 < p(X_i) < 1 \quad (i = 1, 2, \ldots, n), \qquad \frac{1}{p(X_i)} > 1, \qquad \log_2\!\left(\frac{1}{p(X_i)}\right) > 0 ,$$

hence $H(X) > 0$ if $n > 1$.

If there are at least 2 outcomes, both occurring with nonzero probability, either one of the outcomes needs information to be represented.

Example 3

Suppose there are two possible outcomes which are equally likely:

$$p(\text{heads}) = p(\text{tails}) = \frac{1}{2}, \qquad H(X) = \frac{1}{2}\log_2(2) + \frac{1}{2}\log_2(2) = 1 .$$

So either outcome needs 1 bit of information (heads or tails).

Example 4

Suppose we have

$$p(\text{UP}) = \frac{1}{2}, \quad p(\text{DOWN}) = \frac{1}{4}, \quad p(\text{LEFT}) = \frac{1}{8}, \quad p(\text{RIGHT}) = \frac{1}{8} .$$

Then

$$H(X) = \frac{1}{2}\log_2(2) + \frac{1}{4}\log_2(4) + \frac{1}{8}\log_2(8) + \frac{1}{8}\log_2(8) = \frac{1}{2} + \frac{2}{4} + \frac{3}{8} + \frac{3}{8} = \frac{14}{8} = \frac{7}{4} = 1.75 .$$

An optimal prefix-free (Huffman) encoding is

UP = 0, DOWN = 10, LEFT = 110, RIGHT = 111.

Because UP is more probable than the other messages, receiving UP is more certain (i.e. encodes less information) than receiving one of the other messages. The average amount of information required is 1.75 bits.

Example 5

Suppose we have $n$ outcomes which are equally likely: $p(X_i) = 1/n$. Then

$$H(X) = \sum_{i=1}^{n} \frac{1}{n} \log_2(n) = \log_2(n) .$$

So if all outcomes are equally likely, then $H(X) = \log_2(n)$.

If $n = 2^k$ (e.g. each outcome is encoded with $k$ bits), then $H(X) = k$.
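The claim in Example 4 that a Huffman encoding reaches the entropy of 1.75 bits can be checked with a short sketch. The code below uses the standard greedy Huffman construction and computes only code word lengths, not the code words themselves, since the exact bit patterns depend on tie-breaking. The function name `huffman_code_lengths` is a hypothetical helper for illustration.

```python
import heapq
from math import log2


def huffman_code_lengths(probs):
    """Return {symbol: code word length} for a Huffman code over `probs`.

    `probs` maps each symbol to its probability. Hypothetical helper:
    it tracks only lengths, which is enough to compute the average cost.
    """
    # Heap entries: (probability, tie-breaker, symbols in this subtree).
    heap = [(p, i, [s]) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    lengths = {s: 0 for s in probs}
    counter = len(heap)
    while len(heap) > 1:
        # Merge the two least probable subtrees; every symbol in them
        # moves one level deeper, so its code word grows by one bit.
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        for s in syms1 + syms2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, syms1 + syms2))
        counter += 1
    return lengths


probs = {"UP": 1 / 2, "DOWN": 1 / 4, "LEFT": 1 / 8, "RIGHT": 1 / 8}
lengths = huffman_code_lengths(probs)
average_bits = sum(probs[s] * lengths[s] for s in probs)
entropy_bits = sum(p * log2(1 / p) for p in probs.values())

print(lengths)        # {'UP': 1, 'DOWN': 2, 'LEFT': 3, 'RIGHT': 3}
print(average_bits)   # 1.75
print(entropy_bits)   # 1.75
```

The lengths 1, 2, 3, 3 agree with the code words 0, 10, 110, 111 in Example 4. The average matches the entropy exactly here because every probability is a power of 1/2; for other distributions the Huffman average is generally somewhat larger than $H(X)$.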

Applications

For a plaintext space $\mathcal{M}$, $H(\mathcal{M})$ measures the uncertainty of plaintexts.

Gives the amount of partial information that must be learned about a message in order to know its whole content when it has been

- distorted by a noisy channel (coding theory) or
- hidden in a ciphertext (cryptography)

For example, consider a ciphertext $C = \texttt{X\$7PK}$ that is known to correspond to a plaintext $M \in \mathcal{M} = \{\text{"heads"}, \text{"tails"}\}$ in a fair coin toss. $H(\mathcal{M}) = 1$, so the cryptanalyst only needs to find the distinguishing bit in the first character of $M$, not all of $M$.

Extremal Entropy

Recall that the entropy of $n$ equally likely outcomes (i.e. each occurring with probability $1/n$) is $\log_2(n)$. This is indeed the maximum:

Theorem 1.

- $H(X)$ is maximized if and only if all outcomes are equally likely. That is, for any $n$, $H(X) = \log_2(n)$ is maximal if and only if $p(X_i) = 1/n$ for $1 \le i \le n$.
- $H(X) = 0$ is minimized if and only if $p(X_i) = 1$ for exactly one $i$ and $p(X_j) = 0$ for all $j \neq i$.

Intuitively, $H(X)$ decreases as the distribution of messages becomes increasingly skewed.

Minimal Entropy: Proof

Proof. If one probability is 1, say $p(X_1) = 1$, and all the others are 0, then

$$H(X) = -p(X_1) \log_2(p(X_1)) = -1 \cdot 0 = 0 .$$

Conversely, every term $-p(X_i)\log_2(p(X_i))$ in the sum is non-negative, so the sum can only vanish if each term does:

$$\begin{aligned}
H(X) = 0 &\implies p(X_i) \log_2(p(X_i)) = 0 \text{ for each } i \text{ with } p(X_i) > 0 \\
&\implies \log_2(p(X_i)) = 0 \text{ for each } i \text{ with } p(X_i) > 0 \\
&\implies p(X_i) = 1 \text{ for each } i \text{ with } p(X_i) > 0 ,
\end{aligned}$$

but since all probabilities sum to one, this means there can only be one non-zero probability, which is 1.

Maximal Entropy: Proof Sketch

Proof sketch, $n = 1$ and $n = 2$.

Case $n = 1$: this is Example 1: $p(X_1) = 1 \iff H(X) = 0$.

Case $n = 2$: The "if" part is Example 3:

$$p(X_1) = p(X_2) = \frac{1}{2} \implies H(X) = \log_2(2) = 1 .$$

The "only if" part is Problem 4 on Assignment 1. Put $p(X_1) = p > 0$. Then $p(X_2) = 1 - p > 0$, so

$$H(X) = -p \log_2(p) - (1 - p) \log_2(1 - p) .$$

Use calculus to show that, as a function of $p$, $H$ has a maximum iff $p = 1/2$. (Note that the derivative of $\log_2(x)$ is $1/(x \ln(2))$, not $1/x$.)
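Before doing the calculus for the case $n = 2$, the claim can be sanity-checked numerically. The sketch below evaluates $H$ as a function of $p$ on a grid of step 0.01; this is only a numerical illustration under that assumed grid, not a proof, and the name `binary_entropy` is a hypothetical helper.

```python
from math import log2


def binary_entropy(p):
    """H(X) for two outcomes with probabilities p and 1 - p."""
    # Outcomes of probability 0 are skipped, as in the definition of H(X).
    if p in (0.0, 1.0):
        return 0.0
    return -p * log2(p) - (1 - p) * log2(1 - p)


# Evaluate H on an assumed grid p = 0.00, 0.01, ..., 1.00.
grid = [i / 100 for i in range(101)]
best_p = max(grid, key=binary_entropy)

print(best_p)                   # 0.5
print(binary_entropy(best_p))   # 1.0
print(binary_entropy(0.9))      # a skewed coin carries less than 1 bit
```

On this grid the largest value is 1 bit at $p = 1/2$, and the entropy falls toward 0 as $p$ approaches 0 or 1, consistent with Theorem 1.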
