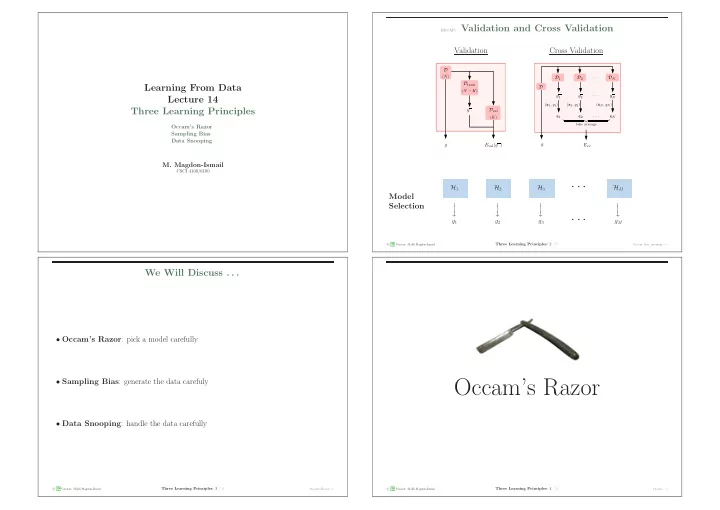

recap: Validation and Cross Validation Validation Cross Validation D ( N ) D 1 D 2 · · · D N D train Learning From Data D ( N − K ) g 1 g 2 · · · g N Lecture 14 ( x 1 , y 1 ) ( x 2 , y 2 ) ( x N , y N ) Three Learning Principles D val g · · · ( K ) e 1 e 2 e N � �� � take average Occam’s Razor Sampling Bias Data Snooping E val ( g ) g g E cv M. Magdon-Ismail CSCI 4100/6100 · · · H 1 H 2 H 3 H M Model Selection − − − − − − − − − − − − → → → → · · · g 1 g 2 g 3 g M � A M Three Learning Principles : 2 /58 c L Creator: Malik Magdon-Ismail Occam, bias, snooping − → We Will Discuss . . . • Occam’s Razor : pick a model carefully Occam’s Razor • Sampling Bias : generate the data carefuly • Data Snooping : handle the data carefully � A c M Three Learning Principles : 3 /58 � A c M Three Learning Principles : 4 /58 L Creator: Malik Magdon-Ismail Occam’s Razor − → L Creator: Malik Magdon-Ismail Occam − →

Simpler is Better Occam’s Razor The simplest model that fits the data is also the most plausible. use a ‘ razor ’ to ‘trim down’ . . . or, beware of using complex models to fit data “an explanation of the data to make it as simple as possible but no simpler.” attributed to William of Occam (14th Century) and often mistakenly to Einstein � A M Three Learning Principles : 5 /58 � A M Three Learning Principles : 6 /58 c L Creator: Malik Magdon-Ismail Simpler is Better − → c L Creator: Malik Magdon-Ismail What is Simpler? − → What is Simpler? What is Simpler? simple hypothesis h simple hypothesis set H simple hypothesis h simple hypothesis set H Ω( h ) Ω( H ) Ω( h ) Ω( H ) low order polynomial H with small d vc low order polynomial H with small d vc hypothesis with small weights small number of hypotheses hypothesis with small weights small number of hypotheses easily described hypothesis low entropy set easily described hypothesis low entropy set . . . . . . . . . . . . The equivalence: The equivalence: A hypothesis set with simple hypotheses must be small A hypothesis set with simple hypotheses must be small We had a glimpse of this: We had a glimpse of this: λ λ soft order constraint (smaller H ) ← − − − − → minimize E aug (favors simpler h ) . soft order constraint (smaller H ) ← − − − − → minimize E aug (favors simpler h ) . � A c M Three Learning Principles : 7 /58 � A c M Three Learning Principles : 8 /58 L Creator: Malik Magdon-Ismail What is Simpler? − → L Creator: Malik Magdon-Ismail What is Simpler? − →

What is Simpler? Why is Simpler Better simple hypothesis h simple hypothesis set H Mathematically: simple curtails ability to fit noise, VC-dimension is small, and blah and blah . . . Ω( h ) Ω( H ) low order polynomial H with small d vc hypothesis with small weights small number of hypotheses simpler is better because you will be more “surprised” when you fit the data. easily described hypothesis low entropy set . . . . . . The equivalence: If something unlikely happens, it is very significant when it happens. A hypothesis set with simple hypotheses must be small . . . Detective Gregory: “Is there any other point to which you would wish to draw my attention?” Sherlock Holmes: “To the curious incident of the dog in the night-time.” Detective Gregory: “The dog did nothing in the night-time.” We had a glimpse of this: Sherlock Holmes: “That was the curious incident.” λ . soft order constraint (smaller H ) → minimize E aug (favors simpler h ) . . ← − − − − . – Silver Blaze , Sir Arthur Conan Doyle � A M Three Learning Principles : 9 /58 � A M Three Learning Principles : 10 /58 c L Creator: Malik Magdon-Ismail Why is Simpler Better − → c L Creator: Malik Magdon-Ismail Scientific Experiment − → A Scientific Experiment A Scientific Experiment Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of that experiment provides no evidence one way or the other for the hypothesis. that experiment provides no evidence one way or the other for the hypothesis. Scientist 3 Scientist 2 Scientist 3 resistivity ρ resistivity ρ resistivity ρ temperature T temperature T temperature T no evidence very convincing some evidence? no evidence very convincing some evidence? Who provides most evidence for the hypothesis “ ρ is linear in T ”? Who provides most evidence for the hypothesis “ ρ is linear in T ”? � A c M Three Learning Principles : 11 /58 � A c M Three Learning Principles : 12 /58 L Creator: Malik Magdon-Ismail Scientific Experiment − → L Creator: Malik Magdon-Ismail Scientific Experiment − →

A Scientific Experiment A Scientific Experiment Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of that experiment provides no evidence one way or the other for the hypothesis. that experiment provides no evidence one way or the other for the hypothesis. Scientist 1 Scientist 2 Scientist 3 Scientist 1 Scientist 2 Scientist 3 resistivity ρ resistivity ρ resistivity ρ resistivity ρ resistivity ρ resistivity ρ temperature T temperature T temperature T temperature T temperature T temperature T no evidence very convincing some evidence? no evidence very convincing some evidence? Who provides most evidence for the hypothesis “ ρ is linear in T ”? Who provides most evidence for the hypothesis “ ρ is linear in T ”? � A M Three Learning Principles : 13 /58 � A M Three Learning Principles : 14 /58 c L Creator: Malik Magdon-Ismail Scientific Experiment − → c L Creator: Malik Magdon-Ismail Scientist 2 vs. 3 − → Scientist 2 Versus Scientist 3 Scientist 1 versus Scientist 3 Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of that experiment provides no evidence one way or the other for the hypothesis. that experiment provides no evidence one way or the other for the hypothesis. Scientist 1 Scientist 2 Scientist 3 Scientist 1 Scientist 2 Scientist 3 resistivity ρ resistivity ρ resistivity ρ resistivity ρ resistivity ρ resistivity ρ temperature T temperature T temperature T temperature T temperature T temperature T no evidence very convincing some evidence? no evidence very convincing some evidence? Who provides most evidence? Who provides most evidence? � A c M Three Learning Principles : 15 /58 � A c M Three Learning Principles : 16 /58 L Creator: Malik Magdon-Ismail Scientist 1 vs. 3 − → L Creator: Malik Magdon-Ismail Non-Falsifiability − →

Axiom of Non-Falsifiability Falsification and m H ( N ) If H shatters x 1 , · · · , x N , Axiom. If an experiment has no chance of falsifying a hypothesis, then the result of that experiment provides no evidence one way or the other for the hypothesis. – Don’t be surprised if you fit the data. – Can’t falsify “ H is a good set of candidate hypotheses for f ”. Scientist 1 Scientist 2 Scientist 3 If H doesn’t shatter x 1 , · · · , x N , and the target values are uniformly distributed, P [falsification] ≥ 1 − m H ( N ) . resistivity ρ resistivity ρ 2 N A good fit is surprising with simple H , hence significant. You can, but didn’t falsify “ H is a good set of candidate hypotheses for f ” temperature T temperature T no evidence very convincing some evidence? The data must have a chance to win. Who provides most evidence? � A M Three Learning Principles : 17 /58 � A M Three Learning Principles : 18 /58 c L Creator: Malik Magdon-Ismail Falsification and m H ( N ) − → c L Creator: Malik Magdon-Ismail Falsification and m H ( N ) − → Falsification and m H ( N ) Falsification and m H ( N ) If H shatters x 1 , · · · , x N , If H shatters x 1 , · · · , x N , – Don’t be surprised if you fit the data. – Don’t be surprised if you fit the data. – Can’t falsify “ H is a good set of candidate hypotheses for f ”. – Can’t falsify “ H is a good set of candidate hypotheses for f ”. If H doesn’t shatter x 1 , · · · , x N , and the target values are uniformly distributed, If H doesn’t shatter x 1 , · · · , x N , and the target values are uniformly distributed, P [falsification] ≥ 1 − m H ( N ) P [falsification] ≥ 1 − m H ( N ) . . 2 N 2 N A good fit is surprising with simple H , hence significant. You can, but didn’t falsify A good fit is surprising with simple H , hence significant. You can, but didn’t falsify “ H is a good set of candidate hypotheses for f ” “ H is a good set of candidate hypotheses for f ” The data must have a chance to win. The data must have a chance to win. � A c M Three Learning Principles : 19 /58 � A c M Three Learning Principles : 20 /58 L Creator: Malik Magdon-Ismail Falsification and m H ( N ) − → L Creator: Malik Magdon-Ismail Beyond Occam − →

Recommend

More recommend