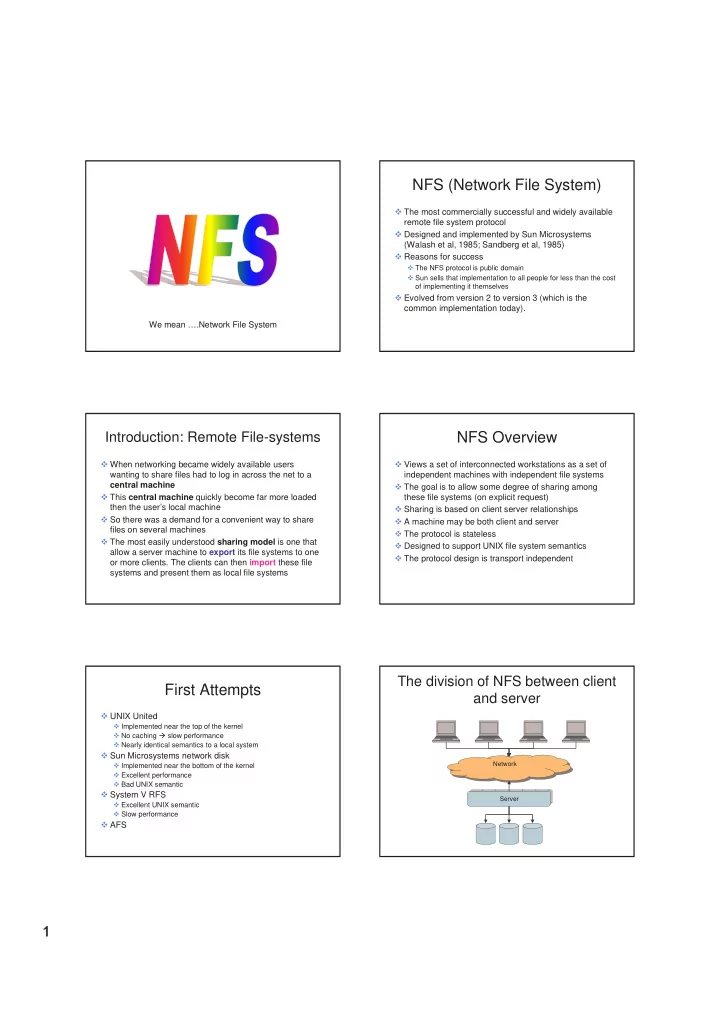

We mean... Network File System

Introduction: Remote File-systems
• When networking became widely available, users who wanted to share files had to log in across the net to a central machine
• This central machine quickly became far more loaded than the user's local machine
• So there was a demand for a convenient way to share files on several machines
• The most easily understood sharing model is one that allows a server machine to export its file systems to one or more clients. The clients can then import these file systems and present them as local file systems

First Attempts
• UNIX United
  - Implemented near the top of the kernel
  - No caching, hence slow performance
  - Nearly identical semantics to a local system
• Sun Microsystems network disk
  - Implemented near the bottom of the kernel
  - Excellent performance
  - Bad UNIX semantics
• System V RFS
  - Excellent UNIX semantics
  - Slow performance
• AFS

NFS (Network File System)
• The most commercially successful and widely available remote file system protocol
• Designed and implemented by Sun Microsystems (Walsh et al., 1985; Sandberg et al., 1985)
• Reasons for success
  - The NFS protocol is public domain
  - Sun sells its implementation to anyone for less than the cost of implementing it themselves
• Evolved from version 2 to version 3 (which is the common implementation today)

NFS Overview
• Views a set of interconnected workstations as a set of independent machines with independent file systems
• The goal is to allow some degree of sharing among these file systems (on explicit request)
• Sharing is based on client-server relationships
• A machine may be both client and server
• The protocol is stateless
• Designed to support UNIX file system semantics
• The protocol design is transport independent

The division of NFS between client and server
[Figure: the client and server sides of NFS, connected over the network]

Mounting
• A machine (M1) wants to transparently access a remote directory (on another machine, M2)
• To do that, a client on M1 should perform a mount operation
• The semantics are that a remote directory is mounted over a directory of a local file system
• Once the mount operation is complete, the mounted directory looks like an integral subtree of the local file system
• The previous subtree accessed from the local directory is no longer accessible

Example 1: Initial situation
[Figure: directory trees of machines U:, S1: and S2: before any mount]

Example 2: Simple mount
The effects of mounting S1:/usr/shared over U:/usr/local
[Figure: directory trees of U: and S1: after the mount]

Example 3: Cascading mounts
The effects of mounting S2:/usr/dir3 over U:/usr/local/dir1
[Figure: directory trees of U:, S1: and S2: after the cascading mount]

RPC/XDR
• One of the design goals of NFS is to operate in heterogeneous environments
• The NFS specification is independent of the communication media
• This independence is achieved through the use of RPC primitives built on top of an External Data Representation (XDR) protocol

RPC
• A server registers a procedure implementation
• A client calls a function which looks local to the client, e.g., add(a,b)
• The RPC implementation packs (marshals) the function name and parameter values into a message and sends it to the server
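The marshaling round trip for add(a,b) can be sketched as follows. This is a minimal illustration, not Sun's RPC library: it assumes XDR's conventions of 4-byte big-endian integers and length-prefixed strings zero-padded to a 4-byte boundary. Following the slide, it sends the function name as a string (ONC RPC itself identifies procedures by program, version and procedure numbers). The names `client_stub_add`, `server_dispatch` and `PROCEDURES` are hypothetical.

```python
import struct


# XDR-style encoders (sketch): integers are 4-byte big-endian, and
# variable-length data is padded with zero bytes to a multiple of 4.
def xdr_int(n: int) -> bytes:
    return struct.pack(">i", n)


def xdr_string(s: str) -> bytes:
    data = s.encode("ascii")
    pad = (4 - len(data) % 4) % 4
    return xdr_int(len(data)) + data + b"\x00" * pad


def read_int(buf: bytes, off: int) -> tuple:
    (n,) = struct.unpack_from(">i", buf, off)
    return n, off + 4


def read_string(buf: bytes, off: int) -> tuple:
    length, off = read_int(buf, off)
    s = buf[off:off + length].decode("ascii")
    off += length + (4 - length % 4) % 4   # skip the padding bytes
    return s, off


# Server side: registered procedure implementations (hypothetical registry).
PROCEDURES = {"add": lambda a, b: a + b}


def client_stub_add(a: int, b: int) -> bytes:
    """Client stub: marshal the call add(a, b) into a request message."""
    return xdr_string("add") + xdr_int(a) + xdr_int(b)


def server_dispatch(request: bytes) -> bytes:
    """Server: un-marshal the request, call the local function, marshal the result."""
    name, off = read_string(request, 0)
    a, off = read_int(request, off)
    b, off = read_int(request, off)
    return xdr_int(PROCEDURES[name](a, b))


def client_unmarshal(reply: bytes) -> int:
    value, _ = read_int(reply, 0)
    return value


request = client_stub_add(2, 40)
reply = server_dispatch(request)
print(client_unmarshal(reply))  # 42
```

Because both sides agree on a single wire representation (big-endian, fixed padding), a little-endian client and a big-endian server decode the same bytes identically. This is the heterogeneity that the RPC/XDR slide describes.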

RPC
• The server accepts the message, unpacks (un-marshals) the parameters and calls the local function
• The return values are then marshaled into a message and sent back to the client
• Marshaling/un-marshaling must take into account differences in data representation

RPC
• Transport: both TCP and UDP
• Data types: atomic types and non-recursive structures
  - Pointers are not supported
  - Complex memory objects (e.g., linked lists) are not supported
• NFS is built on top of RPC

The mount protocol
• Is used to establish the initial logical connection between a server and a client
• Each machine has a server process (daemon), outside the kernel, that performs the protocol functions
• The server has a list of exported directories (/etc/exports)
• The portmap service is used to find the location (port number) of the server's mount service

The mount protocol
1. The client's mount process sends a message to the server's portmap daemon requesting the port number of the server's mountd daemon
2. The server's portmap daemon returns the requested information
3. The client's mount process sends the server's mountd a request with the path of the file system it wants to mount
4. The server's mountd requests a file handle from the kernel
   1. If the request is successful, the handle is returned to the client
   2. If not, an error is returned
5. The client's mount process performs the mount() system call using the received file handle

The mount protocol
[Figure: the client's mount process and the server's portmap and mountd daemons, all in user space above the kernel, exchanging messages 1-4]

Access Models
Remote access mode:
• The client sends access requests to the server
• The file stays on the server
Upload/download mode:
1. The file is moved to the client
2. Accesses are done on the client
3. When the client is done, the file is returned to the server
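The five mount-protocol steps can be simulated in a single process. This is a hedged sketch: `Portmapper`, `MountDaemon`, `Server` and `client_mount` are hypothetical names, the "kernel" is a dictionary from exported path to file handle, the client's `mount()` call is represented by an entry in a local mount table, and port 635 is arbitrary. The real portmapper identifies services by RPC program and version numbers, not by the string "mountd".

```python
from dataclasses import dataclass, field


@dataclass
class Portmapper:
    """Hypothetical server-side portmap daemon: service name -> port number."""
    ports: dict = field(default_factory=dict)

    def getport(self, service: str) -> int:
        # Steps 1-2: answer the client's "which port is mountd on?" query.
        return self.ports[service]


@dataclass
class MountDaemon:
    """Hypothetical server-side mountd."""
    exports: set          # stands in for the /etc/exports list
    kernel_handles: dict  # stands in for the server kernel: path -> file handle

    def mnt(self, path: str) -> bytes:
        # Step 4: obtain a handle from the "kernel", but only for exported paths.
        if path not in self.exports or path not in self.kernel_handles:
            raise PermissionError(f"mount of {path} refused")  # step 4.2: error
        return self.kernel_handles[path]                        # step 4.1: handle


@dataclass
class Server:
    portmap: Portmapper
    daemons: dict  # port number -> daemon listening on that port


def client_mount(server: Server, remote_path: str, local_dir: str,
                 mount_table: dict) -> bytes:
    port = server.portmap.getport("mountd")  # steps 1-2
    mountd = server.daemons[port]
    handle = mountd.mnt(remote_path)         # steps 3-4
    mount_table[local_dir] = handle          # step 5: stands in for mount()
    return handle


# Usage: S1 exports /usr/shared and U mounts it over /usr/local (Example 2).
mountd = MountDaemon(exports={"/usr/shared"},
                     kernel_handles={"/usr/shared": bytes(32)})
s1 = Server(Portmapper({"mountd": 635}), {635: mountd})
u_mounts = {}
client_mount(s1, "/usr/shared", "/usr/local", u_mounts)
```

Only the handle crosses the network. After step 5, later NFS requests for files under /usr/local start from that handle rather than from a path on the server.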

NFS Protocol
• Provides a set of RPCs for remote file operations

RPC request   Action                        Idempotent
GETATTR       Get file attributes           Yes
SETATTR       Set file attributes           Yes
LOOKUP        Look up file name             Yes
READLINK      Read from symbolic link       Yes
READ          Read from file                Yes
WRITE         Write to file                 Yes
CREATE        Create file                   Yes
REMOVE        Remove file                   No
RENAME        Rename file                   No
LINK          Create link to file           No
SYMLINK       Create symbolic link          Yes
MKDIR         Create directory              No
RMDIR         Remove directory              No
READDIR       Read from directory           Yes
STATFS        Get file system attributes    Yes

Idempotent Operations
• We can see that most operations are idempotent
• An idempotent operation is one that can be repeated several times without changing the final result or causing an error
  - For example, writing the same data to the same offset in the file
  - However, removing a file is not idempotent
• This is an issue when RPC acknowledgments are lost and the client retransmits the request
• If a non-idempotent request is retransmitted and is not in the server's recent-request cache, we get an error

The NFS File handle
• Each file on the server can be identified by a unique file handle
• File handles are globally unique and are passed in operations
• A file handle is created by the server when a pathname translation (lookup) request is received
• The server finds the requested file or directory and ensures that the user has access permissions
• If all is OK, the server returns the file handle
• The handle identifies the file in future requests

File handle
• Built from the file system identifier, an inode number and a generation number
• The server creates a unique identifier for each local file system
• A generation number is assigned to an inode each time the inode is allocated to represent a new file
• Most NFS implementations use a random number generator to allocate generation numbers
• The generation number verifies that the inode still references the same file that it referenced when the file was first accessed
• Handle size: 32 bytes in NFS-V2, up to 64 bytes in NFS-V3, up to 128 bytes in NFS-V4

Statelessness
• As you may have noticed, open and close are missing from the requests table
• NFS servers are stateless, which means that they don't maintain information about their clients from one access to another
• Since there is no equivalent of the open files table, every request has to provide a full set of arguments, including a unique file identifier and an absolute offset (requests are self-contained)
• The resulting design is robust: no special measures need to be taken to recover the server after a crash

Drawbacks of statelessness
• The semantics of the local file system imply state
  - When a file is unlinked, it continues to be accessible until the last reference to it is closed
  - Advisory locking
• NFS doesn't know about its clients, so it cannot properly know when to free file space (clients work around this by renaming an open, unlinked file to a hidden name such as .nfsAxxxx4.4)
• Performance: all operations that modify the file system must be committed to stable storage before the RPC can be acknowledged
  - In NFS-V3, a new asynchronous write RPC request eliminates some of the synchronous writes
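The problem described on the Idempotent Operations slide, a retransmitted REMOVE whose first reply was lost, can be reproduced with a toy server. This is a minimal sketch under stated assumptions. `RequestCacheServer` is a hypothetical name. Requests are keyed by a client name and an RPC transaction id (xid). The cache is a bounded table of recent replies that evicts the oldest entry first. Only WRITE and REMOVE are modeled.

```python
from collections import OrderedDict


class RequestCacheServer:
    """Hypothetical stateless file server with an optional recent-request cache."""

    def __init__(self, use_cache: bool = True, cache_size: int = 128):
        self.files = {"a.txt": bytearray(b"hello")}
        self.use_cache = use_cache
        self.cache_size = cache_size
        self.cache = OrderedDict()  # (client, xid) -> reply sent earlier

    def _write(self, name, offset, data):
        # Idempotent: writing the same bytes at the same offset again changes nothing.
        buf = self.files.setdefault(name, bytearray())
        if len(buf) < offset + len(data):
            buf.extend(bytes(offset + len(data) - len(buf)))
        buf[offset:offset + len(data)] = data
        return "OK"

    def _remove(self, name):
        # Not idempotent: a repeated attempt finds nothing to remove.
        if name not in self.files:
            return "ENOENT"
        del self.files[name]
        return "OK"

    def handle(self, client, xid, op, name, offset=0, data=b""):
        key = (client, xid)
        if self.use_cache and key in self.cache:
            return self.cache[key]  # retransmission: replay the saved reply
        if op == "WRITE":
            reply = self._write(name, offset, data)
        elif op == "REMOVE":
            reply = self._remove(name)
        else:
            raise ValueError(f"unsupported request {op}")
        if self.use_cache:
            self.cache[key] = reply
            if len(self.cache) > self.cache_size:
                self.cache.popitem(last=False)  # evict the oldest entry
        return reply


# A REMOVE whose reply was lost is retransmitted with the same xid.
plain = RequestCacheServer(use_cache=False)
first = plain.handle("c1", 7, "REMOVE", "a.txt")
retry = plain.handle("c1", 7, "REMOVE", "a.txt")
```

Without the cache, `first` is "OK" but `retry` is "ENOENT", even though the client asked for one removal. With the cache enabled, the retry replays the saved "OK". A repeated WRITE of the same bytes at the same offset yields the same file contents either way. Because the cache is bounded, a retransmission that arrives after its entry has been evicted still produces the error, which matches the slide's wording.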

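The File handle slide's use of generation numbers to detect reused inodes can be sketched as follows. `FileHandle` and `InodeTable` are hypothetical names, and the byte layout inside the handle is an assumption. The handle is padded to the 32-byte NFS-V2 size. As on the slide, generation numbers come from a random number generator. This sketch also redraws a number that happens to equal the inode's previous generation, so a reused inode never matches an old handle.

```python
import random
import struct
from dataclasses import dataclass


@dataclass(frozen=True)
class FileHandle:
    fsid: int        # identifier of the server's local file system
    inode: int       # inode number within that file system
    generation: int  # generation number of the inode when the handle was issued

    def pack(self) -> bytes:
        # Opaque to the client; padded to 32 bytes (the field layout is hypothetical).
        return struct.pack(">III", self.fsid, self.inode, self.generation).ljust(32, b"\0")


class InodeTable:
    """Hypothetical server-side inode allocator for one local file system."""

    def __init__(self, fsid: int, seed=None):
        self.fsid = fsid
        self.rng = random.Random(seed)
        self.generation = {}  # inode number -> latest generation number
        self.live = set()     # inodes currently representing a file

    def allocate(self, inode: int) -> FileHandle:
        # New random generation number each time the inode represents a new file,
        # redrawn if it happens to equal the previous one.
        old = self.generation.get(inode)
        gen = self.rng.getrandbits(32)
        while gen == old:
            gen = self.rng.getrandbits(32)
        self.generation[inode] = gen
        self.live.add(inode)
        return FileHandle(self.fsid, inode, gen)

    def free(self, inode: int) -> None:
        self.live.discard(inode)

    def resolve(self, fh: FileHandle) -> int:
        # Reject handles for another file system, a freed inode, or a reused inode.
        if (fh.fsid != self.fsid or fh.inode not in self.live
                or self.generation[fh.inode] != fh.generation):
            raise LookupError("stale file handle")
        return fh.inode


table = InodeTable(fsid=7, seed=1)
old_fh = table.allocate(42)
table.free(42)
new_fh = table.allocate(42)  # inode 42 now represents a different file
```

A client still holding `old_fh` names the right file system and inode number, but with an outdated generation, so `resolve` rejects it instead of silently returning the new file's data. The server needs no per-client state to make this check.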