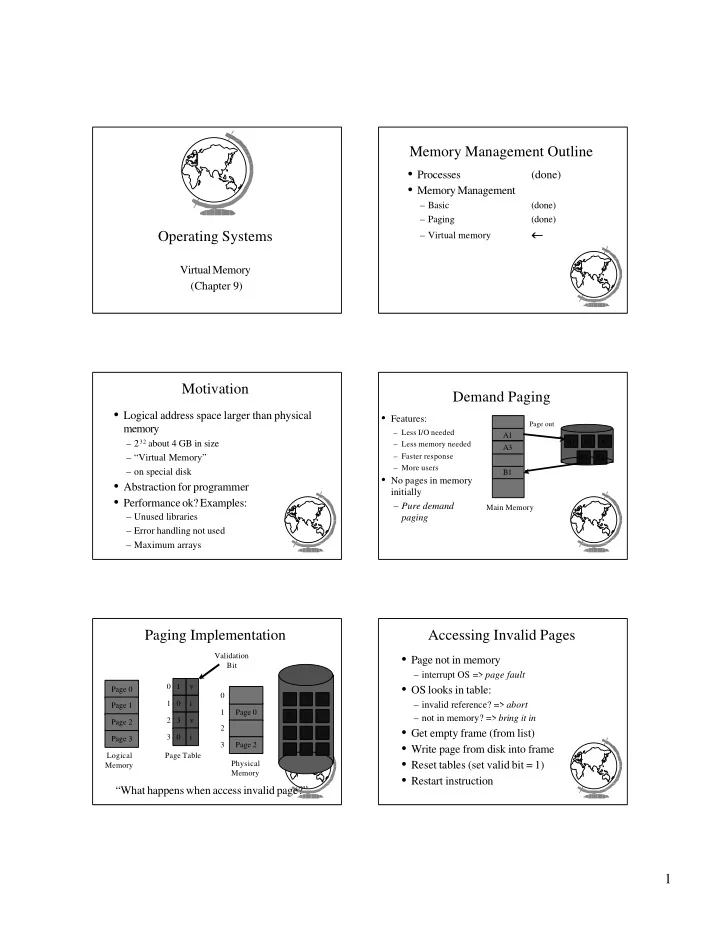

Operating Systems
Virtual Memory (Chapter 9)

Memory Management Outline
• Processes (done)
• Memory Management ←
  – Basic (done)
  – Paging (done)
  – Virtual memory ←

Motivation
• Logical address space larger than physical
  – 2^32 bytes, about 4 GB in size
  – "Virtual Memory"
  – on special disk
• Abstraction for programmer
• Performance ok?
• Examples:
  – Unused libraries
  – Error handling not used
  – Maximum arrays

Demand Paging
• Page out memory
• Features:
  – Less I/O needed
  – Less memory needed
  – Faster response
  – More users
• No pages in memory initially
  – Pure demand paging
(Figure: pages A1–A3 and B1–B2 moved between main memory and disk.)

Paging Implementation
(Figure: "What happens when access invalid page?" Logical memory holds Pages 0–3; the page table's validation bits are page 0 → frame 1 (v), page 1 (i), page 2 → frame 3 (v), page 3 (i); physical memory holds Page 0 in frame 1 and Page 2 in frame 3.)

Accessing Invalid Pages
• Page not in memory
  – interrupt OS => page fault
• OS looks in table:
  – invalid reference? => abort
  – not in memory? => bring it in
• Get empty frame (from list)
• Read page from disk into frame
• Reset tables (set valid bit = 1)
• Restart instruction
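The fault-handling steps above can be sketched as a small Python simulation. This is a toy model, not kernel code: `DemandPager`, `access`, `InvalidReference`, and the dictionary standing in for the disk are hypothetical names, and "restart instruction" is modeled by simply completing the access once the fault has been serviced.

```python
class InvalidReference(Exception):
    """Raised for a reference outside the logical address space (abort)."""


class DemandPager:
    """Toy model of pure demand paging with a valid bit per page-table entry."""

    def __init__(self, num_pages, num_frames, backing_store):
        # Page-table entry: [frame number, valid bit]; no pages in memory initially.
        self.page_table = [[None, False] for _ in range(num_pages)]
        self.free_frames = list(range(num_frames))  # list of empty frames
        self.memory = [None] * num_frames           # physical frames
        self.backing_store = backing_store          # page number -> contents on "disk"
        self.faults = 0

    def access(self, page):
        if not 0 <= page < len(self.page_table):
            raise InvalidReference(f"page {page}: invalid reference, abort")
        frame, valid = self.page_table[page]
        if not valid:                               # page not in memory: page fault
            frame = self._page_fault(page)
        return self.memory[frame]                   # the access is then "restarted"

    def _page_fault(self, page):
        self.faults += 1
        if not self.free_frames:
            raise RuntimeError("no free frames: page replacement not modeled here")
        frame = self.free_frames.pop(0)                # get empty frame from list
        self.memory[frame] = self.backing_store[page]  # read page from disk into frame
        self.page_table[page] = [frame, True]          # reset table: valid bit = 1
        return frame


pager = DemandPager(num_pages=4, num_frames=2,
                    backing_store={0: "A", 1: "B", 2: "C", 3: "D"})
print(pager.access(2), pager.faults)   # C 1  (fault: page brought in)
print(pager.access(2), pager.faults)   # C 1  (entry now valid: no fault)
```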

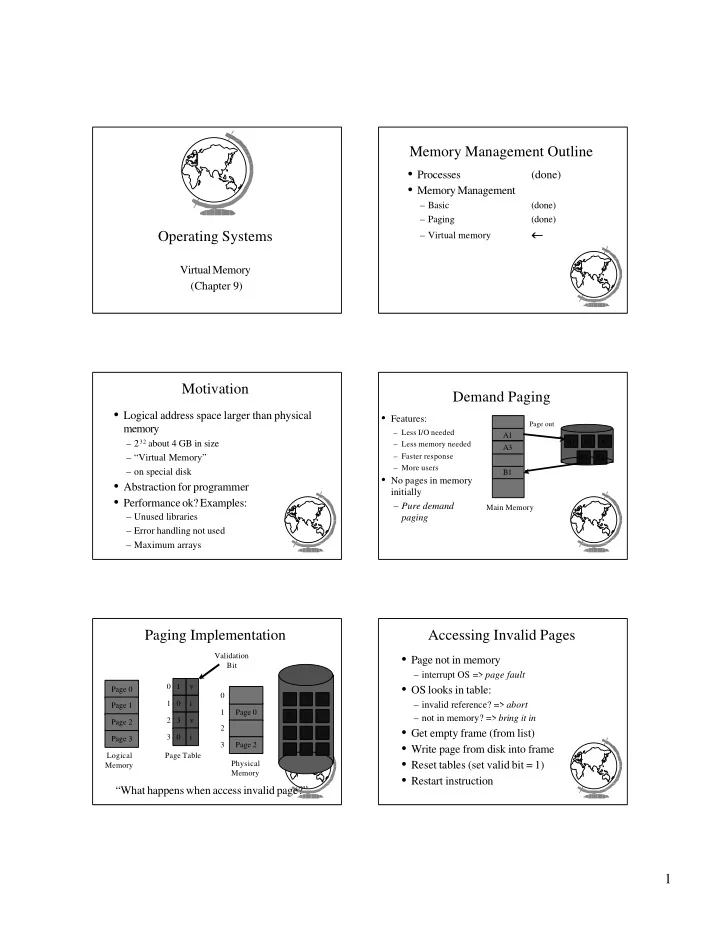

Performance of Demand Paging
• Page Fault Rate (p)
  – 0 ≤ p ≤ 1.0 (from no page faults to every reference is a fault)
• Page Fault Overhead
  = read page in + update tables + restart
  – Dominated by time to read the page in
• Effective Access Time (EAT)
  = (1 − p) × (memory access) + p × (page fault overhead)

Performance Example
• Memory access time = 100 nanoseconds
• Page fault overhead = 25 msec
• Page fault rate = 1/1000

All times in nanoseconds:

$$
\begin{aligned}
\text{EAT} &= (1-p)\cdot 100 + p\cdot(25\ \text{msec}) \\
&= (1-p)\cdot 100 + p\cdot 25{,}000{,}000 \\
&= 100 + 24{,}999{,}900\,p \\
&= 100 + 24{,}999{,}900\cdot\tfrac{1}{1000} \approx 25{,}100\ \text{ns} \approx 25\ \mu\text{s!}
\end{aligned}
$$

• Want less than 10% degradation:

$$
\begin{aligned}
110 &> 100 + 24{,}999{,}900\,p \\
10 &> 24{,}999{,}900\,p \\
p &< 0.0000004
\end{aligned}
$$

or 1 fault in 2,500,000 accesses!

No Free Frames
• Page fault => What if no free frames?
  – terminate process (out of memory)
  – swap out process (reduces degree of multiprogramming)
  – replace another page with needed page
    + Page replacement
(Figure: page replacement with a victim frame; logical memory, page tables with valid/invalid bits, and physical memory, with steps numbered (0)–(4).)

Page Replacement
• Page fault with page replacement:
  – if free frame, use it
  – else use algorithm to select victim frame
  – write victim page to disk
  – read in new page
  – change page tables
  – restart process

Page Replacement Algorithms
• Every system has its own
• Want lowest page fault rate
• Evaluate by running it on a particular string of memory references (reference string) and computing the number of page faults
• Example: 1,2,3,4,1,2,5,1,2,3,4,5
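The arithmetic in the performance example can be checked with a few lines of Python. The helper names `effective_access_time` and `max_fault_rate` are illustrative; times are in nanoseconds as in the example (25 msec = 25,000,000 ns).

```python
def effective_access_time(p, mem_ns=100.0, fault_ns=25_000_000.0):
    """EAT = (1 - p) * memory access + p * page-fault overhead, in nanoseconds."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("page-fault rate p must lie in [0, 1]")
    return (1 - p) * mem_ns + p * fault_ns


def max_fault_rate(degradation=0.10, mem_ns=100.0, fault_ns=25_000_000.0):
    """Largest p keeping EAT below (1 + degradation) * memory access time."""
    # (1 + d) * mem > mem + (fault - mem) * p   =>   p < d * mem / (fault - mem)
    return degradation * mem_ns / (fault_ns - mem_ns)


print(effective_access_time(1 / 1000))   # about 25,099.9 ns, i.e. ~25 microseconds
p = max_fault_rate()
print(p, 1 / p)                          # about 4e-07, about 2.5 million accesses per fault
```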
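A rough sketch of the "page fault with page replacement" steps. To keep it short it assumes FIFO victim selection (any replacement algorithm could be substituted) and only counts disk I/O. It also assumes a modify (dirty) bit, so a clean victim is dropped without a write; the step list above writes the victim unconditionally. `ReplacingPager` and its fields are hypothetical names.

```python
from collections import deque


class ReplacingPager:
    """Toy page-fault handler with replacement (FIFO victim choice assumed)."""

    def __init__(self, num_frames):
        self.free_frames = deque(range(num_frames))  # list of empty frames
        self.page_table = {}                         # page -> frame, valid entries only
        self.load_order = deque()                    # FIFO order used to pick a victim
        self.dirty = set()                           # pages modified since read in
        self.disk_reads = 0
        self.disk_writes = 0

    def access(self, page, write=False):
        if page not in self.page_table:              # invalid entry: page fault
            self._page_fault(page)
        if write:
            self.dirty.add(page)
        return self.page_table[page]                 # frame now holding the page

    def _page_fault(self, page):
        if self.free_frames:                         # if free frame, use it
            frame = self.free_frames.popleft()
        else:                                        # else select a victim frame
            victim = self.load_order.popleft()
            frame = self.page_table.pop(victim)      # victim's entry becomes invalid
            if victim in self.dirty:                 # write victim to disk if modified
                self.disk_writes += 1
                self.dirty.discard(victim)
        self.disk_reads += 1                         # read in the new page
        self.page_table[page] = frame                # change page table
        self.load_order.append(page)                 # caller then restarts the access


pager = ReplacingPager(num_frames=2)
pager.access(1, write=True)
pager.access(2)
pager.access(3)   # evicts dirty page 1: one disk write
pager.access(4)   # evicts clean page 2: no write needed
print(pager.page_table, pager.disk_reads, pager.disk_writes)   # {3: 0, 4: 1} 4 1
```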

First-In-First-Out (FIFO)
• Reference string: 1,2,3,4,1,2,5,1,2,3,4,5
• 3 Frames / Process: 9 Page Faults
  – frame 1: 1 → 4 → 5; frame 2: 2 → 1 → 3; frame 3: 3 → 2 → 4
• How could we reduce the number of page faults?
• 4 Frames / Process: 10 Page Faults!
  – frame 1: 1 → 5 → 4; frame 2: 2 → 1 → 5; frame 3: 3 → 2; frame 4: 4 → 3
  – Belady's Anomaly: more frames, yet more faults

GroupWork
• How else can we reduce the number of page faults?
  – Try a new algorithm

Optimal
• Replace the page that will not be used for the longest period of time
• 1,2,3,4,1,2,5,1,2,3,4,5 with 4 Frames / Process: 6 Page Faults
  – frame 1: 1 → 4; frame 2: 2; frame 3: 3; frame 4: 4 → 5
• How do we know this?
• Use as benchmark
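Counting faults for a reference string needs only a short simulation. The sketch below (`fifo_faults` is an illustrative name) reproduces the 9 and 10 faults from the FIFO example, i.e. Belady's anomaly.

```python
from collections import deque

REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]


def fifo_faults(refs, num_frames):
    """Count page faults under FIFO replacement."""
    frames = set()
    order = deque()                           # oldest loaded page at the left
    faults = 0
    for page in refs:
        if page in frames:
            continue                          # hit: FIFO order is not updated
        faults += 1
        if len(frames) == num_frames:
            frames.discard(order.popleft())   # evict the page loaded first
        frames.add(page)
        order.append(page)
    return faults


print(fifo_faults(REFS, 3), fifo_faults(REFS, 4))   # 9 10  (Belady's anomaly)
```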
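The Optimal policy can only be simulated offline, with the whole reference string known in advance, which is why it serves as a benchmark rather than a real algorithm. A minimal sketch (`optimal_faults` is a hypothetical name); ties among pages that are never used again are broken by frame order, an arbitrary choice.

```python
REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]


def optimal_faults(refs, num_frames):
    """Count page faults when evicting the page whose next use is farthest away."""
    frames = []
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) < num_frames:
            frames.append(page)               # free frame available
            continue
        future = refs[i + 1:]
        # Distance to next use; pages never used again count as farthest.
        victim = max(frames, key=lambda p: future.index(p) if p in future else len(future))
        frames[frames.index(victim)] = page
    return faults


print(optimal_faults(REFS, 4))   # 6
```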

Least Recently Used (LRU)
• Replace the page that has not been used for the longest period of time
• 1,2,3,4,1,2,5,1,2,3,4,5 with 4 frames: 8 Page Faults
  – frame 1: 1 → 5; frame 2: 2; frame 3: 3 → 5 → 4; frame 4: 4 → 3
• No Belady's Anomaly
  – "Stack" algorithm
  – pages held with N frames are a subset of those held with N + 1 frames

LRU Implementation
• Counter implementation
  – every page has a counter; every time the page is referenced, copy the clock into its counter
  – when a page needs to be replaced, compare the counters to determine which to replace
• Stack implementation
  – keep a stack of page numbers
  – page referenced: move it to the top
  – no search needed for replacement
• (Can we do this in software?)

LRU Approximations
• LRU good, but hardware support expensive
• Some hardware support by reference bit
  – with each page, initially = 0
  – when page is referenced, set = 1
  – replace one whose bit is 0 (no order among them)
• Enhance by having 8 bits and shifting
  – approximate LRU

Second-Chance
• FIFO replacement, but …
  – Get first in FIFO
  – Look at reference bit
    + bit == 0: replace it
    + bit == 1: set bit = 0, get next in FIFO
• If page referenced often enough, never replaced
• Implement with circular queue
• If all bits are 1, degenerates to FIFO
(Figure: circular queue of pages 1–4 with their reference bits, panels (a) and (b), and the "Next Victim" pointer.)
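The stack implementation maps naturally onto Python's `collections.OrderedDict`: `move_to_end` plays the role of "move to top" and `popitem(last=False)` takes the bottom of the stack without a search. A sketch; `lru_faults` is an illustrative name. Because LRU is a stack algorithm, adding frames never increases its fault count.

```python
from collections import OrderedDict

REFS = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]


def lru_faults(refs, num_frames):
    """Count page faults under LRU, using an ordered dict as the 'stack'."""
    stack = OrderedDict()                 # least recently used first, most recent last
    faults = 0
    for page in refs:
        if page in stack:
            stack.move_to_end(page)       # page referenced: move to top
            continue
        faults += 1
        if len(stack) == num_frames:
            stack.popitem(last=False)     # bottom of the stack is the LRU page
        stack[page] = None
    return faults


print(lru_faults(REFS, 4), lru_faults(REFS, 3))   # 8 10
```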
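The "8 bits and shifting" approximation can be sketched as follows, assuming the OS shifts every page's history byte on a periodic timer interrupt and copies the hardware reference bit into the top bit. `AgingLRU` and its methods are hypothetical names; the sketch only picks a victim and does not load pages.

```python
class AgingLRU:
    """LRU approximation: an 8-bit history byte per page, shifted each clock tick."""

    def __init__(self, pages):
        self.history = {p: 0 for p in pages}   # 8-bit history, initially 0
        self.ref_bit = {p: 0 for p in pages}   # hardware reference bits

    def reference(self, page):
        self.ref_bit[page] = 1                 # set on every access

    def tick(self):
        # Timer interrupt: shift right, move the reference bit into bit 7, clear it.
        for p in self.history:
            self.history[p] = (self.history[p] >> 1) | (self.ref_bit[p] << 7)
            self.ref_bit[p] = 0

    def victim(self):
        # Smallest history value = least recently used (approximately).
        return min(self.history, key=self.history.get)


aging = AgingLRU([1, 2, 3])
aging.reference(1); aging.reference(2); aging.tick()   # interval 1: pages 1 and 2 used
aging.reference(2); aging.tick()                       # interval 2: page 2 used
aging.reference(3); aging.tick()                       # interval 3: page 3 used
print(aging.history, aging.victim())   # {1: 32, 2: 96, 3: 128} 1
```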
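A sketch of second-chance replacement as a circular queue with a moving hand (`second_chance` is an illustrative name). One assumption to flag: a newly loaded page starts with reference bit 0, following "initially = 0" above; some systems set the bit on load instead, which changes which pages get a second chance.

```python
def second_chance(refs, num_frames):
    """Second-chance (clock) replacement; returns (faults, final frame contents)."""
    frames = [None] * num_frames   # circular queue of frames
    ref_bit = [0] * num_frames
    hand = 0                       # next victim candidate (first in FIFO order)
    faults = 0
    for page in refs:
        if page in frames:
            ref_bit[frames.index(page)] = 1   # hit: reference bit set to 1
            continue
        faults += 1
        while ref_bit[hand] == 1:             # bit == 1: clear it, give a second chance
            ref_bit[hand] = 0
            hand = (hand + 1) % num_frames
        frames[hand] = page                   # bit == 0: replace this page
        ref_bit[hand] = 0                     # assumption: new pages start with bit 0
        hand = (hand + 1) % num_frames
    return faults, frames


print(second_chance([1, 2, 3, 1, 4], 3))            # (4, [1, 4, 3]): page 1 spared
print(second_chance([1, 2, 3, 1, 2, 3, 4], 3))      # (4, [4, 2, 3]): all bits 1, acts like FIFO
```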

Enhanced Second-Chance
• 2 bits: reference bit and modify bit
• (0,0) neither recently used nor modified
  – best page to replace
• (0,1) not recently used but modified
  – needs write-out ("dirty" page)
• (1,0) recently used but "clean"
  – probably used again soon
• (1,1) recently used and modified
  – used soon, needs write-out
• Circular queue in each class (Macintosh)

Counting Algorithms
• Keep a counter of the number of references
  – LFU: replace page with smallest count
    + if a page does all its references at the beginning, it won't be replaced
    + decay values by shift
  – MFU: replace page with largest count
    + page with smallest count was just brought in and will probably be used
    + lock in place for some time, maybe
• Not too common (expensive) and not too good

Page Buffering
• Pool of frames
  – restart process immediately, before writing out the old page
    + write out when system idle
  – list of modified pages
    + write out when system idle
  – pool of free frames, remember content
    + page fault => check pool

Allocation of Frames
• How many fixed frames per process?
• Two allocation schemes:
  – fixed allocation
  – priority allocation

Fixed Allocation
• Equal allocation
  – ex: 93 frames, 5 procs = 18 per proc (3 in pool)
• Proportional Allocation
  – number of frames proportional to size
  – ex: 64 frames, s1 = 10, s2 = 127 (total 137)
    + f1 = 10 / 137 × 64 ≈ 5
    + f2 = 127 / 137 × 64 ≈ 59
• Treat processes equal

Priority Allocation
• Use a proportional scheme based on priority
• If a process generates a page fault
  – select for replacement a frame from a process with lower priority
• "Global" versus "Local" replacement
  – local: consistent (not influenced by others)
  – global: more efficient (used more often)
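A simplified sketch of enhanced second-chance victim selection by (reference, modify) class. The function name and the tuple layout `(page, ref_bit, modify_bit)` are assumptions; it scans a single circular queue from the hand once per class, lowest class first, whereas a full implementation would also clear reference bits as it passes pages.

```python
def enhanced_second_chance_victim(queue, hand):
    """Return the first page, scanning circularly from `hand`, in the lowest
    non-empty class: (0,0) < (0,1) < (1,0) < (1,1)."""
    n = len(queue)
    for wanted in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        for step in range(n):
            page, ref, mod = queue[(hand + step) % n]
            if (ref, mod) == wanted:
                return page
    raise ValueError("empty queue")


queue = [("A", 1, 1), ("B", 0, 1), ("C", 1, 0), ("D", 0, 1)]
print(enhanced_second_chance_victim(queue, 0))   # B: no (0,0) page, first (0,1) from A
print(enhanced_second_chance_victim(queue, 2))   # D: first (0,1) page scanning from C
```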
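LFU and MFU differ only in which end of the count ordering they choose; the shift-based decay from the LFU bullet is shown as a separate step. Function names are illustrative, and counts are assumed to be a plain dict from page to reference count.

```python
def lfu_victim(counts):
    """LFU: replace the page with the smallest reference count."""
    return min(counts, key=counts.get)


def mfu_victim(counts):
    """MFU: replace the page with the largest reference count."""
    return max(counts, key=counts.get)


def decay(counts):
    """Halve every count (right shift) so heavy early use does not pin a page."""
    return {page: c >> 1 for page, c in counts.items()}


counts = {1: 40, 2: 3, 3: 7}   # page 1 was used heavily early on
print(lfu_victim(counts), mfu_victim(counts))   # 2 1
print(decay(counts))                            # {1: 20, 2: 1, 3: 3}
```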
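The frame-allocation examples can be reproduced with integer arithmetic. `equal_allocation` and `proportional_allocation` are hypothetical helpers. The slide rounds each proportional share; this sketch instead assumes largest-remainder rounding so the shares always add up to exactly the number of frames. For the slide's numbers both approaches give 5 and 59.

```python
def equal_allocation(frames, procs):
    """Equal split; frames that don't divide evenly stay in a free pool."""
    per_proc, pool = divmod(frames, procs)
    return per_proc, pool


def proportional_allocation(frames, sizes):
    """f_i = s_i / S * m, rounded so that the shares still sum to m.

    Assumption: leftover frames go to the largest remainders.
    """
    total = sum(sizes)
    shares = [divmod(s * frames, total) for s in sizes]   # (floor, remainder) pairs
    alloc = [q for q, _ in shares]
    leftover = frames - sum(alloc)
    order = sorted(range(len(sizes)), key=lambda i: shares[i][1], reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc


print(equal_allocation(93, 5))                 # (18, 3)
print(proportional_allocation(64, [10, 127]))  # [5, 59]
```

Passing priorities instead of sizes gives the proportional scheme based on priority from the Priority Allocation slide.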

Thrashing
• If a process does not have "enough" pages, the page-fault rate is very high
  – low CPU utilization
  – OS thinks it needs increased multiprogramming
  – adds another process to system
• Thrashing is when a process is busy swapping pages in and out
(Figure: CPU utilization versus degree of multiprogramming.)

Cause of Thrashing
• Why does paging work?
  – Locality model
    + process migrates from one locality to another
    + localities may overlap
• Why does thrashing occur?
  – sum of localities > total memory size
• How do we fix thrashing?
  – Working Set Model
  – Page Fault Frequency

Working-Set Model
• Working-set window T = a fixed number of page references
  – working set W = the pages referenced in the most recent T references
• D = sum of the sizes of all processes' W's (total demand for frames)
• if D > m (total number of frames) => thrashing, so suspend a process
• Modify LRU approximation to include Working Set

Working Set Example
• T = 5
• 1 2 3 2 3 1 2 4 3 4 7 4 3 3 4 1 1 2 2 2 1
  – W = {1,2,3} … W = {3,4,7} … W = {1,2}
• if T too small, will not encompass locality
• if T too large, will encompass several localities
• if T => infinity, will encompass entire program

Page Fault Frequency
• Establish "acceptable" page-fault rate
  – If rate too low, process loses frame
  – If rate too high, process gains frame
(Figure: page-fault rate versus number of frames, with an upper bound (increase number of frames) and a lower bound (decrease number of frames).)
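The working set at time t is just the set of distinct pages among the last T references, so the example can be recomputed directly. `working_set` and `should_suspend` are illustrative names; positions are 1-based (counting references from the left), and m is the total number of frames.

```python
def working_set(refs, t, window):
    """Pages referenced in the last `window` references ending at position t (1-based)."""
    start = max(0, t - window)
    return set(refs[start:t])


def should_suspend(working_sets, total_frames):
    """D = sum of working-set sizes; D > m means thrashing, so suspend a process."""
    return sum(len(ws) for ws in working_sets) > total_frames


REFS = [1, 2, 3, 2, 3, 1, 2, 4, 3, 4, 7, 4, 3, 3, 4, 1, 1, 2, 2, 2, 1]
T = 5
print(sorted(working_set(REFS, 5, T)))           # [1, 2, 3]
print(sorted(working_set(REFS, 12, T)))          # [3, 4, 7]
print(sorted(working_set(REFS, len(REFS), T)))   # [1, 2]
```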
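Page-fault-frequency control reduces to comparing a measured fault rate against the two bounds. A minimal sketch; `pff_adjust`, the bounds used in the example, and the floor of one frame per process are assumptions, not values from the slides.

```python
def pff_adjust(frames, fault_rate, lower, upper):
    """Return a process's new frame count under page-fault-frequency control."""
    if fault_rate > upper:
        return frames + 1      # rate too high: process gains a frame
    if fault_rate < lower and frames > 1:
        return frames - 1      # rate too low: process loses a frame (keep at least one)
    return frames              # within the acceptable band: no change


# Hypothetical bounds: between 1% and 2% of references may fault.
print(pff_adjust(10, 0.05, lower=0.01, upper=0.02))    # 11
print(pff_adjust(10, 0.001, lower=0.01, upper=0.02))   # 9
print(pff_adjust(10, 0.015, lower=0.01, upper=0.02))   # 10
```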
