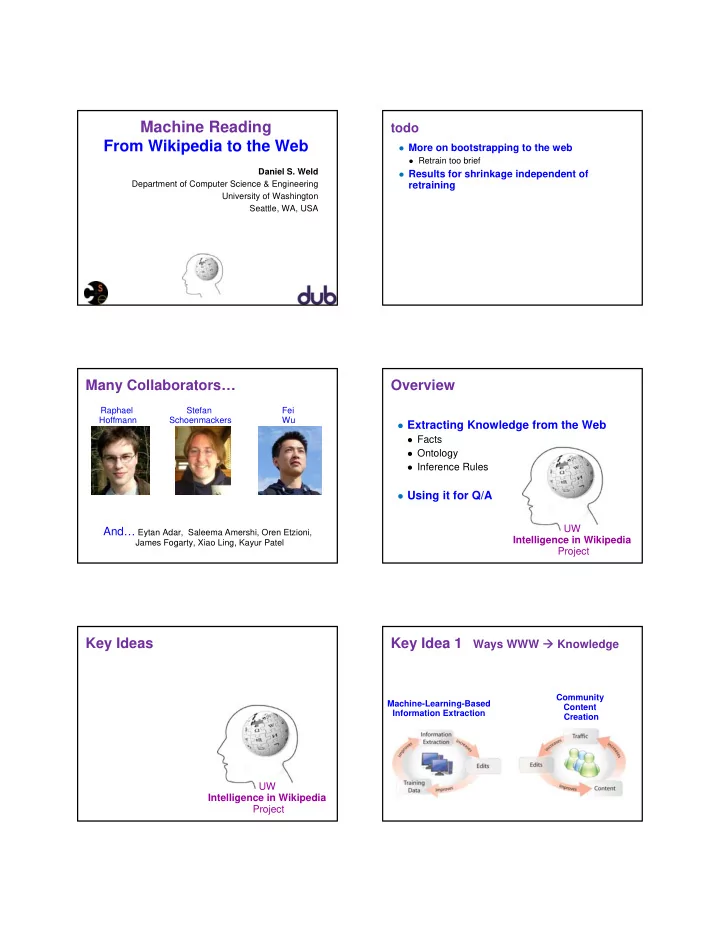

Machine Reading
From Wikipedia to the Web
Daniel S. Weld
Department of Computer Science & Engineering
University of Washington, Seattle, WA, USA

todo
• More on bootstrapping to the web
• Retrain too brief
• Results for shrinkage independent of retraining

Many Collaborators…
Raphael Hoffmann, Stefan Schoenmackers, Fei Wu
And… Eytan Adar, Saleema Amershi, Oren Etzioni, James Fogarty, Xiao Ling, Kayur Patel
UW Intelligence in Wikipedia Project

Overview
• Extracting Knowledge from the Web
  • Facts
  • Ontology
  • Inference Rules
• Using it for Q/A

Key Ideas
Ways WWW → Knowledge
• Community Content Creation
• Machine-Learning-Based Information Extraction

Key Idea 1
• Synergy (Positive Feedback)
  • Between ML Extraction & Community Content Creation

Key Idea 2
• Self Supervised Learning
  • Heuristics for Generating (Noisy) Training Data

Key Idea 3
• Shrinkage (Ontological Smoothing) & Retraining
  • For Improving Extraction in Sparse Domains
Figure: class hierarchy (person, performer, actor, comedian)

Key Idea 4
• Approximately Pseudo-Functional (APF) Relations
  • Efficient Inference Using Learned Rules

Motivating Vision
Next-Generation Search = Information Extraction + Ontology + Inference
• Which German Scientists Taught at US Universities?
• … Einstein was a guest lecturer at the Institute for Advanced Study in New Jersey …

Next-Generation Search
• Information Extraction
  • <Einstein, Born-In, Germany>
  • <Einstein, ISA, Physicist>
  • <Einstein, Lectured-At, IAS>
  • <IAS, In, New-Jersey>
  • <New-Jersey, In, United-States> …
• Ontology
  • Physicist(x) ⇒ Scientist(x) …
• Inference
  • Lectured-At(x, y) ∧ University(y) ⇒ Taught-At(x, y)
  • Einstein = Einstein …
Scalable Means Self-Supervised
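The "Next-Generation Search" slide combines extracted triples with an ontology rule and an inference rule. The following is a minimal sketch of how those pieces might answer the motivating query, not the system's actual inference engine. Two things are assumptions added for illustration and do not appear on the slide: the fact that IAS counts as a University (needed to satisfy the rule's premise) and the rule that `In` is transitive.

```python
# Triples taken from the slide.
facts = {
    ("Einstein", "Born-In", "Germany"),
    ("Einstein", "ISA", "Physicist"),
    ("Einstein", "Lectured-At", "IAS"),
    ("IAS", "In", "New-Jersey"),
    ("New-Jersey", "In", "United-States"),
    # ASSUMPTION (not on the slide): needed for the University(y) premise.
    ("IAS", "ISA", "University"),
}


def infer(facts):
    """Forward-chain the rules until no new triples appear."""
    kb = set(facts)
    while True:
        new = set()
        for (s, r, o) in kb:
            # Ontology rule from the slide: Physicist(x) => Scientist(x)
            if r == "ISA" and o == "Physicist":
                new.add((s, "ISA", "Scientist"))
            # Inference rule from the slide:
            # Lectured-At(x, y) AND University(y) => Taught-At(x, y)
            if r == "Lectured-At" and (o, "ISA", "University") in kb:
                new.add((s, "Taught-At", o))
            # ASSUMPTION (not on the slide): In is transitive.
            if r == "In":
                for (s2, r2, o2) in kb:
                    if r2 == "In" and s2 == o:
                        new.add((s, "In", o2))
        if new <= kb:
            return kb
        kb |= new


def german_scientists_who_taught_in_us(facts):
    """Answer 'Which German scientists taught at US universities?'."""
    kb = infer(facts)
    return sorted({
        x for (x, r, y) in kb
        if r == "Taught-At"
        and (x, "Born-In", "Germany") in kb
        and (x, "ISA", "Scientist") in kb
        and (y, "In", "United-States") in kb
    })


answer = german_scientists_who_taught_in_us(facts)
```

None of the original triples mentions "Scientist", "Taught-At", or a direct link from IAS to the United States; the answer only becomes reachable after the rules fire, which is the point of pairing extraction with ontology and inference.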

TextRunner: Open Information Extraction
• For each sentence
  • Apply POS Tagger
  • For each pair of noun phrases, NP1, NP2
    • If classifier confirms they are "Related?"
      • Use CRF to extract relation from intervening text
      • Return relation(NP1, NP2)
• Train classifier & extractor on Penn Treebank data
Example: "Mark Emmert was born in Fife and graduated from UW in 1975" yields was-born-in(Mark Emmert, Fife)

Why Wikipedia?
• Pros
  • Comprehensive
  • High Quality [Giles Nature 05]
  • Useful Structure
• Cons
  • Natural-Language
  • Missing Data
  • Inconsistent
  • Low Redundancy
Figure source: Comscore MediaMetrix, August 2007

Wikipedia Structure
• Unique IDs & Links
• Infoboxes
• Categories & Lists
• First Sentence
• Redirection pages
• Disambiguation pages
• Revision History
• Multilingual
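The TextRunner loop above can be sketched as a function that takes its three learned components as parameters. This is only an outline of the control flow under stated assumptions: `capitalized_runs`, `toy_is_related`, and `toy_label` are hypothetical toy stand-ins for the POS tagger and noun-phrase chunker, the "Related?" classifier, and the CRF extractor; they are not TextRunner's actual models.

```python
from itertools import combinations


def extract_relations(sentences, noun_phrases, is_related, label_relation):
    """Control flow from the slide; the three callables are pluggable."""
    tuples = []
    for sentence in sentences:
        tokens = sentence.split()
        spans = noun_phrases(tokens)  # list of (start, end) token spans
        for (s1, e1), (s2, e2) in combinations(spans, 2):
            np1 = " ".join(tokens[s1:e1])
            np2 = " ".join(tokens[s2:e2])
            between = tokens[e1:s2]  # the intervening text
            if is_related(np1, between, np2):
                tuples.append((label_relation(between), np1, np2))
    return tuples


# --- Hypothetical toy stand-ins (assumptions, for illustration only) ---

def capitalized_runs(tokens):
    """Toy chunker: treat each run of capitalized tokens as a noun phrase."""
    spans, start = [], None
    for i, tok in enumerate(tokens + [""]):  # sentinel closes a trailing run
        if tok[:1].isupper():
            if start is None:
                start = i
        elif start is not None:
            spans.append((start, i))
            start = None
    return spans


def toy_is_related(np1, between, np2):
    """Toy classifier: short intervening text that does not start a new clause."""
    return 0 < len(between) <= 3 and between[0] != "and"


def toy_label(between):
    """Toy extractor: use the intervening words as the relation name."""
    return "-".join(between)


result = extract_relations(
    ["Mark Emmert was born in Fife and graduated from UW in 1975"],
    capitalized_runs, toy_is_related, toy_label,
)
```

With these toy components the slide's example sentence produces the single tuple `("was-born-in", "Mark Emmert", "Fife")`; a real system would replace each stand-in with a trained model.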

Traditional, Supervised I.E.
• Raw Data → Labeled Training Data → Learning Algorithm → Extractor
• Example sentences
  • Kirkland-based Microsoft is the largest software company.
  • Boeing moved its headquarters to Chicago in 2003.
  • Hank Levy was named chair of Computer Science & Engr.
  • …
• HeadquarterOf(<company>,<city>)

Status Update
• Outline
  • Motivation
  • Extracting Facts from Wikipedia
  • Ontology Generation
  • Improving Fact Extraction
  • Bootstrapping to the Web
  • Validating Extractions
  • Improving Recall with Inference
  • Conclusions
• Key Ideas
  • Synergy
  • Self-Supervised Learning
  • Shrinkage & Retraining
  • APF Relations

Kylin: Self-Supervised Information Extraction from Wikipedia [Wu & Weld CIKM 2007]
From infoboxes to a training set
Example article text: Clearfield County was created in 1804 from parts of Huntingdon and Lycoming Counties but was administered as part of Centre County until 1812. Its county seat is Clearfield. 2,972 km² (1,147 mi²) of it is land and 17 km² (7 mi²) of it (0.56%) is water. As of 2005, the population density was 28.2/km².

Kylin Architecture (figure)

Preliminary Evaluation
Kylin Performed Well on Popular Classes:
• Precision: mid 70% ~ high 90%
• Recall: low 50% ~ mid 90%
... But Floundered on Sparse Classes (Too Little Training Data)
Is this a Big Problem?

The Precision / Recall Tradeoff
• Precision: proportion of selected items that are correct, $\text{Precision} = \frac{tp}{tp + fp}$
• Recall: proportion of target items that were selected, $\text{Recall} = \frac{tp}{tp + fn}$
• Precision-Recall curve
  • Shows tradeoff
  • AuC
Figure: correct tuples vs. tuples returned by the system (regions tp, fp, fn, tn); plot of Precision against Recall
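The "From infoboxes to a training set" step can be illustrated with a simple matching heuristic: a sentence becomes a positive example for an attribute when it mentions that attribute's infobox value. This is a minimal sketch under stated assumptions, not Kylin's actual matcher. The attribute names in `infobox` are hypothetical, and exact substring matching is a simplification chosen for brevity.

```python
import re

# Article text from the slide.
text = (
    "Clearfield County was created in 1804 from parts of Huntingdon and "
    "Lycoming Counties but was administered as part of Centre County until "
    "1812. Its county seat is Clearfield. 2,972 km² (1,147 mi²) of it is land "
    "and 17 km² (7 mi²) of it (0.56%) is water. As of 2005, the population "
    "density was 28.2/km²."
)

# ASSUMPTION: hypothetical infobox attribute names; values are taken from the text.
infobox = {"founded": "1804", "seat": "Clearfield", "density_km2": "28.2"}


def label_sentences(article_text, infobox):
    """Label each sentence with every infobox attribute whose value it contains.

    Returns (positives, negatives): positives are (sentence, attribute, value)
    triples; negatives are sentences that matched no attribute value.
    """
    # Naive sentence split: break on whitespace that follows ., ! or ?
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", article_text) if s.strip()]
    positives, negatives = [], []
    for sentence in sentences:
        hits = [(attr, val) for attr, val in infobox.items() if val in sentence]
        if hits:
            positives.extend((sentence, attr, val) for attr, val in hits)
        else:
            negatives.append(sentence)
    return positives, negatives


positives, negatives = label_sentences(text, infobox)
```

Note that the first sentence is also labeled as a `seat` example because it contains the string "Clearfield" (in "Clearfield County"). That spurious match is an instance of the noise the slides mention when they call the generated training data "(Noisy)".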

Long Tail: Sparse Classes
• Too Little Training Data
• 82% < 100 instances; 40% < 10 instances

Long-Tail 2: Incomplete Articles
• Desired Information Missing from Wikipedia
• 800,000/1,800,000 (44.2%) stub pages [Wikipedia July 2007]
Figure: article Length plotted against ID

Shrinkage?
• Classes (instance counts): person (1201), performer (44), actor (8738), comedian (106)
• Attribute names for the same fact across these classes: .birth_place, .location, .birthplace, .cityofbirth, .origin

How Can We Get a Taxonomy for Wikipedia?
• Do We Need to?
• What about Category Tags?
• Conjunctions

KOG: Kylin Ontology Generator [Wu & Weld, WWW08]
Schema Mapping (Person ↔ Performer)
• birth_date ↔ birthdate
• birth_place ↔ location
• name ↔ name
• other_names ↔ othername
• … ↔ …
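One plausible signal for aligning attributes such as those in the Person and Performer schemas is surface similarity between attribute names. The sketch below uses Python's standard `difflib.SequenceMatcher` for that purpose; it is an illustration of a string-similarity heuristic only, not KOG's actual mapping procedure, and the function names are hypothetical.

```python
from difflib import SequenceMatcher

person_attrs = ["birth_date", "birth_place", "name", "other_names"]
performer_attrs = ["birthdate", "location", "name", "othername"]


def similarity(a, b):
    """Character-level similarity in [0, 1] between two attribute names."""
    return SequenceMatcher(None, a, b).ratio()


def best_match(attr, candidates):
    """Return the candidate attribute name most similar to `attr`."""
    return max(candidates, key=lambda c: similarity(attr, c))


mapping = {a: best_match(a, performer_attrs) for a in person_attrs}
```

Name similarity recovers pairs such as `birth_date`/`birthdate` and `other_names`/`othername`, but `birth_place` and `location` share almost no characters, so a purely string-based measure is unlikely to recover that pair and some other evidence would be needed.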

KOG Architecture (figure)

Subsumption Detection
• Binary Classification Problem
• Nine Complex Features, e.g.,
  • String Features …
  • IR Measures …
  • Mapping to Wordnet …
  • Hearst Pattern Matches …
  • Class Transitions in Revision History
• Learning Algorithm
  • SVM & MLN Joint Inference
Figure: Person, Scientist, Physicist; Einstein, 6/07

Schema Mapping (KOG: Kylin Ontology Generator [Wu & Weld, WWW08])
• Heuristics
  • Edit History
  • String Similarity
• Experiments
  • Precision: 94%, Recall: 87%
• Future
  • Integrated Joint Inference
• Attribute pairs between Person and Performer: birth_date ↔ birthdate, birth_place ↔ location, name ↔ name, other_names ↔ othername, …

Improving Recall on Sparse Classes [Wu et al. KDD-08]
• Shrinkage
  • Extra Training Examples from Related Classes
  • How to Weight New Examples?
Figure: person (1201), performer (44), actor (8738), comedian (106)
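The shrinkage slide leaves open how examples borrowed from related classes should be weighted. As a purely illustrative sketch, one option is to down-weight borrowed examples by their distance from the target class in the taxonomy. The geometric `decay` scheme, the function names, and the parent links below are assumptions for illustration and are not taken from the slides or the cited paper.

```python
# ASSUMPTION: parent links for the four classes shown in the slide's figure.
parent = {"performer": "person", "actor": "performer", "comedian": "performer"}


def ancestors(cls):
    """Path from a class up to the root, starting with the class itself."""
    path = [cls]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path


def tree_distance(a, b):
    """Number of taxonomy edges between two classes in the same tree."""
    path_a, path_b = ancestors(a), ancestors(b)
    common = next(c for c in path_a if c in path_b)  # lowest common ancestor
    return path_a.index(common) + path_b.index(common)


def shrinkage_training_set(target, examples_by_class, decay=0.5):
    """Pool examples from all classes, weighting each by decay ** distance."""
    weighted = []
    for cls, examples in examples_by_class.items():
        weight = decay ** tree_distance(target, cls)
        weighted.extend((example, cls, weight) for example in examples)
    return weighted


pooled = shrinkage_training_set(
    "performer",
    {"performer": ["p1"], "person": ["x1", "x2"], "actor": ["a1"]},
)
```

Here the sparse `performer` class keeps its own example at full weight and borrows from `person` and `actor` at half weight. The resulting weights could be passed to any learner that accepts per-example weights.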

Recall after Shrinkage / Retraining… (figure)

Improving Recall on Sparse Classes [Wu et al. KDD-08]
• Retraining
  • Compare Kylin Extractions with Tuples from TextRunner
  • Additional Positive Examples
  • Eliminate False Negatives
• TextRunner [Banko et al. IJCAI-07, ACL-08]
  • Relation-Independent Extraction
  • Exploits Grammatical Structure
  • CRF Extractor with POS Tag Features

Bootstrapping to the Web [Wu et al. KDD-08]
• Extractor Quality Irrelevant
  • If no information to extract…
  • 44% of Wikipedia Pages = "stub"
• Instead, … Extract from Broader Web
• Challenges
  • How to maintain high precision?
  • Many Web pages noisy, describe multiple objects

Extracting from the Broader Web
1) Send Query to Google
   Object Name + Attribute Synonym
2) Find Best Region on the Page
   Heuristics > Dependency Parse
3) Apply Extractor
4) Vote if Multiple Extractions
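Steps 1 and 4 of the web-extraction procedure above lend themselves to a short sketch. The code below only builds query strings and votes over candidate values; it does not call any search engine, and steps 2 and 3 are omitted because they depend on the trained extractor. The query format, the `min_votes` threshold, the lower-casing normalization, and the function names are all assumptions for illustration.

```python
from collections import Counter


def build_queries(object_name, attribute_synonyms):
    """Step 1: one query string per synonym (object name + attribute synonym)."""
    return [f'"{object_name}" {synonym}' for synonym in attribute_synonyms]


def vote(candidates, min_votes=2):
    """Step 4: return the most frequent normalized value, or None if support is weak."""
    counts = Counter(c.strip().lower() for c in candidates if c.strip())
    if not counts:
        return None
    value, votes = counts.most_common(1)[0]
    return value if votes >= min_votes else None


queries = build_queries("Clearfield County", ["county seat", "seat"])
winner = vote(["Clearfield", "clearfield ", "Curwensville"])
no_winner = vote(["A", "B"])
```

Requiring a minimum number of agreeing extractions is one simple way to trade recall for precision when individual web pages are noisy.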

Bootstrapping to the Web (figure)

Problem
• Information Extraction is Still Imprecise
  • Do Wikipedians Want 90% Precision?
• How to Improve Precision?
  • People!

Contributing as a Non-Primary Task [Hoffmann CHI-09]
• Encourage contributions
• Without annoying or abusing readers

Designed Three Interfaces
• Popup (immediate interruption strategy)
• Highlight (negotiated interruption strategy)
• Icon (negotiated interruption strategy)

Popup Interface (figure)

Highlight Interface (figures, including hover states)

Icon Interface (figures)
