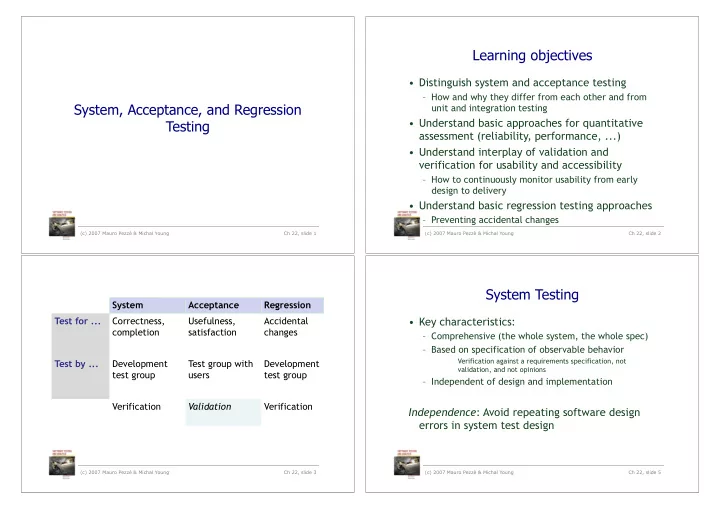

System, Acceptance, and Regression Testing

(c) 2007 Mauro Pezzè & Michal Young Ch 22, slide 1

Learning objectives

• Distinguish system and acceptance testing
  – How and why they differ from each other and from unit and integration testing
• Understand basic approaches for quantitative assessment (reliability, performance, ...)
• Understand interplay of validation and verification for usability and accessibility
  – How to continuously monitor usability from early design to delivery
• Understand basic regression testing approaches
  – Preventing accidental changes

| | System | Acceptance | Regression |
|---|---|---|---|
| Test for ... | Correctness, completion | Usefulness, satisfaction | Accidental changes |
| Test by ... | Development test group | Test group with users | Development test group |
| | Verification | Validation | Verification |

System Testing

• Key characteristics:
  – Comprehensive (the whole system, the whole spec)
  – Based on specification of observable behavior
    Verification against a requirements specification, not validation, and not opinions
  – Independent of design and implementation

Independence: Avoid repeating software design errors in system test design

Independent V&V

• One strategy for maximizing independence: System (and acceptance) test performed by a different organization
  – Organizationally isolated from developers (no pressure to say “ok”)
  – Sometimes outsourced to another company or agency
    • Especially for critical systems
    • Outsourcing for independent judgment, not to save money
    • May be additional system test, not replacing internal V&V
  – Not all outsourced testing is IV&V
    • Not independent if controlled by development organization

Independence without changing staff

• If the development organization controls system testing ...
  – Perfect independence may be unattainable, but we can reduce undue influence
• Develop system test cases early
  – As part of requirements specification, before major design decisions have been made
    • Agile “test first” and conventional “V model” are both examples of designing system test cases before designing the implementation
    • An opportunity for “design for test”: Structure system for critical system testing early in project

Incremental System Testing

• System tests are often used to measure progress
  – System test suite covers all features and scenarios of use
  – As project progresses, the system passes more and more system tests
• Assumes a “threaded” incremental build plan: Features exposed at top level as they are developed

Global Properties

• Some system properties are inherently global
  – Performance, latency, reliability, ...
  – Early and incremental testing is still necessary, but provides only estimates
• A major focus of system testing
  – The only opportunity to verify global properties against actual system specifications
  – Especially to find unanticipated effects, e.g., an unexpected performance bottleneck
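The idea of measuring progress by the share of system tests that pass can be sketched in a few lines. This is only an illustration: the build names, test names, and pass/fail results below are invented, and a real project would read them from its test runner.

```python
def pass_fraction(results):
    """Fraction of system tests that pass in one build.

    `results` maps a system test name to True (pass) or False (fail).
    """
    if not results:
        return 0.0
    return sum(1 for ok in results.values() if ok) / len(results)


def progress_by_build(builds):
    """Return (build name, pass fraction) pairs in build order."""
    return [(name, pass_fraction(results)) for name, results in builds]


# Hypothetical data: the same system test suite run against three builds
# of a "threaded" incremental plan, where features appear at top level
# one after another.
builds = [
    ("build-1", {"login": True, "search": False, "checkout": False, "report": False}),
    ("build-2", {"login": True, "search": True, "checkout": False, "report": False}),
    ("build-3", {"login": True, "search": True, "checkout": True, "report": False}),
]

progress = progress_by_build(builds)
for name, fraction in progress:
    print(f"{name}: {fraction:.0%} of system tests passing")
```

The suite stays fixed while the builds change, so a rising fraction reflects features being completed rather than tests being added.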

Context-Dependent Properties

• Beyond system-global: Some properties depend on the system context and use
  – Example: Performance properties depend on environment and configuration
  – Example: Privacy depends both on system and how it is used
    • Medical records system must protect against unauthorized use, and authorization must be provided only as needed
  – Example: Security depends on threat profiles
    • And threats change!
• Testing is just one part of the approach

Establishing an Operational Envelope

• When a property (e.g., performance or real-time response) is parameterized by use ...
  – requests per second, size of database, ...
• Extensive stress testing is required
  – varying parameters within the envelope, near the bounds, and beyond
• Goal: A well-understood model of how the property varies with the parameter
  – How sensitive is the property to the parameter?
  – Where is the “edge of the envelope”?
  – What can we expect when the envelope is exceeded?

Stress Testing

• Often requires extensive simulation of the execution environment
  – With systematic variation: What happens when we push the parameters? What if the number of users or requests is 10 times more, or 1000 times more?
• Often requires more resources (human and machine) than typical test cases
  – Separate from regular feature tests
  – Run less often, with more manual control
  – Diagnose deviations from expectation
    • Which may include difficult debugging of latent faults!

Estimating Dependability

• Measuring quality, not searching for faults
  – Fundamentally different goal than systematic testing
• Quantitative dependability goals are statistical
  – Reliability
  – Availability
  – Mean time to failure
  – ...
• Requires valid statistical samples from operational profile
  – Fundamentally different from systematic testing
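Varying a parameter within the operational envelope, near its bounds, and beyond can be sketched as a simple sweep. Everything here is an assumption made for illustration: the system under test is replaced by a toy queueing-style latency model, and the capacity, service time, and latency limit are invented numbers. A real stress test would drive a simulated execution environment and measure actual response times.

```python
import math

# Hypothetical figures, not taken from any real system.
CAPACITY_RPS = 100.0     # load at which the simulated system saturates
SERVICE_TIME_MS = 10.0   # latency of a request on an unloaded system
LATENCY_LIMIT_MS = 40.0  # the response-time requirement being checked


def simulated_latency_ms(rps):
    """Toy stand-in for a measurement: latency grows sharply near capacity."""
    if rps >= CAPACITY_RPS:
        return math.inf  # beyond the envelope: requests never complete
    return SERVICE_TIME_MS / (1.0 - rps / CAPACITY_RPS)


def sweep(loads):
    """Measure the property at each load level."""
    return [(rps, simulated_latency_ms(rps)) for rps in loads]


def edge_of_envelope(measurements, limit_ms):
    """Highest tested load below which every tested load meets the limit."""
    edge = None
    for rps, latency in sorted(measurements):
        if latency > limit_ms:
            break
        edge = rps
    return edge


# Loads well inside the envelope, near the bound, and far beyond it.
loads = [10, 50, 70, 80, 90, 100, 1000]
measurements = sweep(loads)
edge = edge_of_envelope(measurements, LATENCY_LIMIT_MS)

for rps, latency in measurements:
    print(f"{rps:>5} req/s -> {latency:.1f} ms")
print("edge of envelope (tested loads):", edge)
```

The sweep only locates the edge to the resolution of the tested loads; in practice one would add more points around the first failing load to see how sensitive the property is there.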

Statistical Sampling

• We need a valid operational profile (model)
  – Sometimes from an older version of the system
  – Sometimes from operational environment (e.g., for an embedded controller)
  – Sensitivity testing reveals which parameters are most important, and which can be rough guesses
• And a clear, precise definition of what is being measured
  – Failure rate? Per session, per hour, per operation?
• And many, many random samples
  – Especially for high reliability measures

Is Statistical Testing Worthwhile?

• Necessary for ...
  – Critical systems (safety critical, infrastructure, ...)
• But difficult or impossible when ...
  – Operational profile is unavailable or just a guess
    • Often for new functionality involving human interaction
      – But we may factor critical functions from overall use to obtain a good model of only the critical properties
  – Reliability requirement is very high
    • Required sample size (number of test cases) might require years of test execution
    • Ultra-reliability can seldom be demonstrated by testing

Process-based Measures

• Less rigorous than statistical testing
  – Based on similarity with prior projects
• System testing process
  – Expected history of bugs found and resolved
• Alpha, beta testing
  – Alpha testing: Real users, controlled environment
  – Beta testing: Real users, real (uncontrolled) environment
  – May statistically sample users rather than uses
  – Expected history of bug reports

Usability

• A usable product
  – is quickly learned
  – allows users to work efficiently
  – is pleasant to use
• Objective criteria
  – Time and number of operations to perform a task
  – Frequency of user error
    • blame user errors on the product!
• Plus overall, subjective satisfaction
