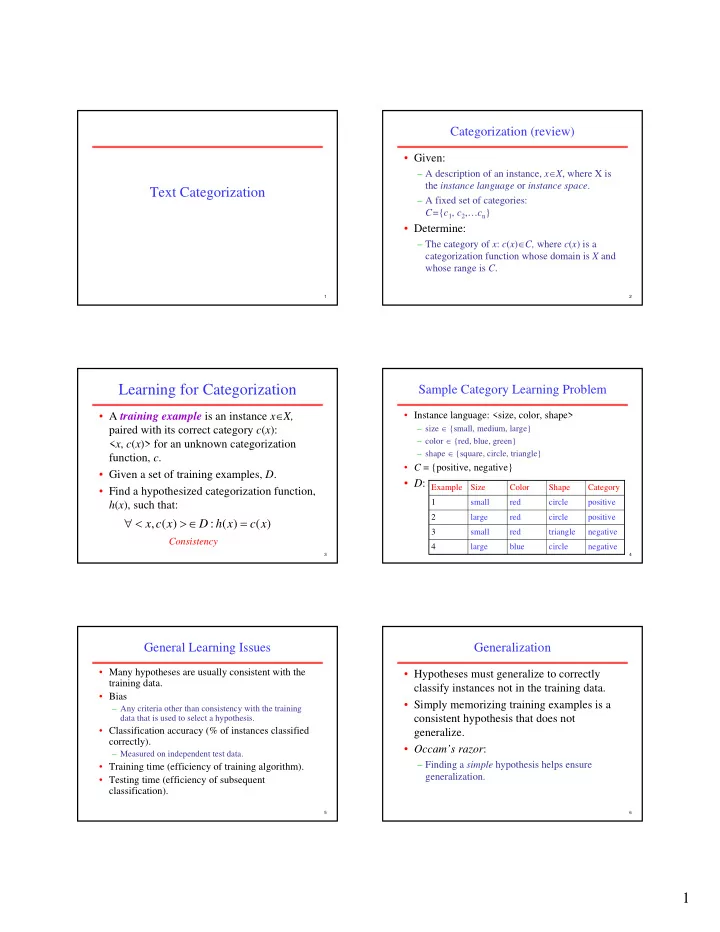

Text Categorization

Categorization (review)
• Given:
  – A description of an instance, $x \in X$, where $X$ is the instance language or instance space.
  – A fixed set of categories: $C = \{c_1, c_2, \ldots, c_n\}$
• Determine:
  – The category of $x$: $c(x) \in C$, where $c(x)$ is a categorization function whose domain is $X$ and whose range is $C$.

Learning for Categorization
• A training example is an instance $x \in X$, paired with its correct category $c(x)$: $\langle x, c(x) \rangle$ for an unknown categorization function, $c$.
• Given a set of training examples, $D$.
• Find a hypothesized categorization function, $h(x)$, such that:
  $\forall \langle x, c(x) \rangle \in D : h(x) = c(x)$ (consistency)

Sample Category Learning Problem
• Instance language: <size, color, shape>
  – size ∈ {small, medium, large}
  – color ∈ {red, blue, green}
  – shape ∈ {square, circle, triangle}
• $C$ = {positive, negative}
• $D$:

| Example | Size | Color | Shape | Category |
|---|---|---|---|---|
| 1 | small | red | circle | positive |
| 2 | large | red | circle | positive |
| 3 | small | red | triangle | negative |
| 4 | large | blue | circle | negative |

General Learning Issues
• Many hypotheses are usually consistent with the training data.
• Bias
  – Any criteria other than consistency with the training data that is used to select a hypothesis.
• Classification accuracy (% of instances classified correctly).
  – Measured on independent test data.
• Training time (efficiency of training algorithm).
• Testing time (efficiency of subsequent classification).

Generalization
• Hypotheses must generalize to correctly classify instances not in the training data.
• Simply memorizing training examples is a consistent hypothesis that does not generalize.
• Occam’s razor:
  – Finding a simple hypothesis helps ensure generalization.
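The consistency condition and the point about memorization can be checked directly on the sample training set D above. The sketch below is only illustrative: the names `is_consistent`, `h_simple`, and `h_memorize` are hypothetical, and `h_simple` ("red circles are positive") is just one of many hypotheses consistent with D, not one the slides prescribe.

```python
# Training set D from the sample category learning problem:
# each entry is (<size, color, shape>, category).
D = [
    (("small", "red", "circle"), "positive"),
    (("large", "red", "circle"), "positive"),
    (("small", "red", "triangle"), "negative"),
    (("large", "blue", "circle"), "negative"),
]


def is_consistent(h, examples):
    """True if h(x) == c(x) for every <x, c(x)> in the examples."""
    return all(h(x) == c for x, c in examples)


def h_simple(x):
    """A simple candidate hypothesis (assumed for illustration): red circles are positive."""
    _size, color, shape = x
    return "positive" if color == "red" and shape == "circle" else "negative"


_memory = dict(D)


def h_memorize(x):
    """Rote memorization: returns the stored label, or None for unseen instances."""
    return _memory.get(x)


unseen = ("medium", "red", "circle")  # not in D
print(is_consistent(h_simple, D), is_consistent(h_memorize, D))
print(h_simple(unseen), h_memorize(unseen))
```

Both hypotheses are consistent with D, but only the simple one produces a category for the unseen instance, which is the sense in which memorization fails to generalize.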

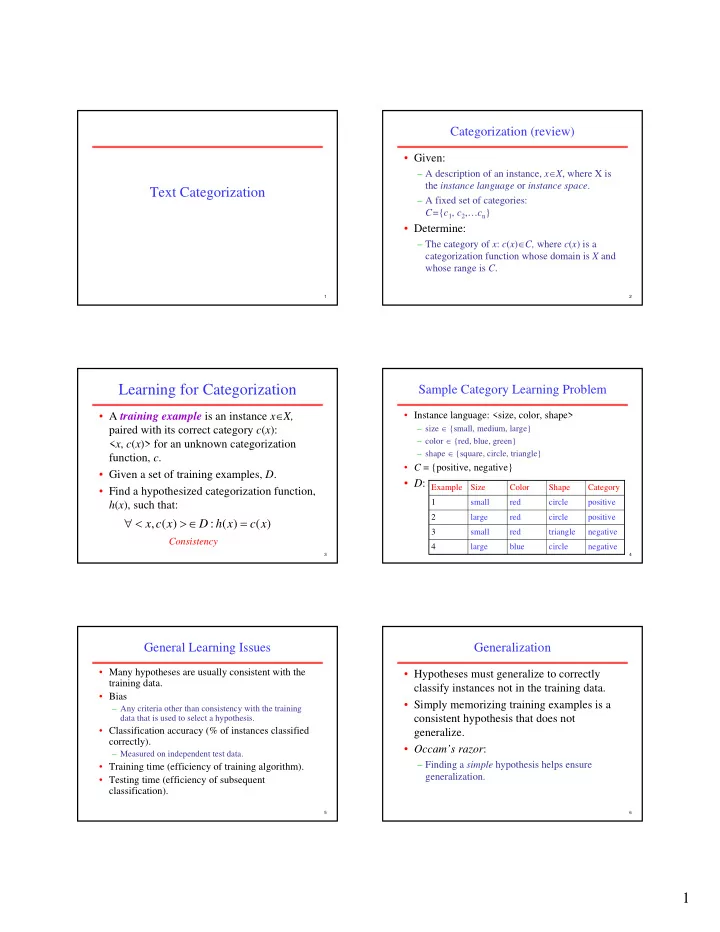

Text Categorization
• Assigning documents to a fixed set of categories, e.g.
• Web pages
  – Categories in search (see microsoft.com)
  – Yahoo-like classification
• Newsgroup messages
  – Recommending
  – Spam filtering
• News articles
  – Personalized newspaper
• Email messages
  – Routing
  – Prioritizing
  – Folderizing
  – Spam filtering

Learning for Text Categorization
• Hard to construct text categorization functions.
• Learning algorithms:
  – Bayesian (naïve)
  – Neural network
  – Relevance Feedback (Rocchio)
  – Rule based (C4.5, Ripper, Slipper)
  – Nearest Neighbor (case based)
  – Support Vector Machines (SVM)

Using Relevance Feedback (Rocchio)
• Use standard TF/IDF weighted vectors to represent text documents (normalized by maximum term frequency).
• For each category, compute a prototype vector by summing the vectors of the training documents in the category.
• Assign test documents to the category with the closest prototype vector based on cosine similarity.

Rocchio Text Categorization Algorithm (Training)
Assume the set of categories is $\{c_1, c_2, \ldots, c_n\}$
For $i$ from 1 to $n$ let $p_i = \langle 0, 0, \ldots, 0 \rangle$ (init. prototype vectors)
For each training example $\langle x, c(x) \rangle \in D$
  Let $d$ be the frequency-normalized TF/IDF term vector for doc $x$
  Let $i = j$ such that $c_j = c(x)$
  (sum all the document vectors in $c_i$ to get $p_i$)
  Let $p_i = p_i + d$

Rocchio Text Categorization Algorithm (Test)
Given test document $x$
Let $d$ be the TF/IDF weighted term vector for $x$
Let $m = -2$ (init. maximum cosSim)
For $i$ from 1 to $n$: (compute similarity to prototype vector)
  Let $s = \mathrm{cosSim}(d, p_i)$
  if $s > m$
    let $m = s$
    let $r = c_i$ (update most similar class prototype)
Return class $r$

[Figure: Illustration of Rocchio Text Categorization]
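The Rocchio training and test procedures above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: documents are assumed to arrive already converted to sparse TF/IDF vectors (plain dicts from term to weight), the function names `rocchio_train`, `rocchio_classify`, and `cos_sim` are hypothetical, and the toy weights are made up for illustration.

```python
import math
from collections import defaultdict


def cos_sim(a, b):
    """Cosine similarity of two sparse vectors stored as {term: weight} dicts."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the shorter vector
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rocchio_train(examples):
    """Sum the document vectors of each category into a prototype vector."""
    prototypes = defaultdict(lambda: defaultdict(float))
    for d, category in examples:
        for term, weight in d.items():
            prototypes[category][term] += weight
    return {c: dict(p) for c, p in prototypes.items()}


def rocchio_classify(d, prototypes):
    """Return the category whose prototype is most cosine-similar to d."""
    best_sim, best_class = -2.0, None  # -2 is below any possible cosine value
    for category, p in prototypes.items():
        s = cos_sim(d, p)
        if s > best_sim:
            best_sim, best_class = s, category
    return best_class


# Toy training set with made-up weights (assumption, not from the slides).
train = [
    ({"ball": 1.0, "team": 0.5}, "sports"),
    ({"team": 1.0, "score": 0.8}, "sports"),
    ({"vote": 1.0, "senate": 0.7}, "politics"),
    ({"vote": 0.6, "election": 1.0}, "politics"),
]
prototypes = rocchio_train(train)
print(rocchio_classify({"team": 1.0, "ball": 0.3}, prototypes))
```

Starting the running maximum at -2 mirrors the pseudocode: cosine similarity never falls below -1, so the first prototype always replaces the initial value.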

Rocchio Properties
• Does not guarantee a consistent hypothesis.
• Forms a simple generalization of the examples in each class (a prototype).
• Prototype vector does not need to be averaged or otherwise normalized for length since cosine similarity is insensitive to vector length.
• Classification is based on similarity to class prototypes.

Rocchio Anomaly
• Prototype models have problems with polymorphic (disjunctive) categories.

Rocchio Time Complexity
• Note: The time to add two sparse vectors is proportional to the minimum number of non-zero entries in the two vectors.
• Training Time: $O(|D|(L_d + |V_d|)) = O(|D| L_d)$
  where $L_d$ is the average length of a document in $D$ and $|V_d|$ is the average vocabulary size for a document in $D$.
• Test Time: $O(L_t + |C||V_t|)$
  where $L_t$ is the average length of a test document and $|V_t|$ is the average vocabulary size for a test document.
  – Assumes lengths of $p_i$ vectors are computed and stored during training, allowing $\mathrm{cosSim}(d, p_i)$ to be computed in time proportional to the number of non-zero entries in $d$ (i.e. $|V_t|$).

Nearest-Neighbor Learning Algorithm
• Learning is just storing the representations of the training examples in $D$.
• Testing instance $x$:
  – Compute similarity between $x$ and all examples in $D$.
  – Assign $x$ the category of the most similar example in $D$.
• Does not explicitly compute a generalization or category prototypes.
• Also called:
  – Case-based
  – Memory-based
  – Lazy learning

K Nearest-Neighbor
• Using only the closest example to determine categorization is subject to errors due to:
  – A single atypical example.
  – Noise (i.e. error) in the category label of a single training example.
• More robust alternative is to find the $k$ most-similar examples and return the majority category of these $k$ examples.
• Value of $k$ is typically odd to avoid ties; 3 and 5 are most common.

Similarity Metrics
• Nearest neighbor method depends on a similarity (or distance) metric.
• Simplest for continuous $m$-dimensional instance space is Euclidean distance.
• Simplest for $m$-dimensional binary instance space is Hamming distance (number of feature values that differ).
• For text, cosine similarity of TF-IDF weighted vectors is typically most effective.
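The three metrics named above are short enough to write out, and doing so also makes the Rocchio property about vector length concrete: scaling a prototype leaves its cosine similarity to a document unchanged. The sketch assumes dense vectors given as equal-length tuples; the function names and the example vectors are hypothetical.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hamming_distance(a, b):
    """Number of positions at which two equal-length vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def cos_sim(a, b):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


doc = (1.0, 2.0, 0.0)
prototype = (2.0, 1.0, 1.0)
scaled = tuple(10 * w for w in prototype)  # same direction, ten times longer

print(euclidean_distance((0, 0), (3, 4)))
print(hamming_distance((1, 0, 1, 1), (1, 1, 0, 1)))
# Cosine similarity ignores length, so a summed (unnormalized) prototype
# ranks documents the same way an averaged one would.
print(math.isclose(cos_sim(doc, prototype), cos_sim(doc, scaled)))
```

Euclidean distance, by contrast, does change when a vector is scaled, which is one reason it is less natural for comparing documents of different lengths.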

[Figure: 3 Nearest Neighbor Illustration (Euclidean Distance)]

K Nearest Neighbor for Text
Training:
For each training example $\langle x, c(x) \rangle \in D$
  Compute the corresponding TF-IDF vector, $d_x$, for document $x$
Test instance $y$:
Compute TF-IDF vector $d$ for document $y$
For each $\langle x, c(x) \rangle \in D$
  Let $s_x = \mathrm{cosSim}(d, d_x)$
Sort examples, $x$, in $D$ by decreasing value of $s_x$
Let $N$ be the first $k$ examples in $D$ (get most similar neighbors)
Return the majority class of examples in $N$

[Figure: Illustration of 3 Nearest Neighbor for Text]

3NN on Rocchio Anomaly
• Nearest Neighbor handles polymorphic categories better.

Nearest Neighbor Time Complexity
• Training Time: $O(|D| L_d)$ to compose TF-IDF vectors.
• Testing Time: $O(L_t + |D||V_t|)$ to compare to all training vectors.
  – Assumes lengths of $d_x$ vectors are computed and stored during training, allowing $\mathrm{cosSim}(d, d_x)$ to be computed in time proportional to the number of non-zero entries in $d$ (i.e. $|V_t|$).
• Testing time can be high for large training sets.

Nearest Neighbor with Inverted Index
• Determining $k$ nearest neighbors is the same as determining the $k$ best retrievals using the test document as a query to a database of training documents.
• Use standard VSR inverted index methods to find the $k$ nearest neighbors.
• Testing Time: $O(B|V_t|)$
  where $B$ is the average number of training documents in which a test-document word appears.
• Therefore, overall classification is $O(L_t + B|V_t|)$
  – Typically $B \ll |D|$
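The inverted-index idea can be sketched as follows: training stores postings lists and vector lengths, and testing touches only the training documents that share at least one term with the test document. This is a rough sketch, not a full vector-space retrieval engine. It assumes documents are already sparse TF-IDF dicts, the names `build_index` and `knn_classify` are hypothetical, and the toy weights are made up.

```python
import math
from collections import Counter, defaultdict


def build_index(examples):
    """Training: store postings (term -> [(doc_id, weight)]), vector norms, and labels."""
    index = defaultdict(list)
    norms, labels = [], []
    for doc_id, (d, category) in enumerate(examples):
        labels.append(category)
        norms.append(math.sqrt(sum(w * w for w in d.values())))
        for term, weight in d.items():
            index[term].append((doc_id, weight))
    return index, norms, labels


def knn_classify(d, index, norms, labels, k=3):
    """Return the majority class of the k training docs most cosine-similar to d."""
    q_norm = math.sqrt(sum(w * w for w in d.values()))
    if q_norm == 0:
        return None
    # Accumulate dot products only for documents sharing a term with d.
    dots = defaultdict(float)
    for term, weight in d.items():
        for doc_id, doc_weight in index.get(term, ()):
            dots[doc_id] += weight * doc_weight
    sims = sorted(
        ((dot / (q_norm * norms[i]), i) for i, dot in dots.items() if norms[i] > 0),
        reverse=True,
    )
    neighbors = [labels[i] for _, i in sims[:k]]
    if not neighbors:
        return None
    return Counter(neighbors).most_common(1)[0][0]


# Toy training set with made-up weights (assumption, not from the slides).
train = [
    ({"ball": 1.0, "team": 0.5}, "sports"),
    ({"team": 1.0, "score": 0.8}, "sports"),
    ({"goal": 1.0, "team": 0.4}, "sports"),
    ({"vote": 1.0, "senate": 0.7}, "politics"),
    ({"vote": 0.6, "election": 1.0}, "politics"),
]
index, norms, labels = build_index(train)
print(knn_classify({"team": 1.0, "vote": 0.2}, index, norms, labels, k=3))
```

Training documents with no term in common with the test document have cosine similarity 0 and never enter the candidate list, which is where the saving from $|D|$ to $B$ comes from.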
