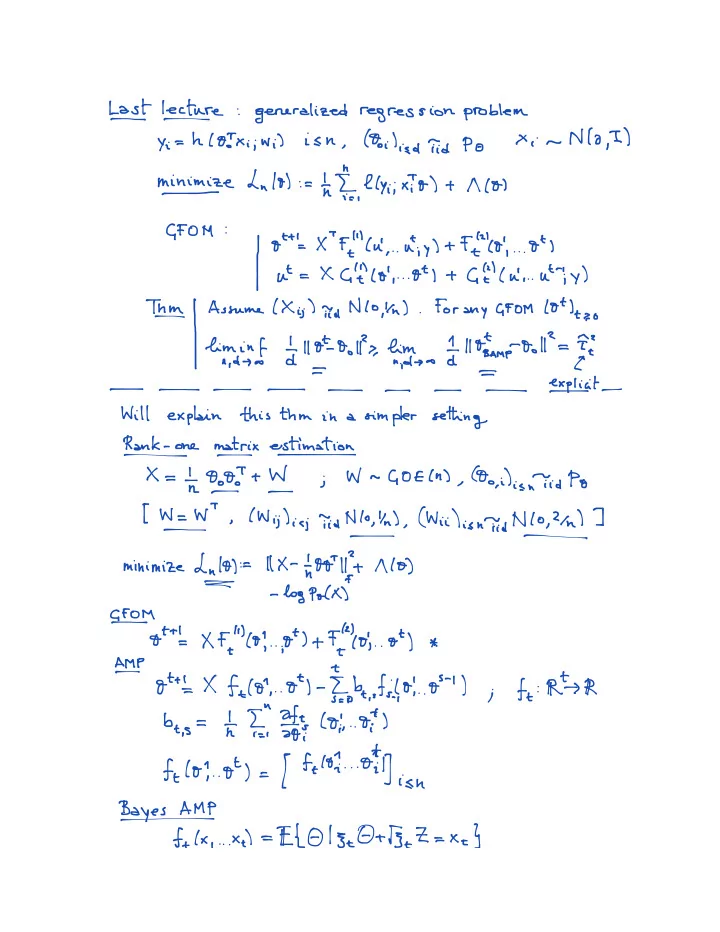

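Last lecture: the generalized regression problem
$$y_i = h(\langle \theta_0, x_i\rangle, w_i), \qquad i \le n, \qquad \theta_{0,j} \sim_{iid} P_0, \qquad x_i \in \mathbb{R}^d,$$
with estimators such as the minimizer of $L_n(\theta) = \frac{1}{n}\sum_{i=1}^n \ell(y_i; \langle x_i, \theta\rangle) + \|\theta\|_1$.

GFOM (with $X \in \mathbb{R}^{n\times d}$ the matrix with rows $x_i$):
$$\theta^{t+1} = X^{\mathsf T} F_t^{(1)}(u^1,\ldots,u^t; y) + F_t^{(2)}(\theta^0,\ldots,\theta^t), \qquad u^t = X\, G_t^{(1)}(\theta^0,\ldots,\theta^t) + G_t^{(2)}(u^1,\ldots,u^{t-1}; y).$$
Assume $x_i \sim_{iid} N(0, I_d/n)$.

Theorem: for any GFOM $(\theta^t)_{t\ge 0}$, the asymptotic estimation error of $\theta^t$ is bounded below by that of Bayes AMP after $t$ iterations. We will explain this theorem in a simpler setting.

Rank-one matrix estimation:
$$X = \frac{\lambda}{n}\theta_0\theta_0^{\mathsf T} + W, \qquad W \sim \mathrm{GOE}(n), \qquad \theta_{0,i} \sim_{iid} P_0,$$
i.e. $W = W^{\mathsf T}$, $(W_{ij})_{i<j} \sim_{iid} N(0, 1/n)$, $W_{ii} \sim_{iid} N(0, 2/n)$. Example estimator: the minimizer $\hat\theta$ of a loss $L_n(\theta)$ that combines a fit to $X$ with the penalty $-\sum_{i=1}^n \log P_0(\theta_i)$.

GFOM: $\theta^{t+1} = X F_t(\theta^0,\ldots,\theta^t) + G_t(\theta^0,\ldots,\theta^t)$.

AMP: take $f_t:\mathbb{R}^{t+1}\to\mathbb{R}$, $f_t(x_0,\ldots,x_t)$, applied row-wise, $f_t(\theta^0,\ldots,\theta^t)_i = f_t(\theta^0_i,\ldots,\theta^t_i)$, and iterate
$$\theta^{t+1} = X f_t(\theta^0,\ldots,\theta^t) - \sum_{s=1}^{t} b_{t,s}\, f_{s-1}(\theta^0,\ldots,\theta^{s-1}), \qquad b_{t,s} = \frac{1}{n}\sum_{i=1}^n \frac{\partial f_t}{\partial x_s}(\theta^0_i,\ldots,\theta^t_i).$$

Bayes AMP: $f_t(\theta^0,\ldots,\theta^t) = \mathbb{E}[\Theta_0 \mid \mu_t\Theta_0 + \sigma_t Z = \theta^t]$, with $\Theta_0 \sim P_0$ independent of $Z \sim N(0,1)$, where $\mu_t$ and $\sigma_t^2 = Q_{tt}$ are given by state evolution.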
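
A minimal simulation sketch of the rank-one model and of AMP. For illustration only, it assumes a Rademacher prior $P_0$ (uniform on $\{-1,+1\}$), denoisers $f_t(x) = \tanh(\lambda x)$ that depend only on the current iterate, and an initialization $\theta^0 = 0.3\,\theta_0 + g$ with $g$ standard Gaussian and independent of $W$. With such $f_t$ only $b_{t,t}$ is nonzero, so the Onsager term reduces to $b_{t,t} f_{t-1}(\theta^{t-1})$. The helper names are hypothetical.

```python
import numpy as np


def sample_spiked_goe(n, lam, rng):
    """Draw X = (lam/n) theta0 theta0^T + W with W ~ GOE(n).

    Assumption for illustration: P_0 is uniform on {-1, +1}.
    GOE(n): W symmetric, W_ij ~ N(0, 1/n) for i < j, W_ii ~ N(0, 2/n).
    """
    theta0 = rng.choice([-1.0, 1.0], size=n)
    G = rng.normal(size=(n, n)) / np.sqrt(n)
    W = (G + G.T) / np.sqrt(2.0)  # off-diagonal variance 1/n, diagonal variance 2/n
    X = (lam / n) * np.outer(theta0, theta0) + W
    return X, theta0, W


def amp(X, theta_init, f, df, n_iter):
    """AMP with entrywise denoisers f(t, x) that use only the current iterate.

    theta^{t+1} = X f_t(theta^t) - b_t f_{t-1}(theta^{t-1}),
    b_t = (1/n) sum_i f_t'(theta^t_i)  (the only nonzero b_{t,s} is s = t).
    """
    theta = theta_init
    f_prev = np.zeros_like(theta)  # f_{-1} := 0, so theta^1 = X f_0(theta^0)
    iterates = [theta]
    for t in range(n_iter):
        ft = f(t, theta)
        bt = np.mean(df(t, theta))
        # The right-hand side is evaluated before f_prev is overwritten.
        theta, f_prev = X @ ft - bt * f_prev, ft
        iterates.append(theta)
    return iterates


lam, n = 2.0, 1000
rng = np.random.default_rng(0)
X, theta0, W = sample_spiked_goe(n, lam, rng)


def f(t, x):
    # Hypothetical denoiser f_t(x) = tanh(lam * x).
    return np.tanh(lam * x)


def df(t, x):
    # Its derivative, used for the Onsager coefficient.
    return lam * (1.0 - np.tanh(lam * x) ** 2)


# Hypothetical initialization: independent of W, weakly correlated with theta0.
theta_init = 0.3 * theta0 + rng.normal(size=n)
iters = amp(X, theta_init, f, df, n_iter=20)

overlap = abs(iters[-1] @ theta0) / (np.linalg.norm(iters[-1]) * np.linalg.norm(theta0))
print(f"normalized overlap after 20 iterations: {overlap:.3f}")
```

Setting `bt` to zero turns the loop into a GFOM of the form $\theta^{t+1} = X F_t(\theta^t)$ without the Onsager correction.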

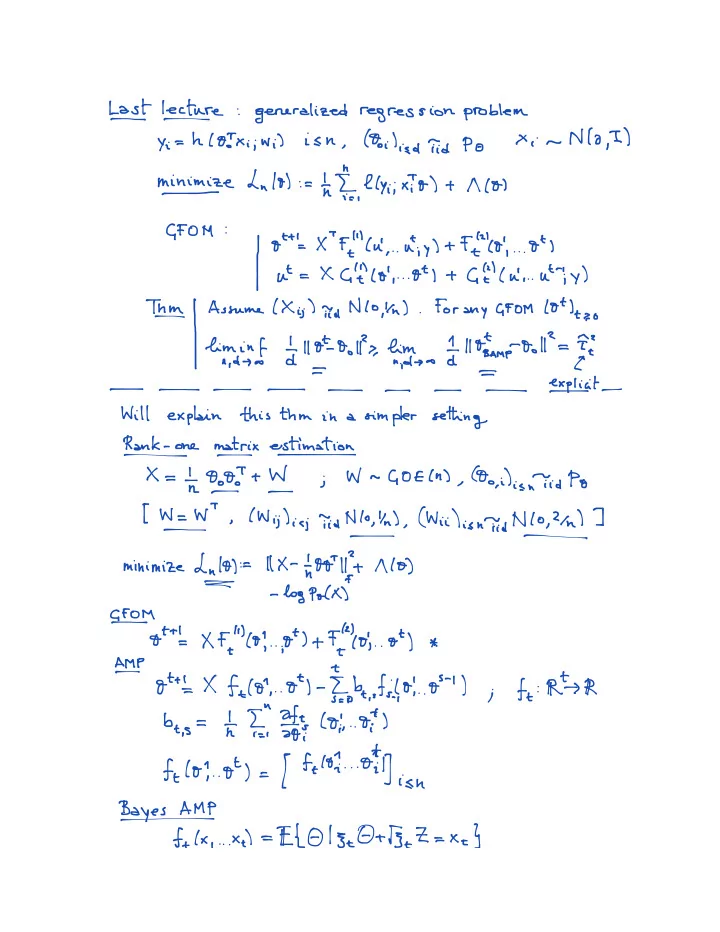

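For denoisers that use only the current iterate, such as Bayes AMP, state evolution reduces to the scalar recursion
$$\mu_{t+1} = \lambda\,\mathbb{E}\big[\Theta_0 f_t(\mu_t\Theta_0 + \sigma_t Z)\big], \qquad \sigma_{t+1}^2 = \mathbb{E}\big[f_t(\mu_t\Theta_0 + \sigma_t Z)^2\big], \qquad Z \sim N(0,1) \text{ independent of } \Theta_0 \sim P_0.$$

Optimality: Bayes AMP is optimal among GFOMs, i.e. no GFOM run for $t$ iterations has asymptotically smaller estimation error than Bayes AMP after $t$ iterations.

Reduction. Lemma: for every GFOM $(\theta^t)_{t\ge 0}$ there exist an AMP algorithm with nonlinearities $(f_t)_{t\ge 0}$ and iterates $(\vartheta^t)_{t\ge 0}$, and functions $\varphi_t:\mathbb{R}^{t+1}\to\mathbb{R}$, such that $\theta^t_i = \varphi_t(\vartheta^0_i,\ldots,\vartheta^t_i)$ for all $i$. In short: GFOM = post-processing of AMP.

Analysis of AMP. Theorem (state evolution): assume the $f_t$ are Lipschitz, $\theta^0$ is independent of $W$, and the empirical law of $(\theta_{0,i}, \theta^0_i)_{i\le n}$ converges in $W_2$ to the law of $(\Theta_0, \Theta^0)$ with $\Theta_0 \sim P_0$. Then for every fixed $t$, the empirical law of $(\theta_{0,i}, \theta^0_i, \theta^1_i, \ldots, \theta^t_i)_{i\le n}$ converges in $W_2$ to the law of
$$(\Theta_0, \Theta^0, \mu_1\Theta_0 + Z_1, \ldots, \mu_t\Theta_0 + Z_t),$$
where $(Z_1,\ldots,Z_t) \sim N(0, Q)$ is independent of $(\Theta_0, \Theta^0)$ and, writing $\bar f_s = f_s(\Theta^0, \mu_1\Theta_0 + Z_1, \ldots, \mu_s\Theta_0 + Z_s)$,
$$\mu_{t+1} = \lambda\,\mathbb{E}[\Theta_0 \bar f_t], \qquad Q_{s+1,t+1} = \mathbb{E}[\bar f_s \bar f_t].$$
Consequently $\frac{1}{n}\sum_{i=1}^n \psi(\theta_{0,i}, \theta^0_i, \ldots, \theta^t_i) \to \mathbb{E}\,\psi(\Theta_0, \Theta^0, \mu_1\Theta_0 + Z_1, \ldots, \mu_t\Theta_0 + Z_t)$ for every pseudo-Lipschitz $\psi$.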
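
A sketch of the scalar recursion $(\mu_t, \sigma_t)$ evaluated numerically, with Gaussian expectations computed by Gauss-Hermite quadrature (`numpy.polynomial.hermite_e.hermegauss`, weight $e^{-z^2/2}$). Assumptions for illustration: the denoiser uses only the current iterate, $P_0$ is a discrete prior (Rademacher by default), and $\theta^0$ itself has the form $\mu_0\Theta_0 + \sigma_0 Z$. The helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Assumed prior P_0 for illustration: uniform on {-1, +1}, as (value, probability) pairs.
RADEMACHER = ((-1.0, 0.5), (1.0, 0.5))


def state_evolution(f, mu0, sigma0, lam, n_iter, prior=RADEMACHER, deg=100):
    """Scalar state evolution for AMP whose denoiser uses only the current iterate.

    Tracks theta^t ~ mu_t Theta0 + sigma_t Z (sigma_t^2 = Q_tt) via
        mu_{t+1}      = lam * E[Theta0 f_t(mu_t Theta0 + sigma_t Z)],
        sigma_{t+1}^2 = E[f_t(mu_t Theta0 + sigma_t Z)^2],
    with Theta0 ~ prior independent of Z ~ N(0, 1). The denoiser is called as
    f(x, mu, sigma) so that it may depend on the current (mu_t, sigma_t).
    """
    z, w = hermegauss(deg)           # nodes/weights for the weight exp(-z^2 / 2)
    w = w / np.sqrt(2.0 * np.pi)     # now sum(w * g(z)) approximates E[g(Z)]
    mus, sigmas = [mu0], [sigma0]
    for _ in range(n_iter):
        mu, sigma = mus[-1], sigmas[-1]
        m1 = m2 = 0.0
        for theta, p in prior:
            vals = f(mu * theta + sigma * z, mu, sigma)
            m1 += p * theta * np.dot(w, vals)
            m2 += p * np.dot(w, vals ** 2)
        mus.append(lam * m1)
        sigmas.append(np.sqrt(m2))
    return np.array(mus), np.array(sigmas)


def linear(x, mu, sigma):
    # f_t(x) = x: state evolution is then exactly mu -> lam * mu, sigma^2 -> mu^2 + sigma^2.
    return x


def bayes_rademacher(x, mu, sigma):
    # E[Theta0 | mu Theta0 + sigma Z = x] for Theta0 uniform on {-1, +1}.
    return np.tanh(mu * x / sigma ** 2)


mus_lin, sig_lin = state_evolution(linear, 1.0, 1.0, lam=2.0, n_iter=2)
mus_b, sig_b = state_evolution(bayes_rademacher, 0.3, 1.0, lam=2.0, n_iter=15)
print("Bayes AMP correlation mu_t / sqrt(mu_t^2 + sigma_t^2):",
      mus_b / np.sqrt(mus_b ** 2 + sig_b ** 2))
```

With the Bayes denoiser, the identity $\mathbb{E}[\Theta_0\,\mathbb{E}[\Theta_0\mid Y]] = \mathbb{E}[\mathbb{E}[\Theta_0\mid Y]^2]$ gives $\mu_{t+1} = \lambda\sigma_{t+1}^2$, so the recursion is effectively one-dimensional.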

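The vectors $f_t(\theta^0,\ldots,\theta^t)$ and $W$ are dependent, but the iteration behaves as if they were independent.

Interesting case: [illegible].

Lower bound via a tree. Let $T_n = (V_n, E_n)$ be the infinite tree of degree $n$, with $\theta_{0,i} \sim_{iid} P_0$ for $i \in V_n$ and, for each edge $(i,j) \in E_n$,
$$X_{ij} = \frac{\lambda}{n}\theta_{0,i}\theta_{0,j} + W_{ij}, \qquad W_{ij} \sim_{iid} N(0, 1/n).$$
Message passing:
$$u^{t+1}_{i\to j} = \sum_{k \in \partial i \setminus j} X_{ik}\, f_t(u^0_{k\to i}, \ldots, u^t_{k\to i}).$$
The message $u^t_{i\to j}$ depends only on the variables in the subtree $T_{i\to j}$ rooted at $i$ that does not contain $j$, so the messages entering $i$ come from disjoint subtrees and are independent. As $n \to \infty$, $(u^1_{i\to j}, \ldots, u^t_{i\to j})$ converges in distribution to $(\mu_1\theta_{0,i} + Z_1, \ldots, \mu_t\theta_{0,i} + Z_t)$ with $(Z_1,\ldots,Z_t) \sim N(0, Q)$: the same state evolution as AMP.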
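
The message-passing update can be sketched in a few lines of NumPy. Two simplifications, both assumptions made only for illustration: $f_t$ depends on the latest message $u^t_{k\to i}$ only, and the update runs on the complete graph on $n$ vertices (each vertex has $n-1$ neighbours, and there are cycles) rather than on the tree $T_n$. `mp_step` and `mp_step_loops` are hypothetical helpers; the loop version exists only to check the vectorized bookkeeping.

```python
import numpy as np


def mp_step(X, M, f):
    """One message-passing step on the complete graph (no self-loops).

    Convention: M[k, i] is the message u^t_{k->i}. Returns (M_new, u_node) with
        M_new[i, j] = sum_{k != i, j} X[i, k] * f(M[k, i])   (message u^{t+1}_{i->j}),
        u_node[i]   = sum_{k != i}    X[i, k] * f(M[k, i])   (uses every incoming message).
    Diagonal entries of M and M_new are unused; M_new's diagonal is set to 0.
    """
    A = X - np.diag(np.diag(X))     # drop X_ii: no edge from a vertex to itself
    AF = A * f(M).T                 # AF[i, k] = X[i, k] * f(M[k, i])
    u_node = AF.sum(axis=1)
    M_new = u_node[:, None] - AF    # subtract the k = j term to exclude the recipient
    np.fill_diagonal(M_new, 0.0)
    return M_new, u_node


def mp_step_loops(X, M, f):
    """Reference implementation with explicit loops, used to check mp_step."""
    n = X.shape[0]
    M_new = np.zeros_like(M)
    for i in range(n):
        for j in range(n):
            if i != j:
                M_new[i, j] = sum(X[i, k] * f(M[k, i]) for k in range(n) if k not in (i, j))
    return M_new


rng = np.random.default_rng(1)
n = 6
G = rng.normal(size=(n, n)) / np.sqrt(n)
X = (G + G.T) / np.sqrt(2.0)        # symmetric Gaussian couplings
M0 = rng.normal(size=(n, n))        # arbitrary initial messages
np.fill_diagonal(M0, 0.0)
M1, u1 = mp_step(X, M0, np.tanh)
M1_ref = mp_step_loops(X, M0, np.tanh)
```

The vectorized form uses $\sum_{k\ne i,j} = \sum_{k\ne i} - (\text{term } k=j)$, which costs $O(n^2)$ per step instead of the $O(n^3)$ of the loops.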

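Optimality of Bayes AMP. It suffices to prove the lower bound for estimation on $T_n$ by a local algorithm. Message passing is local: $u^t_{i\to j}$ is a function of $(X_e)_{e \in B_i(t)}$ only, where $B_i(t)$ is the ball of radius $t$ around $i$. The optimal local estimator is
$$\hat\theta_i = \mathbb{E}\big[\theta_{0,i} \mid (X_e)_{e \in B_i(t)}\big],$$
and it can be implemented as a message passing algorithm: Belief Propagation.

Why should we expect state evolution? Writing $f_s = f_s(\theta^0,\ldots,\theta^s)$,
$$\theta^{t+1} = W f_t + \frac{\lambda}{n}\langle \theta_0, f_t\rangle\,\theta_0 - \sum_{s=1}^{t} b_{t,s} f_{s-1}.$$
Dropping the rank-one term ($\lambda = 0$):
$$\theta^{t+1} = W f_t - \sum_{s=1}^{t} b_{t,s} f_{s-1}.$$
Conditioning. Condition on $W f_0 = x^1, \ldots, W f_{t-1} = x^t$, i.e. $W F_t = X_t$ with $F_t = [f_0|\cdots|f_{t-1}]$ and $X_t = [x^1|\cdots|x^t]$ in $\mathbb{R}^{n\times t}$. Let $P_t$ be the orthogonal projector onto the column span of $F_t$ (rank at most $t \ll n$). Then
$$W f_t \overset{d}{=} \mathbb{E}[W \mid W F_t = X_t]\, f_t + P_t^\perp \tilde W P_t^\perp f_t,$$
with $\tilde W$ an independent copy of $W$. The first term is determined by the past; given the past, the second is a fresh Gaussian vector.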
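
A minimal numerical check of the algebra behind the conditioning step, assuming $F_t$ has full column rank. For symmetric $W$ and $X_t = W F_t$, with $F_t^+ = (F_t^{\mathsf T}F_t)^{-1}F_t^{\mathsf T}$, one has exactly $W = E_t + P_t^\perp W P_t^\perp$ where $E_t = X_t F_t^+ + (F_t^+)^{\mathsf T} X_t^{\mathsf T} - (F_t^+)^{\mathsf T} F_t^{\mathsf T} X_t F_t^+$ (this is $WP_t + P_tW - P_tWP_t$). $E_t$ depends on $W$ only through $X_t$, and for GOE $W$ and fixed $F_t$ it equals $\mathbb{E}[W \mid WF_t = X_t]$. The code checks only the algebraic identity, not the distributional statement. The random `F` is a hypothetical stand-in for $[f_0|\cdots|f_{t-1}]$, which in AMP would depend on $W$.

```python
import numpy as np


def conditional_split(W, F):
    """Split symmetric W into E (a function of Xt = W F only) plus P_perp W P_perp.

    Assumes F (n x t) has full column rank. With F_pinv = (F^T F)^{-1} F^T and
    P = F F_pinv the orthogonal projector onto span(F):
        E = Xt F_pinv + F_pinv^T Xt^T - F_pinv^T (F^T Xt) F_pinv   (= WP + PW - PWP).
    """
    n = W.shape[0]
    Xt = W @ F
    F_pinv = np.linalg.solve(F.T @ F, F.T)    # (F^T F)^{-1} F^T without an explicit inverse
    E = Xt @ F_pinv + F_pinv.T @ Xt.T - F_pinv.T @ (F.T @ Xt) @ F_pinv
    P_perp = np.eye(n) - F @ F_pinv
    return E, P_perp @ W @ P_perp, P_perp, Xt


rng = np.random.default_rng(2)
n, t = 50, 3
G = rng.normal(size=(n, n)) / np.sqrt(n)
W = (G + G.T) / np.sqrt(2.0)    # GOE(n) sample
F = rng.normal(size=(n, t))     # hypothetical stand-in for [f_0 | ... | f_{t-1}]
E, R, P_perp, Xt = conditional_split(W, F)
```

Since $P_t^\perp F_t = 0$, the remainder $P_t^\perp W P_t^\perp$ does not affect $WF_t$, so $E_t F_t = X_t$: the constraint is carried entirely by $E_t$.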
