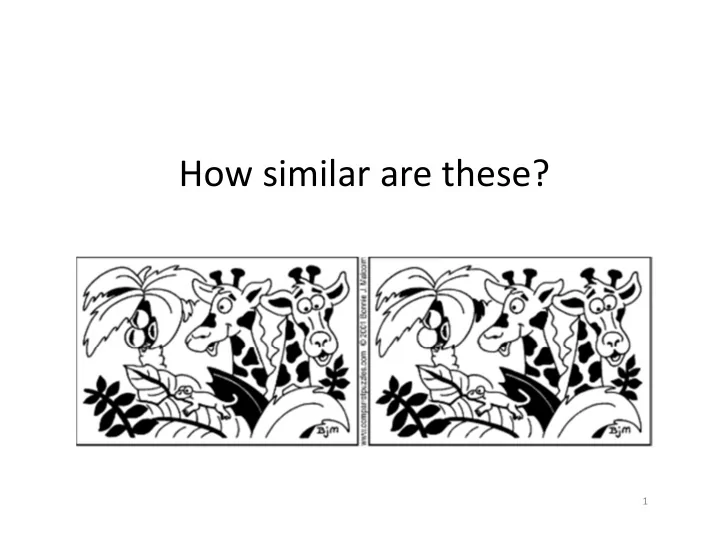

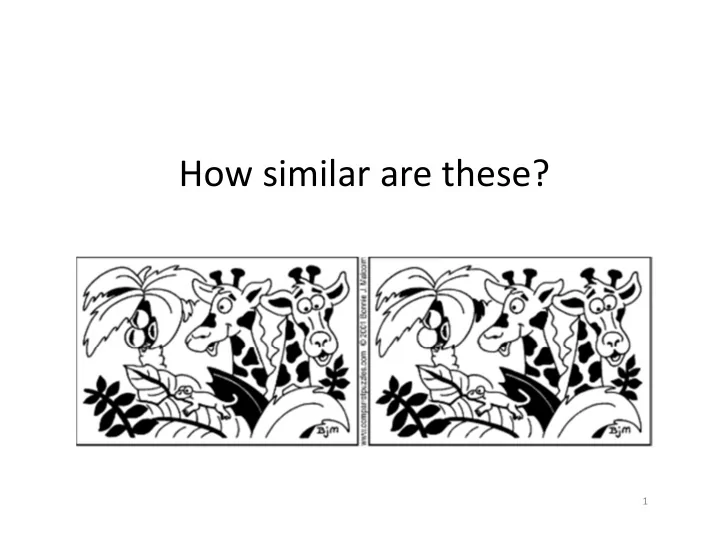

How similar are these?

What’s the Problem?
• Finding similar items with respect to some distance metric
• Two variants of the problem:
  – Offline: extract all similar pairs of objects from a large collection
  – Online: is this object similar to something I’ve seen before?

Application: Plagiarism Detection

Application: Content-based Search

Other Applications
• Near-duplicate detection of webpages
  – Mirror pages
  – Similar news articles
• Recommender systems
  – Find users with similar tastes in movies, etc.
  – Find products with similar customer sets
• Sequence/tree alignment
  – Find similar DNA or protein sequences
• …

Finding similar items: Three Components
• How to quantify similarity?
  – Distance measures
    • Euclidean distance: based on the locations of points in space, e.g., the $L_r$-norm
    • Non-Euclidean distance: based on properties of points, e.g., Jaccard, cosine, edit distance
• Compute a representation
  – Shingling, tf.idf, etc.
• Space- and time-efficient algorithms
  – Transformation: Minhash
  – All-pair comparison: Locality-sensitive hashing
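The distance measures named above can be sketched in a few lines of Python. This is an illustration, not code from the slides: cosine distance is taken here as the angle between two vectors (some texts use 1 − cosine similarity instead), and edit distance is the Levenshtein variant with insertions, deletions, and substitutions (some texts allow only insertions and deletions).

```python
import math

def lr_distance(x, y, r=2):
    """L_r-norm distance (sum_i |x_i - y_i|^r)^(1/r); r=2 is Euclidean, r=1 is Manhattan."""
    return sum(abs(a - b) ** r for a, b in zip(x, y)) ** (1.0 / r)

def cosine_distance(x, y):
    """Angle (in radians) between vectors x and y; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def edit_distance(s, t):
    """Levenshtein distance: fewest insertions, deletions, substitutions turning s into t."""
    prev = list(range(len(t) + 1))  # distances from "" to each prefix of t
    for i, cs in enumerate(s, 1):
        cur = [i]  # distance from s[:i] to ""
        for j, ct in enumerate(t, 1):
            cur.append(min(prev[j] + 1,                # delete cs
                           cur[j - 1] + 1,             # insert ct
                           prev[j - 1] + (cs != ct)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

print(lr_distance((0, 0), (3, 4)))         # 5.0
print(lr_distance((0, 0), (3, 4), r=1))    # 7.0
print(edit_distance("kitten", "sitting"))  # 3
```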

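(Axioms of) Distance Metrics
d is a distance measure between points x and y if it satisfies:
1. Non-negativity: $d(x, y) \ge 0$
2. Identity: $d(x, y) = 0$ if and only if $x = y$
3. Symmetry: $d(x, y) = d(y, x)$
4. Triangle inequality: $d(x, y) \le d(x, z) + d(z, y)$ for any point $z$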
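As a sanity check of the four axioms, the sketch below brute-forces them for Jaccard distance over every subset of a small universe. It is an illustration added here, not a proof; the convention that two empty sets are at distance 0 is an assumption. The squared difference $(x - y)^2$ on numbers serves as a counterexample that fails the triangle inequality.

```python
from fractions import Fraction
from itertools import combinations, product

def jaccard_distance(a, b):
    """Jaccard distance 1 - |A ∩ B| / |A ∪ B|, computed with exact fractions.

    Assumed convention: the distance between two empty sets is 0."""
    union = a | b
    if not union:
        return Fraction(0)
    return 1 - Fraction(len(a & b), len(union))

def all_subsets(universe):
    """Every subset of `universe`, as frozensets."""
    items = list(universe)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

def satisfies_axioms(d, points):
    """Brute-force check of the four distance axioms on a finite set of points."""
    pairs = list(product(points, repeat=2))
    return (
        all(d(x, y) >= 0 for x, y in pairs)                    # 1. non-negativity
        and all((d(x, y) == 0) == (x == y) for x, y in pairs)  # 2. identity
        and all(d(x, y) == d(y, x) for x, y in pairs)          # 3. symmetry
        and all(d(x, y) <= d(x, z) + d(z, y)                   # 4. triangle inequality
                for x, y, z in product(points, repeat=3))
    )

print(satisfies_axioms(jaccard_distance, all_subsets("abcd")))  # True
print(satisfies_axioms(lambda x, y: (x - y) ** 2, [0, 1, 2]))   # False: 4 > 1 + 1
```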

Problem: Finding Similar Documents
• Given N text documents, find pairs that are “near duplicates”
  – Find the similarity between each pair (figure: an N × N grid over Doc 1 to N, where entry (i, j) is the degree of similarity between doc i and doc j)
  – For large N, this can be very compute-intensive
• Can we avoid all-pair comparisons?
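The brute-force approach that this slide wants to avoid can be sketched as follows. For illustration only, each document is reduced to its set of words, and a pair counts as a near duplicate when its Jaccard similarity reaches a hypothetical `threshold`; the function names are likewise hypothetical. The pair loop performs exactly N(N − 1)/2 comparisons.

```python
from itertools import combinations

def jaccard(a, b):
    """Jaccard similarity |A ∩ B| / |A ∪ B| of two sets (1.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def naive_similar_pairs(docs, threshold):
    """Compare every pair of documents: N(N-1)/2 similarity computations."""
    sets = [set(doc.split()) for doc in docs]  # naive bag-of-words feature
    return [(i, j) for i, j in combinations(range(len(docs)), 2)
            if jaccard(sets[i], sets[j]) >= threshold]

docs = ["the cat sat on the mat", "the cat sat on a mat", "big data analytics"]
print(naive_similar_pairs(docs, threshold=0.5))  # [(0, 1)]
```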

Comparing two documents …
• Naïve methods
  – Feature: treat each document as a set/bag of words
  – Distance: Jaccard distance (or cosine distance or Hamming distance)

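Distance: Jaccard
• Given two sets A, B
• Jaccard similarity: $\mathrm{sim}(A, B) = \frac{|A \cap B|}{|A \cup B|}$
• Jaccard distance: $d(A, B) = 1 - \mathrm{sim}(A, B) = 1 - \frac{|A \cap B|}{|A \cup B|}$
• E.g., A = {I, like, CS5344}; B = {CS5344, is, not, for, me}: $|A \cap B| = 1$ (CS5344) and $|A \cup B| = 7$, so $d(A, B) = 1 - 1/7 = 6/7$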
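The worked example can be reproduced with exact fractions. This minimal sketch assumes the union of the two sets is non-empty; the function names are chosen here for illustration.

```python
from fractions import Fraction

def jaccard_similarity(a, b):
    """|A ∩ B| / |A ∪ B| as an exact fraction; assumes A ∪ B is non-empty."""
    return Fraction(len(a & b), len(a | b))

def jaccard_distance(a, b):
    """1 - Jaccard similarity."""
    return 1 - jaccard_similarity(a, b)

A = {"I", "like", "CS5344"}
B = {"CS5344", "is", "not", "for", "me"}
print(jaccard_distance(A, B))  # 6/7
```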

Comparing two documents …
• Naïve methods
  – Feature: treat each document as a set/bag of words
  – Distance: Jaccard distance or cosine distance or Hamming distance
  – Textual rather than semantic similarity
    • Documents with many common words are more similar, even if the text appears in a different order
  – What good is this?
    • Fast filtering before the slower refinement
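A minimal filter-and-refine sketch. The cheap filter is the order-insensitive word-set Jaccard similarity; for the slower, order-sensitive refinement this sketch swaps in `difflib.SequenceMatcher.ratio()` from the Python standard library, which is an assumption made here, not a method named on the slides. Both thresholds are hypothetical.

```python
import difflib

def word_jaccard(x, y):
    """Order-insensitive filter: Jaccard similarity of the two word sets."""
    a, b = set(x.split()), set(y.split())
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def filter_and_refine(x, y, filter_threshold=0.8, refine_threshold=0.9):
    """Hypothetical two-stage check with illustrative thresholds."""
    # Cheap filter: discard pairs whose word sets differ too much.
    if word_jaccard(x, y) < filter_threshold:
        return False
    # Slower, order-sensitive refinement on the raw text.
    return difflib.SequenceMatcher(None, x, y).ratio() >= refine_threshold

s1, s2 = "the dog bit the man", "the man bit the dog"
print(word_jaccard(s1, s2))       # 1.0: same words, different order
print(filter_and_refine(s1, s2))  # False: the refinement notices the reordering
```

The filter alone would call these two sentences identical, which is exactly the "textual rather than semantic" limitation noted above; it is still useful because it cheaply rules out most unrelated pairs.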

Shingling: Account for the ordering of words …
• Instead of treating each word independently, we can consider a sequence of k words
  – More effective in terms of accuracy
• A k-shingle (or k-gram) for a document D is a sequence of k tokens that appears in D
  – Tokens can be characters, words, or some other feature/object, depending on the application
• E.g., k = 3 characters; D = “This is a test” (writing each space as _) gives rise to the 3-shingles Thi, his, is_, s_i, _is, is_, s_a, _a_, a_t, _te, tes, est. Since is_ occurs twice, the set has 11 elements: S(D) = {Thi, his, is_, s_i, _is, s_a, _a_, a_t, _te, tes, est}
• E.g., k = 3 words; we have S(D) = {{This is a}, {is a test}}
NOTE: Assume “characters” in our discussion
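Both kinds of shingling can be sketched directly. This is an illustrative helper, not code from the slides; spaces are written as `_` to match the slide notation, and word shingles are represented as tuples (an assumption, since sets of words would lose the order).

```python
def char_shingles(text, k):
    """Set of character k-shingles; spaces are written as '_' as on the slides."""
    s = text.replace(" ", "_")
    return {s[i:i + k] for i in range(len(s) - k + 1)}

def word_shingles(text, k):
    """Set of word k-shingles, each represented as a tuple of k words."""
    words = text.split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

print(len(char_shingles("This is a test", 3)))  # 11 (the repeated "is_" is kept once)
print(word_shingles("This is a test", 3))
```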

Shingles and Similarity
• Documents that are similar should have many shingles in common
• What if two documents differ by a word?
  – Affects only the k-shingles within distance k of the word
• What if we reorder paragraphs?
  – Affects only the 2k shingles that cross paragraph boundaries
• Example: k = 3
  – “The dog which chased the cat” vs “The dog that chased the cat”
  – The only 3-shingles replaced are g_w, _wh, whi, hic, ich, ch_, and h_c
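The example can be checked mechanically. This sketch writes spaces as `_`, as the slides do, and compares the two sets of character 3-shingles; the set differences show which shingles are lost and gained when “which” becomes “that”.

```python
def char_shingles(text, k):
    """Set of character k-shingles, with spaces written as '_'."""
    s = text.replace(" ", "_")
    return {s[i:i + k] for i in range(len(s) - k + 1)}

d1 = char_shingles("The dog which chased the cat", 3)
d2 = char_shingles("The dog that chased the cat", 3)
print(sorted(d1 - d2))              # ['_wh', 'ch_', 'g_w', 'h_c', 'hic', 'ich', 'whi']
print(sorted(d2 - d1))              # ['at_', 'g_t', 'hat', 't_c', 'tha']
print(len(d1 & d2) / len(d1 | d2))  # 0.6
```

The Jaccard similarity stays at 18/30 = 0.6 because the shingles far from the changed word are unaffected.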

Shingles
• We can represent document D as the set of its k-shingles
  – Distance metric: Jaccard distance
• What’s the effect of the value of k (in terms of characters)?
  – Recommended values of k:
    • 5 for small documents
    • 10 for large documents
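The effect of k shows up when comparing two unrelated sentences (hypothetical examples chosen for illustration). With k = 1, almost any two English texts share most of their characters, so their Jaccard similarity is misleadingly high; as k grows, only genuinely shared passages produce common shingles.

```python
def char_shingles(text, k):
    """Set of character k-shingles, with spaces written as '_'."""
    s = text.replace(" ", "_")
    return {s[i:i + k] for i in range(len(s) - k + 1)}

def jaccard(a, b):
    """Jaccard similarity of two non-empty sets."""
    return len(a & b) / len(a | b)

# Two hypothetical, unrelated sentences.
x = "big data analytics course"
y = "the cat sat on a mat"
for k in (1, 3, 5):
    print(k, round(jaccard(char_shingles(x, k), char_shingles(y, k)), 3))
```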

Shingles
• How about space overhead?
  – Each character can be represented as a byte (integer)
  – A k-shingle requires k bytes (integers)
• Can compress by hashing a k-shingle to, say, 4 bytes
  – D is now a set of 4-byte hash values of its k-shingles
  – False positives may occur in matching
• What’s the advantage?
  – Trade-off between the ability to differentiate vs. space
• It is better to hash 10-shingles to, say, 4 bytes than to use 4-shingles!
  – Both cost 4 bytes per shingle, but real text uses only a small fraction of the $2^{32}$ possible 4-character strings, whereas hashed 10-shingles spread over the whole 32-bit range
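A minimal sketch of compressing shingles into 4-byte values. The slides do not name a hash function; CRC-32 from Python’s `zlib` module is used here only as a convenient 32-bit stand-in (an assumption), and any well-mixed 32-bit hash would serve. Two different shingles can map to the same value, which is where the false positives come from.

```python
import zlib

def char_shingles(text, k):
    """Set of character k-shingles, with spaces written as '_'."""
    s = text.replace(" ", "_")
    return {s[i:i + k] for i in range(len(s) - k + 1)}

def hashed_shingles(text, k):
    """Map each k-shingle to a 4-byte (32-bit) value; CRC-32 is an assumed stand-in.

    Distinct shingles may collide on the same value (false positives)."""
    return {zlib.crc32(sh.encode("utf-8")) for sh in char_shingles(text, k)}

hs = hashed_shingles("This course is about big data analytics", 10)
print(len(hs), all(0 <= h < 2**32 for h in hs))
```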

So far …
• Represent a document as a set of k-shingles or their hash values
• Use Jaccard distance to compare two documents
• Can we do better?
  – Parallelism vs. Sampling
• This scheme works, but …
  – What if the set of hash values (or k-shingles) is too large to fit in memory?
  – Or the number of documents is too large?
Idea: find a way to hash a document to a single (small) value, and similar documents to the same value!

Minhash
• Seminal algorithm for near-duplicate detection of webpages
  – Used by AltaVista
  – Documents (HTML pages) represented by shingles (n-grams)
  – Jaccard similarity: dups are pairs with high similarity

MinHash – Key Idea
• Hash the set of document shingles (large in terms of space requirement) into a signature (relatively small)
• Instead of comparing shingles, we compare signatures
  – ESSENTIAL: similarities of signatures and similarities of shingles MUST BE related!!
  – Not every hash function is applicable!
  – Need one that satisfies the following:
    • if $\mathrm{Sim}(D_1, D_2)$ is high, then with high probability $h(D_1) = h(D_2)$
    • if $\mathrm{Sim}(D_1, D_2)$ is low, then with high probability $h(D_1) \ne h(D_2)$
  – It is possible to have false positives and false negatives!
• Minhashing turns out to be one such function for Jaccard similarity

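Preliminaries: Representation & Jaccard Measure
• Sets:
  – A = {e1, e3, e7}
  – B = {e3, e5, e7}
• Can be equivalently expressed as matrices (one row per element, one column per set, with a 1 where the element belongs to the set)
Let:
  – $M_{00}$ = # rows where both elements are 0
  – $M_{11}$ = # rows where both elements are 1
  – $M_{01}$ = # rows where A = 0, B = 1
  – $M_{10}$ = # rows where A = 1, B = 0
• Then $\mathrm{sim}(A, B) = \frac{M_{11}}{M_{01} + M_{10} + M_{11}}$; rows of type $M_{00}$ do not affect the Jaccard measure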
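The row-type counts can be computed directly from the two sets. This sketch assumes the matrix has one row for each of e1 to e7 (the slide’s figure is not recoverable); the choice only changes $M_{00}$, which does not affect the Jaccard measure.

```python
def row_type_counts(a, b, universe):
    """Count rows of the characteristic matrix by their (A, B) values."""
    counts = {"M00": 0, "M01": 0, "M10": 0, "M11": 0}
    for e in universe:
        counts[f"M{int(e in a)}{int(e in b)}"] += 1
    return counts

universe = [f"e{i}" for i in range(1, 8)]  # assumed rows e1..e7
A = {"e1", "e3", "e7"}
B = {"e3", "e5", "e7"}
m = row_type_counts(A, B, universe)
print(m)                                            # {'M00': 3, 'M01': 1, 'M10': 1, 'M11': 2}
print(m["M11"] / (m["M01"] + m["M10"] + m["M11"]))  # 0.5
```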

Computing Minhash
• Start with the matrix representation of the set
• Randomly permute the rows of the matrix
• The minhash (which is the signature) is the first row (in the permuted order) with a “1”
• Example (figure: an input matrix with rows 1 to 7, and the same matrix with its rows permuted): h(A) = 4, h(B) = 3
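A minimal sketch of one minhash under one permutation, reusing the sets A and B from the preliminaries and assuming rows e1 to e7. This version returns the 1-based position in the permuted order; returning the row label instead works equally well, since only equality of minhash values matters. The fixed permutation is hypothetical, chosen so the results match the values quoted in the example.

```python
import random

def minhash(column_set, permuted_rows):
    """Minhash of one set under one permutation of the rows.

    Returns the 1-based position of the first row (in permuted order) whose
    entry in this set's column is 1, or None for an empty set."""
    for position, row in enumerate(permuted_rows, start=1):
        if row in column_set:
            return position
    return None

rows = [f"e{i}" for i in range(1, 8)]
A = {"e1", "e3", "e7"}
B = {"e3", "e5", "e7"}

# Hypothetical fixed permutation, chosen so that h(A) = 4 and h(B) = 3.
perm = ["e2", "e6", "e5", "e3", "e1", "e4", "e7"]
print(minhash(A, perm), minhash(B, perm))  # 4 3

# In practice the permutation is drawn uniformly at random.
random_perm = random.sample(rows, len(rows))
print(minhash(A, random_perm), minhash(B, random_perm))
```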

Minhash and Jaccard
(Figure: the rows of the permuted matrix, each labeled by its type: $M_{00}$, $M_{01}$, $M_{10}$, or $M_{11}$.)
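The row types give the standard argument linking minhash to Jaccard similarity. Scanning the randomly permuted rows from the top, rows of type $M_{00}$ are skipped by both columns. The first row that is not of type $M_{00}$ is equally likely to be any of the $M_{01} + M_{10} + M_{11}$ remaining rows, and $h(A) = h(B)$ exactly when that row is of type $M_{11}$:

```latex
\Pr[h(A) = h(B)]
  = \frac{M_{11}}{M_{01} + M_{10} + M_{11}}
  = \frac{|A \cap B|}{|A \cup B|}
  = \mathrm{sim}(A, B)
```

For A = {e1, e3, e7} and B = {e3, e5, e7} this gives 2 / (1 + 1 + 2) = 1/2.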

MinHash – False positive/negative
• Instead of comparing sets, we now compare only 1 hash value!
• False positive?
  – False positives can easily be dealt with by an additional layer of checking (treat minhash as a filtering mechanism)
• False negative?
• High error rate! Can we do better?

Using multiple minhash signatures
• Comparison between two sets (original matrix) becomes comparison between two columns of minhash values (signature matrix)
• The similarity between the signatures of two columns is given by the fraction of hash functions in which they agree
(Figure: input matrix, permutations, minhash signatures, and the resulting similarities.)
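A sketch of the signature-matrix idea: each random permutation contributes one minhash per set, and the fraction of positions where two signature columns agree estimates their Jaccard similarity. The sets A and B and rows e1 to e7 are taken from the preliminaries (the row universe is an assumption), and 1000 permutations is an arbitrary choice for illustration.

```python
import random

def minhash_signature(s, permutations):
    """Column of the signature matrix for set s: one minhash per permutation.

    Each entry is the index of the first row (in that permuted order) present
    in s; assumes s is non-empty."""
    return [next(i for i, row in enumerate(perm) if row in s) for perm in permutations]

def signature_similarity(sig_a, sig_b):
    """Fraction of hash functions on which the two signature columns agree."""
    return sum(x == y for x, y in zip(sig_a, sig_b)) / len(sig_a)

rng = random.Random(0)  # seeded so the run is repeatable
rows = [f"e{i}" for i in range(1, 8)]
permutations = [rng.sample(rows, len(rows)) for _ in range(1000)]

A = {"e1", "e3", "e7"}
B = {"e3", "e5", "e7"}
estimate = signature_similarity(minhash_signature(A, permutations),
                                minhash_signature(B, permutations))
print(estimate)  # close to the exact Jaccard similarity 2/4 = 0.5
```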

Implementation of MinHash Computation
• Permutations are expensive
  – They incur space and random access (if the data cannot fit into memory)
• Interpret the hash value as the permutation
• Only need to keep track of the minimum hash values

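Implementation of minhash (By example)
• Hash functions: h(x) = x mod 5 + 1 and g(x) = (2x + 1) mod 5 + 1
(Figure: the input matrix, with rows 1 to 5 and two columns.)

| Row x | h(x) | g(x) |
|---|---|---|
| 1 | 2 | 4 |
| 2 | 3 | 1 |
| 3 | 4 | 3 |
| 4 | 5 | 5 |
| 5 | 1 | 2 |

Resulting signatures: Sig1 = (h: 2, g: 3), Sig2 = (h: 1, g: 1)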

Implementation of minhash (By example)
• Initialization: set all signature entries to ∞
• Apply all hash functions on each row
  – If the column value (of the source matrix) is 1, keep the minimum value
  – Otherwise, do nothing

Signature values after processing each row (h(x) = x mod 5 + 1, g(x) = (2x + 1) mod 5 + 1):

| Row x | h(x) | g(x) | Sig1 (h, g) | Sig2 (h, g) |
|---|---|---|---|---|
| 1 | 2 | 4 | 2, 4 | ∞, ∞ |
| 2 | 3 | 1 | 2, 4 | 3, 1 |
| 3 | 4 | 3 | 2, 3 | 3, 1 |
| 4 | 5 | 5 | 2, 3 | 3, 1 |
| 5 | 1 | 2 | 2, 3 | 1, 1 |
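The row-scan procedure can be sketched as follows, using the slide’s h and g. The slide’s 0/1 input matrix is not fully recoverable from the trace, so the matrix below is a hypothetical input chosen to agree with it (column 1 has 1s in rows 1 and 3, column 2 in rows 2 and 5); it reproduces the final signatures Sig1 = (2, 3) and Sig2 = (1, 1).

```python
import math

def h(x):
    return x % 5 + 1

def g(x):
    return (2 * x + 1) % 5 + 1

def minhash_signatures(matrix, hash_funcs):
    """Row-scan minhash: sig[i][c] = min of hash_funcs[i](row) over rows where column c is 1."""
    n_cols = len(next(iter(matrix.values())))
    sig = [[math.inf] * n_cols for _ in hash_funcs]  # initialization: every entry is infinity
    for row, values in matrix.items():
        hashes = [f(row) for f in hash_funcs]  # apply all hash functions to this row
        for c, value in enumerate(values):
            if value == 1:  # column has a 1 in this row: keep the minimum
                for i, hv in enumerate(hashes):
                    sig[i][c] = min(sig[i][c], hv)
            # otherwise, do nothing
    return sig

# Hypothetical 0/1 input: rows 1-5, columns (Sig1, Sig2), consistent with the trace.
matrix = {1: (1, 0), 2: (0, 1), 3: (1, 0), 4: (0, 0), 5: (0, 1)}
print(minhash_signatures(matrix, [h, g]))  # [[2, 1], [3, 1]]: row for h, then row for g
```

Only one pass over the rows is needed, and the permutation is never materialized: each hash function plays the role of one permutation.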

So far …
• Represent a document as a set of hash values (of its k-shingles)
• Transform the set of k-shingles into a set of minhash signatures
• Estimate the Jaccard similarity of two documents by comparing their signatures
• Is this method (i.e., transforming sets into signatures) necessarily “better”??

The BIG Picture (All-pair comparison)
(Figure: a document such as “This course is about big data analytics” → Shingling → a set of strings of length k: “This course is”, “course is about”, “is about big”, “about big data”, “big data analytics” → Minhashing → a signature for the set of strings (can capture similarity) → Locality Sensitive Hashing → signatures falling into the same bucket are “similar”. Another document follows the same pipeline.)

Find all near-duplicates among N documents
• Naïve solution
  – For each document, compare with the other N − 1 documents
    • Takes N − 1 comparisons per document
    • Can optimize using filter-and-refine mechanisms
  – Requires N(N − 1)/2 comparisons in total
  – For large N, still takes ages …
• E.g., $N = 10^7$ gives $\approx 5 \times 10^{13}$ comparisons; if each comparison takes 1 μs, we need $\approx 5 \times 10^7$ s (~1.6 years!)
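The back-of-the-envelope estimate can be reproduced directly. Counting only distinct pairs, N(N − 1)/2 with N = 10^7 is about 5 × 10^13; rounding that up to 10^14 gives the 10^8 s (about 3 years) order of magnitude. The 1 μs per comparison is the slide’s assumption.

```python
N = 10**7
pairs = N * (N - 1) // 2            # number of distinct document pairs
seconds = pairs * 1e-6              # at 1 microsecond per comparison
years = seconds / (365 * 24 * 3600)
print(f"{pairs:.2e} pairs, {seconds:.2e} s, {years:.2f} years")
# 5.00e+13 pairs, 5.00e+07 s, 1.59 years
```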

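Locality Sensitive Hashing (LSH)
• Suppose we have N documents
• For each document, we can derive, say, k minhash signatures

| | D1 | D2 | D3 | D4 | … | DN |
|---|---|---|---|---|---|---|
| minhash 1 | 3 | 3 | 2 | 2 | … | 3 |
| minhash 2 | 7 | 7 | 5 | 5 | … | 7 |
| … | | | | | | |
| minhash k−1 | 2 | 2 | 2 | 2 | … | 2 |
| minhash k | 1 | 1 | 1 | 1 | … | 2 |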

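Idea of hashing

| | D1 | D2 | D3 | D4 | … | DN |
|---|---|---|---|---|---|---|
| minhash 1 | 3 | 3 | 2 | 2 | … | 3 |
| minhash 2 | 7 | 7 | 5 | 5 | … | 7 |
| … | | | | | | |
| minhash k−1 | 2 | 2 | 2 | 2 | … | 2 |
| minhash k | 1 | 1 | 1 | 1 | … | 1 |

(Figure: a hash function F(column) maps each column into one of several buckets.)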
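A minimal sketch of the bucketing idea, assuming F simply hashes the entire signature column (here a Python dict keyed by the tuple of minhash values stands in for F and its buckets). Only the rows shown on the slide (minhash 1, 2, k−1, and k) are used; the elided rows are omitted. Documents landing in the same bucket become candidate near-duplicates.

```python
from collections import defaultdict

def bucket_columns(signatures):
    """Hash each document's whole signature column into a bucket.

    A dict keyed by the tuple of minhash values plays the role of F(column)."""
    buckets = defaultdict(list)
    for doc, column in signatures.items():
        buckets[tuple(column)].append(doc)
    return list(buckets.values())

# Only the rows shown on the slide (minhash 1, 2, k-1, k); the elided rows are omitted.
signatures = {
    "D1": [3, 7, 2, 1],
    "D2": [3, 7, 2, 1],
    "D3": [2, 5, 2, 1],
    "D4": [2, 5, 2, 1],
    "DN": [3, 7, 2, 1],
}
print(bucket_columns(signatures))  # [['D1', 'D2', 'DN'], ['D3', 'D4']]
```

Each document is hashed once, so finding candidates costs O(N) hash operations rather than N(N − 1)/2 pairwise comparisons.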
