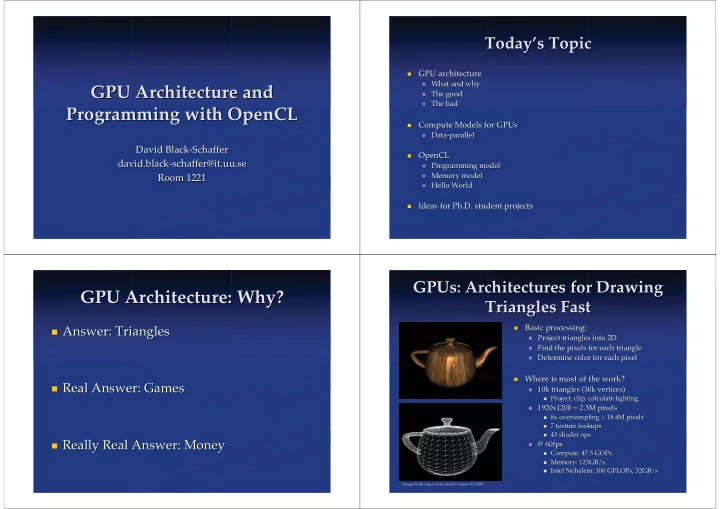

Today’ ’s Topic s Topic Today GPU architecture GPU architecture � � � What and why What and why � GPU Architecture and chitecture and GPU Ar � The good The good � � The bad The bad � Programming with OpenCL OpenCL Programming with Compute Models for GPUs Compute Models for GPUs � � � Data-parallel Data-parallel � David Black-Schaffer David Black-Schaffer OpenCL OpenCL � � david. david .black-schaffer@it black-schaffer@it. .uu uu.se .se � Programming model Programming model � � Memory Memory model model Room 1221 Room 1221 � � Hello World Hello World � Ideas for Ph.D. student projects Ideas for Ph.D. student projects � � GPUs: Architectures for Drawing GPUs : Architectures for Drawing GPU Architecture: Why? GPU Architecture: Why? Triangles Fast Triangles Fast Basic processing: Basic processing: � Answer: Triangles Answer: Triangles � � � � Project triangles into 2D Project triangles into 2D � � Find the pixels for each triangle Find the pixels for each triangle � � Determine color for each pixel Determine color for each pixel � Where is most of the work? Where is most of the work? � � � Real Answer: Games Real Answer: Games � � 10k triangles (30k vertices) 10k triangles (30k vertices) � � Project, clip, calculate lighting Project, clip, calculate lighting � � 1920x1200 = 2.3M pixels 1920x1200 = 2.3M pixels � � 8x 8x oversampling oversampling = 18.4M pixels = 18.4M pixels � � 7 texture lookups 7 texture lookups � � 43 43 shader shader ops ops � � Really Real Answer: Money Really Real Answer: Money � @ 60fps @ 60fps � � � Compute: 47.5 Compute: 47.5 GOPs GOPs � � Memory: 123GB/s Memory: 123GB/s � � Intel Nehalem: 106 Intel Nehalem: 106 GFLOPs GFLOPs, , 32GB/s 32GB/s � Images from caig.cs.nctu.edu.tw/course/CG2007

Example Shader Shader: Water : Water Example GPGPU: General Purpose GPUs GPUs GPGPU: General Purpose � Question: Question: Can we use Can we use GPUs GPUs for non-graphics tasks? for non-graphics tasks? � � Answer: Answer: Yes! Yes! � � They They’ ’re incredibly fast and awesome re incredibly fast and awesome � � Answer: Answer: Maybe Maybe � � They They’ ’re fast, but hard to program re fast, but hard to program � � Answer: Answer: Not really Not really � � My algorithm runs slower on the GPU than on the CPU My algorithm runs slower on the GPU than on the CPU � Vectors Vectors � � � Answer: Answer: No No Texture lookups Texture lookups � � � Complex math Complex math � � � I need more precision/memory/synchronization/other I need more precision/memory/synchronization/other � Function calls calls Function � � Control flow Control flow � � No loops No loops � � From http://www2.ati.com/developer/gdc/D3DTutorial10_Half-Life2_Shading.pdf Why Should You Care? GPU Design Why Should You Care? GPU Design Intel Nehalem 4-core Nehalem 4-core AMD Radeon Radeon 5870 5870 Intel AMD 1) Process pixels in parallel 1) Process pixels in parallel � Data-parallel: Data-parallel: � � 2.3M 2.3M pixels per frame pixels per frame � => lots of work => lots of work � All pixels are independent All pixels are independent � => no synchronization => no synchronization � Lots of spatial locality Lots of spatial locality � => regular memory access => regular memory access 130W, 263mm 2 188W, 334mm 2 � Great speedups Great speedups � 32 GB/s BW, 106 GFLOPs (SP) 154 GB/s BW, 2720 GFLOPs (SP) � Limited only by the Limited only by the amount of hardware amount of hardware Big caches (8MB) Small caches (<1MB) � Out-of-order Hardware thread scheduling 0.8 GFLOPs/W 14.5 GFLOPs/W

CPU vs vs. GPU . GPU Philosophy: Philosophy: CPU GPU Design GPU Design Performance Performance LM LM I$ I$ LM LM I$ I$ 2) Focus on throughput, not latency 2) Focus on throughput, not latency L2 L2 L2 L2 � Each pixel can take a long time Each pixel can take a long time… … � LM LM I$ I$ LM LM I$ I$ … …as long as as long as we process many at the same time. we process many at the same time. BP BP L1 L1 BP BP L1 L1 � Great scalability Great scalability LM I$ LM I$ LM I$ LM I$ � L2 L2 L2 L2 � Lots of simple parallel processors Lots of simple parallel processors � � Low clock speed Low clock speed � LM LM I$ I$ LM LM I$ I$ BP BP L1 L1 BP BP L1 L1 Latency-optimized (fast, serial) Throughput-optimized (slow, parallel) 4 Massive CPU Cores: Big caches, Big caches, branch branch 8*8 Wimpy GPU Cores: No caches 8*8 Wimpy GPU Cores: No caches, in- , in- 4 Massive CPU Cores: order, single-issue, single-precision… … predictors, o predictors, out-of-order, multiple-issue, ut-of-order, multiple-issue, order, single-issue, single-precision speculative execution, double-precision speculative execution, double-precision… … About 2 IPC per core, 8 IPC total @3GHz About 2 IPC per core, 8 IPC total @3GHz About 1 IPC per core, About 1 IPC per core, 64 IPC total @1.5GHz 64 IPC total @1.5GHz Example GPUs GPUs CPU Memory Philosophy Example CPU Memory Philosophy Instructions� Instructions� Fixed-function Logic g= f f+1 +1 g= f= f =ld ld( (e e) ) Fixed-function Logic d= d= d+1 d+1 Lots of Small Parallel Processors e= =ld ld( (d d) ) e Lots of Small Parallel Processors Limited Interconnect c= c= b b+a +a Limited Interconnect Limited Memory b= = a+1 a+1 Limited Memory b Lots of Memory Controllers Very Small Caches Lots of Memory Controllers Very Small Caches Nvidia G80 AMD 5870

CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy Instructions� Instructions� Instructions� Instructions� g= f f+1 +1 g= f f+1 +1 g= g= f= f =ld ld( (e e) ) f f= =ld ld( (e e) ) d= d+1 d= d+1 d= d+1 d= d+1 e e= =ld ld( (d d) ) c= b c= b+a +a c= c= b b+a +a b= = a+1 a+1 b + + ld/st ld/st + + ld/st ld/st b= b = a+1 a+1 e= e =ld ld( (d d) ) Cycle 0 Cycle 0 Cycle 0 Cycle 0 CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy Instructions� Instructions� Instructions� Instructions� g= g= f f+1 +1 g= g= f f+1 +1 f= =ld ld( (e e) ) f= =ld ld( (e e) ) f f d= d= d+1 d+1 d= d+1 d= d+1 c= b b+a +a c= b b+a +a c= c= L1 L1 Memory access will take ~100 cycles… Memory access will take ~100 cycles … Cache Cache + ld/st + ld/st + ld/st + ld/st b= b = a+1 a+1 e e= =ld ld( (d d) ) b= b = a+1 a+1 e e= =ld ld( (d d) ) Hit! Hit! Cycle 0 Cycle 0 Cycle 0 Cycle 0

CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy Instructions� Instructions� Instructions� Instructions� g= g= f f+1 +1 f f= =ld ld( (e e) ) g= g= f f+1 +1 d= d= d+1 d+1 f= f =ld ld( (e e) ) d= d+1 d+1 d= L1 L1 L1 L1 Cache Cache Cache Cache + + ld/st ld/st + + ld/st ld/st c= b c= b+a +a e e= =ld ld( (d d) ) c= c= b b+a +a e e= =ld ld( (d d) ) b b= = a+1 a+1 b b= = a+1 a+1 Cycle 1 Cycle 1 Cycle 1 Cycle 1 CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy Instructions� Instructions� Instructions� Instructions� g= f f+1 +1 g= f f+1 +1 g= g= f f= =ld ld( (e e) ) d= d+1 d+1 d= L1 L1 L1 L1 Cache Cache Cache Cache + ld/st + ld/st + ld/st + ld/st d= d+1 d= d+1 f f= =ld ld( (e e) ) e= e =ld ld( (d d) ) e e= =ld ld( (d d) ) c= b b+a +a c= b b+a +a c= c= b b= = a+1 a+1 b b= = a+1 a+1 Cycle 1 Cycle 1 Cycle 2 Cycle 2

CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy Instructions� Instructions� Instructions� Instructions� L2 L2 Cache Cache g= g= f f+1 +1 g= f g= f+1 +1 L1 L1 L1 L1 Cache Cache Cache Cache + + ld/st ld/st + + ld/st ld/st f= f =ld ld( (e e) ) f f= =ld ld( (e e) ) Miss! Miss! d= d= d+1 d+1 d= d= d+1 d+1 Hit! e= e =ld ld( (d d) ) e e= =ld ld( (d d) ) Now we stall the processor for Now we stall the processor for c= c= b b+a +a c= c= b b+a +a b= = a+1 a+1 b= = a+1 a+1 20 cycles waiting on the L2… 20 cycles waiting on the L2 … b b Cycle 3 Cycle 3 Cycle 3 Cycle 3 CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy CPU Memory Philosophy Instructions� Instructions� Instructions� Instructions� L2 L2 L2 L2 Cache Cache Cache Cache g= f f+1 +1 g= f f+1 +1 g= g= L1 L1 L1 L1 Cache Cache Cache Cache + ld/st + ld/st + ld/st + ld/st f= f =ld ld( (e e) ) f= f =ld ld( (e e) ) d= d= d+1 d+1 d= d+1 d= d+1 e= =ld ld( (d d) ) e= =ld ld( (d d) ) e e c= b c= b+a +a c= b c= b+a +a b= = a+1 a+1 b= = a+1 a+1 b b Cycle 23 Cycle 23 Cycle 24 Cycle 24

Recommend

More recommend