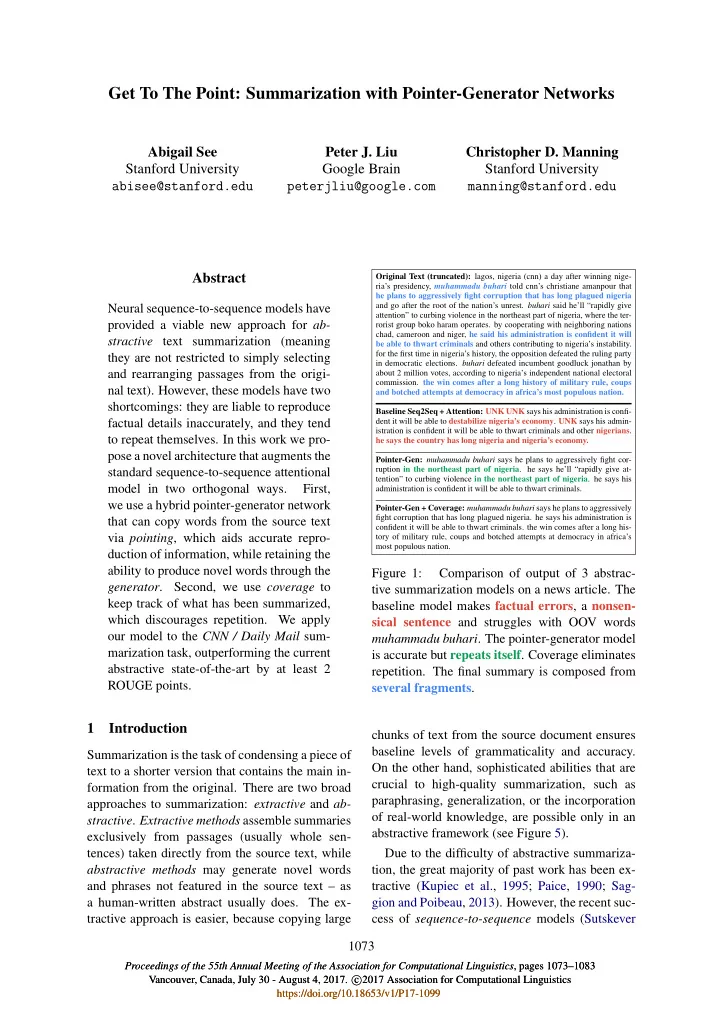

Get To The Point: Summarization with Pointer-Generator Networks Abigail See Peter J. Liu Christopher D. Manning Stanford University Google Brain Stanford University abisee@stanford.edu peterjliu@google.com manning@stanford.edu Abstract Original Text (truncated): lagos, nigeria (cnn) a day after winning nige- ria’s presidency, muhammadu buhari told cnn’s christiane amanpour that he plans to aggressively fight corruption that has long plagued nigeria and go after the root of the nation’s unrest. buhari said he’ll “rapidly give Neural sequence-to-sequence models have attention” to curbing violence in the northeast part of nigeria, where the ter- provided a viable new approach for ab- rorist group boko haram operates. by cooperating with neighboring nations chad, cameroon and niger, he said his administration is confident it will stractive text summarization (meaning be able to thwart criminals and others contributing to nigeria’s instability. for the first time in nigeria’s history, the opposition defeated the ruling party they are not restricted to simply selecting in democratic elections. buhari defeated incumbent goodluck jonathan by and rearranging passages from the origi- about 2 million votes, according to nigeria’s independent national electoral commission. the win comes after a long history of military rule, coups nal text). However, these models have two and botched attempts at democracy in africa’s most populous nation. shortcomings: they are liable to reproduce Baseline Seq2Seq + Attention: UNK UNK says his administration is confi- factual details inaccurately, and they tend dent it will be able to destabilize nigeria’s economy . UNK says his admin- istration is confident it will be able to thwart criminals and other nigerians . to repeat themselves. In this work we pro- he says the country has long nigeria and nigeria’s economy. pose a novel architecture that augments the Pointer-Gen: muhammadu buhari says he plans to aggressively fight cor- ruption in the northeast part of nigeria . he says he’ll “rapidly give at- standard sequence-to-sequence attentional tention” to curbing violence in the northeast part of nigeria . he says his model in two orthogonal ways. First, administration is confident it will be able to thwart criminals. we use a hybrid pointer-generator network Pointer-Gen + Coverage: muhammadu buhari says he plans to aggressively fight corruption that has long plagued nigeria. he says his administration is that can copy words from the source text confident it will be able to thwart criminals. the win comes after a long his- via pointing , which aids accurate repro- tory of military rule, coups and botched attempts at democracy in africa’s most populous nation. duction of information, while retaining the ability to produce novel words through the Figure 1: Comparison of output of 3 abstrac- generator . Second, we use coverage to tive summarization models on a news article. The keep track of what has been summarized, baseline model makes factual errors , a nonsen- which discourages repetition. We apply sical sentence and struggles with OOV words our model to the CNN / Daily Mail sum- muhammadu buhari . The pointer-generator model marization task, outperforming the current is accurate but repeats itself . Coverage eliminates abstractive state-of-the-art by at least 2 repetition. The final summary is composed from ROUGE points. several fragments . 1 Introduction chunks of text from the source document ensures baseline levels of grammaticality and accuracy. Summarization is the task of condensing a piece of On the other hand, sophisticated abilities that are text to a shorter version that contains the main in- crucial to high-quality summarization, such as formation from the original. There are two broad paraphrasing, generalization, or the incorporation approaches to summarization: extractive and ab- of real-world knowledge, are possible only in an stractive . Extractive methods assemble summaries abstractive framework (see Figure 5). exclusively from passages (usually whole sen- tences) taken directly from the source text, while Due to the difficulty of abstractive summariza- abstractive methods may generate novel words tion, the great majority of past work has been ex- and phrases not featured in the source text – as tractive (Kupiec et al., 1995; Paice, 1990; Sag- a human-written abstract usually does. The ex- gion and Poibeau, 2013). However, the recent suc- tractive approach is easier, because copying large cess of sequence-to-sequence models (Sutskever 1073 Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics , pages 1073–1083 Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics , pages 1073–1083 Vancouver, Canada, July 30 - August 4, 2017. c Vancouver, Canada, July 30 - August 4, 2017. c � 2017 Association for Computational Linguistics � 2017 Association for Computational Linguistics https://doi.org/10.18653/v1/P17-1099 https://doi.org/10.18653/v1/P17-1099

Context Vector "beat" Distribution Vocabulary Distribution Attention a zoo Hidden States Encoder Decoder Hidden States ... Germany emerge victorious in 2-0 win against Argentina on Saturday ... <START> Germany Source Text Partial Summary Figure 2: Baseline sequence-to-sequence model with attention. The model may attend to relevant words in the source text to generate novel words, e.g., to produce the novel word beat in the abstractive summary Germany beat Argentina 2-0 the model may attend to the words victorious and win in the source text. et al., 2014), in which recurrent neural networks that were applied to short-text summarization. We (RNNs) both read and freely generate text, has propose a novel variant of the coverage vector (Tu made abstractive summarization viable (Chopra et al., 2016) from Neural Machine Translation, et al., 2016; Nallapati et al., 2016; Rush et al., which we use to track and control coverage of the 2015; Zeng et al., 2016). Though these systems source document. We show that coverage is re- are promising, they exhibit undesirable behavior markably effective for eliminating repetition. such as inaccurately reproducing factual details, 2 Our Models an inability to deal with out-of-vocabulary (OOV) words, and repeating themselves (see Figure 1). In this section we describe (1) our baseline In this paper we present an architecture that sequence-to-sequence model, (2) our pointer- addresses these three issues in the context of generator model, and (3) our coverage mechanism multi-sentence summaries. While most recent ab- that can be added to either of the first two models. The code for our models is available online. 1 stractive work has focused on headline genera- tion tasks (reducing one or two sentences to a 2.1 Sequence-to-sequence attentional model single headline), we believe that longer-text sum- marization is both more challenging (requiring Our baseline model is similar to that of Nallapati higher levels of abstraction while avoiding repe- et al. (2016), and is depicted in Figure 2. The to- tition) and ultimately more useful. Therefore we kens of the article w i are fed one-by-one into the apply our model to the recently-introduced CNN/ encoder (a single-layer bidirectional LSTM), pro- Daily Mail dataset (Hermann et al., 2015; Nallap- ducing a sequence of encoder hidden states h i . On ati et al., 2016), which contains news articles (39 each step t , the decoder (a single-layer unidirec- sentences on average) paired with multi-sentence tional LSTM) receives the word embedding of the summaries, and show that we outperform the state- previous word (while training, this is the previous of-the-art abstractive system by at least 2 ROUGE word of the reference summary; at test time it is points. the previous word emitted by the decoder), and has decoder state s t . The attention distribution a t Our hybrid pointer-generator network facili- is calculated as in Bahdanau et al. (2015): tates copying words from the source text via point- ing (Vinyals et al., 2015), which improves accu- i = v T tanh ( W h h i + W s s t + b attn ) e t (1) racy and handling of OOV words, while retaining a t = softmax ( e t ) (2) the ability to generate new words. The network, which can be viewed as a balance between extrac- where v , W h , W s and b attn are learnable parame- tive and abstractive approaches, is similar to Gu ters. The attention distribution can be viewed as et al.’s (2016) CopyNet and Miao and Blunsom’s 1 www.github.com/abisee/pointer-generator (2016) Forced-Attention Sentence Compression, 1074

Recommend

More recommend