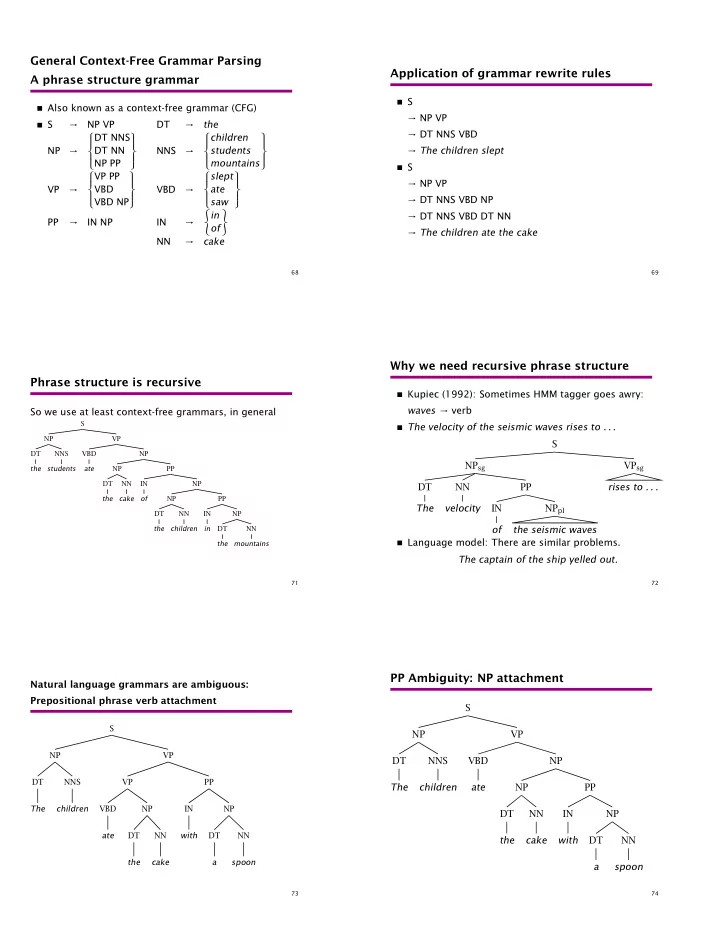

General Context-Free Grammar Parsing

A phrase structure grammar

- Also known as a context-free grammar (CFG)
- Phrasal rules: S → NP VP, NP → DT NNS, NP → DT NN, NP → NP PP, VP → VP PP, VP → VBD, VP → VBD NP, PP → IN NP
- Lexical rules: DT → the, NNS → children, NNS → students, NNS → mountains, VBD → slept, VBD → ate, VBD → saw, IN → in, IN → of, NN → cake

Application of grammar rewrite rules

- S → NP VP → DT NNS VBD → The children slept
- S → NP VP → DT NNS VBD NP → DT NNS VBD DT NN → The children ate the cake

Why we need recursive phrase structure

- Kupiec (1992): Sometimes HMM tagger goes awry: waves → verb
- The velocity of the seismic waves rises to . . .
  - Tree: `[S [NP_sg [DT The] [NN velocity] [PP [IN of] [NP_pl the seismic waves]]] [VP_sg rises to . . .]]`
  - The singular verb *rises* agrees with the singular head *velocity*, not with the adjacent plural *waves*.
- Language model: There are similar problems.
  - The captain of the ship yelled out.

Phrase structure is recursive

- So we use at least context-free grammars, in general
- Tree: `[S [NP the students] [VP [VBD ate] [NP [NP the cake] [PP of [NP [NP the children] [PP in [NP the mountains]]]]]]]`

Natural language grammars are ambiguous

- PP ambiguity, NP attachment: `[S [NP The children] [VP [VBD ate] [NP [NP the cake] [PP with [NP a spoon]]]]]`
- Prepositional phrase verb attachment: `[S [NP The children] [VP [VP [VBD ate] [NP the cake]] [PP with [NP a spoon]]]]`
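To make the rewrite-rule view concrete, here is a minimal Python sketch (our own illustration; `GRAMMAR`, `rewrite_leftmost` and `derive` are hypothetical names) that stores the toy grammar as a dictionary and replays the second derivation one rule at a time, always rewriting the leftmost nonterminal. Words are lowercased, so the derived sentence is "the children ate the cake".

```python
# Toy grammar: nonterminal -> list of right-hand sides (tuples).
# Any symbol that is not a key of GRAMMAR is treated as a terminal (a word).
GRAMMAR = {
    "S":   [("NP", "VP")],
    "NP":  [("DT", "NNS"), ("DT", "NN"), ("NP", "PP")],
    "VP":  [("VP", "PP"), ("VBD",), ("VBD", "NP")],
    "PP":  [("IN", "NP")],
    "DT":  [("the",)],
    "NNS": [("children",), ("students",), ("mountains",)],
    "VBD": [("slept",), ("ate",), ("saw",)],
    "IN":  [("in",), ("of",)],
    "NN":  [("cake",)],
}


def rewrite_leftmost(form, lhs, rhs):
    """Apply the rule lhs -> rhs to the leftmost nonterminal of `form`."""
    if rhs not in GRAMMAR.get(lhs, []):
        raise ValueError(f"no rule {lhs} -> {' '.join(rhs)}")
    for k, sym in enumerate(form):
        if sym in GRAMMAR:  # first nonterminal from the left
            if sym != lhs:
                raise ValueError(f"leftmost nonterminal is {sym}, not {lhs}")
            return form[:k] + rhs + form[k + 1:]
    raise ValueError("form contains only terminals")


def derive(rules, start="S"):
    """Return every sentential form produced by applying `rules` in order."""
    forms = [(start,)]
    for lhs, rhs in rules:
        forms.append(rewrite_leftmost(forms[-1], lhs, tuple(rhs)))
    return forms


rules = [
    ("S", ("NP", "VP")), ("NP", ("DT", "NNS")), ("DT", ("the",)),
    ("NNS", ("children",)), ("VP", ("VBD", "NP")), ("VBD", ("ate",)),
    ("NP", ("DT", "NN")), ("DT", ("the",)), ("NN", ("cake",)),
]
for form in derive(rules):
    print(" ".join(form))
```

Each printed line is one sentential form; the slide's arrows compress several of these single-rule steps into one.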

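The two attachment trees make the ambiguity point precisely: one word string, two structures. A small Python sketch (trees encoded as nested `(label, child, ...)` tuples; `leaves` and `brackets` are hypothetical helper names) checks that both readings have the same yield while being different trees.

```python
# The two PP-attachment readings as (label, child, ...) tuples; leaves are strings.
NP_ATTACH = ("S",
             ("NP", ("DT", "the"), ("NNS", "children")),
             ("VP", ("VBD", "ate"),
                    ("NP", ("NP", ("DT", "the"), ("NN", "cake")),
                           ("PP", ("IN", "with"), ("NP", ("DT", "a"), ("NN", "spoon"))))))

VERB_ATTACH = ("S",
               ("NP", ("DT", "the"), ("NNS", "children")),
               ("VP", ("VP", ("VBD", "ate"), ("NP", ("DT", "the"), ("NN", "cake"))),
                      ("PP", ("IN", "with"), ("NP", ("DT", "a"), ("NN", "spoon")))))


def leaves(tree):
    """Return the words at the fringe of a tree, left to right."""
    if isinstance(tree, str):
        return [tree]
    words = []
    for child in tree[1:]:
        words.extend(leaves(child))
    return words


def brackets(tree):
    """Render a tree in labelled-bracket notation."""
    if isinstance(tree, str):
        return tree
    return "[" + tree[0] + " " + " ".join(brackets(c) for c in tree[1:]) + "]"


print(" ".join(leaves(NP_ATTACH)))
print(brackets(NP_ATTACH))
print(brackets(VERB_ATTACH))
```

A parser that returns "the set of parse trees" for this sentence must return both of these.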
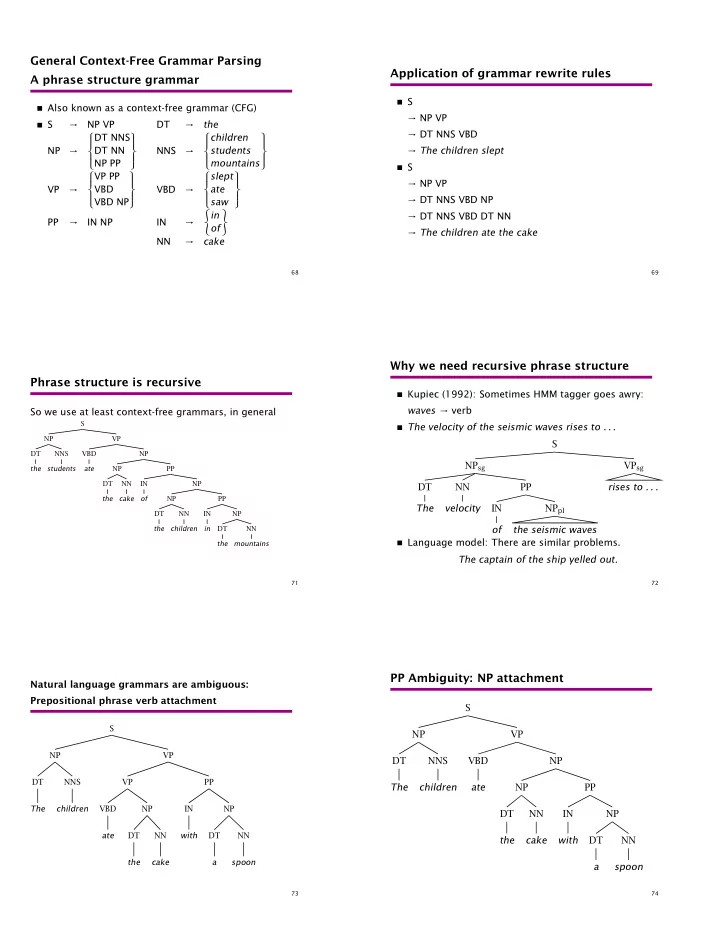

Attachment ambiguities in a real sentence

- The board approved [its acquisition] [by Royal Trustco Ltd.] [of Toronto] [for $27 a share] [at its monthly meeting].

Ambiguity

- Programming language parsers resolve local ambiguities with lookahead
- Natural languages have global ambiguities:
  - I saw that gasoline can explode
  - Size of embedded NP?

What is parsing?

- We want to run the grammar backwards to find the structures
- Parsing can be viewed as a search problem
- Parsing is a hidden data problem
- We search through the legal rewritings of the grammar
- We want to find all structures for a string of words (for the moment)
- We can do this bottom-up or top-down
  - This distinction is independent of depth-first/breadth-first etc.: we can do either both ways
- Doing this we build a search tree which is different from the parse tree

State space search

- States:
- Operators:
- Start state:
- Goal test:
- Algorithm:
  - put the start state on `stack`
  - `solutions = {}`
  - `loop`
    - if `stack` is empty, return `solutions`
    - `state = remove-front(stack)`
    - if `goal(state)`, `push(state, solutions)`
    - `stack = push(expand(state, operators), stack)`
  - `end`

Another phrase structure grammar

- S → NP VP, VP → V NP, VP → V NP PP, NP → NP PP, NP → N, NP → e, NP → N N, PP → P NP
- N → cats, N → claws, N → people, N → scratch, V → scratch, P → with

cats scratch people with claws

- S
- NP VP
- NP PP VP (3 choices)
- NP PP PP VP (oops!)
- N VP
- cats VP
- cats V NP (2 choices)
- cats scratch NP
- cats scratch N (3 choices, showing 2nd)
- cats scratch people (oops!)
- cats scratch NP PP
- cats scratch N PP (3 choices, showing 2nd)
- . . .
- cats scratch people with claws
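The stack-based loop can be turned into running code. Below is a minimal Python sketch under our own assumptions, not the course's implementation: a state is a triple (remaining goals, input position, rules used so far), `expand` rewrites the leftmost goal as in the "cats scratch people with claws" trace, and `search`, `top_down_parses` and `min_yield` are hypothetical names. Because NP → NP PP is left-recursive (the "oops!" branch), plain depth-first search would never finish, so the sketch adds a pruning step of our own: it drops any state whose goals need more words than remain, using a precomputed minimum yield per nonterminal.

```python
from collections import deque

# The "cats scratch people with claws" grammar; () is the empty rule NP -> e.
GRAMMAR = {
    "S":  [("NP", "VP")],
    "VP": [("V", "NP"), ("V", "NP", "PP")],
    "NP": [("NP", "PP"), ("N",), (), ("N", "N")],
    "PP": [("P", "NP")],
    "N":  [("cats",), ("claws",), ("people",), ("scratch",)],
    "V":  [("scratch",)],
    "P":  [("with",)],
}


def min_yield(grammar):
    """Fewest words each nonterminal can derive (a terminal counts as 1)."""
    best = {a: float("inf") for a in grammar}
    changed = True
    while changed:
        changed = False
        for lhs, rhss in grammar.items():
            for rhs in rhss:
                n = sum(best.get(sym, 1) for sym in rhs)
                if n < best[lhs]:
                    best[lhs], changed = n, True
    return best


def search(start, expand, is_goal, depth_first=True):
    """The slide's loop: remove-front, record goals, add expanded states."""
    agenda, solutions = deque([start]), []
    while agenda:
        state = agenda.popleft()  # remove-front
        if is_goal(state):
            solutions.append(state)
        successors = expand(state)
        if depth_first:  # stack: successors go on the front, in rule order
            agenda.extendleft(reversed(successors))
        else:  # queue: breadth-first
            agenda.extend(successors)
    return solutions


def top_down_parses(words, grammar=GRAMMAR, start="S", depth_first=True):
    """Return the rule sequence of every leftmost derivation of `words`."""
    need = min_yield(grammar)

    def expand(state):
        goals, i, used = state
        if not goals:
            return []
        first, rest = goals[0], goals[1:]
        if first not in grammar:  # terminal: must match the next word
            if i < len(words) and words[i] == first:
                return [(rest, i + 1, used)]
            return []
        successors = []
        for rhs in grammar[first]:
            new_goals = rhs + rest
            # Prune: these goals cannot fit in the words that remain.
            if sum(need.get(s, 1) for s in new_goals) <= len(words) - i:
                successors.append((new_goals, i, used + ((first, rhs),)))
        return successors

    def is_goal(state):
        goals, i, _ = state
        return not goals and i == len(words)

    found = search(((start,), 0, ()), expand, is_goal, depth_first)
    return [used for _, _, used in found]


parses = top_down_parses("cats scratch people with claws".split())
print(len(parses))
```

The search finds the two readings: *with claws* modifying *people* (NP → NP PP) or attached by VP → V NP PP. Passing `depth_first=False` changes only the order in which states are removed, which matches the slide's point that search order and parsing direction are independent choices.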

Phrase Structure (CF) Grammars

- G = ⟨T, N, S, R⟩
- T is the set of terminals
- N is the set of nonterminals
  - For NLP, we usually distinguish a set P ⊂ N of preterminals, which always rewrite as terminals
- S is the start symbol (one of the nonterminals)
- R is the set of rules/productions of the form X → γ, where X is a nonterminal and γ is a sequence of terminals and nonterminals (may be empty)
- A grammar G generates a language L

Recognizers and parsers

- A recognizer is a program which, for a given grammar and a given sentence, returns yes if the sentence is accepted by the grammar (i.e., the sentence is in the language) and no otherwise
- A parser, in addition to doing the work of a recognizer, also returns the set of parse trees for the string

Top-down parsing

- Top-down parsing is goal directed
- A top-down parser starts with a list of constituents to be built. The top-down parser rewrites the goals in the goal list by matching one against the LHS of the grammar rules, and expanding it with the RHS, attempting to match the sentence to be derived.
- If a goal can be rewritten in several ways, then there is a choice of which rule to apply (search problem)
- Can use depth-first or breadth-first search, and goal ordering.

Soundness and completeness

- A parser is sound if every parse it returns is valid/correct
- A parser terminates if it is guaranteed not to go off into an infinite loop
- A parser is complete if for any given grammar and sentence it is sound, produces every valid parse for that sentence, and terminates
- (For many purposes, we settle for sound but incomplete parsers: e.g., probabilistic parsers that return a k-best list)

Bottom-up parsing

- Bottom-up parsing is data directed
- The initial goal list of a bottom-up parser is the string to be parsed. If a sequence in the goal list matches the RHS of a rule, then this sequence may be replaced by the LHS of the rule.
- Parsing is finished when the goal list contains just the start category.
- If the RHS of several rules match the goal list, then there is a choice of which rule to apply (search problem)
- Can use depth-first or breadth-first search, and goal ordering.
- The standard presentation is as shift-reduce parsing.

Problems with top-down parsing

- Left recursive rules
- A top-down parser will do badly if there are many different rules for the same LHS. Consider if there are 600 rules for S, 599 of which start with NP, but one of which starts with V, and the sentence starts with V.
- Useless work: expands things that are possible top-down but not there
- Top-down parsers do well if there is useful grammar-driven control: search is directed by the grammar
- Top-down is hopeless for rewriting parts of speech (preterminals) with words (terminals). In practice that is always done bottom-up as lexical lookup.
- Repeated work: anywhere there is common substructure
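Shift-reduce parsing can be sketched as the same kind of exhaustive search. In this minimal Python sketch (the function name `shift_reduce_parses` and the action strings are our own), a state is (stack, next word index, actions so far); each step either reduces a suffix of the stack that matches some rule's RHS to that rule's LHS, or shifts the next word. Every choice point is explored depth-first, so all parses are returned. It uses the first toy grammar (children, students, cake), which has no empty rules; with an empty rule the reduce step could fire forever, which is the termination problem listed for bottom-up parsing.

```python
# First toy grammar as (lhs, rhs) pairs; it has no empty right-hand sides.
RULES = [
    ("S", ("NP", "VP")),
    ("NP", ("DT", "NNS")), ("NP", ("DT", "NN")), ("NP", ("NP", "PP")),
    ("VP", ("VP", "PP")), ("VP", ("VBD",)), ("VP", ("VBD", "NP")),
    ("PP", ("IN", "NP")),
    ("DT", ("the",)), ("NN", ("cake",)),
    ("NNS", ("children",)), ("NNS", ("students",)), ("NNS", ("mountains",)),
    ("VBD", ("slept",)), ("VBD", ("ate",)), ("VBD", ("saw",)),
    ("IN", ("in",)), ("IN", ("of",)),
]


def shift_reduce_parses(words, rules=RULES, start="S"):
    """Return every shift/reduce action sequence that reduces `words` to `start`."""
    results = []
    agenda = [((), 0, ())]  # (stack, next word index, actions so far)
    while agenda:
        stack, i, actions = agenda.pop()  # depth-first: last in, first out
        if stack == (start,) and i == len(words):
            results.append(actions)
        # Reduce: any rule whose RHS matches the top of the stack.
        for lhs, rhs in rules:
            k = len(rhs)
            if k <= len(stack) and stack[len(stack) - k:] == rhs:
                agenda.append((stack[:len(stack) - k] + (lhs,), i,
                               actions + (f"reduce {lhs} -> {' '.join(rhs)}",)))
        # Shift: move the next word onto the stack.
        if i < len(words):
            agenda.append((stack + (words[i],), i + 1,
                           actions + (f"shift {words[i]}",)))
    return results


sentence = "the children ate the cake in the mountains".split()
parses = shift_reduce_parses(sentence)
print(len(parses))
```

The two action sequences correspond to attaching *in the mountains* to *the cake* (NP → NP PP) or to the verb phrase (VP → VP PP). Along the way the search also tries dead-end reductions, such as reducing NP VP to S right after *ate*: locally possible, globally impossible work.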

Problems with bottom-up parsing

- Unable to deal with empty categories: termination problem, unless rewriting empties as constituents is somehow restricted (but then it's generally incomplete)
- Useless work: locally possible, but globally impossible.
- Inefficient when there is great lexical ambiguity (grammar-driven control might help here)
- Conversely, it is data-directed: it attempts to parse the words that are there.
- Repeated work: anywhere there is common substructure
- Both TD (LL) and BU (LR) parsers can (and frequently do) do work exponential in the sentence length on NLP problems.

Principles for success: what one needs to do

- Left recursive structures must be found, not predicted
- Empty categories must be predicted, not found

An alternative way to fix things

- Grammar transformations can fix both left-recursion and epsilon productions
- Then you parse the same language but with different trees
- Linguists tend to hate you.
- But they shouldn't, provided you can fix the trees post hoc.
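The grammar-transformation fix can be sketched for the common case of immediate left recursion. The rewrite below is the standard textbook one (A → A α | β becomes A → β A′ and A′ → α A′ | e); it is our choice, since the slides do not say which transformation they mean, and it removes left recursion only (it introduces new empty rules rather than eliminating them). Applied to the "cats scratch people with claws" grammar, it lets a naive depth-first top-down recognizer, which would recurse forever on NP → NP PP in the original grammar, terminate. The function names and the new symbol `NP'` are hypothetical.

```python
# "cats scratch people with claws" grammar; () is the empty rule NP -> e.
GRAMMAR = {
    "S":  [("NP", "VP")],
    "VP": [("V", "NP"), ("V", "NP", "PP")],
    "NP": [("NP", "PP"), ("N",), (), ("N", "N")],
    "PP": [("P", "NP")],
    "N":  [("cats",), ("claws",), ("people",), ("scratch",)],
    "V":  [("scratch",)],
    "P":  [("with",)],
}


def remove_immediate_left_recursion(grammar):
    """Rewrite A -> A a | b as A -> b A', A' -> a A' | e (same language)."""
    result = {}
    for lhs, rhss in grammar.items():
        recursive = [rhs[1:] for rhs in rhss if rhs[:1] == (lhs,)]
        others = [rhs for rhs in rhss if rhs[:1] != (lhs,)]
        if not recursive:
            result[lhs] = list(rhss)
            continue
        new = lhs + "'"  # hypothetical name for the fresh nonterminal
        result[lhs] = [rhs + (new,) for rhs in others]
        result[new] = [alpha + (new,) for alpha in recursive] + [()]
    return result


def derivations(grammar, goals, words, i=0):
    """Naive depth-first, leftmost top-down search. Yields the input position
    reached once every goal has been matched, once per derivation."""
    if not goals:
        yield i
        return
    first, rest = goals[0], goals[1:]
    if first in grammar:  # nonterminal: try each rule in order
        for rhs in grammar[first]:
            yield from derivations(grammar, rhs + rest, words, i)
    elif i < len(words) and words[i] == first:  # terminal: match a word
        yield from derivations(grammar, rest, words, i + 1)


def count_parses(grammar, words, start="S"):
    """Count derivations that consume the whole input."""
    return sum(1 for end in derivations(grammar, (start,), words) if end == len(words))


fixed = remove_immediate_left_recursion(GRAMMAR)
print(fixed["NP"])
print(fixed["NP'"])
print(count_parses(fixed, "cats scratch people with claws".split()))
```

The transformed grammar accepts the same strings but builds right-branching `NP'` chains instead of left-branching NP → NP PP structures, which is the "different trees" caveat; mapping them back is the post hoc repair. Indirect left recursion (A → B …, B → A …) needs a more general procedure than this sketch.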
