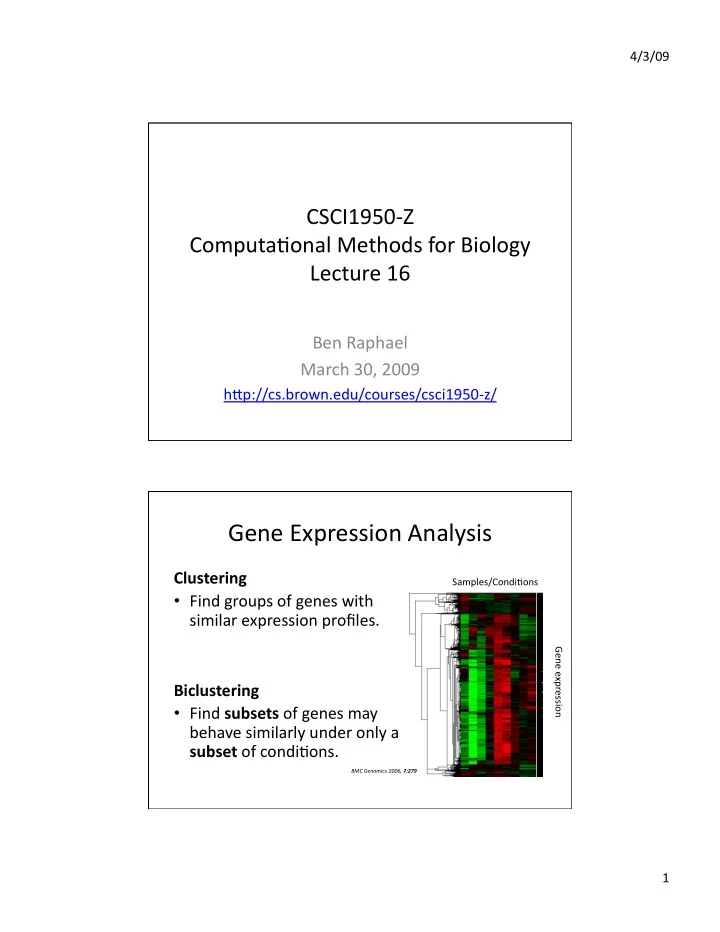

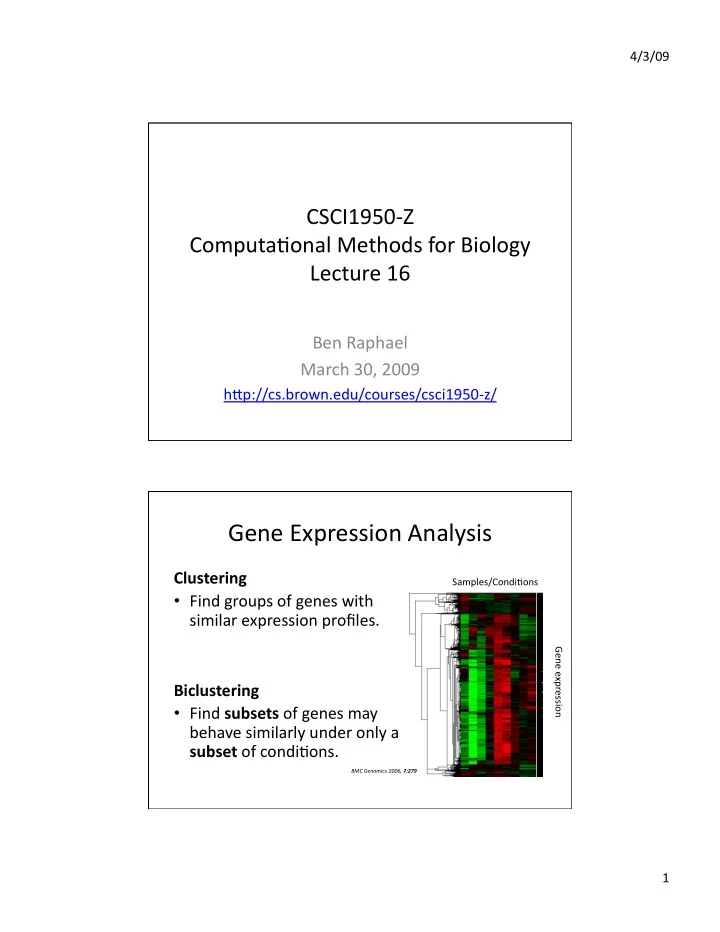

4/3/09 CSCI1950‐Z Computa4onal Methods for Biology Lecture 16 Ben Raphael March 30, 2009 hHp://cs.brown.edu/courses/csci1950‐z/ Gene Expression Analysis Clustering Samples/Condi4ons • Find groups of genes with similar expression profiles. Gene expression Biclustering • Find subsets of genes may behave similarly under only a subset of condi4ons. BMC Genomics 2006, 7:279 1

4/3/09 Classifica4on Use gene expression profiles Samples/Condi4ons to predict/classify samples into a number of predefined groups. Gene expression • Tissue of origin (heart, kidney, brain, etc.) • Cancer vs. normal • Cancer subtypes. BMC Genomics 2006, 7:279 Cancer Subtype Classifica4on Dis4nguish acute lyphoblas4c leukemia (ALL) and acute myeloid leukemia (AML). (Golub et al. Science 1999) 2

4/3/09 Breast Cancer Prognosis • 70 gene signature to predict breast cancer pa4ents with metastasis within 5 years (van de Vijver et al. 2002, van’t Veer et al. 2002) • Now an FDA approved test: Mammaprint Classifica4on Binary classifica2on Given a set of examples ( x i , y i ) , where y i = +‐ 1, from unknown distribu4on D. Design func4on f: R n {‐1,+1} that op7mally assigns addi4onal samples x i to one of two classes. Supervised learning ( x i , y i ) training data x i (j) : feature. R n : feature space. 3

4/3/09 Classifica4on in 2D k‐nearest neighbors 4

4/3/09 Decision Tree • Par44on feature space R n according to series of decision rules. • Internal nodes: Decision rules x i > c • Leaves: Label assigned to point. • “popular among medical scien4sts, perhaps because it mimics the way that a doctor thinks.” (Has4e, Tibshirani, Friedman. Elements of Sta4s4cal Learning) Overfijng • Many classifica4on methods can fit training data with no mistakes. – k‐NN: k=1 – Decision tree with large depth • Classifier will be tuned to “quirks” in training data. Poor generaliza4on to other data sets. • Evaluate classifier on test data, not training data. 5

4/3/09 Training and Tes4ng • Split data D into training/test sets. • Evaluate performance on test set. • Available data D olen limited. E.g. few gene expression samples. • Splijng into training/test sets leaves too few data points. Cross‐Valida4on Split D into K equally sized parts D 1 , …, D k . For k = 1, …K Train on D’ = D \ D k . Test on D k . Compute performance quan4ty ρ k . Output average of ρ k . K‐fold cross‐valida2on . Special case k=n: leave one out cross valida2on . 6

4/3/09 Feature Selec4on • Selec4ng a subset of features (reduc4on of dimensionality) some4mes leads to beHer classifica4on, performance, etc. • Gene expression: subset of genes informa4ve for classifica4on. Results: Class Discovery with TNoM (ben‐Dor, Friedman, Yakhini, 2001) • Find op4mal labeling L. – Solu4on: use heuris4c search • Find mul4ple (subop4mal) labelings – Solu4on: Peeling: remove previously used genes from set. 7

4/3/09 Results: Class Discovery with TNoM (ben‐Dor, Friedman, Yakhini, 2001) Leukemia (Golub et al. 1999): 72 expression profiles. 25 AML, 47 ALL. 7129 genes Lymphoma (Alizadeh et al.): 96 expression profiles, 46 Diffuse large B‐cell lymphoma (DLBCL) 50 from 8 different 4ssues. Lymphoma‐DLBCL: subset of 46 of above. TNoM Results (ben‐Dor, Friedman, Yakhini, 2001) % survival years 40 pa4ents 24 pa4ents with low clinical risk. 8

Recommend

More recommend