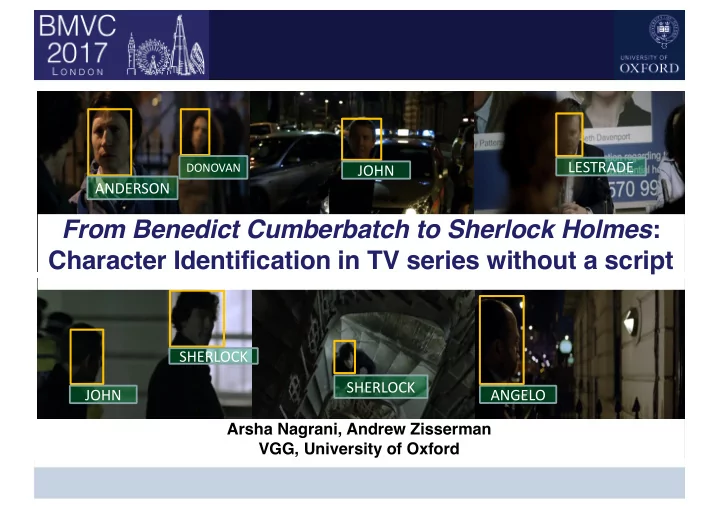

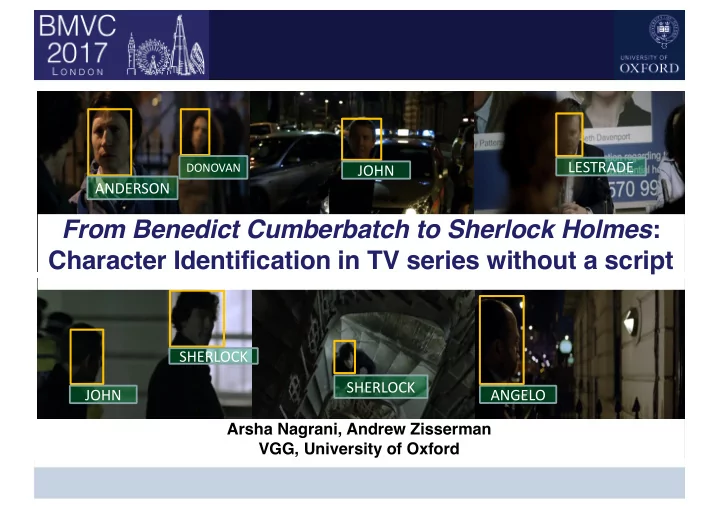

LESTRADE DONOVAN JOHN ANDERSON From Benedict Cumberbatch to Sherlock Holmes : Character Identification in TV series without a script SHERLOCK SHERLOCK JOHN ANGELO Arsha Nagrani, Andrew Zisserman VGG, University of Oxford

Goal Identify every character in every frame of the video Useful for: Ø Content-based browsing e.g. ‘Fast-forward to when Sherlock first meets John’ SHERLOCK Ø One step closer to story understanding JOHN 2 VGG, Dept. of Engineering Science, University of Oxford

Previous Approaches Ø Rely on transcripts or subtitles as weak supervision Subtitles Transcripts who speaks when what is spoken when who speaks what Ø This supervision is only weak, hence techniques like Multiple Instance Learning (MIL) are required as well Everingham et al, 2006 Tapaswi et al, 2016 Bojanowski et al, 2013 Cour et al, 2009 3 VGG, Dept. of Engineering Science, University of Oxford

CNN Face descriptors are marvelous Can we recognise characters from TV shows using faces of their actors only? Actor Images from the Web Raw Facetracks from the video 4 VGG, Dept. of Engineering Science, University of Oxford

Challenges – Different Domains Actor Character Benedict Cumberbatch Sherlock Holmes Actor Images are usually taken from red carpet photoshoots Ø Frontal Ø Good lighting Ø Standard expressions VGG, Dept. of Engineering Science, University of Oxford 5

Challenges Profiles Extreme poses Lighting and contrast Small faces, low resolution Partial Occlusions 6 VGG, Dept. of Engineering Science, University of Oxford

How do we deal with this? Augmentation of actor images 1. Character Context 2. Speech Modality 3. 7 VGG, Dept. of Engineering Science, University of Oxford

How do we deal with this? Augmentation of actor images 1. Character Context 2. Speech Modality 3. 8 VGG, Dept. of Engineering Science, University of Oxford

1. Augmentation of Actor Images 1. Down-sampling using Bi-cubic interpolation 2. Contrast Adjustment 3. Horizontal Flips 9 VGG, Dept. of Engineering Science, University of Oxford

How do we deal with this? Augmentation of actor images 1. Character Context 2. Speech Modality 3. 10 VGG, Dept. of Engineering Science, University of Oxford

2. Character Context VGGFace CNN features are obtained from cropped face regions Ø By retraining on character images we learn hair, make up, expressions of the Ø character Learn the `hairstyle’ of the character, not the `hairstyle’ of the actor. Ø ACTOR CHARACTER CHARACTER Different regions of support 11 VGG, Dept. of Engineering Science, University of Oxford

How do we deal with this? Augmentation of actor images 1. Retraining on facetracks from the TV show 2. Speech Modality 3. 12 VGG, Dept. of Engineering Science, University of Oxford

3. Speech Modality – Voice Classifier Speaker Identification Who is the speaker? Input Output SHERLOCK 13 VGG, Dept. of Engineering Science, University of Oxford

Speech Modality Pipeline 4. Classification Character Face Classifier SVM classifier SHERLOCK High confidence facetrack 1. Labels from facetracks VoxCeleb CNN ASV 1024 Spectrogram 3. Feature Extraction 2. Active Speaker Verification 14 VGG, Dept. of Engineering Science, University of Oxford

Active Speaker Verification - SyncNet Small distance if synchronised Chung, J. S., and Zisserman, A. "Out of time: automated lip sync in the wild." Asian Conference 15 VGG, Dept. of Engineering Science, University of Oxford on Computer Vision , 2016.

Voice Feature Extraction- VGGVox 9x1 3x3x256 3x3x256 300x512 5x5x256 7x7x96 3x3x256 3x3x256 3x3x256 avgpool Raw audio signal C1 maxpool C2 maxpool C5 FC6 C3 C4 300x512 FC7 FC8 Pretrained on 1,251 speakers (VoxCeleb) Nagrani, A. Chung, J. S., and Zisserman, A. ”VoxCeleb: A large scale speaker 16 VGG, Dept. of Engineering Science, University of Oxford identification dataset ” INTERSPEECH , 2017

Voice Classifier Ø 1 vs rest SVM classifier SVM classifier Ø Apply to audio segments where the corresponding face is difficult to identify Obtain labels for extreme poses and profiles 17 VGG, Dept. of Engineering Science, University of Oxford

Putting it all together – Inputs 1. Actor images from the web Benedict Cumberbatch Martin Freeman Rupert Graves Cast Lists easily available on IMDB Actor images 2. Un-labelled facetracks from the TV show Facetracks are obtained using Ø tracking by detection Goal is to update labels using all Ø techniques mentioned so far VGG, Dept. of Engineering Science, University of Oxford 18

Putting it all together We use three 1-vs-rest SVM face classifiers: 1. Actor Face Classifier Ø Trained on augmented actor images only 2. Character Face Classifier Ø Trained on character face images, takes into account face context 3. Character Face Classifier after Voice Correction Ø Trained on face labels following correction by the voice classifier 19 VGG, Dept. of Engineering Science, University of Oxford

Propagation of Confident Labels 1. Actor Face Classifier Face tracks Most confident labels Actor Images 2. Character Face Classifier Train Correct some labels with Voice Classifier 3. Character Face Classifier after Voice Correction Train 20 VGG, Dept. of Engineering Science, University of Oxford

Demo of results at each stage 21 VGG, Dept. of Engineering Science, University of Oxford

Results at each stage - Sherlock Very few Per Character Accuracy PR curve, E01 actor 1 1 1 Many speaking parts images 1.0 1.0 0.8 0.8 0.8 per sample accuracy 0.9 0.9 0.8 0.8 0.6 0.6 0.6 0.7 0.7 0.6 0.6 0.5 0.5 0.4 0.4 0.4 0.4 0.4 0.3 0.3 0.2 0.2 0.2 0.2 0.2 0.1 0.1 0.0 0.0 0 0 0 0 0.2 0.4 0.6 0.8 1 0 0.2 0.4 0.6 0.8 1 0 0.2 0.4 0.6 0.8 1 proportion of tracks face(actor) AP:0.98 face+voice(character) face(character) face(actor) face(character) AP:0.99 face+voice(character) AP:0.99 22 VGG, Dept. of Engineering Science, University of Oxford

Results - Casablanca RICK RENAULT RICK ILSA ILSA LASZLO ILSA Profiles Dark faces Partial Occlusions 23 VGG, Dept. of Engineering Science, University of Oxford

Comparison to state-of-the-art - Casablanca 1 1 1 1 AP: 0.96 AP: 0.93 Our method (final) 0.8 0.8 0.8 0.8 AP: 0.89 per sample accuracy per sample accuracy per sample accuracy per sample accuracy Parkhi ’15 [2] Actor face only 0.6 0.6 0.6 0.6 Bojanowski ’13 [1] AP: 0.75 0.4 0.4 0.4 0.4 0.2 0.2 0.2 0.2 0 0 0 0 0 0 0 0 0.2 0.2 0.2 0.2 0.4 0.4 0.4 0.4 0.6 0.6 0.6 0.6 0.8 0.8 0.8 0.8 1 1 1 1 proportion of tracks proportion of tracks proportion of tracks proportion of tracks [1] P. Bojanowski, F. Bach, I. Laptev, J. Ponce, C. Schmid, J. Sivic, "Finding actors and actions in movies", ICCV, 2013 [2] O. M. Parkhi, E. Rahtu, A. Zisserman, "It's in the bag: Stronger supervision for automated face labelling", ICCV Workshop, 2015 24 VGG, Dept. of Engineering Science, University of Oxford

Demo 25 VGG, Dept. of Engineering Science, University of Oxford

What have we missed? Small and very dark faces Extreme occlusion cases where Back of heads the character is not speaking 26 VGG, Dept. of Engineering Science, University of Oxford

Summary Ø Novel approach that eschews transcripts, subtitles or manual annotation Ø Multimodal method with both voice and face context for recognition Ø Recognises profiles, partial occlusions and extreme poses Ø Beats the state-of-the-art on the Casablanca dataset 27 VGG, Dept. of Engineering Science, University of Oxford

Recommend

More recommend