Dynamic Task Scheduling for the Uintah Framework

Qingyu Meng, Justin Luitjens, and Martin Berzins

Thanks to DOE for funding since 1997 and NSF since 2008.

Uintah Applications: plume fires, angiogenesis, industrial flares, micropin flow, explosions, sandstone compaction, virtual soldier, foam compaction, shaped charges.

Uintah Development
• Uintah has been developed over a decade, based on a far-sighted design by Steve Parker
• Complete separation of user code and parallelism

| | Infrastructure Components | Simulation Components |
|---|---|---|
| Responsibility | Tuning Expert (CS) | Domain Expert (Engineering) |
| Goal | Performance, Scalability | Problem, Methods |
| Major Contributions | Load balancing, AMR, task-graph-based scheduling, asynchronous communication | Arches, ICE, MPM, MPM-ICE, etc. |
| View of Program | Parallel infrastructure: MPI, threads | Serial code written for a patch |

How Does Uintah Work?
• Task-graph specification
  • Computes & requires
  • No processor or domain information
• Patch-based domain decomposition

Patch-Based Domain Decomposition
[Figure: a mesh of cells (with particles) is divided into patches that are distributed across processors; with an adaptive mesh, patches are regridded and load balanced.]

Uintah Scalability
• Currently scales up to 98K cores on Kraken
• Preparing for future machines
  • Petascale
  • Exascale (2018 to ~2020), e.g. the aggressive strawman design: 742 cores per socket, 166,113,024 cores in total (DARPA hardware report, 2009)

Software Model for Exascale
• Silver model for exascale software, which must support:
  • Directed dynamic graph execution
  • Latency hiding
  • Minimal synchronization and overheads
  • Adaptive resource scheduling
  • Heterogeneous processing
• Graph-based asynchronous-task work queue model (DARPA software report, 2009)

Graph-Based Applications
• Charm++: object-based virtualization
• Intel CnC: new language for graph-based parallelism
• PLASMA (Dongarra): DAG-based parallel linear algebra software
[Figure: example task DAG with numbered nodes]

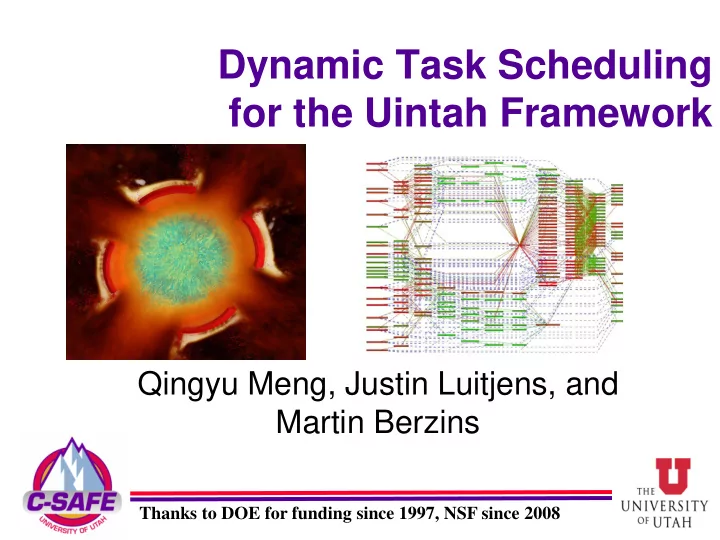

Uintah Distributed Task Graph
• Up to 2 million tasks per timestep globally
• Tasks on local and neighboring patches
• Callback by each patch
• Variables in data warehouse (DW)
  • Read: get() from OldDW and NewDW
  • Write: put() to NewDW
• Communication on cutting edges
[Figure: single-level ICE task graph on 4 patches]
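A minimal sketch of the read/write rule above, assuming a data warehouse is a per-timestep dictionary keyed by (variable name, patch id). The method names echo the slide's get() and put(), but the signatures, the write-once check, and the example task are hypothetical, not Uintah's API.

```python
from typing import Any, Dict, Tuple

# Hypothetical key: (variable name, patch id). Not Uintah's real interface.
Key = Tuple[str, int]


class DataWarehouse:
    """Per-timestep store of variables; each (name, patch) is written once."""

    def __init__(self) -> None:
        self._vars: Dict[Key, Any] = {}

    def put(self, name: str, patch: int, value: Any) -> None:
        key = (name, patch)
        if key in self._vars:
            raise KeyError(f"{name} on patch {patch} was already computed")
        self._vars[key] = value

    def get(self, name: str, patch: int) -> Any:
        return self._vars[(name, patch)]


def hypothetical_task(old_dw: DataWarehouse, new_dw: DataWarehouse, patch: int) -> None:
    """Reads from both warehouses but writes only to the new one."""
    vel_old = old_dw.get("vel", patch)  # value from the previous timestep
    rho = new_dw.get("rho", patch)      # value computed earlier in this timestep
    new_dw.put("vel", patch, vel_old / rho)


old_dw, new_dw = DataWarehouse(), DataWarehouse()
old_dw.put("vel", 0, 4.0)
new_dw.put("rho", 0, 2.0)
hypothetical_task(old_dw, new_dw, patch=0)
print(new_dw.get("vel", 0))  # 2.0

# At the end of the timestep the new warehouse becomes the old one.
old_dw, new_dw = new_dw, DataWarehouse()
```

Because tasks never write to the old warehouse, any number of tasks may read it concurrently without coordination.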

Example Uintah Task from the ICE Algorithm
Compute face-centered velocities:
[Figure: task code annotated with its input variables and its output variables (outputs include boundary conditions)]
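The point of such a task is that it declares only what it requires and what it computes. A sketch of that declarative style, assuming a hypothetical Task class with a ghost-cell width per requirement; the variable names (press_CC, rho_CC, vel_CC, uvel_FC and so on) are illustrative, and the real Uintah interface differs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Requirement:
    label: str        # variable name
    dw: str           # "old" or "new" data warehouse
    ghost_cells: int  # halo width needed from neighboring patches


@dataclass
class Task:
    """Hypothetical task declaration: no processor or domain information."""
    name: str
    requires: List[Requirement] = field(default_factory=list)
    computes: List[str] = field(default_factory=list)

    def require(self, label: str, dw: str = "new", ghost_cells: int = 0) -> "Task":
        self.requires.append(Requirement(label, dw, ghost_cells))
        return self

    def compute(self, label: str) -> "Task":
        self.computes.append(label)
        return self


# Illustrative (hypothetical) declaration for "compute face-centered velocities".
vel_fc = (
    Task("computeVel_FC")
    .require("press_CC", dw="new", ghost_cells=1)
    .require("rho_CC", dw="new", ghost_cells=1)
    .require("vel_CC", dw="old", ghost_cells=1)
    .compute("uvel_FC")
    .compute("vvel_FC")
    .compute("wvel_FC")
)
```

Since the declaration names only variables and halo widths, the scheduler remains free to decide which patches and ranks each instance of the task runs on; boundary conditions would be applied inside the task body, which is not shown.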

Task Graph Compiling
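Compiling turns per-task computes/requires declarations into dependency edges. A sketch, assuming each variable has exactly one producing task per timestep and that requirements met from the old data warehouse add no edges; task and variable names are hypothetical, and Python's standard `graphlib` (3.9+) stands in for Uintah's own graph compiler.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical tasks: name -> (variables required from the new DW, variables computed).
# Requirements satisfied from the old DW come from the previous timestep and add no edges.
TASKS: Dict[str, Tuple[List[str], List[str]]] = {
    "computeDelP": (["uvel_FC", "press_CC"], ["delP"]),
    "computeVel_FC": (["press_CC", "rho_CC"], ["uvel_FC"]),
    "computeRho": ([], ["rho_CC"]),
    "computePress": (["rho_CC"], ["press_CC"]),
}


def compile_graph(tasks: Dict[str, Tuple[List[str], List[str]]]) -> Dict[str, Set[str]]:
    """Map each task to the set of tasks it depends on (its predecessors)."""
    producer: Dict[str, str] = {}
    for name, (_, computes) in tasks.items():
        for var in computes:
            if var in producer:
                raise ValueError(f"{var} is computed by both {producer[var]} and {name}")
            producer[var] = name
    graph: Dict[str, Set[str]] = {name: set() for name in tasks}
    for name, (requires, _) in tasks.items():
        for var in requires:
            if var not in producer:
                raise ValueError(f"no task computes {var} required by {name}")
            graph[name].add(producer[var])
    return graph


graph = compile_graph(TASKS)
order = list(TopologicalSorter(graph).static_order())  # one valid execution order
print(order)
```

`TopologicalSorter` also raises `graphlib.CycleError` if the declarations form a cycle, which is the kind of error a task-graph compiler should report before any task runs.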

Uintah Static Task Scheduler
• Task list
  • Static analysis
  • In-order execution, same order for each patch
• Task status
  • Running -> Finished -> Next task
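To make the cost of in-order execution concrete, here is a toy single-core timing model. It assumes each task has a fixed duration and may have to wait for an MPI message; the durations and arrival times are made up for illustration and are not measurements.

```python
from typing import Dict, List, Tuple


def run_static(order: List[str], duration: Dict[str, float],
               arrival: Dict[str, float]) -> Tuple[float, float]:
    """Toy model: run tasks on one core strictly in the precompiled order.

    arrival[t] is the (hypothetical) time at which task t's MPI inputs arrive;
    tasks absent from `arrival` have no remote inputs.
    Returns (finish time, total time the core spent waiting).
    """
    clock = 0.0
    waited = 0.0
    for task in order:
        start = max(clock, arrival.get(task, 0.0))  # block until inputs arrive
        waited += start - clock
        clock = start + duration[task]              # Running -> Finished -> next task
    return clock, waited


finish, waited = run_static(["A", "B", "C"],
                            duration={"A": 1.0, "B": 1.0, "C": 1.0},
                            arrival={"A": 3.0})
print(finish, waited)  # 6.0 3.0
```

Tasks B and C have no remote inputs and could have run while A's message was in flight; the fixed order leaves the core idle for 3 time units instead.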


Dynamic Multi-threaded Task Graph Execution
1) Static: predetermined order
  • Tasks are synchronized
  • Higher waiting times
2) Dynamic: execute when ready
  • Tasks are asynchronous
  • Lower waiting times
3) Dynamic multi-threaded (future):
  • Task-level parallelism
  • Decreases communication
  • Decreases load imbalance
  • Multicore friendly
  • Supports GPU tasks
[Figure legend: task dependency, execution order]
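A toy single-core model of "execute when ready", assuming fixed task durations and made-up MPI arrival times. The selection rule `min(ready)` is an arbitrary deterministic placeholder, not Uintah's policy; a priority rule would go in its place.

```python
from typing import Dict, List, Set, Tuple


def run_dynamic(deps: Dict[str, Set[str]], duration: Dict[str, float],
                arrival: Dict[str, float]) -> Tuple[float, float, List[str]]:
    """Toy single-core model of dynamic (execute-when-ready) scheduling.

    A task is ready once every task in deps[t] has finished and its MPI inputs
    (hypothetical arrival time arrival[t], default 0) are in.
    Returns (finish time, total waiting time, execution order).
    """
    remaining = {t: set(d) for t, d in deps.items()}
    clock = 0.0
    waited = 0.0
    order: List[str] = []
    while remaining:
        local_ok = [t for t, d in remaining.items() if not d]
        if not local_ok:
            raise ValueError("dependency cycle")
        ready = [t for t in local_ok if arrival.get(t, 0.0) <= clock]
        if not ready:
            # Nothing runnable: idle until the next MPI message arrives.
            next_time = min(arrival[t] for t in local_ok)
            waited += next_time - clock
            clock = next_time
            continue
        task = min(ready)  # placeholder pick; a priority policy would go here
        clock += duration[task]
        order.append(task)
        del remaining[task]
        for d in remaining.values():
            d.discard(task)  # this task's outputs are now available
    return clock, waited, order


finish, waited, order = run_dynamic({"A": set(), "B": set(), "C": set()},
                                    duration={"A": 1.0, "B": 1.0, "C": 1.0},
                                    arrival={"A": 3.0})
print(finish, waited, order)  # 4.0 1.0 ['B', 'C', 'A']
```

With task A's message arriving at t = 3, running B and C first cuts idle time to 1 unit, whereas a fixed A, B, C order would idle for 3 units and finish at t = 6.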

Uintah Dynamic Task Scheduler
• Task queues
  • Internal ready (tasks waiting on MPI)
  • External ready (ready for concurrent execution)
• Task status
  • Not scheduled -> Internal ready -> External ready -> Running -> Finished -> New task(s) satisfied
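A single-threaded sketch of these status transitions, assuming tasks are identified by name, "internal" dependencies are on-node tasks, and each task knows how many MPI receives it needs. The class and method names are hypothetical; threads and actual MPI calls are omitted.

```python
from collections import deque
from typing import Deque, Dict, List, Set


class TwoQueueScheduler:
    """Hypothetical model of the two ready queues and task states (no threads, no real MPI)."""

    def __init__(self, internal_deps: Dict[str, Set[str]], mpi_recvs: Dict[str, int]) -> None:
        self.internal_deps = {t: set(d) for t, d in internal_deps.items()}
        self.pending_recvs = dict(mpi_recvs)       # MPI messages each task still awaits
        self.status = {t: "NotScheduled" for t in internal_deps}
        self.internal_ready: List[str] = []        # local deps met, waiting on MPI
        self.external_ready: Deque[str] = deque()  # everything met, may run now
        for t in internal_deps:
            self._advance(t)

    def _advance(self, t: str) -> None:
        if self.status[t] == "NotScheduled" and not self.internal_deps[t]:
            self.status[t] = "InternalReady"
            self.internal_ready.append(t)
        if self.status[t] == "InternalReady" and self.pending_recvs.get(t, 0) == 0:
            self.status[t] = "ExternalReady"
            self.internal_ready.remove(t)
            self.external_ready.append(t)

    def message_arrived(self, t: str) -> None:
        self.pending_recvs[t] -= 1
        self._advance(t)

    def run_next(self) -> str:
        t = self.external_ready.popleft()
        self.status[t] = "Running"
        # ... the task's kernel would execute here ...
        self.status[t] = "Finished"
        for other, deps in self.internal_deps.items():
            if t in deps:
                deps.discard(t)  # a new task's dependency is satisfied
                self._advance(other)
        return t


sched = TwoQueueScheduler({"A": set(), "B": {"A"}}, mpi_recvs={"B": 1})
print(sched.run_next(), sched.status["B"])  # A InternalReady
sched.message_arrived("B")
print(sched.run_next(), sched.status["B"])  # B Finished
```

Separating the two queues lets the scheduler pick among truly runnable tasks without scanning tasks that are still blocked on communication.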

On-Demand Data Warehouse
• Directory-based hash map keyed by <name, type, patchid>, e.g. <del_T, Global, n/a>, <press, CC, 1>, <press, CC, 2>, <u_vel, FC, 1>
• For fixed-order execution: one variable per key
• Variable versioning for out-of-order execution: each key holds a list of versions (v1, v2, v3, …)
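A sketch of the directory idea, assuming variables are keyed by (name, type, patch id) and that each put appends a new version rather than overwriting. How Uintah decides which version a task reads is not stated here; in this hypothetical class the caller passes the version number explicitly.

```python
from typing import Any, Dict, List, Optional, Tuple

# Directory key: (name, type, patch id); patch id is None for global variables.
Key = Tuple[str, str, Optional[int]]


class VersionedDataWarehouse:
    """Hypothetical directory-based store that keeps every version of a variable."""

    def __init__(self) -> None:
        self._directory: Dict[Key, List[Any]] = {}

    def put(self, name: str, vtype: str, patch: Optional[int], value: Any) -> int:
        versions = self._directory.setdefault((name, vtype, patch), [])
        versions.append(value)
        return len(versions)  # version number, starting at 1

    def get(self, name: str, vtype: str, patch: Optional[int],
            version: Optional[int] = None) -> Any:
        versions = self._directory[(name, vtype, patch)]  # KeyError if never put
        if version is None:
            return versions[-1]  # latest value, as a fixed-order reader would see it
        if not 1 <= version <= len(versions):
            raise IndexError(f"no version {version} of {name}")
        return versions[version - 1]  # out-of-order readers ask for a specific version


dw = VersionedDataWarehouse()
dw.put("del_T", "Global", None, 1e-3)
dw.put("press", "CC", 1, 101.0)  # v1
dw.put("press", "CC", 1, 102.5)  # v2, e.g. after a modifying task
print(dw.get("press", "CC", 1), dw.get("press", "CC", 1, version=1))  # 102.5 101.0
```

Keeping old versions costs memory, but it means a task that runs early or late still finds the exact value its dependencies promised.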

Schedule Global Sync Task
• Synchronization tasks
  • Update a global variable, e.g. MPI Allreduce
  • Call a third-party library, e.g. PETSc
• Out-of-order issues
  • Deadlock
  • Load imbalance
• Task phases
  • One global task per phase
  • Global task runs last
  • Out-of-order execution within a phase
[Figure: two processes that reach global tasks R1 and R2 in opposite orders deadlock; waiting at a global task causes load imbalance]
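Task phases can be read as an ordering rule. A sketch, assuming each task is tagged with a phase number and each phase has at most one global task; it shows two ranks with different local ready orders still reaching R1 and R2 in the same sequence, which rules out the opposite-order deadlock. The helper and task names are hypothetical, and this computes an order rather than running a scheduler.

```python
from collections import defaultdict
from typing import Dict, List


def schedule_by_phase(ready_order: List[str], phase: Dict[str, int],
                      global_task: Dict[int, str]) -> List[str]:
    """Hypothetical helper: order one rank's tasks so global tasks cannot cross.

    Local tasks keep the (out-of-order) sequence in which they became ready on
    this rank, but only within their phase; each phase's single global task
    runs after all of that phase's local tasks and before the next phase.
    """
    by_phase: Dict[int, List[str]] = defaultdict(list)
    for task in ready_order:
        by_phase[phase[task]].append(task)
    order: List[str] = []
    for p in sorted(set(by_phase) | set(global_task)):
        order.extend(by_phase[p])
        if p in global_task:
            order.append(global_task[p])
    return order


phases = {"a": 0, "b": 0, "c": 1, "d": 1}
reductions = {0: "R1", 1: "R2"}  # e.g. two MPI Allreduce tasks
rank0 = schedule_by_phase(["a", "b", "c", "d"], phases, reductions)
rank1 = schedule_by_phase(["d", "c", "b", "a"], phases, reductions)
print(rank0)  # ['a', 'b', 'R1', 'c', 'd', 'R2']
print(rank1)  # ['b', 'a', 'R1', 'd', 'c', 'R2']
```

The price of this rule is that a rank which finishes its phase early must wait at the global task, which is where the load-imbalance concern comes from.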

Dynamic Scheduler Performance Improvements
[Figure: strong scaling (fixed problem size) and weak scaling (fixed problem size per core)]

Task Prioritization Algorithms

| Algorithm | Random | FCFS | PatchOrder | MostMsg. |
|---|---|---|---|---|
| Queue Length | 3.11 | 3.16 | 4.05 | 4.29 |
| Wait Time | 18.9 | 18.0 | 7.0 | 2.6 |
| Overall Time | 315.35 | 308.73 | 187.19 | 139.39 |

[Figure legend: not executed, executed, MPI sends]
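The four algorithms in the table differ only in which ready task runs next. A sketch of one plausible reading of each name, assuming every ready task carries its patch id, its position in the ready queue, and the number of MPI messages it will send; the exact rules behind the measured numbers are not given here, so these selection functions are assumptions.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ReadyTask:
    name: str
    patch: int
    ready_seq: int  # position at which the task entered the ready queue
    mpi_sends: int  # messages to other ranks this task will produce (assumed known)


# One plausible reading of each policy name (assumption); each picks the next
# task to run from a non-empty ready queue.
POLICIES: Dict[str, Callable[[List[ReadyTask]], ReadyTask]] = {
    "Random": lambda q: random.choice(q),
    "FCFS": lambda q: min(q, key=lambda t: t.ready_seq),
    "PatchOrder": lambda q: min(q, key=lambda t: (t.patch, t.ready_seq)),
    "MostMsg": lambda q: max(q, key=lambda t: (t.mpi_sends, -t.ready_seq)),
}

queue = [ReadyTask("a", patch=2, ready_seq=0, mpi_sends=0),
         ReadyTask("b", patch=0, ready_seq=1, mpi_sends=3),
         ReadyTask("c", patch=1, ready_seq=2, mpi_sends=5)]
for name in ("FCFS", "PatchOrder", "MostMsg"):
    print(name, POLICIES[name](queue).name)  # FCFS a / PatchOrder b / MostMsg c
```

Running the task with the most outgoing messages first gets remote ranks their data sooner, which is consistent with MostMsg showing the lowest wait time in the table.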

Granularity Effect
• Decreasing patch size:
  • (+) Increases queue length
  • (+) More overlap, lower task wait time
  • (+) More patches, better load balance
  • (-) More MPI messages
  • (-) More regrid overhead
• Other factors
  • Problem size
  • Implied task-level parallelism
  • Interconnect bandwidth and latency
  • CPU cache size
• Solution: self-tuning?
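The trade-off between more patches and more messages can be estimated by simple counting. A back-of-the-envelope sketch under stated assumptions (cubic domain, cubic patches, face halos only, and the worst case where every pair of neighboring patches sits on different ranks); it ignores regrid cost, cache effects, and interconnect behavior.

```python
def granularity_stats(n_cells: int, patch_cells: int, ghost: int = 1) -> dict:
    """Patch count, face-message count, and halo overhead for a cubic domain.

    Assumes an n_cells^3 domain split into cubic patches of patch_cells^3 cells,
    face neighbors only (no edge/corner halos), and the worst case in which
    every pair of neighboring patches lives on different ranks.
    """
    if n_cells % patch_cells:
        raise ValueError("patch size must divide the domain size")
    k = n_cells // patch_cells           # patches per dimension
    shared_faces = 3 * k * k * (k - 1)   # patch-patch faces in a k x k x k grid
    halo = 6 * ghost * patch_cells ** 2  # ghost cells per patch (face halos)
    return {
        "patches": k ** 3,
        "messages": 2 * shared_faces,    # one message each way per shared face
        "halo_per_cell": halo / patch_cells ** 3,
    }


for p in (16, 8):
    print(p, granularity_stats(64, p))
```

For a 64^3 domain, halving the patch edge from 16 to 8 cells gives 8 times as many patches (512 instead of 64) but raises the worst-case message count from 288 to 2,688 and doubles the halo overhead from 0.375 to 0.75 ghost cells per interior cell.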

Summary
• Dynamic task scheduling
• Supports out-of-order execution
• Two task queues
• Variable versioning
• Task phases
• Task prioritization algorithms
• Ready queue length and task wait time
• Granularity effect
• Multi-threading and self-tuning (future)

Questions

BACKUP SLIDES

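Uintah Components
[Figure: architecture diagram. Simulation components (Arches, ICE, MPM, MPMICE, MPMArches, …) and models (EoS, constitutive, …) belong to the domain expert; infrastructure components (simulation controller, scheduler, load balancer, regridder, archiver, MPI) belong to the tuning expert. Components interact through tasks and callbacks; inputs and outputs include the XML problem specification, checkpoints, and data I/O.]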

Uintah: Task-Based Application
• Automatic dependency analysis
• Automatic message generation
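Automatic message generation follows from a task's requirements plus patch ownership. A sketch, assuming one task runs on every patch, a requirement with a nonzero ghost width needs data from each immediate neighbor patch, and one message is generated per (variable, neighbor patch, destination rank); the helper and data layout are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Message = Tuple[str, int, int, int]  # (variable, source patch, source rank, destination rank)


def generate_messages(owner: Dict[int, int], neighbors: Dict[int, List[int]],
                      requires: List[Tuple[str, int]]) -> Set[Message]:
    """Hypothetical helper: derive MPI messages from (variable, ghost width) requirements."""
    messages: Set[Message] = set()
    for patch, rank in owner.items():
        for var, ghost in requires:
            if ghost == 0:
                continue  # no halo needed: purely local
            for nb in neighbors[patch]:
                if owner[nb] != rank:  # neighbor lives on another rank
                    messages.add((var, nb, owner[nb], rank))
    return messages


# Four patches in a row, 0-1-2-3; patches 0 and 1 on rank 0, patches 2 and 3 on rank 1.
owner = {0: 0, 1: 0, 2: 1, 3: 1}
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
msgs = generate_messages(owner, neighbors, [("press_CC", 1), ("rho_CC", 0)])
print(sorted(msgs))
```

Only the cut between patches 1 and 2 produces traffic, and only for the variable that needs ghost cells; this is the "communication on cutting edges" of the distributed task graph.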

Priority: Most Messages
• Priority external task queue
• Give priority to tasks that satisfy external dependencies first
[Figure: patches on a single core, each labeled with a count of 0, 3, or 5]
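A priority queue makes this policy cheap, at O(log n) per push and pop. A sketch using Python's `heapq`, assuming each task's count of external dependencies it satisfies (for example, MPI messages it sends) is known when it becomes ready; the class name, task names, and counts are hypothetical.

```python
import heapq
import itertools
from typing import List, Tuple


class MostMessagesQueue:
    """Hypothetical external ready queue: pops the task satisfying the most
    external dependencies (counts assumed known when the task becomes ready)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = itertools.count()  # FIFO tie-break among equal counts

    def push(self, task: str, external_deps: int) -> None:
        # heapq is a min-heap, so store the negated count to pop the largest first.
        heapq.heappush(self._heap, (-external_deps, next(self._seq), task))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = MostMessagesQueue()
for task, count in [("p0", 3), ("p1", 5), ("p2", 0), ("p3", 5)]:
    q.push(task, count)
print([q.pop() for _ in range(len(q))])  # ['p1', 'p3', 'p0', 'p2']
```

The sequence counter keeps the heap from ever comparing task names and preserves first-come order among tasks with equal counts.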

External Dependency Counter

MPM-ICE Algorithm
Uintah was originally designed for the simulation of fires and explosions, e.g. metal containers embedded in large hydrocarbon fires.
• ICE is a cell-centered finite volume method for the Navier-Stokes equations
• ICE now handles both fast and slow flows (2009)
• MPM is a novel method that uses particles and nodes
• A Cartesian grid is used as a common frame of reference
• MPM (solids) and ICE (fluids) exchange data several times per timestep, not just through boundary-condition exchange
[Figure: container with PBX explosive]
