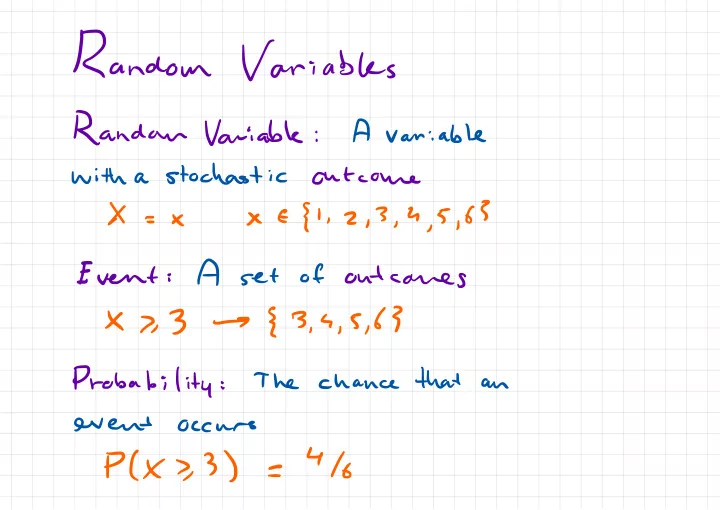

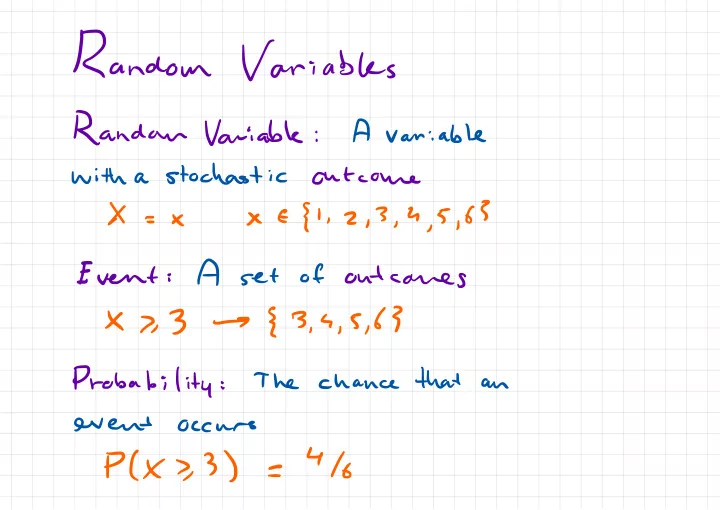

Random Variables

Random variable: a stochastic variable $X$ with outcome $x \in \{1, 2, 3, 4, 5, 6\}$.

Event: a set of outcomes, e.g. $X \geq 3 \rightarrow \{3, 4, 5, 6\}$.

Probability: the chance that an event occurs, $P(X \geq 3) = 4/6$.
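The die example can be checked by counting outcomes. This is a minimal sketch that assumes a fair six-sided die (every outcome equally likely); the helper name `probability` is hypothetical.

```python
from fractions import Fraction

# Sample outcomes of a six-sided die (assumed fair, i.e. uniform).
outcomes = {1, 2, 3, 4, 5, 6}

def probability(event):
    """Probability of an event (a set of outcomes) under the uniform die."""
    return Fraction(len(event & outcomes), len(outcomes))

# The event "X >= 3" is the set of outcomes {3, 4, 5, 6}.
event = {x for x in outcomes if x >= 3}
p_event = probability(event)
print(sorted(event), p_event)
```

Exact fractions are used so that the result compares equal to 4/6 without rounding error.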

Distributions

A distribution maps outcomes to probabilities, e.g. $P(X = x) = 1/6$.

Commonly used (or abused) shorthand: $P(X = x) \rightarrow P(x)$.

Conditional Probabilities

Joint probability of events $A$ and $B$:

$$P(A, B) := P(A \cap B)$$

Conditional probability:

$$P(A \mid B) := \frac{P(A, B)}{P(B)}$$

Sum Rule

General case:

$$P(A \cup B) = P(A) + P(B) - P(A, B)$$

Example, with $A$ = "has short hair" and $B$ = "is a man": the probability of "either has short hair or is a man" is $0.7 = 0.6 + 0.5 - 0.4$.

Corollaries (marginals), for random-variable outcomes and for events:

$$P(Y = y) = \sum_x P(Y = y, X = x), \qquad P(A) = \sum_x P(A, X = x)$$
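The sum rule and the marginalization corollary can be verified on a small joint table. The sketch below assumes the reading $P(\text{short hair}) = 0.6$, $P(\text{man}) = 0.5$, $P(\text{both}) = 0.4$; the remaining three cells are derived from those numbers, and the helper `marginal` is hypothetical.

```python
from fractions import Fraction as F

# Joint distribution over (has_short_hair, is_man).
# Only the 0.4 cell is given; the other cells follow from the
# assumed marginals 0.6 and 0.5 and from normalization.
joint = {
    (True, True): F(4, 10),
    (True, False): F(2, 10),
    (False, True): F(1, 10),
    (False, False): F(3, 10),
}

def marginal(axis, value):
    """Sum the joint over the other variable (marginalization)."""
    return sum(p for key, p in joint.items() if key[axis] == value)

p_short = marginal(0, True)   # P(A)
p_man = marginal(1, True)     # P(B)
p_both = joint[(True, True)]  # P(A, B)
p_either = sum(p for (s, m), p in joint.items() if s or m)  # P(A or B)

print(p_short, p_man, p_both, p_either)
```

Summing the three cells where at least one event holds gives the same value as $P(A) + P(B) - P(A, B)$.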

Bayes' Rule

Conditional probability:

$$P(A \mid B) = \frac{P(A, B)}{P(B)}$$

Product rule:

$$P(A, B) = P(A \mid B)\, P(B) = P(B \mid A)\, P(A)$$

Bayes' rule:

$$P(A \mid B) = \frac{P(A, B)}{P(B)} = \frac{P(B \mid A)\, P(A)}{P(B)}$$

Example

Events: $A$: you have a rare disease. $B$: the test for the disease is positive.

Prior: $P(A) = 0.0001$, $P(\neg A) = 0.9999$

Likelihood: $P(B \mid A) = 0.99$, $P(B \mid \neg A) = 0.01$

Question: what is $P(A \mid B)$?

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}, \qquad P(B) = P(B \mid A)\, P(A) + P(B \mid \neg A)\, P(\neg A)$$

$$P(A \mid B) \approx \frac{0.0001}{0.01 + 0.0001} \approx 0.01$$
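The rare-disease calculation can be reproduced in a few lines. This sketch assumes a prior of 0.0001, a true-positive rate of 0.99, and a false-positive rate of 0.01; the function name `posterior` is hypothetical.

```python
def posterior(prior, p_pos_given_a, p_pos_given_not_a):
    """Bayes' rule for a binary event A given a positive test B."""
    # Evidence P(B), by the sum rule over A and not-A.
    evidence = p_pos_given_a * prior + p_pos_given_not_a * (1.0 - prior)
    return p_pos_given_a * prior / evidence

# Assumed numbers: P(A) = 0.0001, P(B | A) = 0.99, P(B | not A) = 0.01.
p_disease_given_positive = posterior(0.0001, 0.99, 0.01)
print(f"{p_disease_given_positive:.4f}")
```

Under these assumptions a positive test raises the probability of disease from 0.01% to roughly 1%, because false positives among the healthy majority dominate the evidence term.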

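Probability Densities

Suppose that $X$ is a continuous variable, e.g. $X \sim \text{Normal}(0, 1)$. Then $P(X = x) = 0$ for any outcome $x$.

Define an event such as $3 \leq X \leq 4$, which can still have nonzero probability $P(3 \leq X \leq 4)$.

Density function:

$$p_X(x) = \lim_{\delta \to 0} \frac{P(x - \delta \leq X < x + \delta)}{2\delta}$$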
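The limit definition of a density can be checked numerically for a standard normal. This is a sketch under the assumption $X \sim \text{Normal}(0, 1)$; the helper names are hypothetical, and the CDF is computed with `math.erf`.

```python
import math

def normal_cdf(x):
    """CDF of Normal(0, 1), i.e. P(X <= x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def normal_pdf(x):
    """Closed-form density of Normal(0, 1)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def density_estimate(x, delta):
    """P(x - delta <= X < x + delta) / (2 * delta)."""
    return (normal_cdf(x + delta) - normal_cdf(x - delta)) / (2.0 * delta)

# The probability of an interval event is nonzero even though P(X = x) = 0.
p_interval = normal_cdf(4.0) - normal_cdf(3.0)

# The ratio approaches the density as delta shrinks.
for delta in (1.0, 0.1, 0.001):
    print(delta, density_estimate(0.5, delta), normal_pdf(0.5))
```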

Probability Space $(\Omega, \mathcal{F}, P)$

$\Omega$: sample space (set of possible outcomes).

$\mathcal{F}$: set of possible events (every subset of the sample space).

$P$: probability measure, $P : \mathcal{F} \to [0, 1]$, with

$$P(\emptyset) = 0 \quad \text{(the empty set has probability 0)}$$

$$P(\Omega) = 1 \quad \text{(the sample space has probability 1)}$$

$$P\Big(\bigcup_i E_i\Big) = \sum_i P(E_i) \quad \text{when the } E_i \text{ are disjoint}$$

Examples of Reference Measures (not probability measures)

Lebesgue measure: $\mu([a, b]) = b - a$ (width of the interval).

Counting measure: $\mu(E) = |E|$ (number of elements).

Product measure: $\mu(E_1 \times E_2) := \mu_1(E_1) \cdot \mu_2(E_2)$ (measure of a Cartesian product).

Definition of Probability Measure

$$P(A) := \int_{x \in A} p_X(x)\, d\mu(x)$$

Here $A$ is the event, $p_X$ is the density function, $x$ is the outcome, and $d\mu(x)$ is the differential of the reference measure.

Machine learning notation (reference measure implied):

$$P(A) = \int_A p(x)\, dx$$

Expected Values

$$E[X] := \int dx\, p(x)\, x, \qquad X \sim p(x)$$

The density $p(x)$ is implied here; a statistician would define $X \sim p(x)$ first.

Conditional expectation, where $y$ is the observed data:

$$E[f(X, Y) \mid Y = y] := \int dx\, p(x \mid y)\, f(x, y)$$

Expectation w.r.t. a different distribution $q(x)$:

$$E_{q(x)}[f(x)] = \int dx\, q(x)\, f(x)$$
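An expectation $E_{q(x)}[f(x)]$ is defined as an integral, but it can also be approximated by averaging $f$ over samples drawn from $q$. That Monte Carlo approximation is introduced here for illustration and is not part of the slide; the function name `monte_carlo_expectation` and the choices $q = \text{Normal}(0, 1)$ and $f(x) = x^2$ are assumptions.

```python
import random

def monte_carlo_expectation(f, sampler, num_samples=100_000):
    """Approximate E_q[f(x)] by averaging f over samples x ~ q."""
    total = 0.0
    for _ in range(num_samples):
        total += f(sampler())
    return total / num_samples

# Assumed example: q = Normal(0, 1) and f(x) = x^2, whose exact
# expectation is the variance of q, i.e. 1.
rng = random.Random(0)
estimate = monte_carlo_expectation(lambda x: x * x, lambda: rng.gauss(0.0, 1.0))
print(estimate)
```

The estimate fluctuates around the exact value with an error that shrinks roughly as one over the square root of the number of samples.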

Central problem in this course

Quantity of interest:

$$E_{p(x \mid y)}[f(x, y)]$$

$x$: things we don't know. $y$: things we do know.

Examples:

| | Self-driving cars | Diagnosis |
|---|---|---|
| $y$ | Past trajectory | Symptoms |
| $x$ | Future trajectory | Condition |
| $f$ | Will pedestrian cross? | Treatment outcome |

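Example: Biased Coins

Bias: $X \sim \text{Beta}(\alpha, \beta)$, $x \in [0, 1]$. $x = 0.5$ is an unbiased coin, $x = 0$ is always tails, $x = 1$ is always heads.

Trials: $Y_n \sim \text{Bernoulli}(x)$ for $n = 1, \ldots, N$, with $y_n = 1$ for heads and $y_n = 0$ for tails.

Bayes' rule:

$$\underbrace{p(x \mid Y_1 = y_1, \ldots, Y_N = y_N)}_{\text{posterior}} = \frac{\overbrace{p(Y_1 = y_1, \ldots, Y_N = y_N \mid x)}^{\text{likelihood}}\; \overbrace{p(x)}^{\text{prior}}}{\underbrace{p(Y_1 = y_1, \ldots, Y_N = y_N)}_{\text{marginal likelihood}}}$$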

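Prior, with parameters $\alpha$ and $\beta$:

$$p(x) = \text{Beta}(x; \alpha, \beta) = \frac{x^{\alpha - 1} (1 - x)^{\beta - 1}}{B(\alpha, \beta)}, \qquad B(\alpha, \beta) = \frac{\Gamma(\alpha)\, \Gamma(\beta)}{\Gamma(\alpha + \beta)}$$

Likelihood:

$$p(y_{1:N} \mid x) = \prod_{n=1}^{N} p(y_n \mid x), \qquad p(y_n \mid x) = x^{y_n} (1 - x)^{1 - y_n} = \begin{cases} x & y_n = 1 \\ 1 - x & y_n = 0 \end{cases}$$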

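Conjugacy

$$p(x \mid y_{1:N}) = \frac{p(y_{1:N}, x)}{p(y_{1:N})}$$

The numerator depends on $x$; the denominator does not depend on $x$.

$$p(y_{1:N}, x) = p(y_{1:N} \mid x)\, p(x) = \left[ \prod_{n=1}^{N} x^{y_n} (1 - x)^{1 - y_n} \right] \frac{x^{\alpha - 1} (1 - x)^{\beta - 1}}{B(\alpha, \beta)}$$

$$= \frac{1}{B(\alpha, \beta)}\; x^{\sum_{n} y_n + \alpha - 1}\, (1 - x)^{\sum_{n} (1 - y_n) + \beta - 1}$$

Sufficient statistics: $\sum_n y_n$ is the number of heads in $N$ trials and $\sum_n (1 - y_n)$ is the number of tails in $N$ trials.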
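The role of the sufficient statistics can be illustrated directly: the Bernoulli likelihood depends on the data only through the number of heads and tails, not on their order. The function names and the example sequences below are hypothetical.

```python
def likelihood(ys, x):
    """Product of Bernoulli terms x^y (1 - x)^(1 - y) over all trials."""
    out = 1.0
    for y in ys:
        out *= x if y == 1 else (1.0 - x)
    return out

def likelihood_from_counts(heads, tails, x):
    """Same likelihood written in terms of the sufficient statistics."""
    return x ** heads * (1.0 - x) ** tails

# Two sequences with the same counts (3 heads, 2 tails) in different order.
ys_a = [1, 1, 0, 1, 0]
ys_b = [0, 1, 0, 1, 1]
x = 0.3

print(likelihood(ys_a, x), likelihood(ys_b, x), likelihood_from_counts(3, 2, x))
```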

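Conjugacy

$$p(x \mid y_{1:N}) = \frac{p(y_{1:N}, x)}{p(y_{1:N})}, \qquad p(y_{1:N}, x) = p(y_{1:N} \mid x)\, p(x)$$

$$p(y_{1:N}, x) = \frac{x^{\alpha - 1} (1 - x)^{\beta - 1}}{B(\alpha, \beta)} \prod_{n=1}^{N} x^{y_n} (1 - x)^{1 - y_n} = \frac{1}{B(\alpha, \beta)}\; x^{\sum_{n} y_n + \alpha - 1}\, (1 - x)^{\sum_{n} (1 - y_n) + \beta - 1}$$

With $\tilde{\alpha} = \sum_{n=1}^{N} y_n + \alpha$ and $\tilde{\beta} = \sum_{n=1}^{N} (1 - y_n) + \beta$:

$$p(y_{1:N}, x) = \frac{x^{\tilde{\alpha} - 1} (1 - x)^{\tilde{\beta} - 1}}{B(\alpha, \beta)} = \frac{B(\tilde{\alpha}, \tilde{\beta})}{B(\alpha, \beta)}\; \text{Beta}(x; \tilde{\alpha}, \tilde{\beta})$$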

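Conjugate

$$p(y_{1:N}, x) = \underbrace{\frac{B(\tilde{\alpha}, \tilde{\beta})}{B(\alpha, \beta)}}_{\text{doesn't depend on } x}\; \underbrace{\text{Beta}(x; \tilde{\alpha}, \tilde{\beta})}_{\text{depends on } x} = p(y_{1:N})\; p(x \mid y_{1:N})$$

Posterior:

$$p(x \mid y_{1:N}) = \text{Beta}(x; \tilde{\alpha}, \tilde{\beta}), \qquad \tilde{\alpha} = \sum_{n=1}^{N} y_n + \alpha, \qquad \tilde{\beta} = \sum_{n=1}^{N} (1 - y_n) + \beta$$

Marginal likelihood:

$$p(y_{1:N}) = \frac{B(\tilde{\alpha}, \tilde{\beta})}{B(\alpha, \beta)}$$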
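The conjugate update amounts to adding head and tail counts to the prior parameters. The sketch below assumes a Beta prior on the coin bias with Bernoulli trials; the function names, the data `ys`, and the prior Beta(2, 2) are made up for illustration. The Beta function is evaluated in log space with `math.lgamma`.

```python
import math

def log_beta(a, b):
    """log B(a, b) = log Gamma(a) + log Gamma(b) - log Gamma(a + b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

def beta_pdf(x, a, b):
    """Density Beta(x; a, b) for 0 < x < 1."""
    return math.exp((a - 1) * math.log(x) + (b - 1) * math.log(1 - x) - log_beta(a, b))

def posterior_params(ys, alpha, beta):
    """Add the number of heads to alpha and the number of tails to beta."""
    heads = sum(ys)
    tails = len(ys) - heads
    return alpha + heads, beta + tails

def marginal_likelihood(ys, alpha, beta):
    """p(y_{1:N}) as the ratio of posterior to prior Beta normalizers."""
    a_post, b_post = posterior_params(ys, alpha, beta)
    return math.exp(log_beta(a_post, b_post) - log_beta(alpha, beta))

# Hypothetical data: three heads, one tail, with an assumed Beta(2, 2) prior.
ys = [1, 0, 1, 1]
a_post, b_post = posterior_params(ys, 2.0, 2.0)
evidence = marginal_likelihood(ys, 2.0, 2.0)
print(a_post, b_post, evidence)
```

Working with `lgamma` rather than `gamma` avoids overflow when the counts become large.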

Predictive Distribution

$$p(y_{N+1} \mid y_{1:N}) = \int dx\; p(y_{N+1}, x \mid y_{1:N})$$

The integrand is the joint probability of the next trial and the coin bias.

$$= \int dx\; p(y_{N+1} \mid x)\; p(x \mid y_{1:N}) = E_{p(x \mid y_{1:N})}\big[ p(y_{N+1} \mid x) \big]$$

Example: Weighted Coin (Exercise)
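For a coin with a Beta posterior, the predictive probability of heads is the posterior mean of the bias. The sketch below assumes a posterior $\text{Beta}(x; a, b)$ and compares a midpoint-rule approximation of the integral with the known mean $a / (a + b)$ of a Beta distribution; the function names and the parameters (5, 3) are hypothetical.

```python
import math

def beta_pdf(x, a, b):
    """Density Beta(x; a, b) for 0 < x < 1."""
    log_norm = math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)
    return math.exp((a - 1) * math.log(x) + (b - 1) * math.log(1 - x) - log_norm)

def predictive_heads_numeric(a, b, steps=20000):
    """Midpoint-rule approximation of the integral of x * Beta(x; a, b) on [0, 1]."""
    h = 1.0 / steps
    return sum((i + 0.5) * h * beta_pdf((i + 0.5) * h, a, b) for i in range(steps)) * h

def predictive_heads_closed_form(a, b):
    """Mean of Beta(a, b), which equals p(y_{N+1} = 1 | y_{1:N})."""
    return a / (a + b)

# Assumed posterior parameters, e.g. after 3 heads and 1 tail under Beta(2, 2).
print(predictive_heads_numeric(5.0, 3.0), predictive_heads_closed_form(5.0, 3.0))
```

The integrand uses $p(y_{N+1} = 1 \mid x) = x$, so the expectation under the posterior reduces to the posterior mean.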

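Why is Bayesian Inference Hard?

Example: Mixture of Gaussians

Center: $\mu_k \sim \text{Normal}(0, 1)$, $k = 1, \ldots, K$

Width: $\sigma_k \sim \text{Gamma}(1, 1)$, $k = 1, \ldots, K$

Cluster assignment: $z_n \sim \text{Discrete}(1/K, \ldots, 1/K)$, $n = 1, \ldots, N$

Observations: $y_n \mid z_n = k, \mu_{1:K}, \sigma_{1:K} \sim \text{Normal}(\mu_k, \sigma_k)$

Marginal likelihood:

$$p(y_{1:N}) = \int d\mu_{1:K}\, d\sigma_{1:K} \sum_{z_{1:N}} p(y_{1:N}, z_{1:N}, \mu_{1:K}, \sigma_{1:K})$$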
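Sampling from the mixture model is easy even though its marginal likelihood is not. The sketch below draws one dataset from the generative process; it assumes that $\sigma_k$ is a standard deviation and that $\text{Gamma}(1, 1)$ has shape 1 and scale 1, and the function name `sample_mixture` is hypothetical.

```python
import random

def sample_mixture(K, N, seed=0):
    """Draw centers, widths, assignments, and observations from the model."""
    rng = random.Random(seed)
    mu = [rng.gauss(0.0, 1.0) for _ in range(K)]            # centers
    sigma = [rng.gammavariate(1.0, 1.0) for _ in range(K)]  # widths (assumed std. dev.)
    z = [rng.randrange(K) for _ in range(N)]                # uniform cluster assignments
    y = [rng.gauss(mu[k], sigma[k]) for k in z]             # observations
    return mu, sigma, z, y

K, N = 3, 100
mu, sigma, z, y = sample_mixture(K, N)

# The sum over assignments z_{1:N} in the marginal likelihood has K^N terms.
num_assignments = K ** N
print(len(y), num_assignments)
```

Even for this small example the sum over assignments has $3^{100}$ terms, before the integrals over centers and widths are considered, which is why the marginal likelihood cannot be computed by brute force.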
