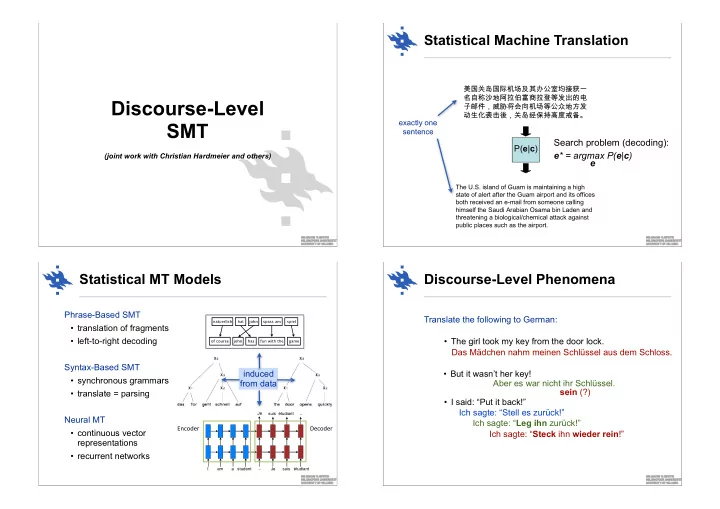

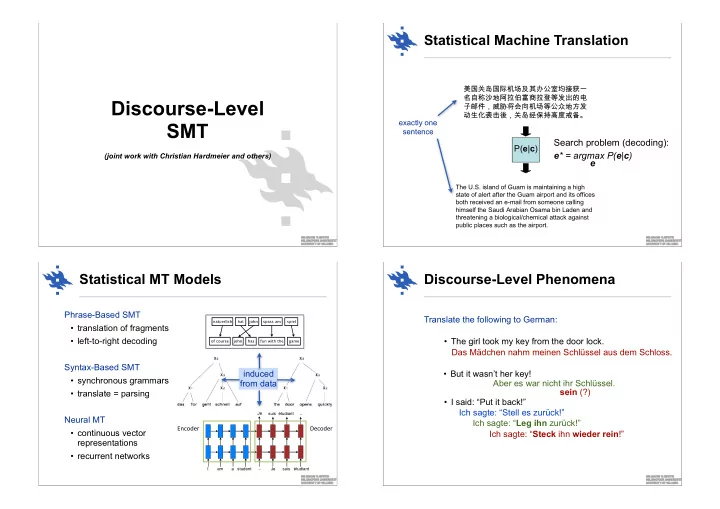

Discourse-Level Statistical Machine Translation
(joint work with Christian Hardmeier and others)

Statistical Machine Translation
Search problem (decoding): $e^* = \arg\max_e P(e \mid c)$
Example (exactly one SMT sentence): The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.

Statistical MT Models
Phrase-Based SMT
• translation of fragments
• left-to-right decoding
Syntax-Based SMT
• synchronous grammars induced from data
• translate = parsing
Neural MT
• continuous vector representations
• recurrent networks
(figure: encoder-decoder network translating "I am a student" into "Je suis étudiant")

Discourse-Level Phenomena
Translate the following to German:
• The girl took my key from the door lock. → Das Mädchen nahm meinen Schlüssel aus dem Schloss.
• But it wasn’t her key! → Aber es war nicht ihr Schlüssel. (ihr or sein (?))
• I said: “Put it back!” → Ich sagte: “Stell es zurück!” / Ich sagte: “Leg ihn zurück!” / Ich sagte: “Steck ihn wieder rein!”

Connectedness of Natural Language
Textual cohesion
• discourse markers
• referential devices (e.g. pronominal anaphora)
• ellipses (word omissions) and substitutions
• lexical cohesion (word repetition, collocation)
Textual coherence
• semantically meaningful relations
• logical tense structure
• presuppositions and implications connected to general world knowledge

Textual Cohesion
Subtitle examples (English → Swedish) for the word "honey":
The 10 commandments (1956)
• To some land flowing with milk and honey! → Till ett land fullt av mjölk och honung.
• I’ve never tasted honey. → Jag har aldrig smakat honung.
• He showed you no milk and honey! → Han gav er ingen mjölk och honung.
Kerd ma lui (2004)
• Mari honey ... → Mari, gumman ...
• Sweetheart, where are you going? → Älskling, var ska du?
• Who was that, honey? → Vem var det, gumman?
The incredibles (2004)
• How you doing, honey? → Hur går det älskling?
• Do I have to answer? → Måste jag svara på det?
• Kids, strap yourselves down like I told you. → Barn ... Gör som jag sa åt er ..
• Here we go, honey. → Nu gäller det älskling
“One sense per discourse” - “One translation per discourse”

Discourse and Machine Translation
Long-distance relations are lost in local MT models
• sentence-by-sentence translation
• limited context window
Discourse-level devices do not easily map between languages
• explicit vs. implicit discourse markers
• grammatical agreement in anaphoric relations
Terminological consistency
• domain-specific requirements

Locality in Phrase-Based SMT
Source: Bakom huset hittade polisen en stor mängd narkotika .
• context-independent phrase translations: Behind the house found police a large quantity of narcotics .
• context-independent re-ordering models: Behind the house police found a large quantity of narcotics .
• small context window from the N-gram language model: "Behind the house", "the house police", "house police found", "police found a", "found a large"

Decoding by Hypothesis Expansion
• left-to-right decoding
• dynamic programming using hypothesis recombination
(figure: search graph of partial hypotheses for the input "er geht ja nicht nach hause", expanded left to right with candidate words such as "he", "it", "are", "yes", "does not", "goes", "go", "to", "home", "house" and their log-probability scores)

Hypothesis Recombination
Combine branches to greatly reduce the search space
• only possible with strictly local models
• lossy beam search is severely affected if recombination cannot be done

Lexical Cohesion and Consistency
Lexical consistency and textual cohesion
• encourage consistent lexical choice (two-pass decoding)
• re-ranking MT output (n-best lists)
• explicit cohesion model
Natural Repetitions and Probabilistic Models
• one sense per discourse
• likelihood to see the same term goes up

Cache-Based Models
Adaptive language models
• cache information (even across sentence boundaries)
• integrated topic model, topic-shift detection
Cached Language Models
• standard n-gram language models: modified likelihoods
• add term likelihoods from caching history
• include decay function to slowly forget cached history

$P(w_1 .. w_n) = (1 - \lambda) \, P_{n\text{-gram}}(w_1 .. w_n) + \lambda \, P_{cache}(w_1 .. w_n)$

where $P_{n\text{-gram}}$ is fixed (estimated from training data) and $P_{cache}$ is dynamic (estimated from the cache).
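The interpolation of a fixed n-gram model with a dynamic cache model can be illustrated with a minimal sketch. It makes several assumptions that are not in the slides: the cache model is a plain unigram relative frequency over the most recent words, the interpolation is applied per word rather than per sequence, and the names `cache_probability` and `interpolated_probability` as well as the n-gram probability value are hypothetical.

```python
from collections import Counter, deque


def cache_probability(word, cache):
    """Unigram relative frequency of `word` in the cache (0.0 if the cache is empty)."""
    if not cache:
        return 0.0
    return Counter(cache)[word] / len(cache)


def interpolated_probability(word, ngram_prob, cache, lam=0.1):
    """(1 - lambda) * P_ngram + lambda * P_cache for a single word.

    `ngram_prob` stands in for the fixed model estimated from training data;
    the cache part is re-estimated from the running history.
    """
    return (1.0 - lam) * ngram_prob + lam * cache_probability(word, cache)


# A cache of the 5 most recent words (the cache size is an illustrative choice).
history = deque("the police found the narcotics".split(), maxlen=5)

# "police" is rare under the (made-up) n-gram model but was seen recently.
p_seen = interpolated_probability("police", 0.001, history, lam=0.2)
p_unseen = interpolated_probability("house", 0.001, history, lam=0.2)
print(p_seen, p_unseen)
```

A word that was seen recently receives a much higher probability than an equally rare word that is not in the cache, which is the "likelihood to see the same term goes up" effect.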

Cache-Based Models
Decaying Cache Models

$P_{decaycache}(w_n \mid w_{n-K} .. w_{n-1}) \approx \frac{1}{Z} \sum_{i=n-K}^{n-1} I(w_n = w_i) \, \exp(-\alpha (n - i))$

Model Perplexity on Out-Of-Domain Data

| cache size | λ = 0.005 | λ = 0.05 | λ = 0.1 | λ = 0.2 | λ = 0.3 |
|---|---|---|---|---|---|
| 0 | 376.124 | 376.124 | 376.124 | 376.124 | 376.124 |
| 50 | 317.695 | 270.700 | 259.212 | 256.376 | 264.905 |
| 100 | 314.195 | 261.115 | 246.618 | 239.237 | 243.276 |
| 500 | 313.591 | 252.155 | 233.098 | 219.118 | 216.989 |
| 1000 | 310.135 | 240.646 | 217.996 | 199.221 | 192.870 |
| 2000 | 309.362 | 234.570 | 209.578 | 187.857 | 179.056 |
| 5000 | 312.367 | 235.323 | 209.068 | 185.789 | 175.783 |
| 10000 | 315.435 | 237.633 | 210.745 | 186.647 | 176.061 |
| 20000 | 318.101 | 239.868 | 212.471 | 187.735 | 176.674 |

Large impact! → 53.3% perplexity reduction

(figure: perplexity as a function of the decay ratio, from 0 to 0.003, for cache sizes 2000, 5000 and 10000)

Cached Translation Models
Prefer consistent translation options

$\phi_{cache}(e_n \mid f_n) = \frac{\sum_{i=1}^{K} I(\langle e_n, f_n \rangle = \langle e_i, f_i \rangle) \cdot \exp(-\alpha i)}{\sum_{i=1}^{K} I(f_n = f_i)}$

Selective caching
• content words only (approximated by length threshold)
• reliable hypotheses (hypothesis cost threshold)

Cache Models in Out-of-domain SMT
(figure: BLEU score difference, cache models vs. standard models, scored per document)
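The two decaying cache scores can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the implementation behind the reported numbers: the slides do not define the normaliser Z, so the sketch takes it to be the sum of all decay weights in the window; the translation cache is assumed to be ordered with the most recent entry at i = 1; and the function names are hypothetical.

```python
import math


def decaying_cache_prob(word, history, alpha=0.001):
    """Recency-weighted cache probability of `word` given the last K words.

    history[i] is the word at position i; the predicted word sits at
    position n = len(history), so the distance to history[i] is n - i.
    Assumption: Z is the sum of all decay weights in the window.
    """
    n = len(history)
    weights = [math.exp(-alpha * (n - i)) for i in range(n)]
    z = sum(weights)
    if z == 0.0:
        return 0.0
    return sum(w for w, h in zip(weights, history) if h == word) / z


def cache_tm_score(src, tgt, cache, alpha=0.001):
    """Decayed share of cached translations of `src` that equal `tgt`.

    `cache` is a list of (source, target) pairs.
    Assumption: the list is ordered most recent first, so i = 1 is the newest.
    """
    num = sum(math.exp(-alpha * i)
              for i, pair in enumerate(cache, start=1)
              if pair == (src, tgt))
    den = sum(1 for f, _ in cache if f == src)
    return num / den if den else 0.0


words = ["a", "b", "a"]
print(decaying_cache_prob("a", words, alpha=math.log(2)))

pairs = [("Erpressung", "extortion"),
         ("Erpressung", "blackmail"),
         ("Polizei", "police")]
print(cache_tm_score("Erpressung", "extortion", pairs, alpha=math.log(2)))
```

With the decay set to ln 2, each step back in the history halves the weight of a match, so the most recent occurrence dominates the score.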

Cache Models in Out-of-domain SMT
(figure: BLEU score difference, cache LM vs. standard LM and cache TM vs. standard TM)

Mixed-Domain Models
WMT 2010 data (train = Europarl + News, test = News):

| system | BLEU | 1-gram | 2-gram | 3-gram | 4-gram |
|---|---|---|---|---|---|
| de-en baseline | 21.3 | 57.4 | 27.8 | 15.1 | 8.6 |
| de-en cache | 21.5 | 58.1 | 28.1 | 15.2 | 8.7 |
| en-de baseline | 15.6 | 52.5 | 21.7 | 10.6 | 5.5 |
| en-de cache | 14.4 | 52.6 | 21.0 | 9.9 | 4.9 |
| es-en baseline | 26.7 | 61.7 | 32.7 | 19.9 | 12.6 |
| es-en cache | 26.1 | 62.6 | 32.7 | 19.8 | 12.5 |
| en-es baseline | 26.9 | 61.5 | 33.3 | 20.5 | 12.9 |
| en-es cache | 23.0 | 60.6 | 30.4 | 17.6 | 10.4 |

→ no success!

One Problem: Error Propagation
Example 1
• input: Naturschützer wird der Erpressung beschuldigt
• baseline: facing conservationists is accused of extortion
• cache: facing conservationists is accused of extortion
• reference: Nature protection officers accused of blackmail
Example 2
• input: Die Leitmeritz-Polizei beschuldigte den Vorsitzenden der Bürgervereinigung "Naturschutzgemeinschaft Leitmeritz" wegen Erpressung.
• baseline: the leitmeritz-polizei accused the chairman of the bürgervereinigung " naturschutzgemeinschaft leitmeritz " because of blackmail .
• cache: the leitmeritz-polizei accused the chairman of the bürgervereinigung " naturschutzgemeinschaft leitmeritz " because of extortion .
• reference: The Litomerice police have accused the chairman of the Litomerice Nature Protection Society civil association of blackmail.

Discussion
Mixed Results with Cache-Based Models
• lower perplexity especially on out-of-domain data
• not always positive effects when used in SMT
What is the problem?
• better explains human data (not generated data)
• pushes decoder in the wrong direction when used for generating machine translation output (error propagation!)
• cache model part is too simple (usually unigram model)
Possible application: Interactive Machine Translation

Beam Search Decoding
(figure: beam search graph of partial hypotheses with candidate words such as "he", "it", "are", "does not", "goes", "go", "to", "home", "house" and their log-probability scores)
Advantages:
• very good search results in a huge search space
• manageable complexity
• best efficiency / accuracy trade-off with current models
Disadvantages:
• Markov assumption (independence outside of limited history)
• sentence-internal long-distance dependencies dramatically increase search space and cause more search errors
• difficult to integrate cross-sentence dependencies

Document-Level Decoding

Document-Level Decoding in Docent
Local search with stochastic search operations
Initial state
• randomly selected segmentation and translation
• or initialised by beam search with local features only
Search operations
• simple operations that open entire search space
• randomly selecting next operation
Search and Termination
• accept useful changes
• until step limit is reached
• or rejection limit is reached

Document-Level Decoding
(figure: DOCENT pipeline; the document translation is initialised by MOSES or at random, then a loop of change operation, score update and accept / reject runs until the step limit ($10^8$) or the rejection limit ($10^5$) is reached and the final document translation is output)
Search is non-deterministic
• start with a complete (suboptimal) solution
• apply small changes anywhere to improve it
• hill-climbing or annealing
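The local search loop described above can be sketched as a small hill-climbing example. This is a toy illustration, not Docent's code: the translation options, their local scores, the pairwise consistency bonus and all function names are invented for the example, and the rejection limit is interpreted here as a number of consecutive rejected changes, which the slides do not specify.

```python
import random

# Hypothetical local model scores for one ambiguous word in three sentences.
OPTIONS = [
    {"extortion": -1.0, "blackmail": -1.2},
    {"blackmail": -1.0, "extortion": -1.1},
    {"blackmail": -1.0, "extortion": -1.3},
]
CONSISTENCY_BONUS = 0.5  # made-up document-level reward per agreeing sentence pair


def score(state):
    """Sum of local scores plus a bonus for every pair of identical choices."""
    local = sum(OPTIONS[i][choice] for i, choice in enumerate(state))
    pairs = sum(1 for i in range(len(state))
                for j in range(i + 1, len(state)) if state[i] == state[j])
    return local + CONSISTENCY_BONUS * pairs


def propose(state, rng):
    """Change operation: re-translate one randomly chosen sentence."""
    i = rng.randrange(len(state))
    alternatives = [t for t in OPTIONS[i] if t != state[i]]
    new_state = list(state)
    new_state[i] = rng.choice(alternatives)
    return new_state


def hill_climb(initial, step_limit=10_000, rejection_limit=50, seed=0):
    """Accept only improving changes; stop at the step or rejection limit."""
    rng = random.Random(seed)
    state, best, rejected = list(initial), score(initial), 0
    for _ in range(step_limit):
        candidate = propose(state, rng)
        candidate_score = score(candidate)
        if candidate_score > best:
            state, best, rejected = candidate, candidate_score, 0
        else:
            rejected += 1
            if rejected >= rejection_limit:
                break
    return state, best


# Initial state: the locally best option per sentence (as a sentence-level decoder would pick).
initial = [max(opts, key=opts.get) for opts in OPTIONS]
final, final_score = hill_climb(initial)
print(initial, "->", final)
```

Because the score is computed over the whole document, the search can trade a slightly worse local choice in one sentence for consistent terminology across all three, which is hard to express in sentence-level beam search.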
