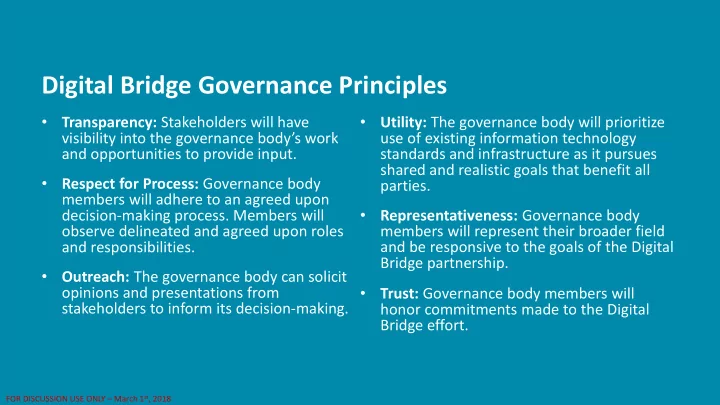

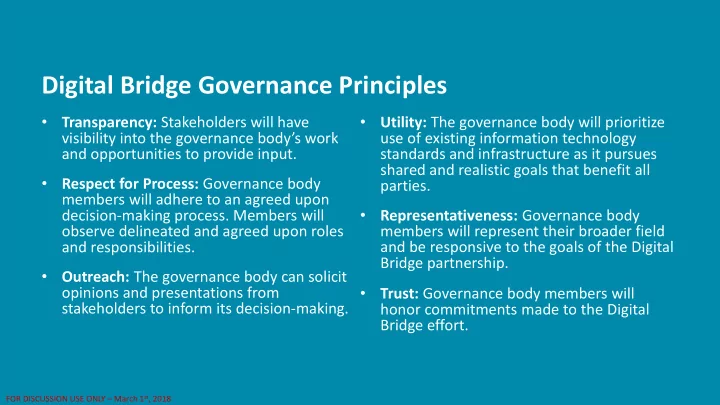

Digital Bridge Governance Principles

- Transparency: Stakeholders will have visibility into the governance body’s work and opportunities to provide input.
- Respect for Process: Governance body members will adhere to an agreed-upon decision-making process. Members will observe delineated and agreed-upon roles and responsibilities.
- Outreach: The governance body can solicit opinions and presentations from stakeholders to inform its decision-making.
- Utility: The governance body will prioritize use of existing information technology standards and infrastructure as it pursues shared and realistic goals that benefit all parties.
- Representativeness: Governance body members will represent their broader field and be responsive to the goals of the Digital Bridge partnership.
- Trust: Governance body members will honor commitments made to the Digital Bridge effort.

FOR DISCUSSION USE ONLY – March 1st, 2018

Governance Body Meeting

Thursday, March 1, 2018, 12:00 – 1:30 PM ET

This meeting will be recorded for note-taking purposes only.


Meeting agenda

| Time | Agenda item |
|---|---|
| 12:00 PM | Call to order – John Lumpkin |
| 12:04 PM | Agenda review and approval – John Lumpkin |
| 12:05 PM | In-person meeting follow-up: Draft eCR demonstration success factors – Charlie Ishikawa |
| 12:30 PM | eCR implementation progress – Jim Jellison |
| 12:45 PM | Draft Digital Bridge eCR evaluation plan – Jeff Engel |
| Remaining time | Announcements – Charlie Ishikawa |
| 1:30 PM | Adjournment – John Lumpkin |

Purpose: The purpose of this meeting is to work toward a common vision for exchanging actionable information between public health and health care.

Objectives: By the end of this meeting, the governance body will have:

1. Discussed draft eCR demonstration success factors developed during the January in-person meeting
2. Identified and discussed the status of eCR implementation work
3. Discussed the draft implementation evaluation plan

Materials:

1. Draft evaluation plan for eCR implementation
2. Governance body eCR demonstration pledges
3. Draft Digital Bridge eCR demonstration success factors
4. Meeting slide deck

In-person Meeting Follow-up: Draft eCR demonstration success factors

Charlie Ishikawa

Key in-person meeting accomplishments

1. Identified facilitators of and challenges to eCR implementation work
2. Drafted Digital Bridge eCR demonstration success factors
3. Documented governance body action pledges for 2018 eCR demonstration work
4. Identified a range of activities needed to take eCR from demonstration to early adoption, and then to widespread adoption and workflow integration
5. Discussed Digital Bridge sustainability


Discussion

1. What is missing?
2. How can what is there be improved?
3. What does the governance body want to do with this?

eCR Implementation Progress

Jim Jellison

Evaluation Committee Update: Main features of the evaluation plan

Jeff Engel (Chair, Evaluation Committee)

Evaluation purpose and approach

- The Digital Bridge eCR approach uses new infrastructure and standards, much of which has not yet been tested or piloted.
- Purpose: Assess satisfaction with the Digital Bridge eCR approach and estimate the resources needed to implement it.
- Approach: A combination of formative and process evaluation
- The evaluation plan is designed to use the experiences of the implementation sites to inform ongoing development of the Digital Bridge eCR approach.

Evaluation goals

1. Identify and describe the overall processes by which the sites initiated and implemented eCR, and the factors that influenced them
2. Determine eCR functioning and performance in terms of:
   a. System/core component functionality and performance;
   b. Case reporting quality and performance (completeness, accuracy, timeliness).
3. Identify the resources needed to initiate and implement an eCR system.
4. Identify the potential value and benefits of eCR to stakeholders.
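The plan does not say how completeness (goal 2b) will be computed. A minimal sketch, assuming completeness is measured as the share of eICR reports in which each required data element is populated; the field names below are hypothetical and are not taken from the eICR specification or from this plan:

```python
from typing import Iterable, Mapping, Sequence

# Hypothetical field list for illustration only; the evaluation plan does
# not specify which eICR data elements will be checked.
REQUIRED_FIELDS = ("patient_name", "date_of_birth", "diagnosis_code", "encounter_date")


def field_completeness(
    records: Iterable[Mapping[str, object]],
    fields: Sequence[str] = REQUIRED_FIELDS,
) -> dict[str, float]:
    """Share of records in which each field is populated.

    A field counts as populated when it is present and its value is
    neither None nor an empty string.
    """
    records = list(records)
    if not records:
        raise ValueError("at least one record is required")
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / len(records)
        for field in fields
    }


# Two made-up reports: the second is missing date of birth and encounter date.
reports = [
    {"patient_name": "A", "date_of_birth": "1990-01-01",
     "diagnosis_code": "X", "encounter_date": "2018-03-01"},
    {"patient_name": "B", "date_of_birth": None,
     "diagnosis_code": "Y", "encounter_date": ""},
]
print(field_completeness(reports))
# -> {'patient_name': 1.0, 'date_of_birth': 0.5, 'diagnosis_code': 1.0, 'encounter_date': 0.5}
```

Reporting completeness per field, rather than as a single score, shows which data elements a site's implementation tends to leave blank.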

Reminder: five conditions in scope

- Chlamydia
- Gonorrhea
- Pertussis
- Salmonellosis
- Zika virus infection

Core components of the Digital Bridge eCR approach

Key concepts to evaluate

- eCR performance
  - Data quality: completeness and validity of the information shared
  - Acceptability: willingness of people to use the system
  - Sensitivity: proportion of cases detected
  - Predictive value positive: proportion of reported cases that truly have the condition in question
  - Timeliness: speed with which events are identified and reported
- Implementation experience
  - Time to implement
  - Labor and technology costs
  - User satisfaction
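Sensitivity and predictive value positive both compare eCR reports against a reference set of cases. The sketch below assumes each case carries an identifier shared across both sources and that a hypothetical gold-standard set (for example, cases confirmed through chart review) is available; it illustrates the two ratios and is not the evaluation team's method:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionMetrics:
    sensitivity: float                # share of reference cases that eCR reported
    predictive_value_positive: float  # share of eCR reports that are confirmed cases


def detection_metrics(reported_ids: set[str], reference_case_ids: set[str]) -> DetectionMetrics:
    """Compare the cases reported through eCR with a reference set of cases.

    `reference_case_ids` is a hypothetical gold standard, for example cases
    confirmed through chart review at a site.
    """
    if not reported_ids or not reference_case_ids:
        raise ValueError("both sets must be non-empty")
    # Cases that were both reported through eCR and confirmed by the reference.
    true_positives = len(reported_ids & reference_case_ids)
    return DetectionMetrics(
        sensitivity=true_positives / len(reference_case_ids),
        predictive_value_positive=true_positives / len(reported_ids),
    )


# Made-up IDs: eCR reported c1-c4; the reference set holds c1-c3, c5, c6.
metrics = detection_metrics({"c1", "c2", "c3", "c4"}, {"c1", "c2", "c3", "c5", "c6"})
print(metrics)  # -> DetectionMetrics(sensitivity=0.6, predictive_value_positive=0.75)
```

Here eCR found 3 of 5 reference cases (sensitivity 0.6), and 3 of its 4 reports were confirmed (predictive value positive 0.75).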

Data sources and collection methods

The evaluation will use a mixed-methods design with data sources that include:

- Key informant interviews (KII)
- eCR validation and auditing
- Case reporting quality and performance assessment
- Documenting costs
- Identifying site characteristics

Comparators for the Digital Bridge eCR approach

- Requirement – “any new IT system must be at least as quick as the previously operational system”
- Comparators for timeliness should include:
  - Existing manual reporting methods
  - Electronic Laboratory Reporting (ELR)
  - Other eCR processes in place (where they exist)
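One way to check the "at least as quick" requirement is to compare eCR's median reporting lag with each comparator's. This sketch assumes lag is measured from a single event timestamp to receipt by public health and uses the median to limit the influence of outliers; the interval definition, the choice of statistic, and the example delays are all assumptions, not choices made in the plan:

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Iterable, Mapping, Tuple

# (event time, time the report was received by public health); which two
# timestamps define the interval is an assumption, not set by the plan.
Pair = Tuple[datetime, datetime]


def median_lag_hours(pairs: Iterable[Pair]) -> float:
    """Median delay, in hours, between event and receipt."""
    lags = [(received - event).total_seconds() / 3600 for event, received in pairs]
    if not lags:
        raise ValueError("no reports to measure")
    return median(lags)


def at_least_as_quick(ecr: Iterable[Pair], comparators: Mapping[str, Iterable[Pair]]) -> dict[str, bool]:
    """For each comparator, True if eCR's median lag is no longer than the comparator's."""
    ecr_lag = median_lag_hours(ecr)
    return {name: ecr_lag <= median_lag_hours(pairs) for name, pairs in comparators.items()}


start = datetime(2018, 3, 1, 9, 0)


def lags(*hours: float) -> list[Pair]:
    """Build made-up (event, received) pairs with the given delays in hours."""
    return [(start, start + timedelta(hours=h)) for h in hours]


result = at_least_as_quick(
    lags(2, 4, 6),                                         # eCR: median 4 h
    {"manual": lags(24, 48, 72), "ELR": lags(1, 2, 3)},    # medians 48 h and 2 h
)
print(result)  # -> {'manual': True, 'ELR': False}
```

With these invented delays, eCR would meet the requirement against manual reporting but not against ELR, which is the kind of per-comparator result the timeliness assessment would need to surface.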

Nuances of multisite evaluations

- Assumption: access to a skilled evaluator to coordinate activities across sites
- The evaluation must take existing programs, policies, and the broader landscape into account
- Strong stakeholder engagement is required for success
- Indicators must be developed that can be operationalized consistently, even if site implementation strategies vary
- Sites will implement eCR at varying speeds

Implementation stages for evaluation

- Start-up: preparation for electronically sharing production data. Includes development, testing, and onboarding activities.
- Production: go-live; production data sharing begins. May involve additional testing, validation, and adjustments to address unanticipated issues.
  - Manual case reporting continues in parallel.
- Maintenance: post-production; stable electronic data sharing continues, with minor adjustments as needed.
  - Routine manual case reporting for selected conditions is discontinued.
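Because sites will move through these stages at different speeds, it can help to track each site's stage explicitly. A minimal sketch, assuming the three stages are strictly sequential and that manual reporting continues unchanged during start-up (the stage definitions do not say so explicitly); `Stage`, `advance`, and `manual_reporting_continues` are hypothetical names:

```python
from enum import Enum


class Stage(Enum):
    START_UP = 1     # development, testing, and onboarding; no production data yet
    PRODUCTION = 2   # go-live; eCR runs in parallel with manual case reporting
    MAINTENANCE = 3  # stable sharing; routine manual reporting dropped for selected conditions


def advance(stage: Stage) -> Stage:
    """Move a site to the next stage; maintenance is treated as the last stage."""
    if stage is Stage.MAINTENANCE:
        return stage
    return Stage(stage.value + 1)


def manual_reporting_continues(stage: Stage, condition_selected: bool) -> bool:
    """Whether routine manual case reporting is still expected for a condition.

    Assumption: manual reporting is unchanged during start-up, and in
    maintenance it stops only for the conditions a site has selected.
    """
    if stage is Stage.MAINTENANCE:
        return not condition_selected
    return True


# Hypothetical sites moving at different speeds; each advances one stage.
sites = {"site_a": Stage.START_UP, "site_b": Stage.PRODUCTION}
sites = {name: advance(stage) for name, stage in sites.items()}
print(sites["site_b"], manual_reporting_continues(sites["site_b"], condition_selected=True))
```

Keeping the stage per site, rather than for the project as a whole, lets evaluation measures such as time to implement be attributed to the stage each site was in.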

Major pending decisions

- Evaluation budget [PMO]
- Identification of evaluation team [PMO]
- Need for IRB approval (for chart review, etc.) [PMO, evaluation committee, sites, evaluator(s)]
- Level of access to PHI or de-identified data by evaluator(s)
- Frequency of data submission to evaluator(s) [evaluation committee, sites, AIMS/RCKMS technical teams]
- Criteria for determining end of study period [PMO, evaluation committee]

Suggested next steps

- Governance body reviews and comments on the full evaluation plan between now and Thursday, 3/22 (three-week review opportunity)
- PMO/evaluation committee compile and review suggested changes, then distribute the revised plan by Thursday, 3/29
- Governance body votes to approve the revised evaluation plan at the next meeting on Thursday, 4/5

Questions and Discussion

REFERENCE SLIDES

Evaluation questions

1. How were the core components of eCR initiated and implemented in the participating sites?
2. What were the facilitating and inhibiting factors related to eCR initiation and implementation?
3. How were the inhibiting factors addressed?
4. To what extent were the sites able to successfully develop and implement the core components needed to fully apply the Digital Bridge eCR approach?
5. To what extent is eCR case finding complete, accurate, and timely?
6. To what extent is the information in the eICR complete and accurate?
7. What were the costs associated with the initiation and implementation of eCR in the sites?
8. To what extent did eCR improve (or hinder) surveillance functions in implementation sites?
9. What are the strengths and weaknesses of the Digital Bridge eCR approach(es) for digital information exchange and use?
10. To what extent does eCR add value to health care and public health practice in implementation sites?
