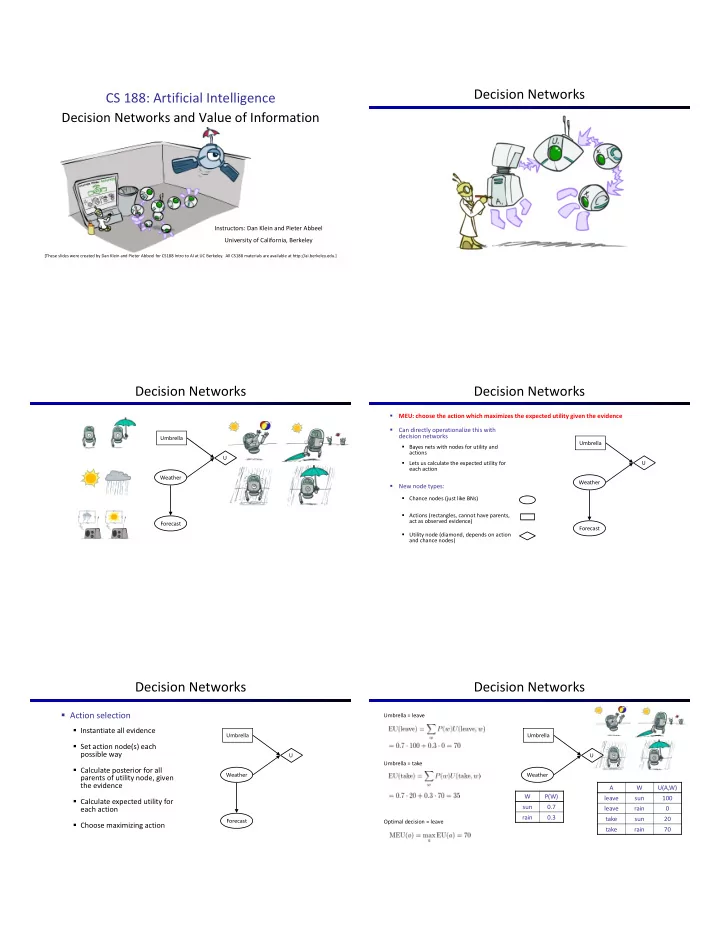

CS 188: Artificial Intelligence

Decision Networks and Value of Information

Instructors: Dan Klein and Pieter Abbeel, University of California, Berkeley

[These slides were created by Dan Klein and Pieter Abbeel for CS188 Intro to AI at UC Berkeley. All CS188 materials are available at http://ai.berkeley.edu.]

Decision Networks

- MEU: choose the action which maximizes the expected utility given the evidence
- Can directly operationalize this with decision networks
  - Bayes nets with nodes for utility and actions
  - Lets us calculate the expected utility for each action
- New node types:
  - Chance nodes (just like BNs)
  - Actions (rectangles, cannot have parents, act as observed evidence)
  - Utility node (diamond, depends on action and chance nodes)

[Figure: decision network with action node Umbrella, chance nodes Weather and Forecast, and utility node U]

Decision Networks

- Action selection
  - Instantiate all evidence
  - Set action node(s) each possible way
  - Calculate posterior for all parents of utility node, given the evidence
  - Calculate expected utility for each action
  - Choose maximizing action

Example with no evidence:

| A | W | U(A,W) |
|---|---|---|
| leave | sun | 100 |
| leave | rain | 0 |
| take | sun | 20 |
| take | rain | 70 |

| W | P(W) |
|---|---|
| sun | 0.7 |
| rain | 0.3 |

- Umbrella = leave: EU = 0.7 · 100 + 0.3 · 0 = 70
- Umbrella = take: EU = 0.7 · 20 + 0.3 · 70 = 35
- Optimal decision = leave
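The action-selection steps above can be sketched in a few lines of Python. This is a minimal illustration, not code from the course: the names `UTILITY`, `PRIOR_WEATHER`, `expected_utility`, and `best_action` are assumptions made for this example, and the numbers are the ones from the umbrella tables.

```python
# Minimal sketch of action selection in the umbrella decision network.
# All names here are illustrative assumptions, not part of the CS188 materials.

# Utility table U(A, W) from the slide.
UTILITY = {
    ("leave", "sun"): 100,
    ("leave", "rain"): 0,
    ("take", "sun"): 20,
    ("take", "rain"): 70,
}

# Distribution over the utility node's chance parent (no evidence: the prior).
PRIOR_WEATHER = {"sun": 0.7, "rain": 0.3}


def expected_utility(action, weather_dist, utility=UTILITY):
    """Sum of P(w) * U(action, w) over all weather outcomes."""
    return sum(p * utility[(action, w)] for w, p in weather_dist.items())


def best_action(weather_dist, actions=("leave", "take"), utility=UTILITY):
    """Set the action node each possible way and keep the maximizer."""
    eus = {a: expected_utility(a, weather_dist, utility) for a in actions}
    best = max(eus, key=eus.get)
    return best, eus


action, eus = best_action(PRIOR_WEATHER)
print(action, eus)
```

Passing a posterior such as P(W | F=bad) in place of `PRIOR_WEATHER` corresponds to the "instantiate all evidence" step: only the distribution over the utility node's parents changes, while the maximization stays the same.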

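Decisions as Outcome Trees

[Figure: outcome tree rooted at the evidence set {}; the action branches Umbrella = take and Umbrella = leave lead to chance nodes Weather | {}, with leaves U(t,s), U(t,r), U(l,s), U(l,r)]

- Almost exactly like expectimax / MDPs
- What's changed?

Example: Decision Networks

Evidence: Forecast = bad

| A | W | U(A,W) |
|---|---|---|
| leave | sun | 100 |
| leave | rain | 0 |
| take | sun | 20 |
| take | rain | 70 |

| W | P(W\|F=bad) |
|---|---|
| sun | 0.34 |
| rain | 0.66 |

- Umbrella = leave: EU = 0.34 · 100 + 0.66 · 0 = 34
- Umbrella = take: EU = 0.34 · 20 + 0.66 · 70 = 53
- Optimal decision = take

Decisions as Outcome Trees

[Figure: the same outcome tree rooted at the evidence set {b} (Forecast = bad); the chance nodes are now W | {b}, with leaves U(t,s), U(t,r), U(l,s), U(l,r)]

Ghostbusters Decision Network

Demo: Ghostbusters with probability

[Figure: decision network with action node Bust, utility node U, chance node Ghost Location, and sensor nodes Sensor (1,1), Sensor (1,2), Sensor (1,3), …, Sensor (1,n), Sensor (2,1), …, Sensor (m,1), …, Sensor (m,n)]

Video of Demo Ghostbusters with Probability

Value of Information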

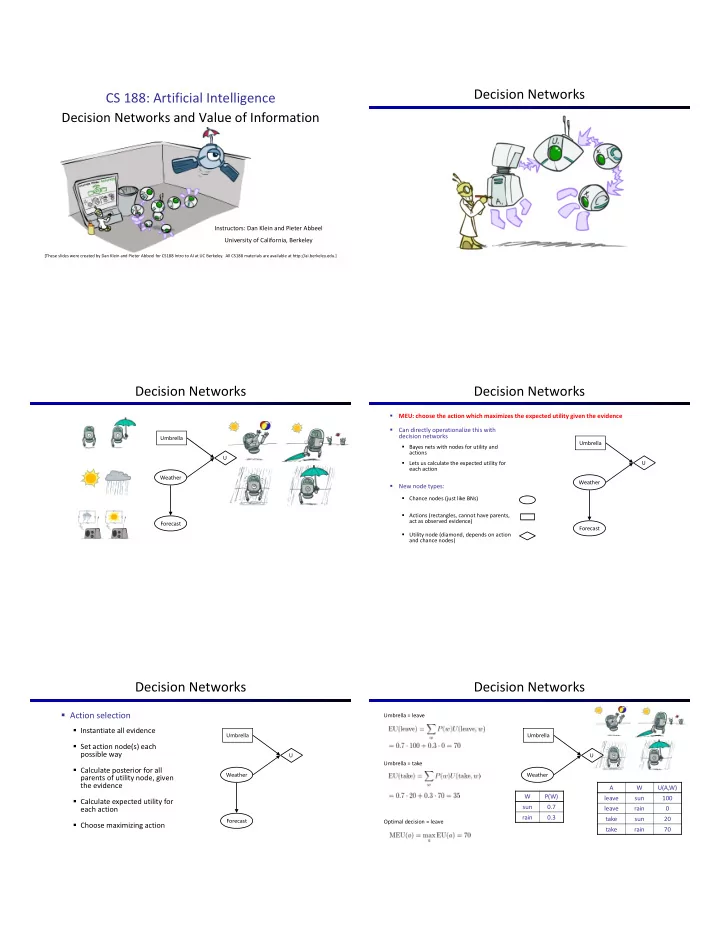

Value of Information

- Idea: compute value of acquiring evidence
  - Can be done directly from decision network
- Example: buying oil drilling rights
  - Two blocks A and B, exactly one has oil, worth k
  - You can drill in one location
  - Prior probabilities 0.5 each, and mutually exclusive
  - Drilling in either A or B has EU = k/2, MEU = k/2
- Question: what's the value of information of O?
  - Value of knowing which of A or B has oil
  - Value is expected gain in MEU from new info
  - Survey may say "oil in a" or "oil in b", prob 0.5 each
  - If we know OilLoc, MEU is k (either way)
  - Gain in MEU from knowing OilLoc?
  - VPI(OilLoc) = k/2
  - Fair price of information: k/2

[Figure: decision network with action node DrillLoc, chance node OilLoc, and utility node U]

| D | O | U |
|---|---|---|
| a | a | k |
| a | b | 0 |
| b | a | 0 |
| b | b | k |

| O | P |
|---|---|
| a | 1/2 |
| b | 1/2 |

VPI Example: Weather

[Figure: decision network with action node Umbrella, chance nodes Weather and Forecast, and utility node U]

| A | W | U |
|---|---|---|
| leave | sun | 100 |
| leave | rain | 0 |
| take | sun | 20 |
| take | rain | 70 |

- MEU with no evidence: $\text{MEU}(\varnothing) = \max_a \sum_w P(w)\, U(a, w)$
- MEU if forecast is bad: $\text{MEU}(F=\text{bad}) = \max_a \sum_w P(w \mid F=\text{bad})\, U(a, w)$
- MEU if forecast is good: $\text{MEU}(F=\text{good}) = \max_a \sum_w P(w \mid F=\text{good})\, U(a, w)$
- Forecast distribution:

| F | P(F) |
|---|---|
| good | 0.59 |
| bad | 0.41 |

Value of Information

- Assume we have evidence E=e. Value if we act now:

$$\text{MEU}(e) = \max_a \sum_s P(s \mid e)\, U(s, a)$$

- Assume we see that E' = e'. Value if we act then:

$$\text{MEU}(e, e') = \max_a \sum_s P(s \mid e, e')\, U(s, a)$$

- BUT E' is a random variable whose value is unknown, so we don't know what e' will be
- Expected value if E' is revealed and then we act:

$$\text{MEU}(e, E') = \sum_{e'} P(e' \mid e)\, \text{MEU}(e, e')$$

- Value of information: how much MEU goes up by revealing E' first then acting, over acting now:

$$\text{VPI}(E' \mid e) = \text{MEU}(e, E') - \text{MEU}(e)$$

[Figure: three outcome trees. Acting now: {+e} → a → P(s | +e) → U. Acting after seeing +e': {+e, +e'} → a → P(s | +e, +e') → U. Revealing E' first: {+e} branches with P(+e' | +e) and P(-e' | +e) to {+e, +e'} and {+e, -e'}, each followed by an action a]

VPI Properties

- Nonnegative
- Nonadditive (think of observing E_j twice)
- Order-independent

Quick VPI Questions

- The soup of the day is either clam chowder or split pea, but you wouldn't order either one. What's the value of knowing which it is?
- There are two kinds of plastic forks at a picnic. One kind is slightly sturdier. What's the value of knowing which?
- You're playing the lottery. The prize will be $0 or $100. You can play any number between 1 and 100 (chance of winning is 1%). What is the value of knowing the winning number?

Value of Imperfect Information?

- No such thing (as we formulate it)
- Information corresponds to the observation of a node in the decision network
- If data is "noisy", that just means we don't observe the original variable, but another variable which is a noisy version of the original one
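The VPI definition and the two worked examples above (oil drilling and the weather forecast) can be checked with a short sketch. The function names `meu` and `vpi` and the choice k = 1 are assumptions made for illustration. The slides as transcribed here do not list P(W | F=good), so the sketch derives it from P(W), P(F), and P(W | F=bad) via the law of total probability, on the assumption that those tables are mutually consistent.

```python
# Minimal VPI sketch; all names are illustrative assumptions.

def meu(state_dist, utility, actions):
    """Maximum expected utility: max over actions of sum_s P(s) * U(a, s)."""
    return max(
        sum(p * utility[(a, s)] for s, p in state_dist.items())
        for a in actions
    )


def vpi(prior_dist, evidence_dist, posterior_given, utility, actions):
    """Expected MEU after observing the evidence variable, minus MEU now."""
    expected_meu = sum(
        p_e * meu(posterior_given[e], utility, actions)
        for e, p_e in evidence_dist.items()
    )
    return expected_meu - meu(prior_dist, utility, actions)


# --- Oil example (k = 1.0 is a hypothetical value for k) ---
k = 1.0
oil_utility = {("a", "a"): k, ("a", "b"): 0.0, ("b", "a"): 0.0, ("b", "b"): k}
oil_prior = {"a": 0.5, "b": 0.5}
# Observing OilLoc itself makes the posterior certain.
oil_posteriors = {"a": {"a": 1.0, "b": 0.0}, "b": {"a": 0.0, "b": 1.0}}
vpi_oil = vpi(oil_prior, oil_prior, oil_posteriors, oil_utility, ("a", "b"))

# --- Weather example ---
weather_utility = {
    ("leave", "sun"): 100, ("leave", "rain"): 0,
    ("take", "sun"): 20, ("take", "rain"): 70,
}
p_weather = {"sun": 0.7, "rain": 0.3}
p_forecast = {"good": 0.59, "bad": 0.41}
p_weather_given_bad = {"sun": 0.34, "rain": 0.66}
# Assumption: derive P(W | good) from P(w) = sum_f P(f) * P(w | f).
p_weather_given_good = {
    w: (p_weather[w] - p_forecast["bad"] * p_weather_given_bad[w])
    / p_forecast["good"]
    for w in p_weather
}
weather_posteriors = {"good": p_weather_given_good, "bad": p_weather_given_bad}
vpi_forecast = vpi(
    p_weather, p_forecast, weather_posteriors, weather_utility, ("leave", "take")
)

print(vpi_oil, round(vpi_forecast, 2))
```

Under these assumptions the oil example gives k/2, matching the slide, and the forecast is worth a little under 8 utility units: the agent would pay up to that amount to see the forecast before choosing whether to take the umbrella.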

VPI Question

- VPI(OilLoc) ?
- VPI(ScoutingReport) ?
- VPI(Scout) ?
- VPI(Scout | ScoutingReport) ?
- Generally: if Parents(U) ⫫ Z | CurrentEvidence, then VPI(Z | CurrentEvidence) = 0

[Figure: decision network with nodes DrillLoc, U, Scout, OilLoc, and Scouting Report]

POMDPs

- MDPs have:
  - States S
  - Actions A
  - Transition function P(s'|s,a) (or T(s,a,s'))
  - Rewards R(s,a,s')
- POMDPs add:
  - Observations O
  - Observation function P(o|s) (or O(s,o))
- POMDPs are MDPs over belief states b (distributions over S)
- We'll be able to say more in a few lectures

[Figure: MDP search tree s → a → (s, a) → s' next to the POMDP belief tree b → a → (b, a) → o → b']

Demo: Ghostbusters with VPI

Example: Ghostbusters

- In (static) Ghostbusters:
  - Belief state determined by evidence to date {e}
  - Tree really over evidence sets
  - Probabilistic reasoning needed to predict new evidence given past evidence
- Solving POMDPs
  - One way: use truncated expectimax to compute approximate value of actions
  - What if you only considered busting or one sense followed by a bust?
  - You get a VPI-based agent!

[Figure: tree over evidence sets. From {e}, action a_bust leads to U(a_bust, {e}); action a_sense leads to ({e}, a_sense), then observation e', then evidence set {e, e'}, then a_bust with U(a_bust, {e, e'})]

Video of Demo Ghostbusters with VPI

More Generally*

- General solutions map belief functions to actions
  - Can divide regions of belief space (set of belief functions) into policy regions (gets complex quickly)
  - Can build approximate policies using discretization methods
  - Can factor belief functions in various ways
- Overall, POMDPs are very (actually PSPACE-) hard
- Most real problems are POMDPs, and we can rarely solve them in their full generality
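To make "POMDPs are MDPs over belief states" more concrete, here is a minimal sketch of a single belief update using the transition function P(s'|s,a) and the observation function P(o|s) listed above. The update rule b'(s') ∝ P(o|s') Σ_s P(s'|s,a) b(s) is the standard Bayes filter step. The function name `belief_update`, the two-cell world, and the sensor probabilities are hypothetical and not taken from the Ghostbusters demo.

```python
# Minimal belief-update sketch for a discrete POMDP.
# The tiny two-cell model below is hypothetical, for illustration only.

def belief_update(belief, action, observation, transition, observation_model):
    """Return b'(s') proportional to P(o | s') * sum_s P(s' | s, a) * b(s)."""
    # Prediction step: push the belief through the transition function.
    predicted = {}
    for s, b in belief.items():
        for s_next, p in transition[(s, action)].items():
            predicted[s_next] = predicted.get(s_next, 0.0) + b * p

    # Correction step: weight each state by the observation likelihood.
    unnormalized = {
        s_next: observation_model[s_next][observation] * p
        for s_next, p in predicted.items()
    }
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s_next: v / total for s_next, v in unnormalized.items()}


# Hypothetical static world: the ghost never moves, so "sense" is an identity
# transition and only the evidence changes the belief.
transition = {
    ("left", "sense"): {"left": 1.0},
    ("right", "sense"): {"right": 1.0},
}
# Hypothetical sensor model P(o | s).
observation_model = {
    "left": {"hot": 0.8, "cold": 0.2},
    "right": {"hot": 0.3, "cold": 0.7},
}

prior = {"left": 0.5, "right": 0.5}
posterior = belief_update(prior, "sense", "hot", transition, observation_model)
print(posterior)
```

In the static case the belief depends only on the evidence collected so far, which is why the slide describes the search tree as being over evidence sets.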

Next Time: Dynamic Models
