CSE 401: Introduction to Compiler Construction, Course Outline

CSE 401: Introduction to Compiler Construction Course Outline Goals: Compiler front-ends: lexical analysis (scanning): characters tokens learn principles & practice of language implementation syntactic analysis (parsing):

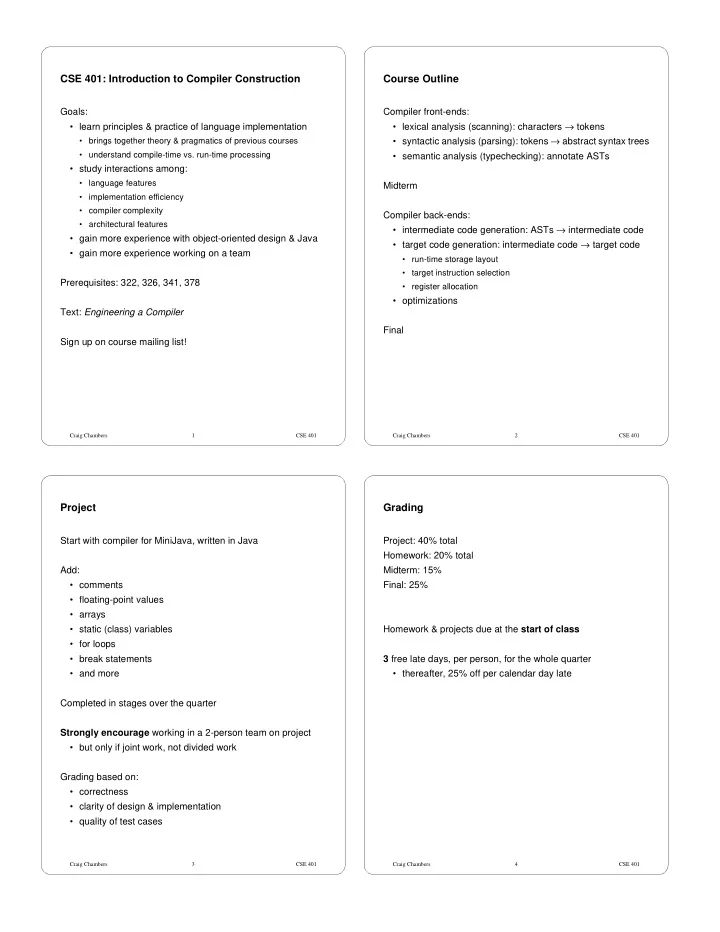

CSE 401: Introduction to Compiler Construction

Goals:
• learn principles & practice of language implementation
• brings together theory & pragmatics of previous courses
• understand compile-time vs. run-time processing
• study interactions among:
  • language features
  • implementation efficiency
  • compiler complexity
  • architectural features
• gain more experience with object-oriented design & Java
• gain more experience working on a team

Prerequisites: 322, 326, 341, 378

Text: Engineering a Compiler

Sign up on course mailing list!

Craig Chambers, CSE 401

Course Outline

Compiler front-ends:
• lexical analysis (scanning): characters → tokens
• syntactic analysis (parsing): tokens → abstract syntax trees
• semantic analysis (typechecking): annotate ASTs

Midterm

Compiler back-ends:
• intermediate code generation: ASTs → intermediate code
• target code generation: intermediate code → target code
  • run-time storage layout
  • target instruction selection
  • register allocation
  • optimizations

Final

Project

Start with compiler for MiniJava, written in Java
Add:
• comments
• floating-point values
• arrays
• static (class) variables
• for loops
• break statements
• and more
Completed in stages over the quarter
Strongly encourage working in a 2-person team on project
• but only if joint work, not divided work
Grading based on:
• correctness
• clarity of design & implementation
• quality of test cases

Grading

Project: 40% total
Homework: 20% total
Midterm: 15%
Final: 25%
Homework & projects due at the start of class
3 free late days, per person, for the whole quarter
• thereafter, 25% off per calendar day late

An example compilation

Sample (extended) MiniJava program: Factorial.java

    // Computes 10! and prints it out
    class Factorial {
      public static void main(String[] a) {
        System.out.println(
          new Fac().ComputeFac(10));
      }
    }
    class Fac {
      // the recursive helper function
      public int ComputeFac(int num) {
        int numAux = 0;
        if (num < 1)
          numAux = 1;
        else
          numAux = num * this.ComputeFac(num-1);
        return numAux;
      }
    }

First step: lexical analysis

“Scanning”, “tokenizing”
Read in characters, clump into tokens
• strip out whitespace & comments in the process

Specifying tokens: regular expressions

Example:
    Ident    ::= Letter AlphaNum*
    Integer  ::= Digit+
    AlphaNum ::= Letter | Digit
    Letter   ::= 'a' | ... | 'z' | 'A' | ... | 'Z'
    Digit    ::= '0' | ... | '9'

Second step: syntactic analysis

“Parsing”
Read in tokens, turn into a tree based on syntactic structure
• report any errors in syntax
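The token definitions in the "Specifying tokens" slide translate almost directly into a hand-written scanner loop: each regular expression becomes a small loop that keeps consuming characters while they still fit the pattern. The sketch below is a minimal illustration, not the course's MiniJava scanner; the names MiniScanner, Kind, and Token are hypothetical, it recognizes only Ident and Integer plus single-character punctuation, and it strips whitespace and // comments as the lexical-analysis slide describes. It uses Java records, so it assumes Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical token kinds for this sketch only.
enum Kind { IDENT, INTEGER, PUNCT }

record Token(Kind kind, String lexeme) {
    @Override public String toString() { return kind + "(" + lexeme + ")"; }
}

class MiniScanner {
    // Scans the whole input into tokens, discarding whitespace and // comments.
    static List<Token> scan(String src) {
        List<Token> tokens = new ArrayList<>();
        int i = 0;
        while (i < src.length()) {
            char c = src.charAt(i);
            if (Character.isWhitespace(c)) {
                i++;                                            // whitespace: skip
            } else if (c == '/' && i + 1 < src.length() && src.charAt(i + 1) == '/') {
                while (i < src.length() && src.charAt(i) != '\n') i++;   // comment: skip to end of line
            } else if (isLetter(c)) {                           // Ident ::= Letter AlphaNum*
                int start = i;
                while (i < src.length() && (isLetter(src.charAt(i)) || isDigit(src.charAt(i)))) i++;
                tokens.add(new Token(Kind.IDENT, src.substring(start, i)));
            } else if (isDigit(c)) {                            // Integer ::= Digit+
                int start = i;
                while (i < src.length() && isDigit(src.charAt(i))) i++;
                tokens.add(new Token(Kind.INTEGER, src.substring(start, i)));
            } else {                                            // anything else: one-char punctuation
                tokens.add(new Token(Kind.PUNCT, String.valueOf(c)));
                i++;
            }
        }
        return tokens;
    }

    // Letter ::= 'a' | ... | 'z' | 'A' | ... | 'Z'
    static boolean isLetter(char c) { return (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z'); }

    // Digit ::= '0' | ... | '9'
    static boolean isDigit(char c) { return c >= '0' && c <= '9'; }

    public static void main(String[] args) {
        // prints [IDENT(numAux), PUNCT(=), IDENT(num), PUNCT(*), INTEGER(10), PUNCT(;)]
        System.out.println(scan("numAux = num * 10; // done"));
    }
}
```

A production scanner would also need keywords, multi-character operators such as && and <=, and /* */ comments; the loop structure stays the same.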

Specifying syntax: context-free grammars

EBNF is a popular notation for CFGs
Example:
    Stmt ::= if ( Expr ) Stmt [ else Stmt ]
           | while ( Expr ) Stmt
           | ID = Expr ;
           | ...
    Expr ::= Expr + Expr | Expr < Expr | ...
           | ! Expr
           | Expr . ID ( [ Expr { , Expr } ] )
           | ID
           | Integer | ...
           | ( Expr )
           | ...
EBNF specifies concrete syntax of language
Parser usually constructs tree representing abstract syntax of language

Third step: semantic analysis

“Name resolution and typechecking”
Given AST:
• figure out what declaration each name refers to
• perform typechecking and other static consistency checks
Key data structure: symbol table
• maps names to info about name derived from declaration
• tree of symbol tables corresponding to nesting of scopes
Semantic analysis steps:
1. Process each scope, top down
2. Process declarations in each scope into symbol table for scope
3. Process body of each scope in context of symbol table

Fourth step: intermediate code generation

Given annotated AST & symbol tables, translate into lower-level intermediate code
Intermediate code is a separate language
• Source-language independent
• Target-machine independent
Intermediate code is simple and regular
⇒ good representation for doing optimizations
Might be a reasonable target language itself, e.g. Java bytecode

Example

    int Fac.ComputeFac(*? this, int num) {
      int T1, numAux, T8, T3, T7, T2, T6, T0;
      numAux := 0;
      T0 := 1;
      T1 := num < T0;
      ifnonzero T1 goto L0;
      T2 := 1;
      T3 := num - T2;
      T6 := Fac.ComputeFac(this, T3);
      T7 := num * T6;
      numAux := T7;
      goto L2;
      label L0;
      T8 := 1;
      numAux := T8;
      label L2;
      return numAux;
    }
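The Expr rules in the EBNF example are ambiguous and left-recursive (Expr ::= Expr + Expr), so a recursive-descent parser cannot follow them as written. A common fix is to layer the grammar by precedence, one rule per level, and turn each rule into one method. The sketch below assumes Java's usual precedence (! binds tightest, then +, then <), covers only that subset of Expr, and represents the AST as a prefix-form string to stay short; ExprParser and the layered rule names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Recursive-descent parser for a hypothetical, unambiguous rewrite of part of the Expr grammar:
//   Cmp     ::= Sum [ < Sum ]
//   Sum     ::= Unary { + Unary }
//   Unary   ::= ! Unary | Primary
//   Primary ::= ID | Integer | ( Cmp )
class ExprParser {
    private static final Pattern TOKEN =
        Pattern.compile("\\s*([A-Za-z][A-Za-z0-9]*|[0-9]+|[<+!()])");
    private final List<String> toks = new ArrayList<>();
    private int pos = 0;

    // Tokenizes eagerly; skips leading whitespace before each token.
    ExprParser(String src) {
        Matcher m = TOKEN.matcher(src);
        int at = 0;
        while (!src.substring(at).isBlank()) {
            m.region(at, src.length());
            if (!m.lookingAt()) throw new IllegalArgumentException("unexpected character at " + at);
            toks.add(m.group(1));
            at = m.end();
        }
    }

    private String peek() { return pos < toks.size() ? toks.get(pos) : ""; }

    private void expect(String t) {
        if (!peek().equals(t)) throw new IllegalArgumentException("expected " + t + " but saw '" + peek() + "'");
        pos++;
    }

    // Entry point: parse one whole expression and reject leftover tokens.
    String parse() {
        String e = cmp();
        if (pos != toks.size()) throw new IllegalArgumentException("unexpected '" + peek() + "'");
        return e;
    }

    private String cmp() {
        String left = sum();
        if (peek().equals("<")) { pos++; left = "(< " + left + " " + sum() + ")"; }
        return left;
    }

    private String sum() {
        String left = unary();
        while (peek().equals("+")) {           // loop instead of left recursion; builds left-associative trees
            pos++;
            left = "(+ " + left + " " + unary() + ")";
        }
        return left;
    }

    private String unary() {
        if (peek().equals("!")) { pos++; return "(! " + unary() + ")"; }
        return primary();
    }

    private String primary() {
        String t = peek();
        if (t.equals("(")) { pos++; String e = cmp(); expect(")"); return e; }
        if (t.isEmpty() || "<+!)".contains(t)) throw new IllegalArgumentException("unexpected '" + t + "'");
        pos++;
        return t;                              // ID or Integer leaf
    }

    public static void main(String[] args) {
        System.out.println(new ExprParser("a + 1 + b < 10").parse());  // prints (< (+ (+ a 1) b) 10)
    }
}
```

Replacing left recursion with a while loop is what keeps the parser from recursing forever while still grouping a + 1 + b as (a + 1) + b.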
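The "tree of symbol tables corresponding to nesting of scopes" can be sketched as one map per scope plus a pointer to the enclosing scope; declaring a name writes into the current scope, and resolving a name walks outward until it is found. This is a simplified illustration: the Scope class is hypothetical, and the "info derived from declaration" is reduced to a type string, where a real compiler would store a richer record (kind of entity, type, storage location).

```java
import java.util.HashMap;
import java.util.Map;

// One node in the tree of symbol tables; each nested scope points to its enclosing scope.
class Scope {
    private final Scope parent;                                  // null for the outermost scope
    private final Map<String, String> table = new HashMap<>();  // name -> declared type (simplified info)

    Scope(Scope parent) { this.parent = parent; }

    // Step 2: enter a declaration into this scope's table; duplicates in one scope are errors.
    void declare(String name, String type) {
        if (table.containsKey(name)) {
            throw new IllegalStateException("duplicate declaration of " + name);
        }
        table.put(name, type);
    }

    // Step 3: resolve a use of a name, searching this scope first, then enclosing scopes.
    String lookup(String name) {
        for (Scope s = this; s != null; s = s.parent) {
            String type = s.table.get(name);
            if (type != null) return type;
        }
        return null;  // undeclared; a real checker would report an error here
    }

    public static void main(String[] args) {
        Scope classFac = new Scope(null);          // members of class Fac
        classFac.declare("ComputeFac", "int(int)");
        Scope method = new Scope(classFac);        // parameters and locals of ComputeFac
        method.declare("num", "int");
        method.declare("numAux", "int");
        System.out.println(method.lookup("numAux"));      // int
        System.out.println(method.lookup("ComputeFac"));  // int(int), found in the enclosing scope
        System.out.println(method.lookup("x"));           // null
    }
}
```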
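The Example slide's pattern of fresh temporaries (T2 := 1; T3 := num - T2; ...) comes from translating each expression node into one instruction whose result lands in a new temp. A minimal sketch, assuming a hypothetical three-node expression AST (Num, Var, Bin) and an IrGen class that emits the same textual style as the slide; it is not the course's actual generator, and it handles only expressions, not statements or control flow such as ifnonzero and labels.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical expression AST for this sketch.
interface Expr {}
record Num(int value) implements Expr {}
record Var(String name) implements Expr {}
record Bin(String op, Expr left, Expr right) implements Expr {}

class IrGen {
    private final List<String> code = new ArrayList<>();
    private int nextTemp = 0;

    private String newTemp() { return "T" + nextTemp++; }

    // Emits instructions computing e; returns the name (variable or temp) holding its value.
    String gen(Expr e) {
        if (e instanceof Var v) return v.name();      // variables are already named locations
        if (e instanceof Num n) {                     // constants are loaded into a fresh temp
            String t = newTemp();
            code.add(t + " := " + n.value() + ";");
            return t;
        }
        Bin b = (Bin) e;
        String l = gen(b.left());                     // operands first, left to right
        String r = gen(b.right());
        String t = newTemp();
        code.add(t + " := " + l + " " + b.op() + " " + r + ";");
        return t;
    }

    List<String> code() { return code; }

    public static void main(String[] args) {
        IrGen g = new IrGen();
        // num - 1, the argument of the recursive call ComputeFac(num-1)
        String result = g.gen(new Bin("-", new Var("num"), new Num(1)));
        g.code().forEach(System.out::println);        // T0 := 1;  then  T1 := num - T0;
        System.out.println("result in " + result);    // result in T1
    }
}
```

Because every intermediate value gets its own temp, the output is simple and regular, which is what later optimization and register allocation passes rely on.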

Fifth step: target (machine) code generation

Translate intermediate code into target code
Need to do:
• instruction selection: choose target instructions for (subsequences of) intermediate code instructions
• register allocation: allocate intermediate code variables to machine registers, spilling excess to stack
• compute layout of each procedure’s stack frame & other run-time data structures
• emit target code

Summary of compiler phases

Analysis of input program (front-end):
    character stream
      → Lexical Analysis → token stream
      → Syntactic Analysis → abstract syntax tree
      → Semantic Analysis → annotated AST
      → Intermediate Code Generation → intermediate form
Synthesis of output program (back-end):
    intermediate form
      → Optimization → intermediate form
      → Code Generation → target language
Ideal: many front-ends, many back-ends sharing one intermediate language

Other language processing tools

Compilers translate the input language into a different, usually lower-level, target language
Interpreters directly execute the input language
• same front-end structure as a compiler
• then evaluate the annotated AST, or translate to intermediate code and evaluate that
Software engineering tools can resemble compilers
• same front-end structure as a compiler
• then:
  • pretty-print/reformat/colorize
  • analyze to compute relationships like declarations/uses, calls/callees, etc.
  • analyze to find potential bugs
  • aid in refactoring/restructuring/evolving programs

Engineering issues

Compilers are hard to design so that they are
• fast
• highly optimizing
• extensible & evolvable
• correct
Some parts of compilers can be automatically generated from specifications, e.g., scanners, parsers, & target code generators
• generated parts are fast & correct
• specifications are easily evolvable
(Some of my current research is on generating fast, correct optimizations from specifications.)
Need good management of software complexity
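The interpreter bullet under "Other language processing tools" (same front end, then evaluate the AST) can be illustrated with a tiny tree-walking evaluator that runs the ComputeFac logic directly instead of translating it. The sketch assumes a hypothetical AST with literals, variables, +, -, *, <, a conditional, and one-argument calls; like the intermediate code's ifnonzero, it treats < as producing 1 or 0. It skips typechecking entirely, so an undeclared variable only fails at run time (a NullPointerException when unboxing).

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical AST for a tiny expression language (not MiniJava's real AST classes).
interface Expr {}
record Num(int value) implements Expr {}
record Var(String name) implements Expr {}
record Bin(String op, Expr left, Expr right) implements Expr {}
record If(Expr cond, Expr then, Expr otherwise) implements Expr {}
record Call(String fn, Expr arg) implements Expr {}
record Fn(String param, Expr body) {}

class Interp {
    private final Map<String, Fn> functions;

    Interp(Map<String, Fn> functions) { this.functions = functions; }

    // Evaluates e directly; env maps variable names to their current values.
    int eval(Expr e, Map<String, Integer> env) {
        if (e instanceof Num n) return n.value();
        if (e instanceof Var v) return env.get(v.name());
        if (e instanceof If i) {
            // Like "ifnonzero" in the intermediate code: any nonzero condition counts as true.
            return eval(i.cond(), env) != 0 ? eval(i.then(), env) : eval(i.otherwise(), env);
        }
        if (e instanceof Call c) {
            Fn f = functions.get(c.fn());
            Map<String, Integer> callEnv = new HashMap<>();   // fresh environment per call
            callEnv.put(f.param(), eval(c.arg(), env));
            return eval(f.body(), callEnv);
        }
        Bin b = (Bin) e;
        int l = eval(b.left(), env), r = eval(b.right(), env);
        return switch (b.op()) {
            case "+" -> l + r;
            case "-" -> l - r;
            case "*" -> l * r;
            case "<" -> l < r ? 1 : 0;                       // comparison yields 1 or 0
            default -> throw new IllegalArgumentException("unknown operator " + b.op());
        };
    }

    public static void main(String[] args) {
        // ComputeFac(num) = if (num < 1) 1 else num * ComputeFac(num - 1)
        Expr body = new If(new Bin("<", new Var("num"), new Num(1)),
                           new Num(1),
                           new Bin("*", new Var("num"),
                                   new Call("ComputeFac", new Bin("-", new Var("num"), new Num(1)))));
        Interp interp = new Interp(Map.of("ComputeFac", new Fn("num", body)));
        System.out.println(interp.eval(new Call("ComputeFac", new Num(10)), new HashMap<>()));  // 3628800
    }
}
```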

Lexical Analysis / Scanning

Purpose: turn character stream (input program) into token stream
• parser turns token stream into syntax tree
Token: group of characters forming basic, atomic chunk of syntax; a “word”
Whitespace: characters between tokens that are ignored

Why separate lexical from syntactic analysis?

Separation of concerns / good design
• scanner:
  • handle grouping chars into tokens
  • ignore whitespace
  • handle I/O, machine dependencies
• parser:
  • handle grouping tokens into syntax trees
Restricted nature of scanning allows faster implementation
• scanning is time-consuming in many compilers

Complications

Most languages today are “free-form”
• layout doesn’t matter
• whitespace separates tokens
Alternatives:
• Fortran: line-oriented, whitespace doesn’t separate
      do 10 i = 1,100
        .. a loop ..
   10 continue
  • since blanks are ignored, do 10 i = 1.100 (period instead of comma) is instead an assignment of 1.1 to a variable named do10i
• Haskell: can use indentation & layout to imply grouping
Most languages separate scanning and parsing
Alternative: C/C++/Java: type vs. identifier
• parser wants scanner to distinguish names that are types from names that are variables
• but scanner doesn’t know how things are declared; that’s done during semantic analysis a.k.a. typechecking!

Lexemes, tokens, and patterns

Lexeme: group of characters that form a token
Token: class of lexemes that match a pattern
• token may have attributes, if more than one lexeme in token
Pattern: typically defined using a regular expression
• REs are simplest language class that’s powerful enough
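In terms of lexemes, tokens, and patterns, a scanner can keep one regular-expression pattern per token class, try them all at the current position, and take the longest match (the common "maximal munch" rule, with earlier rules winning ties so that the keyword if beats Ident, while ifx is still an Ident). The sketch uses java.util.regex (Matcher.region and lookingAt); the RegexScanner class, token names, and patterns are assumptions for illustration. Only token classes with more than one possible lexeme carry the lexeme as an attribute.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RegexScanner {
    // Token class -> pattern. Order only breaks ties between equally long matches,
    // so keywords come before IDENT. (Hypothetical token set for this sketch.)
    static final Map<String, Pattern> RULES = new LinkedHashMap<>();
    static {
        RULES.put("WS", Pattern.compile("\\s+|//[^\\n]*"));   // whitespace and comments: discarded
        RULES.put("IF", Pattern.compile("if"));
        RULES.put("WHILE", Pattern.compile("while"));
        RULES.put("IDENT", Pattern.compile("[A-Za-z][A-Za-z0-9]*"));
        RULES.put("INTEGER", Pattern.compile("[0-9]+"));
        RULES.put("PUNCT", Pattern.compile("[(){};=<+*!.-]"));
    }

    // Returns "KIND" for one-lexeme tokens and "KIND(lexeme)" when the lexeme is an attribute.
    static List<String> scan(String src) {
        List<String> out = new ArrayList<>();
        int pos = 0;
        while (pos < src.length()) {
            String bestKind = null;
            int bestEnd = pos;
            for (Map.Entry<String, Pattern> rule : RULES.entrySet()) {
                Matcher m = rule.getValue().matcher(src).region(pos, src.length());
                if (m.lookingAt() && m.end() > bestEnd) {   // longest match wins; ties keep the earlier rule
                    bestKind = rule.getKey();
                    bestEnd = m.end();
                }
            }
            if (bestKind == null) throw new IllegalArgumentException("bad character at " + pos);
            String lexeme = src.substring(pos, bestEnd);
            switch (bestKind) {
                case "WS" -> { }                                            // drop
                case "IDENT", "INTEGER", "PUNCT" -> out.add(bestKind + "(" + lexeme + ")");
                default -> out.add(bestKind);                               // keyword: lexeme is implied
            }
            pos = bestEnd;
        }
        return out;
    }

    public static void main(String[] args) {
        // prints [IF, PUNCT((), IDENT(ifx), PUNCT(<), INTEGER(10), PUNCT()), IDENT(x), PUNCT(=), INTEGER(1), PUNCT(;)]
        System.out.println(scan("if (ifx < 10) x = 1; // note"));
    }
}
```

Trying every pattern at every position is simple but slow; scanner generators compile all the patterns into a single automaton instead, which is one reason the slides note that scanning's restricted nature allows a faster implementation.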
