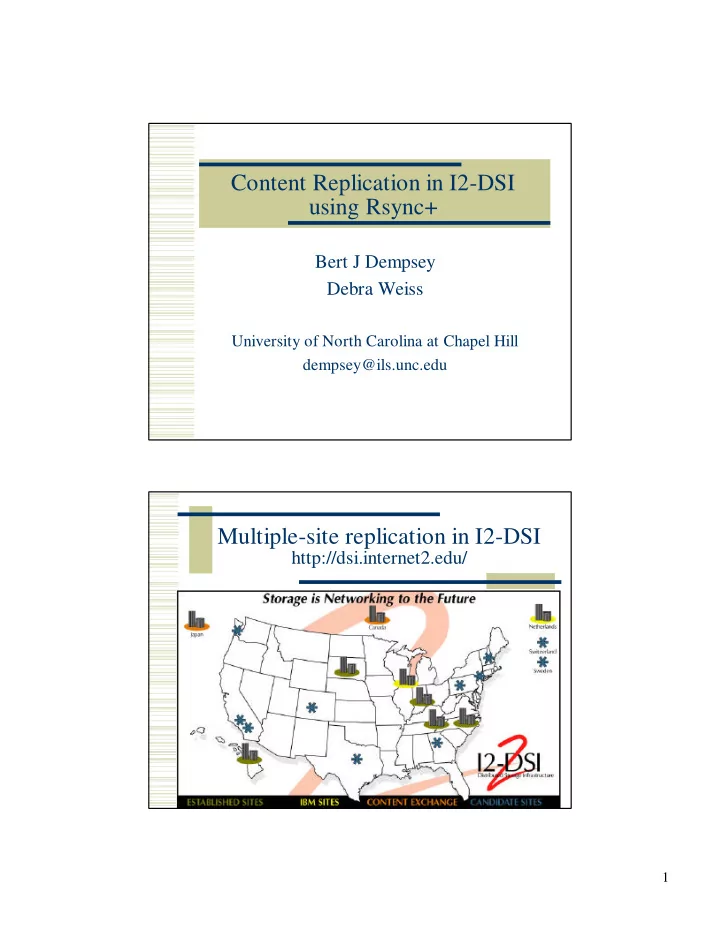

Content Replication in I2-DSI using Rsync+

Bert J Dempsey, Debra Weiss

University of North Carolina at Chapel Hill

dempsey@ils.unc.edu

Multiple-site replication in I2-DSI: http://dsi.internet2.edu/

Replicating Channels

- Content import from the channel provider to the master I2-DSI node
- Content replication from the master node to all replication sites that carry the channel

[Diagram: channel provider, master node, replication sites S1, S2, S3, and their clients.]

Rsync+ for Content Replication

- At M (master node), master-side: rsync+ -F srcLatest/ src/ produces the updates
- Updates travel from M to the replication sites via (m)ftp
- At each of S1, S2, S3, slave-side: rsync+ -f src/ applies the updates
- Rsync is a popular filesystem synchronization tool
- Rsync+ is our modified rsync, which enables local capture of update information for store-and-forward communication

[Diagram: channel provider, M, and S1, S2, S3 with their clients.]
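The master-side and slave-side steps above can be illustrated with a minimal Python sketch of the store-and-forward idea. This is not the rsync+ implementation: the function names (`make_updates`, `apply_updates`), the JSON updates file, and the whole-file transfer are assumptions made for illustration, whereas rsync itself transfers deltas.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def snapshot(root: Path) -> dict:
    """Map each file path (relative to root) to its content digest."""
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def make_updates(src_latest: Path, src: Path, updates_file: Path) -> None:
    """Master side (hypothetical): record what must change in src to match src_latest."""
    new, old = snapshot(src_latest), snapshot(src)
    updates = {
        # Whole files are stored as hex for simplicity; this is an assumption,
        # not how rsync encodes changes.
        "write": {
            rel: (src_latest / rel).read_bytes().hex()
            for rel, digest in new.items()
            if old.get(rel) != digest
        },
        "delete": sorted(set(old) - set(new)),
    }
    updates_file.write_text(json.dumps(updates))


def apply_updates(updates_file: Path, src: Path) -> None:
    """Slave side (hypothetical): replay a captured updates file on the local copy."""
    updates = json.loads(updates_file.read_text())
    for rel, hex_data in updates["write"].items():
        target = src / rel
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(bytes.fromhex(hex_data))
    for rel in updates["delete"]:
        (src / rel).unlink(missing_ok=True)


def demo() -> bool:
    """Build an old and a new tree, forward the updates file, and compare the results."""
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        latest = base / "srcLatest"
        master_src = base / "master_src"
        slave_src = base / "slave_src"
        for d in (latest, master_src, slave_src):
            d.mkdir()
        # Old state, identical on master and slave.
        for root in (master_src, slave_src):
            (root / "a.txt").write_text("old")
            (root / "gone.txt").write_text("obsolete")
        # New state imported from the channel provider.
        (latest / "a.txt").write_text("new")
        (latest / "sub").mkdir()
        (latest / "sub" / "b.txt").write_text("added")
        updates = base / "updates.json"
        make_updates(latest, master_src, updates)
        apply_updates(updates, slave_src)
        return snapshot(slave_src) == snapshot(latest)
```

In this sketch the updates file is the only thing that has to cross the network, so any transport (the slides mention (m)ftp) can carry it, and each slave can apply it without a live connection to the master.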

Server Experiment

- Instrumented mirror
  - Active Linux repository (8 GB, 25,000 files)
  - Twice-daily synchronization
- On dsi.ncni.net:
  - rsync+ -F: perform master-side rsync+ processing between two local directories to create the updates file
  - rsync+ -f: use the updates file to perform slave-side rsync+ processing

Content Change Patterns

- Data here are from a 1-month Linux mirror
- One update per 12-hour period
- No files changed in 13 of 60 periods (21%)
- Average size of updated data (all periods)
  - 0.144% of aggregate archive
  - 0.104% under rsync+
- Maximum size of updated data
  - 2.42% of mirror

Rsync+ processing cost

[Figure: run time as % of rsync for rsync+ -F and rsync+ -f, per mirror update (one per 12 hours).]

Rsync+ Local Throughput

[Figure: runtime (sec), unnormalized throughput, and normalized throughput (Mbits/sec), per mirror update (12 hours/update).]

Network Throughput: ttcp experiment parameters

| Parameter | Values |
| --- | --- |
| File size | 5.45 MB |
| Network path | dsi.ncni.net to ils.unc.edu (100 Mbit/s min) |
| Concurrent ttcp connections | 1, 2, 4, 8, 16, 24, 32 |
| Receiver socket buffer size (KB) | 240 KB |
| Buffer policy | 240 KB / 240 KB shared |

Network Throughput: concurrent ttcp transmits

[Figure: throughput (Mbits/s) versus number of concurrent ttcp transmits (1, 2, 4, 8, 16, 24, 32). Data labels on the plot: 27.03944, 9.62592, 8.23088, 6.29856, 4.50688, 2.8464, 2.24.]

Network experiments: setting socket buffer sizes

[Figure: "dsi2ils sept14 (1 ttcp, avg. over 6 runs)". Throughput (Kbytes/s) versus buffer size (bytes), for buffer sizes from 0 to 350,000 bytes.]

Network Throughput: concurrent ttcp tputs

[Figure: per-connection and aggregate throughput (Mbits/s, log scale) under Buffer Policy 1 and Buffer Policy 2, versus concurrent ttcp transmits (1, 2, 4, 8, 16, 24, 32; averaged over runs).]
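As a rough illustration of the knob these experiments vary, the sketch below requests a receive buffer on a TCP socket in Python and reads back what the operating system granted. The helper names are hypothetical, and treating 240 KB as 240 × 1024 bytes is an assumption. The granted size is OS-dependent (Linux, for example, reports a doubled value and caps requests at a system-wide limit), so the code only reports it.

```python
import socket

# Assumption: "240 KB" from the experiment parameters is read as 240 * 1024 bytes.
REQUESTED_RCVBUF = 240 * 1024


def open_receiver_socket(rcvbuf_bytes: int) -> socket.socket:
    """Create a TCP socket and request a receive buffer of the given size."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Request the buffer before connect()/listen() so the TCP window
    # negotiated at connection setup can reflect it.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, rcvbuf_bytes)
    return sock


def effective_rcvbuf(sock: socket.socket) -> int:
    """Return the receive buffer size the OS actually reports for this socket."""
    return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


if __name__ == "__main__":
    s = open_receiver_socket(REQUESTED_RCVBUF)
    print("requested:", REQUESTED_RCVBUF, "granted:", effective_rcvbuf(s))
    s.close()
```

Comparing the requested and granted values is a quick way to check whether a system limit is silently capping the buffer before running throughput measurements.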

Baseline Scalability Analysis using empirical inputs

- Update of content
  - 0.1% avg, 2.4% maximum
- Network tput
  - 8 servers, thus 6.2 Mbits/sec to each
- Server tput (local rsync actions)
  - Master: 11.4 Mbits/sec
  - Slave: 8.18 Mbits/sec

Baseline Scalability Analysis: end-to-end update latency

| Channel size | Content updates | Master processing latency | Network latency | Slave processing latency | End-to-end update latency |
| --- | --- | --- | --- | --- | --- |
| 10 GB | Avg: 10 MB | 7 s | 12.9 s | 9.7 s | 29.6 s |
| 10 GB | Max: 240 MB | 168 s | 309 s | 233 s | 710 s |
| 100 GB | Avg: 100 MB | 70 s | 129 s | 97 s | 296 s |
| 100 GB | Max: 2.4 GB | 28 min | 51.5 min | 38.8 min | 118.3 min |
| 1 TB | Avg: 1 GB | 11.7 min | 21.5 min | 16.1 min | 49.3 min |
| 1 TB | Max: 24 GB | 280.8 min | 516 min | 386.4 min | 19.72 h |
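The latency figures above appear to follow a simple serial model: the update size in megabits divided by each stage's throughput, summed over the master, network, and slave stages. The sketch below reproduces that arithmetic under two assumptions not stated on the slides: decimal units (1 MB = 8 Mbits, 1 GB = 1000 MB) and no overlap between stages. The function and constant names are illustrative.

```python
# Empirical inputs taken from the slides (Mbits/sec).
MASTER_MBPS = 11.4   # master-side local rsync+ throughput
NETWORK_MBPS = 6.2   # per-server network throughput with 8 servers
SLAVE_MBPS = 8.18    # slave-side local rsync+ throughput


def update_size_mb(channel_mb: float, changed_fraction: float) -> float:
    """Size of one update in MB, e.g. 0.1% (avg) or 2.4% (max) of the channel."""
    return channel_mb * changed_fraction


def stage_seconds(update_mb: float, mbps: float) -> float:
    """Time for one stage to process update_mb megabytes at mbps megabits/sec."""
    return update_mb * 8 / mbps


def update_latency(update_mb: float) -> dict:
    """Per-stage and total latency in seconds, assuming the stages run serially."""
    stages = {
        "master": stage_seconds(update_mb, MASTER_MBPS),
        "network": stage_seconds(update_mb, NETWORK_MBPS),
        "slave": stage_seconds(update_mb, SLAVE_MBPS),
    }
    stages["total"] = sum(stages.values())
    return stages


if __name__ == "__main__":
    for channel_mb in (10_000, 100_000, 1_000_000):  # 10 GB, 100 GB, 1 TB
        for label, fraction in (("avg", 0.001), ("max", 0.024)):
            size = update_size_mb(channel_mb, fraction)
            total = update_latency(size)["total"]
            print(f"{channel_mb} MB channel, {label} update {size:.0f} MB: {total:.1f} s")
```

The computed totals differ slightly from the table (about 29.7 s against 29.6 s for the 10 MB update), which is consistent with the slides summing rounded per-stage values.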

Conclusions

- Our work creates a scalable design for a filesystem-level data synchronization tool
- Current systems, without tuning, suggest that O(100 GB) of content can be handled for the initial server set
- For TB-scale content, system advances will need to provide speed-ups
  - Tuning
  - Hardware
  - Distributed processing
