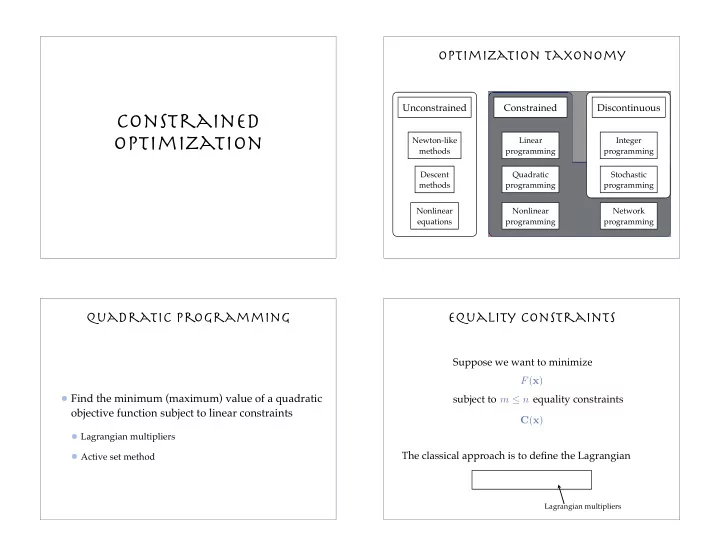

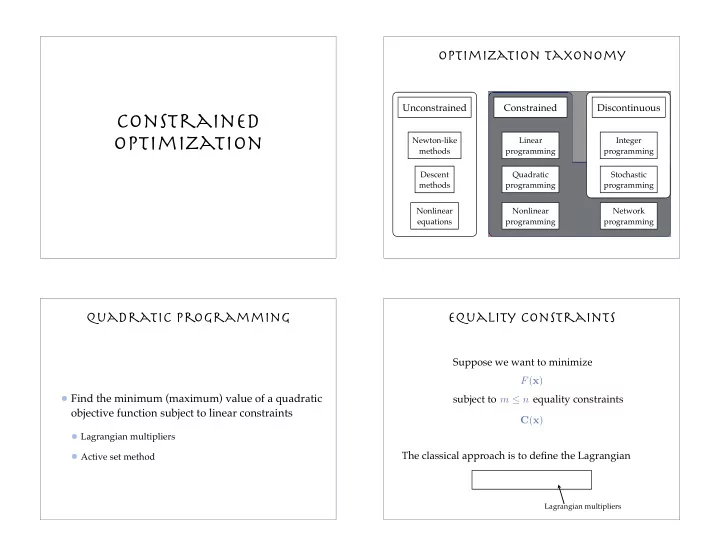

Optimization taxonomy

| Unconstrained | Constrained | Discontinuous |
|---|---|---|
| Newton-like methods | Linear programming | Integer programming |
| Descent methods | Quadratic programming | Stochastic programming |
| Nonlinear equations | Nonlinear programming | Network programming |

Constrained optimization

Quadratic programming

Find the minimum (maximum) value of a quadratic objective function subject to linear constraints.

- Lagrangian multipliers
- Active set method

Equality constraints

Suppose we want to minimize $F(x)$ subject to $m \le n$ equality constraints $C(x) = 0$. The classical approach is to define the Lagrangian

$$L(x, \lambda) = F(x) - \lambda^T C(x)$$

where $\lambda$ is the vector of Lagrangian multipliers.

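Equality constraints

Minimizing the Lagrangian is equivalent to solving

$$\nabla_x L(x, \lambda) = 0, \qquad \nabla_\lambda L(x, \lambda) = 0$$

where

$$\nabla_x L = g - J^T \lambda = \nabla F - \sum_{i=1}^{m} \lambda_i \nabla C_i, \qquad \nabla_\lambda L = -C(x)$$

Here $g = \nabla F$ is the gradient of the objective and $J$ is the Jacobian of the constraints, whose rows are the constraint gradients $\nabla C_i^T$.

At the optimal solution $x^*$, the gradient of the objective function $\nabla F(x^*)$ is a linear combination of the constraint gradients $\nabla C_i$. Equivalently, the projection of the gradient of the objective function onto the constraint surface is zero at the optimal solution.

Lagrangian-Newton

Apply the Newton method to find the $(x, \lambda)$ that minimizes the Lagrangian. Expanding to second order about $(x_0, \lambda_0)$, with $p$ the step in $(x, \lambda)$:

$$L(x^*, \lambda^*) = L(x_0, \lambda_0) + p^T \begin{bmatrix} \nabla_x L \\ \nabla_\lambda L \end{bmatrix} + \frac{1}{2} p^T \begin{bmatrix} \nabla_{xx} L & \nabla_{x\lambda} L \\ \nabla_{\lambda x} L & \nabla_{\lambda\lambda} L \end{bmatrix} p$$

with

$$\nabla_{xx} L = H, \qquad \nabla_{x\lambda} L = -J^T, \qquad \nabla_{\lambda x} L = -J, \qquad \nabla_{\lambda\lambda} L = 0$$

Setting the gradient of this expansion with respect to $p$ to zero gives the Karush-Kuhn-Tucker (KKT) system

$$\begin{bmatrix} H & J^T \\ J & 0 \end{bmatrix} \begin{bmatrix} x_0 - x^* \\ \lambda^* \end{bmatrix} = \begin{bmatrix} g \\ C \end{bmatrix}$$

Inequality constraints

Suppose we want to minimize $F(x)$ subject to inequality constraints $C(x) \ge 0$.

- The collection of all points that satisfy the constraints is called the feasible region.
- Constraints can be partitioned into two sets: the active set $\mathcal{A}$ and the inactive set $\mathcal{A}'$.
- Identify the active constraints at each iteration and solve for the Lagrangian built from them, $L(x, \lambda) = F(x) - \lambda^T \tilde{C}(x)$, where $\tilde{C}$ collects the active constraints.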
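To make the Lagrangian-Newton step concrete, here is a minimal NumPy sketch that assembles and solves the KKT system $[H, J^T; J, 0]\,[x_0 - x^*; \lambda^*] = [g; C]$ once. The function name `lagrange_newton_step` and the test problem (minimize $x_1^2 + x_2^2$ with $C(x) = 2 - x_1 - x_2$ treated as an equality) are assumptions chosen for illustration, not part of the slides. Because the objective is quadratic and the constraint is linear, a single step lands on the solution.

```python
import numpy as np

def lagrange_newton_step(x0, g, H, J, C):
    """One Newton step on the Lagrangian L = F - lam^T C (illustrative helper).

    Solves [[H, J^T], [J, 0]] [x0 - x*; lam*] = [g; C].
    """
    n, m = H.shape[0], J.shape[0]
    K = np.zeros((n + m, n + m))
    K[:n, :n] = H
    K[:n, n:] = J.T
    K[n:, :n] = J
    sol = np.linalg.solve(K, np.concatenate([g, C]))
    x_star = x0 - sol[:n]   # first block of the unknown is x0 - x*
    lam_star = sol[n:]      # second block is the multiplier estimate
    return x_star, lam_star

# Assumed example: F(x) = x1^2 + x2^2, C(x) = 2 - x1 - x2 = 0.
x0 = np.array([0.0, 0.0])
g = 2.0 * x0                          # gradient of F at x0
H = 2.0 * np.eye(2)                   # Hessian of the Lagrangian (C is linear)
J = np.array([[-1.0, -1.0]])          # Jacobian of C
C = np.array([2.0 - x0[0] - x0[1]])   # constraint value at x0

x_star, lam_star = lagrange_newton_step(x0, g, H, J, C)
print(x_star, lam_star)
```

In this example the multiplier comes out negative, which fits the active set discussion: if the same constraint were imposed as $C(x) \ge 0$, a negative multiplier would signal that it should not be kept in the active set.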

Active set strategy

How do we identify an active set? At the optimal solution the active constraints hold with equality, and their multipliers satisfy $\lambda_i^* \ge 0$ for $i \in \mathcal{A}$.

Example: minimize $F(x) = x_1^2 + x_2^2$ subject to $C(x) = 2 - x_1 - x_2 \ge 0$. The unconstrained minimum $x = (0, 0)$ lies inside the feasible region, so the constraint is inactive at the solution.

(Figure: feasible region.)

Quadratic programming

A quadratic objective:

$$F(x) = g^T x + \frac{1}{2} x^T H x$$

Linear constraints:

$$Ax = a, \qquad Bx \ge b$$

Assume that an estimate of the active set $\mathcal{A}_0$ is given in addition to a feasible point $x_0$.

1. Solve the KKT system with the equality constraints and the inequality constraints in the active set.
2. Take the largest possible step size $\alpha \le 1$ in the direction $p$ that does not violate any inactive inequalities.
3. If $\alpha < 1$, add the limiting inequality to the active set $\mathcal{A}$ and return to step 1. Otherwise, take a full step and check $\lambda$:
   - if all $\lambda$ are positive, terminate the program;
   - otherwise, delete the inequality with the most negative $\lambda$ from $\mathcal{A}$ and return to step 1.
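The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a production solver: the function name `active_set_qp` is hypothetical, only inequality constraints $Bx \ge b$ are handled (equalities $Ax = a$ would simply stay in the working set permanently), the active constraint rows are assumed linearly independent, and $H$ is assumed positive definite. The multipliers use the sign convention $L = F - \lambda^T (Bx - b)$.

```python
import numpy as np

def active_set_qp(H, g, B, b, x0, active0=(), max_iter=50, tol=1e-10):
    """Minimize g^T x + 0.5 x^T H x subject to B x >= b (illustrative sketch)."""
    x = np.asarray(x0, dtype=float).copy()
    n = x.size
    active = list(active0)
    for _ in range(max_iter):
        # Step 1: solve the KKT system with active inequalities held as equalities.
        m = len(active)
        Bw = B[np.array(active, dtype=int)]
        K = np.zeros((n + m, n + m))
        K[:n, :n] = H
        K[:n, n:] = -Bw.T
        K[n:, :n] = Bw
        rhs = np.concatenate([-(H @ x + g), np.zeros(m)])
        sol = np.linalg.solve(K, rhs)
        p, lam = sol[:n], sol[n:]

        # Step 2: largest alpha <= 1 that keeps every inactive inequality satisfied.
        alpha, limiting = 1.0, None
        for i in range(B.shape[0]):
            slope = B[i] @ p
            if i not in active and slope < -tol:
                ratio = max(0.0, (b[i] - B[i] @ x) / slope)
                if ratio < alpha:
                    alpha, limiting = ratio, i
        x = x + alpha * p

        # Step 3: grow the active set, terminate, or drop the most negative multiplier.
        if limiting is not None:
            active.append(limiting)
        elif m == 0 or lam.min() >= -tol:
            return x, sorted(active)
        else:
            del active[int(np.argmin(lam))]
    raise RuntimeError("active-set iteration did not converge")

# The example from the slides: F = x1^2 + x2^2, C(x) = 2 - x1 - x2 >= 0,
# written as B x >= b. The start point and initial active set are assumptions.
H = 2.0 * np.eye(2)
g = np.zeros(2)
B = np.array([[-1.0, -1.0]])
b = np.array([-2.0])
x_opt, active = active_set_qp(H, g, B, b, x0=[2.0, 0.0], active0=[0])
print(x_opt, active)
```

Starting on the constraint boundary with the constraint assumed active, the first pass produces a negative multiplier, the constraint is deleted, and the second pass takes a full step to the unconstrained minimum.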

An SQP algorithm

Sequential quadratic programming (SQP) solves a nonlinear program (NLP) by formulating a sequence of QP subproblems.

- The solution $p^{(k)}$ to each QP determines the search direction.
- Each QP is formulated by:
  - a quadratic approximation to the objective function;
  - a linear approximation to the constraints.

Globalization strategies

- Merit functions: $F(x) + \frac{\sigma}{2} C^T(x) C(x)$
- Line search methods
- Trust region methods: $\frac{1}{2} p^T p \le \delta^2$

Summary

- What is the physical meaning of Lagrangian multipliers?
- How does the Lagrangian enforce "hard" constraints?
- How is a nonlinear problem solved using quadratic programming?
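As a rough illustration of how a nonlinear problem reduces to a sequence of QP subproblems, the sketch below runs an SQP iteration for equality constraints only. Each pass builds the QP from the Hessian of the Lagrangian and the constraint Jacobian, then solves it through the same KKT system used in the Lagrangian-Newton step. Everything named here is an assumption for illustration: the function `sqp_equality`, the test problem (minimize $x_1 + x_2$ on the circle $2 - x_1^2 - x_2^2 = 0$), and the starting point. No merit function, line search, or trust region is included, so this version is only expected to converge from a start close to the solution.

```python
import numpy as np

def sqp_equality(grad_F, hess_L, C, jac_C, x0, lam0, max_iter=20, tol=1e-10):
    """Local SQP for min F(x) s.t. C(x) = 0, full steps only (illustrative sketch)."""
    x = np.asarray(x0, dtype=float).copy()
    lam = np.asarray(lam0, dtype=float).copy()
    n, m = x.size, lam.size
    for _ in range(max_iter):
        g, J, c = grad_F(x), jac_C(x), C(x)
        # Stop when the Lagrangian gradient and the constraints both vanish.
        if np.linalg.norm(g - J.T @ lam) < tol and np.linalg.norm(c) < tol:
            break
        # QP subproblem: quadratic model of the objective, linearized constraints.
        K = np.zeros((n + m, n + m))
        K[:n, :n] = hess_L(x, lam)
        K[:n, n:] = J.T
        K[n:, :n] = J
        sol = np.linalg.solve(K, np.concatenate([g, c]))
        x = x - sol[:n]   # unknown is (x_k - x_{k+1}), as in the KKT system
        lam = sol[n:]
    return x, lam

# Assumed test problem: F(x) = x1 + x2, C(x) = 2 - x1^2 - x2^2 = 0.
grad_F = lambda x: np.ones(2)
C = lambda x: np.array([2.0 - x @ x])
jac_C = lambda x: (-2.0 * x).reshape(1, 2)
hess_L = lambda x, lam: 2.0 * lam[0] * np.eye(2)  # Hessian of F - lam^T C

x_sol, lam_sol = sqp_equality(grad_F, hess_L, C, jac_C, x0=[-1.5, -0.5], lam0=[1.0])
print(x_sol, lam_sol)
```

A globalization strategy from the list above would replace the full step `x = x - sol[:n]` with a step length or trust-region radius chosen to make progress on a merit function.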
