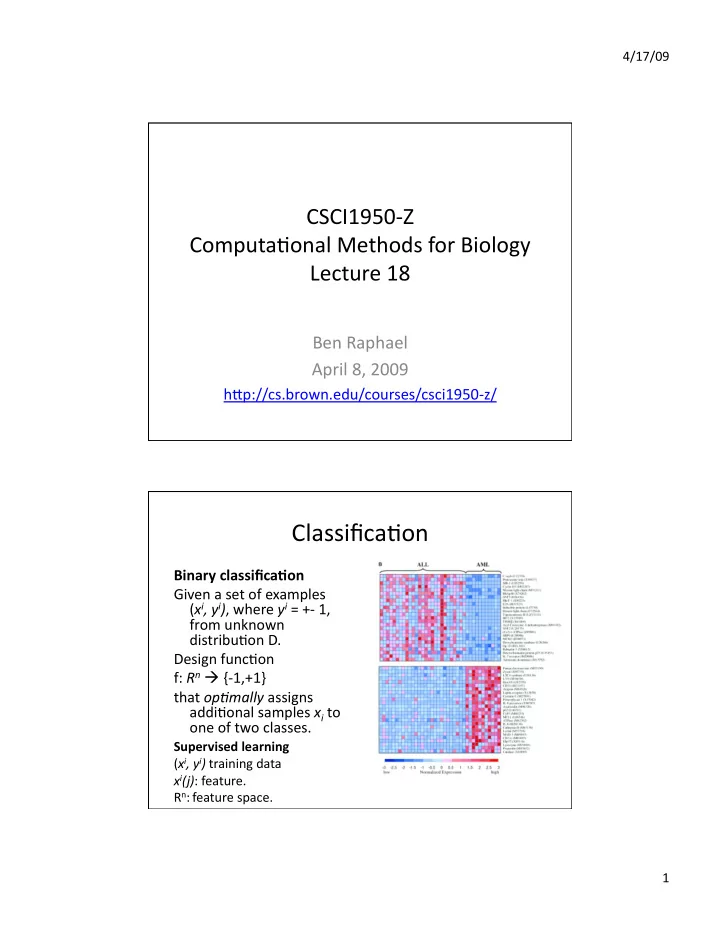

4/17/09 CSCI1950‐Z Computa4onal Methods for Biology Lecture 18 Ben Raphael April 8, 2009 hIp://cs.brown.edu/courses/csci1950‐z/ Classifica4on Binary classifica,on Given a set of examples ( x i , y i ) , where y i = +‐ 1, from unknown distribu4on D. Design func4on f: R n {‐1,+1} that op+mally assigns addi4onal samples x i to one of two classes. Supervised learning ( x i , y i ) training data x i (j) : feature. R n : feature space. 1

4/17/09 Dimensionality Reduc4on Genomic data (e.g. gene expression) o[en high‐ dimensional ( n > 5000), but rela4vely few samples available. Reduce dimensionality of data (lower dimensional subspace) to improve performance of the classifier by: • Removing features that do not contribute to the classifica4on and may introduce noise. • Reducing opportuni4es for overfi]ng. • Improving 4me/memory efficiency in algorithms for learning and classifica4on. Feature Construc4on n features l features Linear/nonlinear transforma4on Common method: Principal components analysis. [Whiteboard] 2

4/17/09 PCA and Clustering Yeast gene expression data (477 genes) clustered into 7 clusters. First two principal components contain ≈89% of varia4on in data. Yeung and Ruzzo (Bioinforma4cs 2001) PCA and Clustering Exon and junc4on microarrays detect widespread mouse strain‐ and sex‐bias expression differences. (Su et al. BMC Genomics 2008) 3

4/17/09 Feature Selec4on • Selec4ng l << n features that are informa(ve for classifica4on. • Gene expression: subset of genes. Feature Selec4on Informa4ve features: Use a measure of associa4on between x i and y i . � m k =1 ( x i k − x i )( y i k − y i ) r x i y i = • Correla4on: ( m − 1) s x i s y i • Chi‐square (con4ngency table) F ( x i ) = | ( x i ) + − ( x i ) − | 2 s 2 ( x i ) + + s 2 • Fischer criterion: ( x i ) − (x i ) + are elements in + class. • t ‐test sta4s4c • Mutual informa4on • TNoM score (previous lecture) [Whiteboard] 4

4/17/09 Feature Selec4on Results Colon Leukemia Feature Selec4on Results Top scoring genes (TNoM < 14) in colon dataset. 5

4/17/09 Assessing Performance Feature Selec4on Build Test (e.g. TNoM) Classifier WRONG Cross‐valida4on Assessing Performance Feature Selec4on Build Test (e.g. TNoM) Classifier Cross‐valida4on Must assess performance of both steps together! 6

4/17/09 Gene Selec4on Results Predictors of Breast Cancer Prognosis • 70 gene signature to predict breast cancer pa4ents with metastasis within 5 years (van de Vijver et al. NEJM 2002, van’t Veer et al. Nature 2002) • Now an FDA approved test: Mammaprint 7

4/17/09 Predictors of Breast Cancer Prognosis Step 1: Clustering n = 25000 genes 98 tumors. >2 fold change (and p<0.01) in >4 tumors n = 5000 differen+ally expressed genes Hierarchical clustering: genes and samples Predictors of Breast Cancer Prognosis Step 2: Classifica4on n = 5000 genes differen4ally expressed genes in 78 (sporadic lymph‐node nega4ve) tumors. Compute correla4on coefficient ρ( x i , y i ) Between each gene and prognosis. Choose 231 genes with |ρ( x i , y i )| > 0.3. Rank genes by ρ( x i , y i ). 8

4/17/09 Predictors of Breast Cancer Prognosis Step 2: Classifica4on n = 5000 genes differen4ally expressed genes in 78 (sporadic lymph‐node nega4ve) tumors. Compute correla4on coefficient ρ( x i , y i ) Between each gene and prognosis. Choose 231 genes with |ρ( x i , y i )| > 0.3. Rank genes by ρ( x i , y i ). Predictors of Breast Cancer Prognosis Step 3: Build a classifier Leave‐out one sample x . Let R = top 5 genes in list of 231. Compute correla4on coefficients ρ(μ( x R+ ) , x R ) and ρ(μ( x R‐ ) , x R ), where μ( x R+ ) is mean vector of genes in + class in R . Assign to best class. Add 5 genes to R un4l performance does not improve. 9

4/17/09 Predictors of Breast Cancer Prognosis • 70 gene classifier • 65/78 (83%) of pa4ents predicted correctly. – 5 poor and 8 good incorrectly assigned. • Changing threshold gave 3 poor and 12 good incorrectly assigned. Discussion • Cross‐valida4on done a[er feature selec4on! – Also fixed this problem. • Resul4ng 70 gene signature is not unique (Ein‐Dor et. al 2005: see notes) • Drawing biological conclusions from the output of a “black box” predic4on algorithm is not wise. – Correla4on vs. causality. 10

4/17/09 Results: Class Discovery with TNoM (ben‐Dor, Friedman, Yakhini, 2001) • Find op4mal labeling L. – Solu4on: use heuris4c search • Find mul4ple (subop4mal) labelings – Solu4on: Peeling: remove previously used genes from set. Results: Class Discovery with TNoM (ben‐Dor, Friedman, Yakhini, 2001) Leukemia (Golub et al. 1999): 72 expression profiles. 25 AML, 47 ALL. 7129 genes Lymphoma (Alizadeh et al.): 96 expression profiles, 46 Diffuse large B‐cell lymphoma (DLBCL) 50 from 8 different 4ssues. Lymphoma‐DLBCL: subset of 46 of above. 11

4/17/09 TNoM Results (ben‐Dor, Friedman, Yakhini, 2001) % survival years 40 pa4ents 24 pa4ents with low clinical risk. 12

Recommend

More recommend