Chapter 5: Clustering. Jilles Vreeken, IRDM 15/16, 10 Nov

Chapter 5: Clustering. Jilles Vreeken, IRDM ‘15/16, 10 Nov 2015

Question of the week

How can we discover groups of objects that are highly similar to each other?

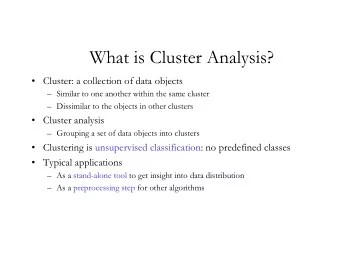

Clustering, where?

- Biology
  - creation of phylogenies (relations between organisms)
  - inferring population structures from clusterings of DNA data
  - analysis of genes and cellular processes (co-clustering)
- Business
  - grouping of consumers into market segments
- Computer science
  - pre-processing to reduce computation (representative-based methods)
  - automatic discovery of similar items

Motivational example (Wessmann, ‘Mixture Model Clustering in the analysis of complex diseases’, 2012)

Even more motivation (Heikinheimo et al., ‘Clustering of European Mammals’, 2007)

IRDM Chapter 5, overview

1. Basic idea
2. Representative-based clustering
3. Probabilistic clustering
4. Hierarchical clustering
5. Density-based clustering
6. Clustering high-dimensional data
7. Validation

You’ll find this covered in Aggarwal, Ch. 6 and 7, and in Zaki & Meira, Ch. 13-15.

IRDM Chapter 5, today

1. Basic idea
2. Representative-based clustering
3. Probabilistic clustering
4. Hierarchical clustering
5. Density-based clustering
6. Clustering high-dimensional data
7. Validation

You’ll find this covered in Aggarwal, Ch. 6 and 7, and in Zaki & Meira, Ch. 13-15.

Chapter 5.1: Basics

Example (figure): groups of points with high intra-cluster similarity and low inter-cluster similarity; one isolated point is labelled "an outlier?"

The clustering problem

Given a set $U$ of objects and a distance $d : U^2 \to \mathbb{R}_+$ between objects, group the objects of $U$ into clusters such that the distance between points in the same cluster is low and the distance between points in different clusters is large.

- "small" and "large" are not well defined
- a clustering of $U$ can be
  - exclusive (each point belongs to exactly one cluster)
  - probabilistic (each point has a probability of belonging to a cluster)
  - fuzzy (each point can belong to multiple clusters)
- the number of clusters can be pre-defined, or not

On distances

A function $d : U^2 \to \mathbb{R}_+$ is a metric if it satisfies:

- self-similarity: $d(u, v) = 0$ if and only if $u = v$
- symmetry: $d(u, v) = d(v, u)$ for all $u, v \in U$
- triangle inequality: $d(u, v) \le d(u, w) + d(w, v)$ for all $u, v, w \in U$

A metric is a distance; if $d : U^2 \to [0, \alpha]$ for some positive $\alpha$, then $\alpha - d(u, v)$ is a similarity score.

Common metrics include

- $L_p$ for $d$-dimensional space: $\left( \sum_{i=1}^{d} |u_i - v_i|^p \right)^{1/p}$
  - $L_1$ = city-block (equal to the Hamming distance on binary data); $L_2$ = Euclidean distance
- Correlation distance: $1 - \rho$
- Jaccard distance: $1 - |A \cap B| / |A \cup B|$
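
As a rough illustration of the metrics listed above, the following Python sketch computes an L_p distance and a Jaccard distance. The function names `minkowski` and `jaccard_distance` are chosen here for illustration and do not come from the slides; treating two empty sets as having distance 0 is an assumption.

```python
from typing import Sequence, Set


def minkowski(u: Sequence[float], v: Sequence[float], p: float = 2.0) -> float:
    """L_p distance: (sum_i |u_i - v_i|^p)^(1/p), intended for p >= 1."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimensionality")
    return sum(abs(a - b) ** p for a, b in zip(u, v)) ** (1.0 / p)


def jaccard_distance(a: Set, b: Set) -> float:
    """Jaccard distance: 1 - |A ∩ B| / |A ∪ B|."""
    union = a | b
    if not union:
        # Assumption: two empty sets are treated as identical.
        return 0.0
    return 1.0 - len(a & b) / len(union)


if __name__ == "__main__":
    u, v = (0.0, 0.0), (3.0, 4.0)
    print(minkowski(u, v, p=1))                    # city-block distance
    print(minkowski(u, v, p=2))                    # Euclidean distance
    print(jaccard_distance({1, 2, 3}, {2, 3, 4}))  # two shared items out of four
```

Setting `p=1` gives the city-block distance and `p=2` the Euclidean distance, which matches the two special cases named on the slide.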

More distantly

- For all-numerical data, the sum of squared errors (SSE) is the most common distance measure: $\sum_{i=1}^{d} (u_i - v_i)^2$
- For all-binary data, either Hamming or Jaccard is typically used
- For categorical data, we either
  - first convert the data to binary by adding one binary variable per category label and then use Hamming distance; or
  - count the agreements and disagreements of category labels with Jaccard
- For mixed data, some combination must be used
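
The first option for categorical data (one binary variable per category label, then Hamming distance) can be sketched as follows. This is only a minimal illustration: `one_hot` and `hamming` are hypothetical helper names, and the toy records are made up for the example.

```python
from typing import List, Sequence


def one_hot(records: Sequence[Sequence[str]]) -> List[List[int]]:
    """Encode categorical records with one binary variable per (attribute, label)."""
    n_attrs = len(records[0])
    # Sort the labels of each attribute so the column order is deterministic.
    labels = [sorted({r[a] for r in records}) for a in range(n_attrs)]
    return [
        [int(r[a] == lab) for a in range(n_attrs) for lab in labels[a]]
        for r in records
    ]


def hamming(u: Sequence[int], v: Sequence[int]) -> int:
    """Number of positions in which two equal-length binary vectors differ."""
    return sum(a != b for a, b in zip(u, v))


if __name__ == "__main__":
    # Made-up records with two categorical attributes: colour and size.
    records = [("red", "small"), ("red", "large"), ("blue", "small")]
    encoded = one_hot(records)
    print(encoded)
    print(hamming(encoded[0], encoded[1]))  # the records differ in size only
    print(hamming(encoded[1], encoded[2]))  # the records differ in both attributes
```

Note that under this encoding each disagreeing attribute contributes 2 to the Hamming distance, because one binary variable switches off and another switches on.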

The distance matrix

$$
\begin{pmatrix}
0 & d_{1,2} & d_{1,3} & \cdots & d_{1,n} \\
d_{1,2} & 0 & d_{2,3} & \cdots & d_{2,n} \\
d_{1,3} & d_{2,3} & 0 & \cdots & d_{3,n} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
d_{1,n} & d_{2,n} & d_{3,n} & \cdots & 0
\end{pmatrix}
$$

A distance (or dissimilarity) matrix is

- $n$-by-$n$ for $n$ objects
- non-negative ($d_{i,j} \ge 0$)
- symmetric ($d_{i,j} = d_{j,i}$)
- zero on the diagonal ($d_{i,i} = 0$)
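
A distance matrix with these properties can be built from any distance function by filling only the upper triangle and mirroring it. The sketch below assumes Euclidean distance via `math.dist` (Python 3.8+) as the default; `distance_matrix` is a name introduced here for illustration.

```python
import math
from typing import Callable, List, Sequence

Point = Sequence[float]


def distance_matrix(
    points: Sequence[Point],
    dist: Callable[[Point, Point], float] = math.dist,
) -> List[List[float]]:
    """Return the n-by-n matrix of pairwise distances between the given points."""
    n = len(points)
    matrix = [[0.0] * n for _ in range(n)]  # the diagonal stays zero
    for i in range(n):
        for j in range(i + 1, n):
            # Compute each pair once and mirror it, which makes the matrix symmetric.
            matrix[i][j] = matrix[j][i] = dist(points[i], points[j])
    return matrix


if __name__ == "__main__":
    for row in distance_matrix([(0, 0), (3, 4), (6, 8)]):
        print(row)
```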

Chapter 5.2: Representative-based Clustering

Aggarwal Ch. 6.3

Partitions and Prototypes

Exclusive representative-based clustering

- the set of objects $U$ is partitioned into $k$ clusters $C_1, C_2, \ldots, C_k$
  - $\bigcup_i C_i = U$ and $C_i \cap C_j = \emptyset$ for $i \ne j$
- every cluster is represented by a prototype (aka centroid or mean) $\mu_i$
- clustering quality is based on the sum of squared errors between the objects in a cluster and the cluster prototype

$$
\sum_{i=1}^{k} \sum_{x_j \in C_i} \lVert x_j - \mu_i \rVert_2^2
= \sum_{i=1}^{k} \sum_{x_j \in C_i} \sum_{l=1}^{d} (x_{jl} - \mu_{il})^2
$$

The outer sum runs over all clusters, the middle sum over all objects in the cluster, and the inner sum over all dimensions.
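
The three nested sums of the quality measure map directly onto three nested loops. The following is a minimal sketch; `centroid` and `sse` are illustrative names, and the two toy clusters are made up.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def centroid(cluster: Sequence[Point]) -> Point:
    """Coordinate-wise mean of the points in one non-empty cluster."""
    return tuple(sum(coords) / len(cluster) for coords in zip(*cluster))


def sse(clusters: Sequence[Sequence[Point]]) -> float:
    """Sum of squared errors between each point and its cluster's centroid."""
    total = 0.0
    for cluster in clusters:                  # over all clusters
        mu = centroid(cluster)
        for x in cluster:                     # over all objects in the cluster
            for x_l, mu_l in zip(x, mu):      # over all dimensions
                total += (x_l - mu_l) ** 2
    return total


if __name__ == "__main__":
    clusters: List[List[Point]] = [
        [(0.0, 0.0), (2.0, 0.0)],
        [(5.0, 5.0), (5.0, 7.0)],
    ]
    print(sse(clusters))
```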

The Naïve algorithm

The naïve algorithm goes like this:

1. one by one, generate all possible clusterings
2. compute the squared error of each
3. select the best

Sadly, this is infeasible: there are too many possible clusterings to try.

- there are $k^n$ different clusterings of $n$ points into $k$ clusters (some possibly empty)
- the number of ways to cluster $n$ points into $k$ non-empty clusters is the Stirling number of the second kind, $S(n, k)$:

$$
S(n, k) = \left\{ {n \atop k} \right\} = \frac{1}{k!} \sum_{j=0}^{k} (-1)^j \binom{k}{j} (k - j)^n
$$
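
To get a feeling for how quickly the number of clusterings grows, the Stirling number of the second kind can be evaluated directly from the inclusion-exclusion sum. This is a small sketch; `stirling2` is a name chosen here, and the problem sizes in the loop are arbitrary examples.

```python
from math import comb, factorial


def stirling2(n: int, k: int) -> int:
    """Number of ways to partition n labelled points into k non-empty clusters."""
    # Inclusion-exclusion over the number j of clusters forced to stay empty.
    total = sum((-1) ** j * comb(k, j) * (k - j) ** n for j in range(k + 1))
    # The sum counts surjections onto k labelled clusters; divide out the labelling.
    return total // factorial(k)


if __name__ == "__main__":
    for n, k in [(4, 2), (10, 3), (50, 5), (100, 5)]:
        print(f"n={n:3d} k={k}: S(n, k) = {stirling2(n, k)}")
```

Python integers have arbitrary precision, so the exact counts can be printed even when they are far too large for exhaustive enumeration to be an option.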

An iterative $k$-means algorithm

1. select $k$ random cluster centroids
2. assign each point to its closest centroid
3. compute the error
4. do
   1. for each cluster $C_i$
      1. compute new centroid as $\mu_i = \frac{1}{|C_i|} \sum_{x_j \in C_i} x_j$
   2. for each element $x_j \in U$
      1. assign $x_j$ to its closest cluster centroid
5. while error decreases
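
The steps above can be sketched in plain Python as follows. Several details are assumptions made for this sketch rather than part of the slide: the initial centroids are drawn from the data points, an empty cluster simply keeps its previous centroid, and the helper names `assign`, `sse` and `kmeans` are illustrative.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def assign(points: Sequence[Point], centroids: Sequence[Point]) -> List[int]:
    """Index of the closest centroid for every point (ties go to the lower index)."""
    return [
        min(range(len(centroids)), key=lambda i: math.dist(x, centroids[i]))
        for x in points
    ]


def sse(points: Sequence[Point], centroids: Sequence[Point], labels: Sequence[int]) -> float:
    """Sum of squared distances between each point and its assigned centroid."""
    return sum(math.dist(x, centroids[c]) ** 2 for x, c in zip(points, labels))


def kmeans(points: Sequence[Point], k: int, seed: int = 0):
    rng = random.Random(seed)
    # Step 1 (assumption: centroids are sampled from the data points).
    centroids = rng.sample(list(points), k)
    labels = assign(points, centroids)               # step 2
    error = sse(points, centroids, labels)           # step 3
    while True:                                      # step 4
        new_centroids = []
        for i in range(k):
            members = [x for x, c in zip(points, labels) if c == i]
            if members:
                new_centroids.append(
                    tuple(sum(col) / len(members) for col in zip(*members))
                )
            else:
                # Assumption: an empty cluster keeps its old centroid.
                new_centroids.append(centroids[i])
        new_labels = assign(points, new_centroids)
        new_error = sse(points, new_centroids, new_labels)
        if new_error >= error:                       # step 5: stop when no improvement
            break
        centroids, labels, error = new_centroids, new_labels, new_error
    return centroids, labels, error


if __name__ == "__main__":
    data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
    centroids, labels, error = kmeans(data, k=2)
    print(centroids, labels, error)
```

Because the loop only accepts an update when the error strictly decreases, it always terminates, but the result still depends on the random initial centroids.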

$k$-means example (figure): scatter plot of expression in condition 1 (x-axis) against expression in condition 2 (y-axis), with three centroids $k_1$, $k_2$, $k_3$.

Some observations

- Always converges, eventually
  - on each step the error decreases
  - there is only a finite number of possible clusterings
  - convergence is to a local optimum
- At some point a cluster can become empty
  - all points are closer to some other centroid
  - some options include
    - split the biggest cluster
    - take the furthest point as a singleton cluster
- Outliers can yield bad clusterings

Computational complexity

How long does iterative $k$-means take?

- computing the centroids takes $O(nd)$ time
  - averages over a total of $n$ points in $d$-dimensional space
- computing the cluster assignment takes $O(nkd)$ time
  - for each of the $n$ points we have to compute the distances to all $k$ centroids in $d$-dimensional space
- if the algorithm takes $t$ iterations, the total running time is $O(tnkd)$
- how many iterations will we need?

How many iterations?

- In practice the algorithm usually doesn’t need many
  - some hundred iterations is usually enough
- Worst-case upper bound is $O(n^{dk})$
- Worst-case lower bound is superpolynomial: $2^{\Omega(n)}$
- The discrepancy between practice and worst-case analysis can be (somewhat) explained with smoothed analysis
  - if the data is sampled from independent $d$-dimensional normal distributions with the same variance, iterative $k$-means will terminate in $O(n^k)$ time with high probability (Arthur & Vassilvitskii, 2006)

On the importance of starting well

The $k$-means algorithm converges to a local optimum, which can be arbitrarily bad compared to the global optimum.

The $k$-means++ algorithm

The key idea: careful initial seeding

- choose the first centroid uniformly at random from the data points
- let $D(x)$ be the shortest distance from $x$ to any already-selected centroid
- choose the next centroid to be $x'$ with probability $\frac{D(x')^2}{\sum_{x \in X} D(x)^2}$, where $X$ is the set of data points
  - points that are further away are more probable to be selected
- repeat until $k$ centroids have been selected, then continue as in the normal iterative $k$-means algorithm

The $k$-means++ algorithm achieves an $O(\log k)$ approximation ratio in expectation:

$$
\mathbb{E}[\mathit{cost}] \le 8 (\ln k + 2) \, \mathrm{OPT}
$$

The $k$-means++ algorithm converges fast in practice (Arthur & Vassilvitskii ’07).
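
The seeding step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: `kmeanspp_seeds` is a hypothetical name, and the fallback to a uniform choice when every remaining point coincides with a chosen centroid is an assumption added to keep the sketch robust.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeanspp_seeds(points: Sequence[Point], k: int, seed: int = 0) -> List[Point]:
    """Pick k initial centroids with probability proportional to squared distance."""
    rng = random.Random(seed)
    pts = list(points)
    # First centroid: uniformly at random from the data points.
    centroids = [rng.choice(pts)]
    while len(centroids) < k:
        # Squared distance from every point to its closest already-selected centroid.
        d2 = [min(math.dist(x, c) ** 2 for c in centroids) for x in pts]
        if sum(d2) == 0:
            # Assumption: if all points coincide with chosen centroids, pick uniformly.
            centroids.append(rng.choice(pts))
        else:
            # Far-away points get a proportionally larger chance of being selected.
            centroids.append(rng.choices(pts, weights=d2, k=1)[0])
    return centroids


if __name__ == "__main__":
    data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
    print(kmeanspp_seeds(data, k=2))
```

A point that is already a centroid has squared distance 0 and therefore weight 0, so on data without duplicates the selected seeds are always distinct. The returned seeds would then replace the random initial centroids of the iterative algorithm.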
