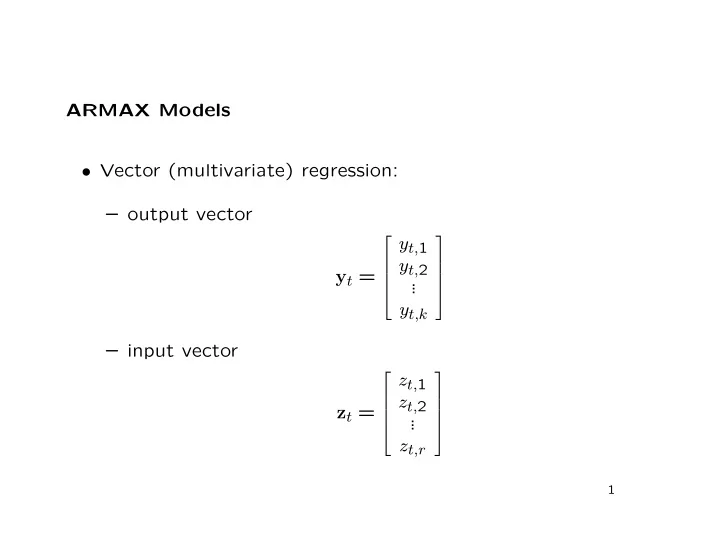

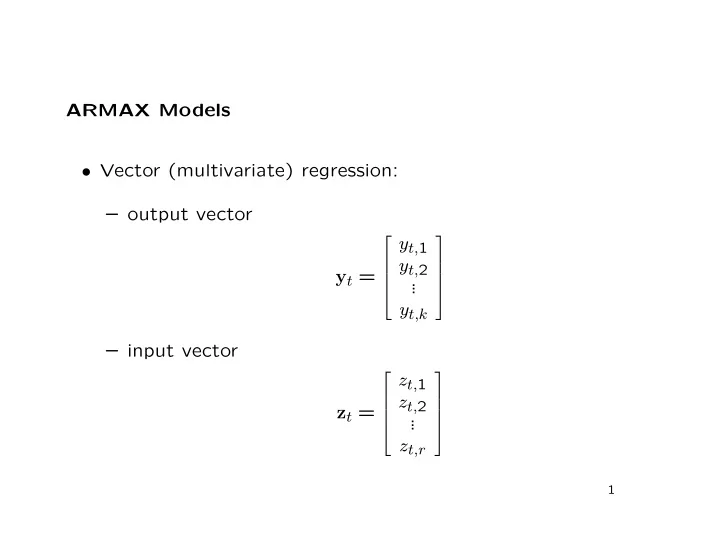

ARMAX Models

• Vector (multivariate) regression:
  – output vector $y_t = (y_{t,1}, y_{t,2}, \dots, y_{t,k})'$;
  – input vector $z_t = (z_{t,1}, z_{t,2}, \dots, z_{t,r})'$.

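• Regression equation:
$$y_{t,i} = \beta_{i,1} z_{t,1} + \beta_{i,2} z_{t,2} + \cdots + \beta_{i,r} z_{t,r} + w_{t,i},$$
or in vector form
$$y_t = B z_t + w_t,$$
where $B$ is the $k \times r$ matrix with $(i,j)$ element $\beta_{i,j}$.
• Here $\{w_t\}$ is multivariate white noise:
$$E(w_t) = 0, \qquad \operatorname{cov}(w_{t+h}, w_t) = \begin{cases} \Sigma_w, & h = 0, \\ 0, & h \neq 0. \end{cases}$$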

• Given observations for $t = 1, 2, \dots, n$, the least squares estimator of $B$, which is also the maximum likelihood estimator when $\{w_t\}$ is Gaussian white noise, is
$$\hat B = Y' Z \left(Z' Z\right)^{-1},$$
where
$$Y = \begin{pmatrix} y_1' \\ y_2' \\ \vdots \\ y_n' \end{pmatrix} \quad \text{and} \quad Z = \begin{pmatrix} z_1' \\ z_2' \\ \vdots \\ z_n' \end{pmatrix}.$$
• ML estimate of $\Sigma_w$ (replace $n$ with $n - r$ for an unbiased estimate):
$$\hat\Sigma_w = \frac{1}{n} \sum_{t=1}^{n} \left(y_t - \hat B z_t\right)\left(y_t - \hat B z_t\right)'.$$
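A minimal R sketch of these two estimators on simulated data. The dimensions, the "true" $B$ and $\Sigma_w$, and the seed are illustrative assumptions, not values from these notes; the `lm()` line is only a cross-check that the matrix formula agrees with equation-by-equation least squares.

```r
# Sketch: LS / Gaussian ML estimation in the vector regression y_t = B z_t + w_t.
# All "true" values below are illustrative assumptions for a simulation.
set.seed(1)
n <- 500; k <- 2; r <- 3
B_true <- matrix(c( 1.0, 0.3, 0.0,
                   -0.5, 0.8, 0.2), nrow = k, byrow = TRUE)   # k x r
Sigma_w <- matrix(c(1.0, 0.5,
                    0.5, 2.0), nrow = k)                      # k x k

# Row t of Z is z_t' (first input is a constant); row t of W is w_t'.
Z <- cbind(1, matrix(rnorm(n * (r - 1)), n, r - 1))
W <- matrix(rnorm(n * k), n, k) %*% chol(Sigma_w)   # rows ~ N(0, Sigma_w)
Y <- Z %*% t(B_true) + W                            # row t is y_t' = z_t' B' + w_t'

# B_hat = Y'Z (Z'Z)^{-1}, a k x r matrix
B_hat <- t(Y) %*% Z %*% solve(crossprod(Z))

# Residual covariance: divide by n for the ML estimate, by n - r for unbiased
resid <- Y - Z %*% t(B_hat)
Sigma_ml  <- crossprod(resid) / n
Sigma_unb <- crossprod(resid) / (n - r)

# Cross-check: equation-by-equation least squares gives the same B_hat
B_lm <- t(coef(lm(Y ~ Z - 1)))
print(round(B_hat, 3))
print(round(Sigma_ml, 3))
```

Because every equation shares the same regressors $z_t$, the multivariate estimator is just ordinary least squares run separately on each component of $y_t$; $\Sigma_w$ only enters through $\hat\Sigma_w$.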

• Information criteria:
  – Akaike:
$$\mathrm{AIC} = \ln\left|\hat\Sigma_w\right| + \frac{2}{n}\left(kr + \frac{k(k+1)}{2}\right);$$
  – Schwarz:
$$\mathrm{SIC} = \ln\left|\hat\Sigma_w\right| + \frac{\ln n}{n}\left(kr + \frac{k(k+1)}{2}\right);$$
  – Bias-corrected AIC (incorrect in Shumway & Stoffer):
$$\mathrm{AICc} = \ln\left|\hat\Sigma_w\right| + \frac{2\left(kr + k(k+1)/2\right)}{n - k - r - 1}.$$
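The three criteria differ only in the penalty on the parameter count $kr + k(k+1)/2$. A small R sketch, where `info_criteria` is a hypothetical helper written for this note and `Sigma_hat` is assumed to be the ML (divide-by-$n$) estimate:

```r
# Sketch of the three information criteria for a k-variate regression
# with r inputs per equation. info_criteria is a hypothetical helper.
info_criteria <- function(Sigma_hat, n, r) {
  k <- nrow(Sigma_hat)
  npar <- k * r + k * (k + 1) / 2   # entries of B + distinct entries of Sigma_w
  logdet <- as.numeric(determinant(Sigma_hat, logarithm = TRUE)$modulus)
  c(AIC  = logdet + 2 * npar / n,
    SIC  = logdet + log(n) * npar / n,
    AICc = logdet + 2 * npar / (n - k - r - 1))
}

# Usage with made-up inputs: Sigma_hat = identity, so ln|Sigma_hat| = 0
# and npar = 2*2 + 3 = 7. Gives AIC = 0.14, SIC approx. 0.3224, AICc approx. 0.1474.
print(info_criteria(diag(2), n = 100, r = 2))
```

Since $\ln n > 2$ once $n \ge 8$, SIC charges more per parameter than AIC, so the two criteria can disagree on the lag order.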

Vector Autoregression

• E.g., VAR(1): $x_t = \alpha + \Phi x_{t-1} + w_t$.
• Here $\Phi$ is a $k \times k$ coefficient matrix, and $\{w_t\}$ is Gaussian multivariate white noise.
• This resembles the vector regression equation, with
$$y_t = x_t, \qquad B = \begin{pmatrix} \alpha & \Phi \end{pmatrix}, \qquad z_t = \begin{pmatrix} 1 \\ x_{t-1} \end{pmatrix},$$
so that $r = k + 1$.
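A minimal R sketch of this correspondence: stack $x_1', \dots, x_n'$ as the rows of $Y$ and $(1, x_{t-1}')$ as the rows of $Z$, then apply the vector regression formula. The true $\alpha$, $\Phi$, noise scale, and seed are illustrative assumptions for a simulation.

```r
# Sketch: a VAR(1) written as the vector regression y_t = B z_t + w_t with
# y_t = x_t, B = [alpha Phi], z_t = (1, x_{t-1}')'. The true alpha, Phi,
# and noise scale are illustrative assumptions.
set.seed(2)
k <- 2; n <- 1000
alpha <- c(0.1, -0.2)
Phi <- matrix(c(0.5, 0.1,
                0.2, 0.3), nrow = k, byrow = TRUE)

x <- matrix(0, n + 1, k)   # row s holds x_{s-1}', so x_0 = 0 is row 1
for (s in 2:(n + 1)) {
  x[s, ] <- alpha + Phi %*% x[s - 1, ] + rnorm(k, sd = 0.5)
}

Y <- x[-1, ]                     # rows x_1', ..., x_n'
Z <- cbind(1, x[-(n + 1), ])     # rows (1, x_0'), ..., (1, x_{n-1}')
B_hat <- t(Y) %*% Z %*% solve(crossprod(Z))   # k x (k + 1) = [alpha_hat Phi_hat]
alpha_hat <- B_hat[, 1]
Phi_hat <- B_hat[, -1]
print(round(B_hat, 3))
```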

• Observe $x_0, x_1, \dots, x_n$, and condition on $x_0$.
• The maximum conditional likelihood estimators of $B$ and $\Sigma_w$ are the same as for ordinary vector regression.
• VAR($p$) is similar, but we must condition on the first $p$ observations.
• The full likelihood is the conditional likelihood times the likelihood derived from the marginal distribution of the first $p$ observations, and is difficult to use.
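For VAR($p$), conditioning on the first $p$ observations means they appear only as lags, leaving $n - p$ regression equations with $z_t = (1, x_{t-1}', \dots, x_{t-p}')'$. A sketch in R; `fit_var_ls` is a hypothetical helper, and the VAR(2) coefficients in the usage part are illustrative assumptions.

```r
# Sketch: conditional least squares for a VAR(p) with intercept.
# fit_var_ls is a hypothetical helper written for this note.
fit_var_ls <- function(x, p) {
  x <- as.matrix(x)
  n <- nrow(x); k <- ncol(x)
  idx <- (p + 1):n                       # first p rows are conditioned on
  Y <- x[idx, , drop = FALSE]
  lags <- lapply(1:p, function(j) x[idx - j, , drop = FALSE])   # x_{t-1}, ..., x_{t-p}
  Z <- cbind(1, do.call(cbind, lags))
  B_hat <- t(Y) %*% Z %*% solve(crossprod(Z))
  resid <- Y - Z %*% t(B_hat)
  list(alpha = B_hat[, 1],
       Phi = lapply(1:p, function(j) B_hat[, 1 + (j - 1) * k + seq_len(k), drop = FALSE]),
       Sigma = crossprod(resid) / length(idx),   # conditional ML estimate
       n_used = length(idx))
}

# Usage on a simulated, stationary VAR(2); Phi1 and Phi2 are assumptions.
set.seed(3)
k <- 2; n <- 2000
Phi1 <- matrix(c(0.5, 0.1,
                 0.0, 0.4), nrow = k, byrow = TRUE)
Phi2 <- matrix(c(-0.2, 0.0,
                  0.0, 0.2), nrow = k, byrow = TRUE)
x <- matrix(0, n, k)
for (s in 3:n) x[s, ] <- Phi1 %*% x[s - 1, ] + Phi2 %*% x[s - 2, ] + rnorm(k)
fit <- fit_var_ls(x, p = 2)
print(lapply(fit$Phi, round, 3))
```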

Example: 1-year, 5-year, and 10-year weekly interest rates

• Data from http://research.stlouisfed.org/fred2/series/WGS1YR/, etc.

```r
# Read the three weekly FRED series (column 1 = date, column 2 = rate in percent)
a <- read.csv("WGS1YR.csv");  WGS1YR  <- ts(a[, 2])
a <- read.csv("WGS5YR.csv");  WGS5YR  <- ts(a[, 2])
a <- read.csv("WGS10YR.csv"); WGS10YR <- ts(a[, 2])
a <- cbind(WGS1YR, WGS5YR, WGS10YR)   # 3-column multivariate ts
plot(a)         # levels
plot(diff(a))   # week-to-week changes
```

• Use the dse package to fit VAR(1) and VAR(2) models to the differences:

```r
library(dse)
b <- TSdata(output = diff(a))     # differenced rates as the output series
b1 <- estVARXls(b, max.lag = 1)   # VAR(1) by least squares
cat("VAR(1)\n print method:\n"); print(b1)
cat("\n summary method:\n"); print(summary(b1))
b2 <- estVARXls(b, max.lag = 2)   # VAR(2) by least squares
cat("\nVAR(2)\n print method:\n"); print(b2)
cat("\n summary method:\n"); print(summary(b2))
```

```
VAR(1)
 print method:
neg. log likelihood= -7188.785
A(L) =
1-1.014698L1      0+0.05794167L1    0-0.04292339L1
0-0.02482398L1    1-0.9224325L1     0-0.05304638L1
0-0.0144053L1     0+0.03872528L1    1-1.024605L1

B(L) =
1 0 0
0 1 0
0 0 1

 summary method:
neg. log likelihood = -7188.785   sample length = 2448
        WGS1YR  y.WGS5YR   WGS10YR
RMSE 0.2005654 0.1713752 0.1563661
ARMA: model estimated by estVARXls
inputs :
outputs:  WGS1YR y.WGS5YR WGS10YR
input dimension = 0   output dimension = 3
order A = 1   order B = 0   order C =
9 actual parameters   6 non-zero constants
trend not estimated.

VAR(2)
 print method:
neg. log likelihood= -7414.944
A(L) =
1-1.329215L1+0.3221239L2        0+0.1030711L1-0.05850615L2     0-0.1539836L1+0.1172694L2
0-0.07336772L1+0.05027099L2     1-1.117284L1+0.1974304L2       0-0.1148573L1+0.05777105L2
0+0.0002002881L1-0.01317073L2   0-0.02287398L1+0.06233586L2    1-1.252808L1+0.2262312L2

B(L) =
1 0 0
0 1 0
0 0 1

 summary method:
neg. log likelihood = -7414.944   sample length = 2448
        WGS1YR  y.WGS5YR   WGS10YR
RMSE 0.1910442 0.1666275 0.1534016
ARMA: model estimated by estVARXls
inputs :
outputs:  WGS1YR y.WGS5YR WGS10YR
input dimension = 0   output dimension = 3
order A = 2   order B = 0   order C =
18 actual parameters   6 non-zero constants
trend not estimated.
```

• AIC is smaller (more negative) for VAR(2), but SIC is smaller for VAR(1).
• For VAR(1),
$$\hat\Phi_1 = \begin{pmatrix} 0.3288773 & -0.08581201 & 0.136938 \\ 0.06575108 & 0.1534516 & 0.08875425 \\ -0.004959931 & 0.04152504 & 0.2406055 \end{pmatrix}.$$
• The largest off-diagonal elements are (1,3) and (2,3), suggesting that changes in the 10-year rate are followed, one week later, by changes in the same direction in the 1-year and 5-year rates.
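The ranking of the off-diagonal entries can be checked directly from the $\hat\Phi_1$ values quoted above. The R sketch below sorts them by absolute size and, as a rough illustration only, multiplies $\hat\Phi_1$ by a hypothetical 0.10 percentage point (10 basis point) rise in the 10-year rate; this ignores the intercept and the contemporaneous correlations in $\Sigma_w$.

```r
# Phi_hat_1 for the VAR(1) fitted to weekly changes, copied from the values above
rates <- c("WGS1YR", "WGS5YR", "WGS10YR")
Phi1_hat <- matrix(c( 0.3288773,   -0.08581201, 0.136938,
                      0.06575108,   0.1534516,  0.08875425,
                     -0.004959931,  0.04152504, 0.2406055),
                   nrow = 3, byrow = TRUE, dimnames = list(rates, rates))

# Rank the off-diagonal entries by absolute size
mask <- row(Phi1_hat) != col(Phi1_hat)
offdiag <- data.frame(row = row(Phi1_hat)[mask], col = col(Phi1_hat)[mask],
                      phi = Phi1_hat[mask])
offdiag <- offdiag[order(-abs(offdiag$phi)), ]
print(offdiag)   # first two rows are (1,3) and then (2,3)

# Implied next-week change (intercept ignored) after a hypothetical
# 0.10 point rise in the 10-year rate and no change in the other two
print(Phi1_hat %*% c(0, 0, 0.10))
```

Both leading entries are positive, which is what supports the "same direction" reading; the (1,2) entry is close behind in absolute value, so the ordering is not sharply separated.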
