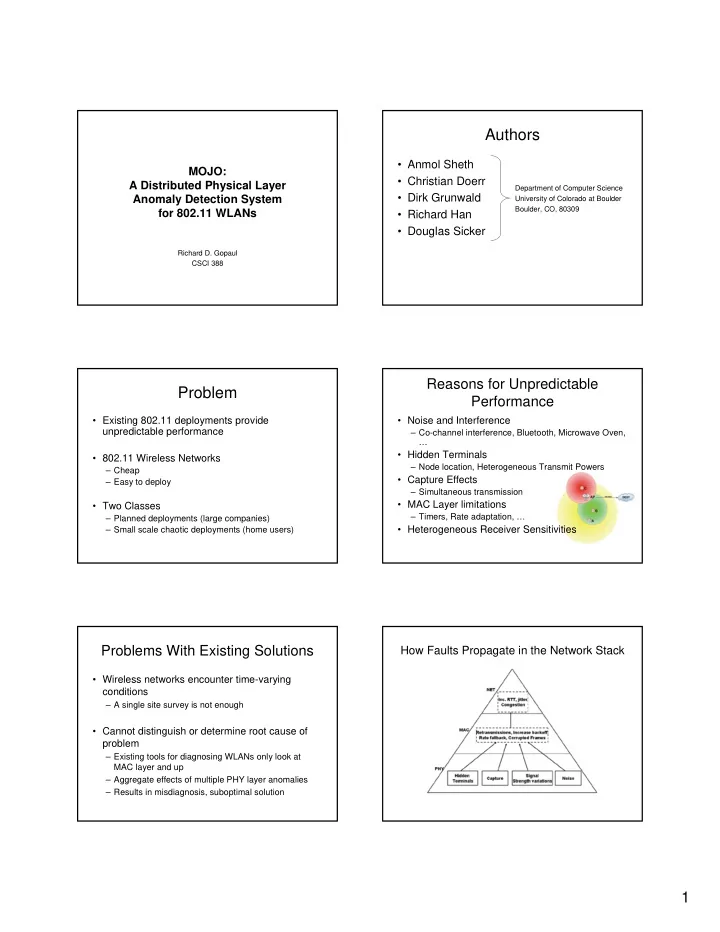

MOJO: A Distributed Physical Layer Anomaly Detection System for 802.11 WLANs
Richard D. Gopaul
CSCI 388

Authors
• Anmol Sheth
• Christian Doerr
• Dirk Grunwald
• Richard Han
• Douglas Sicker
Department of Computer Science
University of Colorado at Boulder
Boulder, CO, 80309

Problem
• Existing 802.11 deployments provide unpredictable performance
• 802.11 Wireless Networks
  – Cheap
  – Easy to deploy
• Two Classes
  – Planned deployments (large companies)
  – Small scale chaotic deployments (home users)

Reasons for Unpredictable Performance
• Noise and Interference
  – Co-channel interference, Bluetooth, Microwave Oven, …
• Hidden Terminals
  – Node location, Heterogeneous Transmit Powers
• Capture Effects
  – Simultaneous transmission
• MAC Layer limitations
  – Timers, Rate adaptation, …
• Heterogeneous Receiver Sensitivities

Problems With Existing Solutions
• Wireless networks encounter time-varying conditions
  – A single site survey is not enough
• Cannot distinguish or determine root cause of problem
  – Existing tools for diagnosing WLANs only look at MAC layer and up
  – Aggregate effects of multiple PHY layer anomalies
  – Results in misdiagnosis, suboptimal solution

How Faults Propagate in the Network Stack

Contributions of this paper:
• Attempts to build a unified framework for detecting underlying physical layer anomalies
• Quantifies the effects of different faults on a real network
• Builds statistical detection algorithms for each physical effect and evaluates algorithm effectiveness in a real network testbed

How Faults Propagate in the Network Stack

MOJO
• Distributed Physical Layer Anomaly Detection System for 802.11 WLANs
• Design Goals:
  – Flexible sniffer deployment
  – Inexpensive, $ + Comms.
  – Accurate in diagnosing PHY layer root causes
  – Implements efficient remedies
  – Near-real-time

System Architecture
• Provide visibility into PHY layer
• Faults observed by multiple sensors
• Based on an iterative design process
  – Artificially replicated faults in a testbed
  – Measured impact of fault at each layer of network stack

Initial Design
• Main components:
  – Wireless sniffers
  – Data collection mechanism
  – Inference engine
• Diagnose problems, Suggest remedies
• Data collection and inference engine initially centralized at a single server

Operation Overview
• Wireless sniffers sense PHY layer
  – Network interference, signal strength variations, concurrent transmissions
  – Modified Atheros based Madwifi driver run on client nodes
• Periodically transmit a summary to centralized inference engine.
• Inference engine collects information from the sniffers and runs detection algorithms.

Sniffer Placement
• Sniffer placement key to monitoring and detection
  – Sniffer locations may need to change as clients move over time
  – Cannot assume fixed locations, suboptimal monitoring
• Multiple sniffers, merged sniffer traces necessary to account for missed data

Prototype Implementation
• Uses two wireless interfaces on each client
  – One for data, the other for monitoring
  – Second radio receives every frame transmitted by the primary radio
• Avg. sniffer payload of 768 bytes/packet
  – 1.3KB of data every 10 sec.
  – < 200 bytes/sec.

Detection of Noise
• Caused by interfering wireless nodes or non-802.11 devices such as microwave ovens, Bluetooth, cordless phones, …
• Signal generator used to emulate noise source
  – Node A connected to access point and signal generator using RF splitter

Detection of Noise
• Power of signal generator increased from -90 dBm to -50 dBm
• Packet payload increased from 256 bytes to 1024 bytes in 256 byte steps
• 1000 frames transmitted for each power and payload size setting

Node A RTT vs. Signal Power
• RTT stable until -65 dBm
• Beyond -50 dBm 100% packet loss

% Data Frames Retransmitted
• Signal power set to -60 dBm
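The noise measurements above suggest a simple threshold check on the noise floor reported by a sniffer. The following is a minimal sketch, not the detection algorithm used by MOJO: the -65 dBm threshold is borrowed from the point where RTT stops being stable, and the function name `noisy_intervals` and the requirement of three consecutive samples are assumptions made for illustration.

```python
from typing import List, Tuple

# Assumption: threshold taken from the slide's observation that RTT is
# stable until roughly -65 dBm of interfering signal power.
NOISE_THRESHOLD_DBM = -65.0
# Assumption: require a few consecutive samples to ignore one-off spikes.
MIN_CONSECUTIVE = 3


def noisy_intervals(
    samples_dbm: List[float],
    threshold: float = NOISE_THRESHOLD_DBM,
    min_consecutive: int = MIN_CONSECUTIVE,
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs, end exclusive, for every run of at
    least `min_consecutive` samples whose noise floor exceeds `threshold`."""
    runs: List[Tuple[int, int]] = []
    start = None
    for i, sample in enumerate(samples_dbm):
        if sample > threshold:
            if start is None:
                start = i  # a new run begins here
        else:
            if start is not None and i - start >= min_consecutive:
                runs.append((start, i))
            start = None
    # Close a run that lasts until the end of the trace.
    if start is not None and len(samples_dbm) - start >= min_consecutive:
        runs.append((start, len(samples_dbm)))
    return runs


if __name__ == "__main__":
    trace = [-92, -90, -60, -58, -55, -91, -60, -93, -50, -50, -50]
    print(noisy_intervals(trace))
```

Requiring several consecutive samples trades detection latency for fewer false alarms; a real deployment would tune both values against measured traces.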

Time Spent in Backoff and Busy Sensing of Medium

Detection of Noise
• Noise floor sampled every 5 mins. for a period of 5 days in a residential environment.

Hidden Terminal and Capture Effect
• Both caused by concurrent transmissions and collisions at the receiver
• In the Hidden Terminal case, nodes are not in range and can collide at any time
• In Capture Effect, the receivers are not necessarily hidden from one another
  – Why would they transmit concurrently?

Hidden Terminal and Capture Effect
• Contention window set to CWmin (31 usec) on receiving a successful ACK
• Backoff interval selected from contention window
• Clear Channel Assessment time is 25 usec
• 6 usec region of overlap

Hidden Terminal and Capture Effect
• Experiment Setup:
  – Node B higher SNR than node A at AP
  – Node C not visible to node B or node A
  – Rate fallback disabled
  – Node pairs A-B or A-C generating TCP traffic to DEST node
  – TCP packets varied in size from 256-1024 Bytes
  – 10 test runs for each payload size, 5.5 and 11 Mbps

Hidden Terminal and Capture Effect
• Experimental Results
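One way to read the timing argument above: hidden terminals can collide at any offset, while nodes that can hear each other only transmit concurrently when they start within the 6 usec overlap region. The sketch below classifies overlapping frames in a merged sniffer trace on that basis. It is an illustration under stated assumptions, not MOJO's detector: the `Frame` record, the function `classify_overlaps`, and the use of the start-time gap as the only feature are all hypothetical, and timestamps are assumed to be already synchronized across sniffers.

```python
from dataclasses import dataclass
from typing import List, Tuple

# From the slides: 31 usec contention window minus 25 usec CCA time
# leaves a 6 usec region in which two in-range nodes can both transmit.
CAPTURE_WINDOW_US = 6


@dataclass(frozen=True)
class Frame:
    """Hypothetical merged-trace record for one transmitted frame."""
    sender: str
    start_us: int
    duration_us: int

    @property
    def end_us(self) -> int:
        return self.start_us + self.duration_us


def classify_overlaps(frames: List[Frame]) -> List[Tuple[str, str, str]]:
    """Return (first_sender, second_sender, label) for each pair of frames
    from different senders that overlap in time."""
    ordered = sorted(frames, key=lambda f: f.start_us)
    results: List[Tuple[str, str, str]] = []
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            if second.start_us >= first.end_us:
                break  # sorted by start time, so no later frame overlaps
            if second.sender == first.sender:
                continue
            gap = second.start_us - first.start_us
            # Assumption: near-simultaneous starts point to the capture
            # effect, larger offsets to a hidden terminal.
            label = "capture" if gap <= CAPTURE_WINDOW_US else "hidden_terminal"
            results.append((first.sender, second.sender, label))
    return results


if __name__ == "__main__":
    trace = [
        Frame("A", 0, 500),
        Frame("B", 4, 500),
        Frame("C", 2000, 500),
        Frame("A", 2200, 500),
    ]
    for row in classify_overlaps(trace):
        print(row)
```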

Detection Algorithm
• Executed on a central server
• Sliding window buffer of recorded data frames

Detection Accuracy
• Time synchronization is essential
• 802.11 time synchronization protocol
• +/- 4 usec measured error

Long Term Signal Strength Variations of AP
• Different hardware = different powers and sensitivities
• Transmit power of AP varied, 100mW, 5mW

Detection Algorithm
• Signal strength variations observed by one sniffer are not enough to differentiate
  – Localized events, e.g. fading
  – Global events, e.g. change in TX power of AP
• Multiple distributed sniffers needed
• Experiments show three distributed sensors are sufficient to detect correlated changes in signal strength

Observations From Three Sniffers
• AP Power Reduced

Detection Accuracy vs. AP Signal Strength
• AP Power changed once every 5 mins.
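The idea of separating localized from global signal strength changes can be sketched as a comparison of two adjacent sliding windows per sniffer. This is a minimal illustration, not the algorithm from the paper: the window length, the 5 dB threshold, and the names `window_shift` and `classify_change` are assumptions. For scale, reducing AP transmit power from 100 mW to 5 mW is a drop of about 13 dB.

```python
from statistics import mean
from typing import Dict, List

# Assumptions for illustration only; neither value comes from the slides.
WINDOW = 5               # samples per sliding window
SHIFT_THRESHOLD_DB = 5.0  # minimum mean shift treated as a real change


def window_shift(rssi_dbm: List[float], window: int = WINDOW) -> float:
    """Mean of the most recent window minus mean of the window before it."""
    if len(rssi_dbm) < 2 * window:
        raise ValueError("need at least two full windows of samples")
    recent = rssi_dbm[-window:]
    previous = rssi_dbm[-2 * window:-window]
    return mean(recent) - mean(previous)


def classify_change(
    traces: Dict[str, List[float]],
    threshold: float = SHIFT_THRESHOLD_DB,
    window: int = WINDOW,
) -> str:
    """Return "global" if every sniffer sees a shift in the same direction,
    "local" if only some do, and "none" if no sniffer sees a shift."""
    shifts = {name: window_shift(rssi, window) for name, rssi in traces.items()}
    changed = [s for s in shifts.values() if abs(s) >= threshold]
    if not changed:
        return "none"
    same_direction = all(s > 0 for s in changed) or all(s < 0 for s in changed)
    if len(changed) == len(shifts) and same_direction:
        return "global"  # correlated change, e.g. AP transmit power changed
    return "local"       # uncorrelated change, e.g. fading near one sniffer


if __name__ == "__main__":
    # 100 mW -> 5 mW is roughly a 13 dB drop at every sniffer.
    traces = {
        "sniffer1": [-50.0] * 5 + [-63.0] * 5,
        "sniffer2": [-60.0] * 5 + [-73.0] * 5,
        "sniffer3": [-55.0] * 5 + [-68.0] * 5,
    }
    print(classify_change(traces))
```

Requiring agreement from every sniffer is the strictest possible rule; a majority vote would tolerate a sniffer that misses frames, at the cost of more false "global" reports.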

Conclusion
• MOJO, a unified framework to diagnose physical layer faults in 802.11 based wireless networks.
• Experimental results from a real testbed
• Information collected used to build threshold based statistical detection algorithms for each fault.
• First step toward self-healing wireless networks?
