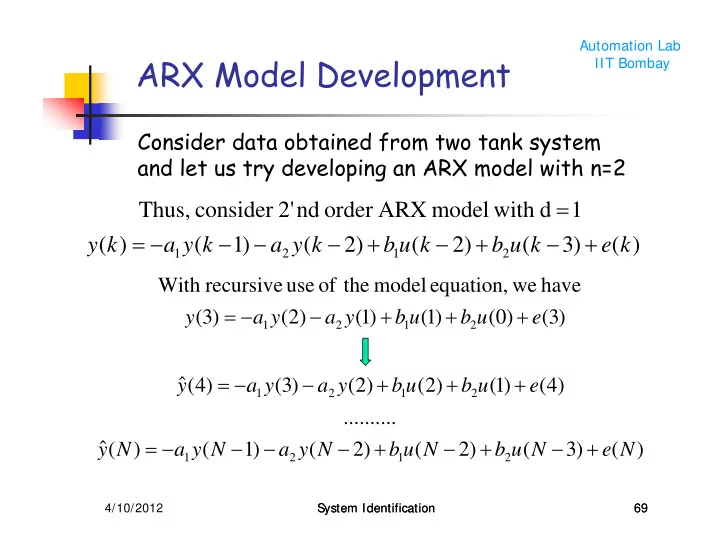

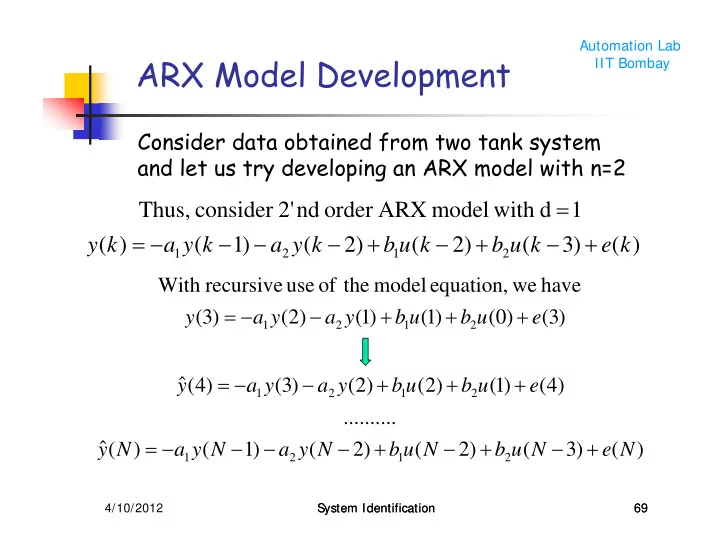

Automation Lab, IIT Bombay. System Identification, 4/10/2012.

**ARX Model Development**

Consider data obtained from the two-tank system, and let us try developing an ARX model with $n = 2$ and time delay $d = 1$. The second-order ARX model is

$$y(k) = -a_1 y(k-1) - a_2 y(k-2) + b_1 u(k-2) + b_2 u(k-3) + e(k)$$

Applying the model equation recursively, we have

$$
\begin{aligned}
y(3) &= -a_1 y(2) - a_2 y(1) + b_1 u(1) + b_2 u(0) + e(3)\\
y(4) &= -a_1 y(3) - a_2 y(2) + b_1 u(2) + b_2 u(1) + e(4)\\
&\;\;\vdots\\
y(N) &= -a_1 y(N-1) - a_2 y(N-2) + b_1 u(N-2) + b_2 u(N-3) + e(N)
\end{aligned}
$$
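The recursion can be reproduced with a short simulation. This is a minimal pure-Python sketch, not the lecture's own code: the function name `simulate_arx2`, the zero initial conditions $y(0) = y(1) = y(2) = 0$ and the Gaussian noise are assumptions made for illustration, and the parameter values are borrowed from the ARX(2,2,2) least-squares estimates reported for the two-tank system.

```python
import random


def simulate_arx2(theta, u, noise_std=0.0, seed=0):
    """Simulate y(k) = -a1 y(k-1) - a2 y(k-2) + b1 u(k-2) + b2 u(k-3) + e(k).

    theta = (a1, a2, b1, b2). e(k) is Gaussian white noise with standard
    deviation noise_std (zero when noise_std == 0). The initial values
    y(0), y(1), y(2) are set to zero, a hypothetical choice.
    """
    a1, a2, b1, b2 = theta
    rng = random.Random(seed)
    y = [0.0] * len(u)
    for k in range(3, len(u)):
        e = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
        y[k] = -a1 * y[k - 1] - a2 * y[k - 2] + b1 * u[k - 2] + b2 * u[k - 3] + e
    return y


theta = (-1.558, 0.5876, 0.002506, 0.01191)  # (a1, a2, b1, b2)
y = simulate_arx2(theta, [1.0] * 400)        # noise-free unit-step response
steady_state = (theta[2] + theta[3]) / (1 + theta[0] + theta[1])  # B(1) / A(1)
print(y[3], y[4], y[-1], steady_state)
```

With a constant unit input, the simulated output settles at the static gain $(b_1 + b_2)/(1 + a_1 + a_2)$, which follows from setting $y(k) = y(k-1) = y(k-2)$ in the model equation.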

**ARX: Parameter Identification**

Arranging these equations in matrix form:

$$
\underbrace{\begin{bmatrix} y(3)\\ y(4)\\ \vdots\\ y(N)\end{bmatrix}}_{Y}
=
\underbrace{\begin{bmatrix}
-y(2) & -y(1) & u(1) & u(0)\\
-y(3) & -y(2) & u(2) & u(1)\\
\vdots & \vdots & \vdots & \vdots\\
-y(N-1) & -y(N-2) & u(N-2) & u(N-3)
\end{bmatrix}}_{\Omega}
\underbrace{\begin{bmatrix} a_1\\ a_2\\ b_1\\ b_2\end{bmatrix}}_{\theta}
+
\underbrace{\begin{bmatrix} e(3)\\ e(4)\\ \vdots\\ e(N)\end{bmatrix}}_{e}
$$

that is, $Y = \Omega\theta + e$, a "linear in parameters" model. Advantage: the least-squares parameter estimation problem can be solved analytically.
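Stacking the regression rows is mechanical. The following pure-Python sketch (hypothetical helper `build_regression`, plain lists standing in for vectors and matrices) builds $Y$ and $\Omega$ row by row and checks that data generated without noise satisfy $Y = \Omega\theta$ exactly. The square-wave input is an arbitrary illustrative choice.

```python
def build_regression(y, u):
    """Return Y = [y(3), ..., y(N)] and Omega with rows [-y(k-1), -y(k-2), u(k-2), u(k-3)]."""
    Y, Omega = [], []
    for k in range(3, len(y)):
        Y.append(y[k])
        Omega.append([-y[k - 1], -y[k - 2], u[k - 2], u[k - 3]])
    return Y, Omega


# Noise-free data from the model equation satisfy Y = Omega theta exactly (e = 0).
theta = [-1.558, 0.5876, 0.002506, 0.01191]                   # (a1, a2, b1, b2)
u = [1.0 if (k // 10) % 2 == 0 else -1.0 for k in range(60)]  # square-wave input
y = [0.0, 0.0, 0.0]                                           # assumed y(0), y(1), y(2)
for k in range(3, len(u)):
    y.append(-theta[0] * y[k - 1] - theta[1] * y[k - 2]
             + theta[2] * u[k - 2] + theta[3] * u[k - 3])

Y, Omega = build_regression(y, u)
max_residual = max(abs(yk - sum(p * t for p, t in zip(row, theta)))
                   for yk, row in zip(Y, Omega))
print(len(Y), Omega[0], max_residual)
```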

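**ARX: Parameter Identification**

Least-squares parameter estimation:

$$\hat{\theta}_{LS} = \arg\min_{\theta} \sum_{k=3}^{N} e^2(k) = \arg\min_{\theta}\, e^T e, \qquad \theta = \begin{bmatrix} a_1 & a_2 & b_1 & b_2\end{bmatrix}^T$$

Objective function:

$$\phi = e^T e = [Y - \Omega\theta]^T [Y - \Omega\theta]$$

Necessary condition for optimality:

$$\frac{\partial \phi}{\partial \theta} = \begin{bmatrix} \dfrac{\partial\phi}{\partial a_1} & \dfrac{\partial\phi}{\partial a_2} & \dfrac{\partial\phi}{\partial b_1} & \dfrac{\partial\phi}{\partial b_2}\end{bmatrix}^T = 0$$

$$\frac{\partial \phi}{\partial \theta} = \frac{\partial}{\partial\theta}\left\{[Y - \Omega\theta]^T[Y - \Omega\theta]\right\} = -2\,\Omega^T[Y - \Omega\theta] = 0$$

which gives the normal equations $\Omega^T\Omega\,\theta = \Omega^T Y$.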
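The gradient expression can be checked numerically. The sketch below uses hypothetical helper names and arbitrary made-up 5×4 data; it evaluates $\phi(\theta)$ and $-2\,\Omega^T(Y - \Omega\theta)$ and compares the latter with central finite differences. Because $\phi$ is quadratic, the two should agree to rounding error.

```python
def residuals(theta, Y, Omega):
    """r = Y - Omega theta."""
    return [yk - sum(p * t for p, t in zip(row, theta)) for yk, row in zip(Y, Omega)]


def objective(theta, Y, Omega):
    """phi(theta) = (Y - Omega theta)^T (Y - Omega theta)."""
    return sum(r * r for r in residuals(theta, Y, Omega))


def gradient(theta, Y, Omega):
    """d phi / d theta = -2 Omega^T (Y - Omega theta)."""
    r = residuals(theta, Y, Omega)
    return [-2.0 * sum(row[j] * rk for row, rk in zip(Omega, r)) for j in range(len(theta))]


# Arbitrary made-up regression data (5 rows, 4 parameters) and a trial theta.
Omega = [[-0.2, -0.1, 1.0, 0.5],
         [-0.3, -0.2, 0.8, 1.0],
         [-0.1, -0.3, -0.4, 0.8],
         [0.2, -0.1, 0.3, -0.4],
         [0.1, 0.2, -1.0, 0.3]]
Y = [0.4, 0.1, -0.3, 0.2, -0.5]
theta = [0.5, -0.2, 0.1, 0.3]

h = 1e-6
fd = []  # central finite-difference approximation of the gradient
for j in range(len(theta)):
    up, down = list(theta), list(theta)
    up[j] += h
    down[j] -= h
    fd.append((objective(up, Y, Omega) - objective(down, Y, Omega)) / (2 * h))

g = gradient(theta, Y, Omega)
max_err = max(abs(a - b) for a, b in zip(g, fd))
print(g, max_err)
```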

Provided $\Omega^T\Omega$ is invertible,

$$\hat{\theta}_{LS} = \left[\Omega^T\Omega\right]^{-1}\Omega^T Y$$

Sufficient condition for the optimum to be a minimum: the Hessian matrix $\partial^2\phi/\partial\theta^2$ is positive definite. Here

$$\frac{\partial^2\phi}{\partial\theta^2} = 2\,\Omega^T\Omega,$$

which is always positive semi-definite, and positive definite whenever $\Omega$ has full column rank (the same condition under which the inverse above exists). Hence $\hat{\theta}_{LS}$ is a minimum. In fact, since $\phi$ is quadratic in $\theta$, it is the global minimum of $\phi$.

Least-squares estimates for the two-tank system, with $A(q^{-1})\,y(k) = B(q^{-1})\,u(k) + e(k)$:

$$A(q^{-1}) = 1 - 1.558\,q^{-1} + 0.5876\,q^{-2}$$

$$B(q^{-1}) = 0.002506\,q^{-2} + 0.01191\,q^{-3}$$
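The closed-form estimate and the Hessian test translate directly into code. This is a plain-Python sketch rather than a numerical-library call: it forms the normal equations $\Omega^T\Omega\,\theta = \Omega^T Y$, solves them by Gaussian elimination with partial pivoting, and tests positive definiteness of $\Omega^T\Omega$ by attempting a Cholesky factorisation. The helper names are hypothetical, and the data are noise-free, generated from the two-tank ARX(2,2,2) estimates with a random ±1 input, so the true parameters should be recovered to rounding error.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented copy
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))  # pivot row
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def least_squares(Y, Omega):
    """Return theta_LS = (Omega^T Omega)^{-1} Omega^T Y together with Omega^T Omega."""
    p = len(Omega[0])
    OtO = [[sum(r[i] * r[j] for r in Omega) for j in range(p)] for i in range(p)]
    OtY = [sum(r[i] * yk for r, yk in zip(Omega, Y)) for i in range(p)]
    return solve(OtO, OtY), OtO


def is_positive_definite(M):
    """A Cholesky factorisation exists iff the symmetric matrix M is positive definite."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = s ** 0.5
            else:
                L[i][j] = s / L[j][j]
    return True


theta_true = [-1.558, 0.5876, 0.002506, 0.01191]  # (a1, a2, b1, b2)
rng = random.Random(1)
u = [rng.choice([-1.0, 1.0]) for _ in range(300)]
y = [0.0, 0.0, 0.0]
for k in range(3, len(u)):
    y.append(-theta_true[0] * y[k - 1] - theta_true[1] * y[k - 2]
             + theta_true[2] * u[k - 2] + theta_true[3] * u[k - 3])
Omega = [[-y[k - 1], -y[k - 2], u[k - 2], u[k - 3]] for k in range(3, len(u))]
Y = y[3:]

theta_hat, OtO = least_squares(Y, Omega)
print(theta_hat)                  # matches theta_true up to rounding
print(is_positive_definite(OtO))  # so the Hessian 2 Omega^T Omega is positive definite
```

Forming $\Omega^T\Omega$ explicitly squares the condition number of the problem; QR-based least-squares solvers are usually preferred numerically, though in exact arithmetic they give the same $\hat{\theta}_{LS}$.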

**ARX Model: Output and Residuals**

*Figure: ARX(2,2,2), comparison of predicted and measured outputs. Top panel: output y(k); bottom panel: residuals e(k); horizontal axis: time (samples), 0 to 250.*

**Model Residuals**

The model fit appears to be much better than that of the OE model, but this can be deceptive. How do we assess model quality? Two checks:

- Question: do the residuals of the second-order ARX model, $\hat{e} = Y - \Omega\hat{\theta}_{LS} = Y - \hat{Y}$, form a white-noise sequence? Check the autocorrelation function of $\{e(k)\}$.
- Question: is some part of the "signal" still left in the residuals $\{e(k)\}$? Check the cross-correlation between $\{u(k)\}$ and $\{e(k)\}$.
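The two checks can be sketched as follows. The helper names, the ±1.96/√N band (a common approximate 95% confidence bound for the sample correlation of a white sequence) and the synthetic residual sequences are assumptions for illustration, not the procedure used to produce the lecture's plots.

```python
import math
import random


def xcorr(x, z, lag):
    """Normalised sample correlation between x(k) and z(k - lag), means removed.

    Returns sum_k x(k) z(k - lag) / sqrt(sum_k x(k)^2 * sum_k z(k)^2); lag may be negative.
    """
    N = len(x)
    mx, mz = sum(x) / N, sum(z) / N
    xc = [v - mx for v in x]
    zc = [v - mz for v in z]
    s = sum(xc[k] * zc[k - lag] for k in range(max(0, lag), min(N, N + lag)))
    return s / math.sqrt(sum(v * v for v in xc) * sum(v * v for v in zc))


def residual_checks(e, u, max_lag=25, z=1.96):
    """Fractions of lags outside +/- z / sqrt(N) for the residual autocorrelation
    (lags 1..max_lag) and the residual-input cross-correlation (lags -max_lag..max_lag)."""
    bound = z / math.sqrt(len(e))
    auto = [xcorr(e, e, t) for t in range(1, max_lag + 1)]
    cross = [xcorr(e, u, t) for t in range(-max_lag, max_lag + 1)]
    frac_auto = sum(abs(r) > bound for r in auto) / len(auto)
    frac_cross = sum(abs(r) > bound for r in cross) / len(cross)
    return frac_auto, frac_cross, bound


# Hypothetical synthetic residual sequences, N = 1000 samples.
rng = random.Random(0)
N = 1000
u = [rng.choice([-1.0, 1.0]) for _ in range(N)]
w = [rng.gauss(0.0, 1.0) for _ in range(N)]
white = w                                                              # white, unrelated to u
colored = [w[k] + 0.9 * w[k - 1] if k > 0 else w[0] for k in range(N)]  # correlated in time
leaky = [w[k] + 0.5 * u[k - 2] if k >= 2 else w[k] for k in range(N)]   # input effect left over

for name, e in (("white", white), ("colored", colored), ("leaky", leaky)):
    print(name, residual_checks(e, u))
```

The `colored` sequence has a large lag-1 autocorrelation, while `leaky` has a large cross-correlation with $u$ at lag 2, mirroring the two questions above.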

**ARX(2,2,2): Correlation Functions**

*Figure: correlation function of the ARX(2,2,2) residuals e(k) versus lag (0 to 25), annotated "Model residuals are not white"; cross-correlation function between input u(k) and residuals e(k) versus lag (-30 to 30), annotated "Model residuals are correlated with input, i.e. some effect of inputs is still left in the residuals".*

**ARX: Order Selection**

*Figure: ARX order selection. Objective function value (×10⁻⁴) versus model order (2 to 6), with time delay d = 1.*
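An order scan like the one plotted can be sketched as follows. Assumptions: the naming ARX(n, n, d+1) (consistent with ARX(2,2,2) denoting n = 2, d = 1), a hypothetical second-order "true" system with white Gaussian noise of standard deviation 0.01, and a common first regression row $k_0 = n_{max} + d$ for every order so that the candidate models are nested and fitted on the same samples. The objective is reported as the mean squared residual.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def arx_loss(y, u, n, d, k0):
    """Mean squared residual of the LS fit of
    y(k) = -sum_{i=1..n} a_i y(k-i) + sum_{i=1..n} b_i u(k-d-i) + e(k),
    using rows k = k0, ..., len(y) - 1 (requires k0 >= n + d)."""
    rows = [[-y[k - i] for i in range(1, n + 1)] + [u[k - d - i] for i in range(1, n + 1)]
            for k in range(k0, len(y))]
    Y = y[k0:]
    p = 2 * n
    OtO = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    OtY = [sum(r[i] * yk for r, yk in zip(rows, Y)) for i in range(p)]
    theta = solve(OtO, OtY)
    res = [yk - sum(a * t for a, t in zip(r, theta)) for r, yk in zip(rows, Y)]
    return sum(e * e for e in res) / len(res)


# Hypothetical "true" system: second-order ARX with d = 1 and white noise (std 0.01).
theta = (-1.558, 0.5876, 0.002506, 0.01191)
rng = random.Random(2)
N = 1000
u = [rng.choice([-1.0, 1.0]) for _ in range(N)]
y = [0.0] * N
for k in range(3, N):
    y[k] = (-theta[0] * y[k - 1] - theta[1] * y[k - 2] + theta[2] * u[k - 2]
            + theta[3] * u[k - 3] + rng.gauss(0.0, 0.01))

d, max_n = 1, 6
losses = {n: arx_loss(y, u, n, d, k0=max_n + d) for n in range(2, max_n + 1)}
for n, v in losses.items():
    print(f"ARX({n},{n},{d + 1}): loss = {v:.3e}")
```

Because the models are nested and fitted on the same rows, the loss can only decrease as the order grows. For this simulated second-order system it is already close to the noise variance ($10^{-4}$) at $n = 2$ and barely decreases afterwards, so the loss curve is best read together with the residual checks.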

**ARX(6,6,2): Correlation Functions**

*Figure: correlation function of the ARX(6,6,2) residuals e(k) versus lag (0 to 25), annotated "Model residuals are almost white"; cross-correlation function between input u(k) and residuals e(k) versus lag (-30 to 30), annotated "Insignificant correlation between model residuals and input".*

**ARX: Identification Results**

*Figure: ARX(6,6,2), measured and simulated output. Top panel: y(k); bottom panel: residuals e(k); horizontal axis: time.*

**6th-Order ARX Model**

Identified ARX model parameters, for $A(q^{-1})\,y(k) = B(q^{-1})\,u(k) + e(k)$:

$$A(q^{-1}) = 1 - 0.8135\,q^{-1} - 0.1949\,q^{-2} - 0.07831\,q^{-3} + 0.1107\,q^{-4} + 0.03542\,q^{-5} + 0.01755\,q^{-6}$$

$$B(q^{-1}) = 0.00104\,q^{-2} + 0.013\,q^{-3} + 0.01176\,q^{-4} + 0.004681\,q^{-5} + 0.002472\,q^{-6} + 0.002197\,q^{-7}$$

Error statistics:

- Estimated mean: $\hat{E}\{e(k)\} = 4.8813 \times 10^{-3}$
- Estimated variance: $\hat{\lambda}^2 = 2.5496 \times 10^{-4}$

$\{e(k)\}$ is practically a zero-mean white-noise sequence.

**ARX: Estimated Parameter Variances**

| Parameter | Value | $\hat{\sigma}$ (estimated std. deviation) | Parameter | Value | $\hat{\sigma}$ (estimated std. deviation) |
|---|---|---|---|---|---|
| $a_1$ | -0.8135 | 0.0674 | $b_1$ | 0.001 | 0.0009 |
| $a_2$ | -0.1949 | 0.0868 | $b_2$ | 0.013 | 0.0011 |
| $a_3$ | -0.0783 | 0.0863 | $b_3$ | 0.0118 | 0.0014 |
| $a_4$ | 0.1107 | 0.0863 | $b_4$ | 0.0047 | 0.0015 |
| $a_5$ | 0.0354 | 0.0871 | $b_5$ | 0.0025 | 0.0015 |
| $a_6$ | 0.0175 | 0.0484 | $b_6$ | 0.0022 | 0.0013 |
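Standard deviations like those in the table are commonly obtained from $\operatorname{Cov}(\hat{\theta}_{LS}) \approx \hat{\lambda}^2(\Omega^T\Omega)^{-1}$, which holds when the residuals are zero-mean white noise. The slides do not state how $\hat{\sigma}$ or $\hat{\lambda}^2$ were computed, so the formula, the $M - p$ divisor and the helper names below are assumptions. The tiny example fits a constant to four points, where the answer $\hat{\sigma} = \sqrt{\hat{\lambda}^2/M}$ can be checked by hand.

```python
import math


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def parameter_std(Y, Omega):
    """LS estimate, residual mean, residual variance and parameter standard deviations.

    Assumes white, zero-mean residuals, so Cov(theta_LS) ~ lambda^2 (Omega^T Omega)^{-1},
    with lambda^2 estimated as (sum of squared residuals) / (M - p).
    """
    M, p = len(Omega), len(Omega[0])
    OtO = [[sum(r[i] * r[j] for r in Omega) for j in range(p)] for i in range(p)]
    OtY = [sum(r[i] * yk for r, yk in zip(Omega, Y)) for i in range(p)]
    theta = solve(OtO, OtY)
    e = [yk - sum(a * t for a, t in zip(r, theta)) for r, yk in zip(Omega, Y)]
    mean_e = sum(e) / M
    lam2 = sum(v * v for v in e) / (M - p)
    # Diagonal of (Omega^T Omega)^{-1}: solve OtO x = (unit vector j), keep entry j.
    diag = [solve(OtO, [1.0 if i == j else 0.0 for i in range(p)])[j] for j in range(p)]
    sigma = [math.sqrt(lam2 * dj) for dj in diag]
    return theta, mean_e, lam2, sigma


# Hand-checkable case: fit a constant to [1, 2, 3, 4].
theta, mean_e, lam2, sigma = parameter_std([1.0, 2.0, 3.0, 4.0], [[1.0]] * 4)
print(theta, mean_e, lam2, sigma)
```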

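**Properties of Parameter Estimation**

Note that

$$
\Omega^T\Omega =
\begin{bmatrix}
\sum_{k=2}^{N-1} y(k)^2 & \sum_{k=2}^{N-1} y(k)y(k-1) & -\sum_{k=2}^{N-1} y(k)u(k-1) & -\sum_{k=2}^{N-1} y(k)u(k-2)\\
\sum_{k=2}^{N-1} y(k)y(k-1) & \sum_{k=1}^{N-2} y(k)^2 & -\sum_{k=1}^{N-2} y(k)u(k) & -\sum_{k=1}^{N-2} y(k)u(k-1)\\
-\sum_{k=2}^{N-1} y(k)u(k-1) & -\sum_{k=1}^{N-2} y(k)u(k) & \sum_{k=1}^{N-2} u(k)^2 & \sum_{k=1}^{N-2} u(k)u(k-1)\\
-\sum_{k=2}^{N-1} y(k)u(k-2) & -\sum_{k=1}^{N-2} y(k)u(k-1) & \sum_{k=1}^{N-2} u(k)u(k-1) & \sum_{k=0}^{N-3} u(k)^2
\end{bmatrix}
$$

This implies that

$$
\lim_{N\to\infty}\frac{1}{N}\,\Omega^T\Omega =
\begin{bmatrix}
r_y(0) & r_y(1) & -r_{yu}(1) & -r_{yu}(2)\\
r_y(1) & r_y(0) & -r_{yu}(0) & -r_{yu}(1)\\
-r_{yu}(1) & -r_{yu}(0) & r_u(0) & r_u(1)\\
-r_{yu}(2) & -r_{yu}(1) & r_u(1) & r_u(0)
\end{bmatrix}
$$

where $r_y(\tau) = E\{y(k)\,y(k-\tau)\}$ and $r_u(\tau) = E\{u(k)\,u(k-\tau)\}$ are the autocorrelation functions of $\{y(k)\}$ and $\{u(k)\}$, and $r_{yu}(\tau) = E\{y(k)\,u(k-\tau)\}$ is their cross-correlation function (assuming stationary signals, so that the sample averages converge to these expectations).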

**Properties of Parameter Estimation**

Similarly,

$$\lim_{N\to\infty}\frac{1}{N}\,\Omega^T Y = \begin{bmatrix} -r_y(1) & -r_y(2) & r_{yu}(2) & r_{yu}(3)\end{bmatrix}^T$$

Thus, the parameter estimates are related to the autocorrelation and cross-correlation functions of $\{y(k)\}$ and $\{u(k)\}$ as follows:

$$\hat{\theta}_{LS} = \left[\frac{1}{N}\,\Omega^T\Omega\right]^{-1}\left[\frac{1}{N}\,\Omega^T Y\right]$$

$$
\lim_{N\to\infty}\hat{\theta}_{LS} =
\begin{bmatrix}
r_y(0) & r_y(1) & -r_{yu}(1) & -r_{yu}(2)\\
r_y(1) & r_y(0) & -r_{yu}(0) & -r_{yu}(1)\\
-r_{yu}(1) & -r_{yu}(0) & r_u(0) & r_u(1)\\
-r_{yu}(2) & -r_{yu}(1) & r_u(1) & r_u(0)
\end{bmatrix}^{-1}
\begin{bmatrix} -r_y(1)\\ -r_y(2)\\ r_{yu}(2)\\ r_{yu}(3)\end{bmatrix}
$$
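The correlation form of the estimate can be tried on simulated data. In this sketch the sample correlations use the biased $1/N$ normalisation, with $r_{yu}(\tau)$ estimated as $\frac{1}{N}\sum_k y(k)u(k-\tau)$; the "true" system (the two-tank ARX(2,2,2) estimates), the ±1 random input, the noise level and the helper names are hypothetical choices. For large N the result should be close to the true parameters; end effects in the finite sums make it differ slightly from the exact least-squares solution.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def corr(x, z, tau):
    """Sample correlation (1/N) * sum_{k=tau}^{N-1} x(k) z(k - tau), for tau >= 0."""
    N = len(x)
    return sum(x[k] * z[k - tau] for k in range(tau, N)) / N


# Hypothetical true system (n = 2, d = 1), random +/-1 input, white noise with std 0.01.
theta_true = (-1.558, 0.5876, 0.002506, 0.01191)
rng = random.Random(3)
N = 50000
u = [rng.choice([-1.0, 1.0]) for _ in range(N)]
y = [0.0] * N
for k in range(3, N):
    y[k] = (-theta_true[0] * y[k - 1] - theta_true[1] * y[k - 2]
            + theta_true[2] * u[k - 2] + theta_true[3] * u[k - 3] + rng.gauss(0.0, 0.01))

ry = [corr(y, y, t) for t in range(3)]   # r_y(0), r_y(1), r_y(2)
ru = [corr(u, u, t) for t in range(2)]   # r_u(0), r_u(1)
ryu = [corr(y, u, t) for t in range(4)]  # r_yu(0), ..., r_yu(3)

R = [[ry[0], ry[1], -ryu[1], -ryu[2]],
     [ry[1], ry[0], -ryu[0], -ryu[1]],
     [-ryu[1], -ryu[0], ru[0], ru[1]],
     [-ryu[2], -ryu[1], ru[1], ru[0]]]
rhs = [-ry[1], -ry[2], ryu[2], ryu[3]]
theta_corr = solve(R, rhs)
print(theta_corr)
```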

**Properties of Parameter Estimates**

Assume that the true process behaves as

$$y(k) = -\sum_{i=1}^{n} a_{i,T}\,y(k-i) + \sum_{i=1}^{n} b_{i,T}\,u(k-d-i) + e(k), \qquad k = n+d,\; n+d+1,\; \ldots,\; N$$

where $\{a_{i,T}\}$ and $\{b_{i,T}\}$ are the TRUE values of the parameters and $\{e(k)\}$ is a zero-mean white-noise process with variance $\lambda^2$.

Question: will the method of least squares yield unbiased estimates of the true parameter values? In other words, does $\hat{\theta}_{LS} \to \theta_T$ as $N \to \infty$?
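One way to probe the question numerically is to compute the least-squares estimate from data simulated by a known ARX process with white $e(k)$ and watch the error as N grows. This is a sketch under assumed conditions (hypothetical true parameters, ±1 random input, Gaussian noise with standard deviation 0.01, hypothetical helper names); a single simulated case cannot answer the general question.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def ls_fit(y, u):
    """LS estimate of (a1, a2, b1, b2) for the ARX model with n = 2, d = 1."""
    rows = [[-y[k - 1], -y[k - 2], u[k - 2], u[k - 3]] for k in range(3, len(y))]
    Y = y[3:]
    OtO = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    OtY = [sum(r[i] * yk for r, yk in zip(rows, Y)) for i in range(4)]
    return solve(OtO, OtY)


def simulate(theta, N, noise_std, seed):
    """Data from the assumed true ARX process with white Gaussian e(k)."""
    rng = random.Random(seed)
    u = [rng.choice([-1.0, 1.0]) for _ in range(N)]
    y = [0.0] * N
    for k in range(3, N):
        y[k] = (-theta[0] * y[k - 1] - theta[1] * y[k - 2] + theta[2] * u[k - 2]
                + theta[3] * u[k - 3] + rng.gauss(0.0, noise_std))
    return y, u


theta_T = (-1.558, 0.5876, 0.002506, 0.01191)
errors = {}
for N in (500, 5000, 50000):
    y, u = simulate(theta_T, N, 0.01, seed=N)
    theta_hat = ls_fit(y, u)
    errors[N] = max(abs(a - b) for a, b in zip(theta_hat, theta_T))
    print(N, errors[N])
```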
