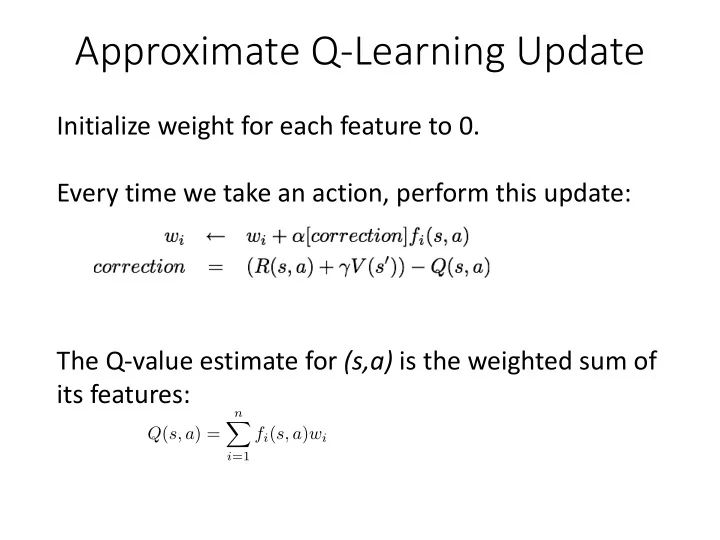

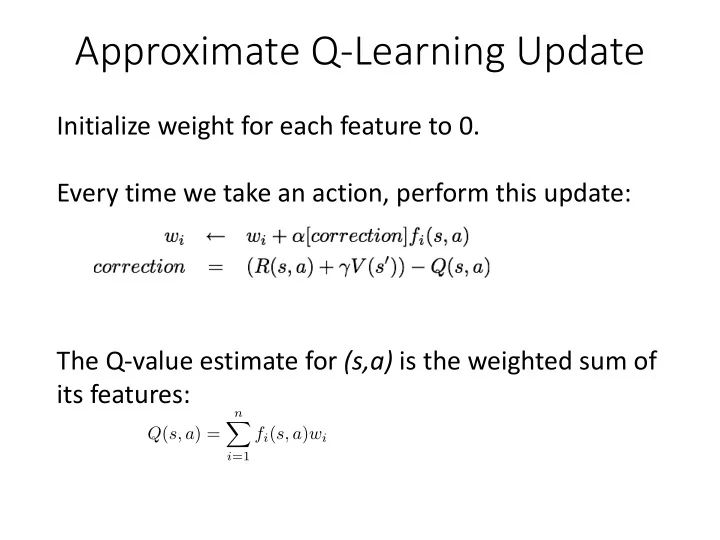

Approximate Q-Learning Update

Initialize the weight for each feature to 0. Every time we take an action, perform this update:

$$w_i \leftarrow w_i + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right] f_i(s, a)$$

The Q-value estimate for (s,a) is the weighted sum of its features:

$$Q(s, a) = \sum_{i=1}^{n} f_i(s, a)\, w_i$$
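The per-action update can be sketched in Python. This is a minimal sketch, assuming the standard approximate Q-learning rule $w_i \leftarrow w_i + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]\,f_i(s,a)$. The names `q_value` and `update_weights`, the dictionary representation of features, and the sample numbers are illustrative assumptions, not part of the slides.

```python
from collections import defaultdict


def q_value(weights, features):
    """Q(s, a) as the weighted sum of feature values f_i(s, a) * w_i."""
    return sum(weights[name] * value for name, value in features.items())


def update_weights(weights, features, reward, next_q_max, alpha, gamma):
    """Apply one approximate Q-learning update in place.

    features   -- f_i(s, a) for the action just taken (hypothetical dict form)
    next_q_max -- max over a' of Q(s', a'); use 0.0 if s' is terminal
    """
    # The correction is a single number shared by every feature.
    correction = (reward + gamma * next_q_max) - q_value(weights, features)
    for name, value in features.items():
        # Each weight moves in proportion to how active its feature was.
        weights[name] += alpha * correction * value
    return correction


# Weights start at 0 for every feature.
weights = defaultdict(float)
features = {"bias": 1.0, "closest-food": 0.5}  # made-up feature values
update_weights(weights, features, reward=10.0, next_q_max=0.0, alpha=0.5, gamma=0.9)
```

Using a `defaultdict(float)` gives every feature an initial weight of 0 without listing the features in advance.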

AQL Update Details

• The weighted sum of features is equivalent to a dot product between the feature and weight vectors:

$$Q(s, a) = \sum_{i=1}^{n} f_i(s, a)\, w_i = \vec{w} \cdot \vec{f}(s, a)$$

• The correction term is the same for all features.
• The correction to each feature is weighted by how “active” that feature was.
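The two properties of the update can be seen with a few lines of Python. This is a rough sketch with made-up weights, feature values, and correction; the helper `dot` is an illustrative name, not from the slides.

```python
def dot(w, f):
    """Dot product of the weight vector and the feature vector."""
    return sum(wi * fi for wi, fi in zip(w, f))


w = [1.0, 2.0, -3.0]  # made-up weights
f = [1.0, 0.0, 0.5]   # made-up feature values f_i(s, a); the second is inactive

q = dot(w, f)         # Q(s, a) = w . f(s, a)

correction = 4.0      # made-up correction term, shared by every feature
alpha = 0.5           # made-up learning rate

# Per-feature step: alpha * correction * f_i
steps = [alpha * correction * fi for fi in f]
new_w = [wi + step for wi, step in zip(w, steps)]
```

The inactive feature (value 0) receives a step of 0, so its weight is untouched, while the other weights move by the same correction scaled by their feature values.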

Exercise: Feature Q-Update

• Reward for eating food: +10
• Reward for losing: -500
• discount: .95
• learning rate: .3

Suppose PacMan is considering the up action.

Old Feature Values:

• w_bias = 1
• w_ghosts = -20
• w_food = 2
• w_eats = 4

Features:

• closest-food
• bias
• eats-food
• #-of-ghosts-1-step-away
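One way to check an answer to this exercise is to run the update in code. The sketch below uses the old weights, discount, and learning rate from the slide, but the feature values for the up action and the outcome of the move are not given in the text, so the ones used here are hypothetical placeholders. It also assumes that w_ghosts, w_food, and w_eats pair with #-of-ghosts-1-step-away, closest-food, and eats-food respectively. The function name `feature_q_update` is illustrative.

```python
def feature_q_update(weights, features, reward, next_q_max, alpha=0.3, gamma=0.95):
    """Return updated weights after one approximate Q-learning step."""
    q_sa = sum(weights[name] * features[name] for name in weights)
    correction = (reward + gamma * next_q_max) - q_sa
    return {
        name: w + alpha * correction * features[name]
        for name, w in weights.items()
    }


# Old weights from the slide.
old_weights = {"bias": 1.0, "ghosts": -20.0, "food": 2.0, "eats": 4.0}

# HYPOTHETICAL feature values for the "up" action (not given in the text).
up_features = {"bias": 1.0, "ghosts": 0.0, "food": 0.5, "eats": 1.0}

# HYPOTHETICAL outcome: PacMan eats food (+10) and the best next Q-value is 0.
new_weights = feature_q_update(old_weights, up_features, reward=10.0, next_q_max=0.0)
```

With these made-up inputs the estimate Q(s, up) is 6, the target is 10, and the shared correction is 4, so the weight of every active feature rises while the ghost weight is unchanged. Substitute the real feature values from the game state to get the exercise's actual answer.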

Notes on Approximate Q-Learning

• Learns weights for a tiny number of features.
• Every feature’s weight is updated every step.
• No longer tracking values for individual (s,a) pairs.
• (s,a) value estimates are calculated from features.
• The weight update is a form of gradient descent.
• We’ve seen this before.
• We’re performing a variant of linear regression.
• Feature extraction is a type of basis change.
• We’ll see these again.
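The gradient-descent reading of the weight update can be made explicit with a short derivation. This is a sketch under stated assumptions: a squared-error loss on a single sample, with the target treated as a constant when differentiating; the symbol $E$ for the loss is introduced here for illustration.

```latex
\begin{aligned}
\text{target} &= r + \gamma \max_{a'} Q(s', a') \\
E(\vec{w}) &= \tfrac{1}{2}\bigl(\text{target} - Q(s, a)\bigr)^2,
  \qquad Q(s, a) = \sum_{i=1}^{n} f_i(s, a)\, w_i \\
\frac{\partial E}{\partial w_i} &= -\bigl(\text{target} - Q(s, a)\bigr)\, f_i(s, a) \\
w_i &\leftarrow w_i - \alpha \frac{\partial E}{\partial w_i}
  = w_i + \alpha \bigl(\text{target} - Q(s, a)\bigr)\, f_i(s, a)
\end{aligned}
```

The last line has the same shape as a single stochastic gradient step in least-squares linear regression, with the features as inputs and the target as the value being fit.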
