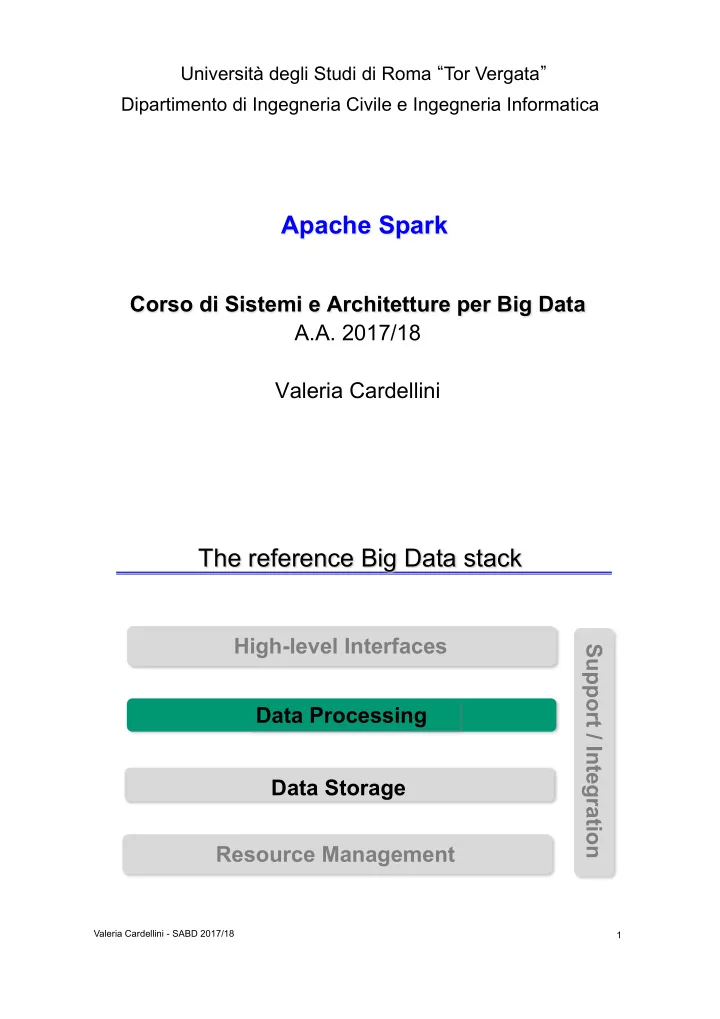

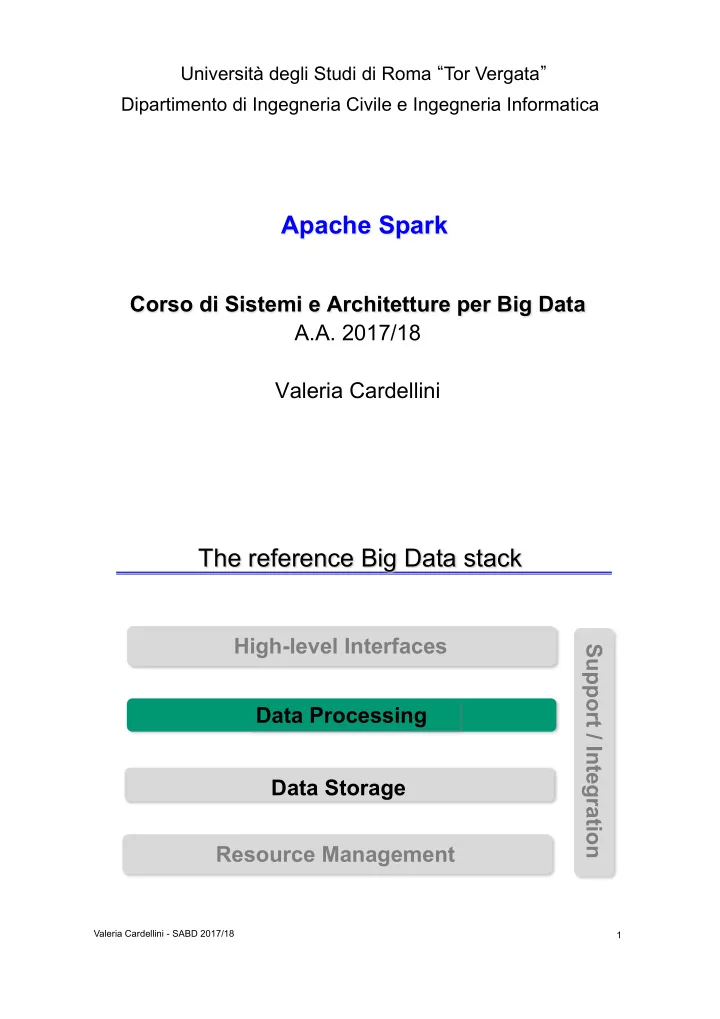

Università degli Studi di Roma “Tor Vergata”
Department of Civil Engineering and Computer Science Engineering

Apache Spark

Course on Systems and Architectures for Big Data (SABD), A.Y. 2017/18
Valeria Cardellini

The reference Big Data stack
• High-level Interfaces
• Support / Integration
• Data Processing
• Data Storage
• Resource Management

Valeria Cardellini - SABD 2017/18

MapReduce: weaknesses and limitations
• Programming model
  – Hard to implement everything as a MapReduce program
  – Multiple MapReduce steps can be needed even for simple operations
  – Lack of control, structures and data types
• No native support for iteration
  – Each iteration writes/reads data to/from disk, leading to overhead
  – Need to design algorithms that minimize the number of iterations
• Efficiency (recall HDFS)
  – High communication cost: computation (map), communication (shuffle), computation (reduce)
  – Frequent writing of output to disk
  – Limited exploitation of main memory
• Not feasible for real-time processing
  – A MapReduce job must scan the entire input
  – Stream processing and random access are not possible
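The iteration overhead can be made concrete with a plain-Python toy. This is not Hadoop code. It chains several MapReduce-style rounds and materializes every round's output to a JSON file, which the next round then reads back. The function names, the file layout and the per-round computation (moving each value halfway toward the mean) are hypothetical choices made only for illustration.

```python
import json
import os
import tempfile


def one_round(values):
    # Hypothetical per-round computation: move each value halfway toward the mean
    mean = sum(values) / len(values)
    return [v + (mean - v) / 2 for v in values]


def run_iterative_job(values, iterations, workdir):
    """Chain MapReduce-style rounds, materializing each round's output on disk."""
    path = os.path.join(workdir, "input.json")
    with open(path, "w") as f:
        json.dump(values, f)
    for i in range(iterations):
        with open(path) as f:  # every round re-reads its whole input from disk...
            data = json.load(f)
        data = one_round(data)
        path = os.path.join(workdir, f"round-{i}.json")
        with open(path, "w") as f:  # ...and writes its whole output back to disk
            json.dump(data, f)
    with open(path) as f:
        return json.load(f)


with tempfile.TemporaryDirectory() as workdir:
    result = run_iterative_job([0.0, 4.0], iterations=3, workdir=workdir)
    files_written = len(os.listdir(workdir))
```

Each extra iteration adds one full write and one full read of the dataset. With three rounds the toy leaves four files on disk: the input plus one output per round.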

Alternative programming models
• Based on directed acyclic graphs (DAGs)
  – E.g., Spark, Spark Streaming, Storm
• SQL-based
  – We have already analyzed Hive and Pig
• NoSQL databases
  – We have already analyzed HBase, MongoDB, Cassandra, …
• Based on Bulk Synchronous Parallel (BSP)
  – Developed by Leslie Valiant during the 1980s
  – Considers communication actions en masse
  – Suitable for graph analytics at massive scale and massive scientific computations (e.g., matrix, graph and network algorithms)
  – Examples: Google’s Pregel, Apache Hama, Apache Giraph
  – Apache Giraph: open-source counterpart to Pregel, used at Facebook to analyze the users’ social graph
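The BSP superstep structure can be sketched as follows, in the spirit of the "propagate the maximum value" example often used to introduce Pregel. In each superstep every vertex computes locally on the messages delivered at the previous barrier and buffers its outgoing messages. At the barrier, all buffered messages are delivered en masse. This is a single-process toy, not the Pregel, Hama or Giraph API. It assumes the graph is a dict that maps every vertex to its list of out-neighbours.

```python
def bsp_max_value(graph, values, max_supersteps=100):
    """Propagate the maximum value through a directed graph, superstep by superstep.

    graph:  dict vertex -> list of out-neighbours (every neighbour must be a key)
    values: dict vertex -> initial value
    """
    values = dict(values)
    # Superstep 0: every vertex sends its value to its out-neighbours
    inbox = {v: [] for v in graph}
    for v, nbrs in graph.items():
        for n in nbrs:
            inbox[n].append(values[v])
    for _ in range(max_supersteps):
        outbox = {v: [] for v in graph}
        changed = False
        for v, msgs in inbox.items():
            # Local computation: uses only messages delivered at the last barrier
            if msgs and max(msgs) > values[v]:
                values[v] = max(msgs)
                changed = True
                # Communication: buffered, not visible until the next superstep
                for n in graph[v]:
                    outbox[n].append(values[v])
        # Barrier synchronization: deliver all buffered messages at once
        inbox = outbox
        if not changed:  # no vertex updated its value: every vertex votes to halt
            break
    return values


ring = {"a": ["b"], "b": ["c"], "c": ["a"]}
final = bsp_max_value(ring, {"a": 3, "b": 6, "c": 2})
```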

Apache Spark
• Fast and general-purpose engine for Big Data processing
  – Not a modified version of Hadoop
  – It is becoming the leading platform for large-scale SQL, batch processing, stream processing, and machine learning
  – It is evolving into a unified engine for distributed data processing
• In-memory data storage for fast iterative processing
  – At least 10x faster than Hadoop MapReduce
• Suitable for general execution graphs and powerful optimizations
• Compatible with Hadoop’s storage APIs
  – Can read/write to any Hadoop-supported system, including HDFS and HBase

Project history
• Spark project started in 2009
• Developed originally at UC Berkeley’s AMPLab by Matei Zaharia
• Open-sourced in 2010, Apache project since 2013
• In 2013, Zaharia co-founded Databricks
• It is now the most active open-source project for Big Data processing
• Current version (A.Y. 2017/18): 2.3.0

Spark: why a new programming model?
• MapReduce simplified Big Data analysis
  – But it executes jobs in a simple but rigid structure
    • Step to process or transform data (map)
    • Step to synchronize (shuffle)
    • Step to combine results (reduce)
• As soon as MapReduce became popular, users wanted:
  – Iterative computations (e.g., iterative graph algorithms and machine learning algorithms such as stochastic gradient descent and K-means clustering)
  – More efficiency
  – More interactive ad-hoc queries
  – Faster in-memory data sharing across parallel jobs (required by both iterative and interactive applications)

Data sharing in MapReduce
• Slow due to replication, serialization and disk I/O
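The rigid map, shuffle, reduce structure can be sketched for the classic word count in plain Python. This is a single-process illustration, not the Hadoop API, and the function names are hypothetical.

```python
from collections import defaultdict


def map_phase(lines):
    # map: emit (word, 1) for every word of every input record
    for line in lines:
        for word in line.split():
            yield (word, 1)


def shuffle_phase(pairs):
    # shuffle: group all intermediate values by key (the synchronization step)
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    # reduce: combine the values of each key into a single result
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines):
    return reduce_phase(shuffle_phase(map_phase(lines)))
```

Any computation that does not fit this single pass, such as an iterative algorithm, has to be expressed as a chain of such jobs.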

Data sharing in Spark
• Distributed in-memory data sharing: 10x-100x faster than sharing via disk and network

Spark stack
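In Spark, a dataset is marked for in-memory reuse with `rdd.cache()` (or `persist()`). The class below is a hypothetical plain-Python stand-in for that idea, not Spark's API. The dataset is computed the first time a job needs it and then kept in memory, so later queries share it instead of recomputing it or re-reading it from disk. The log lines and `load_log` are made-up placeholders.

```python
class InMemoryDataset:
    """Toy model of in-memory data sharing (hypothetical class, not Spark's API)."""

    def __init__(self, compute):
        self._compute = compute  # produces the data (e.g., load and parse a large file)
        self._data = None
        self.times_computed = 0

    def get(self):
        if self._data is None:  # first use: compute and keep the result in memory
            self._data = self._compute()
            self.times_computed += 1
        return self._data


def load_log():
    # Hypothetical stand-in for an expensive load-and-parse step
    return ["ERROR disk full", "INFO started", "ERROR timeout", "WARN slow"]


logs = InMemoryDataset(load_log)
# Two interactive queries share the same in-memory data
errors = sum(1 for line in logs.get() if line.startswith("ERROR"))
warnings = sum(1 for line in logs.get() if line.startswith("WARN"))
```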

Spark Core
• Provides basic functionalities (including task scheduling, memory management, fault recovery, and interaction with storage systems) used by the other components
• Provides a data abstraction called resilient distributed dataset (RDD), a collection of items distributed across many compute nodes that can be manipulated in parallel
  – Spark Core provides many APIs for building and manipulating these collections
• Written in Scala, with APIs for Java, Python and R

Spark as a unified engine
• A number of integrated higher-level libraries built on top of Spark
• Spark SQL
  – Spark’s package for working with structured data
  – Allows querying data via SQL as well as the Apache Hive variant of SQL (HiveQL), and supports many data sources, including Hive tables, Parquet, and JSON
  – Extends the Spark RDD API
• Spark Streaming
  – Enables processing of live data streams
  – Extends the Spark RDD API

Spark as a unified engine
• MLlib
  – Library that provides many distributed machine learning (ML) algorithms, including feature extraction, classification, regression, clustering, and recommendation
• GraphX
  – Library that provides an API for manipulating graphs and performing graph-parallel computations
  – Also includes common graph algorithms (e.g., PageRank)
  – Extends the Spark RDD API

Spark on top of cluster managers
• Spark can exploit many cluster resource managers to execute its applications
• Spark standalone mode
  – Uses a simple FIFO scheduler included in Spark
• Hadoop YARN
• Mesos
  – Mesos and Spark both come from the AMPLab at UC Berkeley
• Kubernetes
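The cluster manager is selected through the master URL passed to Spark (e.g., with `spark-submit --master`). The function below is a simplified sketch, not part of Spark. It maps the common master URL forms (`local`, `local[K]`, `local[*]`, `spark://HOST:PORT`, `yarn`, `mesos://HOST:PORT`, `k8s://HOST:PORT`) to the manager they select. It deliberately ignores rarer forms such as `local[K,F]`, and the returned labels are arbitrary names chosen here.

```python
import re


def cluster_manager_for(master):
    """Simplified mapping from a Spark master URL to the cluster manager it selects."""
    if master == "local" or re.fullmatch(r"local\[(\d+|\*)\]", master):
        return "local"  # no cluster manager: everything runs in a single JVM
    if master.startswith("spark://"):
        return "standalone"  # Spark's own standalone cluster manager
    if master == "yarn":
        return "yarn"  # Hadoop YARN, located through the Hadoop configuration
    if master.startswith("mesos://"):
        return "mesos"
    if master.startswith("k8s://"):
        return "kubernetes"
    raise ValueError(f"unrecognized master URL: {master}")
```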

Spark architecture
• Master/worker architecture
• Driver program that talks to the master
• Master manages workers in which executors run
http://spark.apache.org/docs/latest/cluster-overview.html

Spark architecture
• Applications run as independent sets of processes on a cluster, coordinated by the SparkContext object in the main program (the driver program)
• Each application gets its own executor processes, which stay up for the duration of the whole application and run tasks in multiple threads
• To run on a cluster, the SparkContext can connect to a cluster manager (Spark’s standalone cluster manager, Mesos or YARN), which allocates resources across applications
• Once connected, Spark acquires executors on nodes in the cluster, which run computations and store data. Next, it sends the application code to the executors. Finally, SparkContext sends tasks to the executors to run

Spark data flow
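The driver/executor interaction described above can be sketched as a single-process toy. The "driver" splits the data into partitions, ships the same task code once per partition, and gathers the per-partition results. A thread pool stands in for the task threads of an executor. Real Spark executors are separate JVM processes on worker nodes, and the names `run_job` and `split_into_partitions` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    # Contiguous slices whose sizes differ by at most one element
    size, rem = divmod(len(data), num_partitions)
    parts, start = [], 0
    for i in range(num_partitions):
        end = start + size + (1 if i < rem else 0)
        parts.append(data[start:end])
        start = end
    return parts


def run_job(data, task_fn, num_partitions=4, num_executor_threads=2):
    """Toy driver: one task per partition, run by a pool of executor threads."""
    partitions = split_into_partitions(data, num_partitions)
    with ThreadPoolExecutor(max_workers=num_executor_threads) as executor:
        # The driver sends the same task code to every partition...
        results = list(executor.map(task_fn, partitions))
    # ...and gathers the per-partition results, in partition order
    return results


partial_sums = run_job(list(range(10)), sum, num_partitions=4, num_executor_threads=2)
total = sum(partial_sums)  # final combination on the driver side
```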

Spark programming model

Resilient Distributed Dataset (RDD)
• RDDs are the key programming abstraction in Spark: a distributed memory abstraction
• Immutable, partitioned and fault-tolerant collection of elements that can be manipulated in parallel
  – Like a LinkedList<MyObjects>
  – Cached in memory across the cluster nodes
• Each node of the cluster that is used to run an application contains at least one partition of the RDD(s) defined in the application
• Stored in the main memory of the executors running in the worker nodes (when possible) or on the node’s local disk (if there is not enough main memory)
  – Can persist in memory, on disk, or both
• Code invoked on an RDD is executed in parallel
  – Each executor of a worker node runs the specified code on its partition of the RDD
  – A partition is an atomic piece of information
  – Partitions of an RDD can be stored on different cluster nodes
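The "memory first, local disk if memory is not enough" placement corresponds in PySpark to `rdd.persist(StorageLevel.MEMORY_AND_DISK)`. The class below is only a toy model of that policy, not Spark's storage layer. It measures capacity in elements rather than bytes, and it spills any partition that does not fit to a pickle file. `PartitionStore` and its parameters are hypothetical.

```python
import os
import pickle
import tempfile


class PartitionStore:
    """Toy MEMORY_AND_DISK-like store: keep whole partitions in memory while
    capacity allows, spill the others to local disk (hypothetical, not Spark)."""

    def __init__(self, memory_capacity, spill_dir):
        self.memory_capacity = memory_capacity  # max number of elements held in memory
        self.spill_dir = spill_dir
        self.in_memory = {}  # partition id -> list of elements
        self.on_disk = {}    # partition id -> path of the spilled file
        self._used = 0

    def put(self, partition_id, items):
        items = list(items)
        if self._used + len(items) <= self.memory_capacity:
            self.in_memory[partition_id] = items
            self._used += len(items)
        else:  # not enough memory left for this partition: spill it to disk
            path = os.path.join(self.spill_dir, f"part-{partition_id}.pkl")
            with open(path, "wb") as f:
                pickle.dump(items, f)
            self.on_disk[partition_id] = path

    def get(self, partition_id):
        if partition_id in self.in_memory:
            return self.in_memory[partition_id]
        with open(self.on_disk[partition_id], "rb") as f:  # slower path: read from disk
            return pickle.load(f)


with tempfile.TemporaryDirectory() as spill_dir:
    store = PartitionStore(memory_capacity=5, spill_dir=spill_dir)
    store.put(0, [1, 2, 3])
    store.put(1, [4, 5, 6])  # 3 + 3 > 5: spilled
    store.put(2, [7, 8])     # 3 + 2 <= 5: kept in memory
    spilled_back = store.get(1)
    in_memory_ids = set(store.in_memory)
    on_disk_ids = set(store.on_disk)
```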

Resilient Distributed Dataset (RDD)
• Immutable once constructed
  – i.e., the RDD content cannot be modified
• Automatically rebuilt on failure (but no replication)
  – Spark tracks lineage information to efficiently recompute lost data
  – For each RDD, Spark knows how it has been constructed and can rebuild it if a failure occurs
  – This information is represented by means of a DAG connecting input data and RDDs
• RDD API
  – Clean language-integrated API for Scala, Python, Java, and R
  – Can be used interactively from the Scala console
• An RDD can be created by:
  – Parallelizing existing collections of the hosting programming language (e.g., collections and lists of Scala, Java, Python, or R)
    • Number of partitions specified by the user
    • In RDD API: parallelize
  – Reading (large) files stored in HDFS or any other file system
    • One partition per HDFS block
    • In RDD API: textFile
  – Transforming an existing RDD
    • Number of partitions depends on the transformation type
    • In RDD API: transformation operations (map, filter, flatMap)
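Immutability, user-chosen partitioning and lineage-based recovery can be illustrated with a single-process toy. In PySpark the real entry points would be `sc.parallelize(data, numSlices)` and `sc.textFile(path)`. `TinyRDD` below is a hypothetical class, not Spark's API, and it simplifies in three ways. Transformations are computed eagerly, whereas Spark's transformations are lazy. The lineage is a simple parent chain rather than a general DAG. Partitions are tuples, so their contents cannot be modified.

```python
class TinyRDD:
    """Hypothetical single-process model of an RDD (not Spark's API): a list of
    partitions plus the lineage needed to recompute any lost partition."""

    def __init__(self, partitions, parent=None, fn=None):
        self._partitions = [tuple(p) for p in partitions]  # tuples: contents never change
        self._parent = parent  # lineage: the RDD this one was derived from
        self._fn = fn          # lineage: turns a parent partition into one of ours

    @classmethod
    def parallelize(cls, data, num_slices):
        # As with parallelize, the user chooses the number of partitions
        data = list(data)
        bounds = [len(data) * i // num_slices for i in range(num_slices + 1)]
        return cls([data[bounds[i]:bounds[i + 1]] for i in range(num_slices)])

    def _derive(self, fn):
        # A transformation returns a NEW RDD, one partition per parent partition
        parts = [fn(self.partition(i)) for i in range(self.num_partitions())]
        return TinyRDD(parts, parent=self, fn=fn)

    def map(self, f):
        return self._derive(lambda part: [f(x) for x in part])

    def filter(self, pred):
        return self._derive(lambda part: [x for x in part if pred(x)])

    def flat_map(self, f):
        return self._derive(lambda part: [y for x in part for y in f(x)])

    def num_partitions(self):
        return len(self._partitions)

    def simulate_loss(self, i):
        # Simulate losing the node that held partition i (no replica exists)
        self._partitions[i] = None

    def partition(self, i):
        if self._partitions[i] is None:
            if self._parent is None:
                raise RuntimeError("source partition lost: re-read it from the input")
            # Recompute from lineage: rebuild the parent partition, reapply fn
            self._partitions[i] = tuple(self._fn(self._parent.partition(i)))
        return self._partitions[i]

    def collect(self):
        return [x for i in range(self.num_partitions()) for x in self.partition(i)]


nums = TinyRDD.parallelize(range(10), num_slices=3)
squares_of_evens = nums.filter(lambda x: x % 2 == 0).map(lambda x: x * x)
before = squares_of_evens.collect()
squares_of_evens.simulate_loss(2)    # partition 2 is gone...
after = squares_of_evens.collect()   # ...and is rebuilt from its lineage
words = TinyRDD.parallelize(["to be", "or not"], num_slices=2).flat_map(str.split)
```

No copy of the lost partition is kept anywhere. It is recovered only because each RDD remembers its parent and the function that produced it.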
