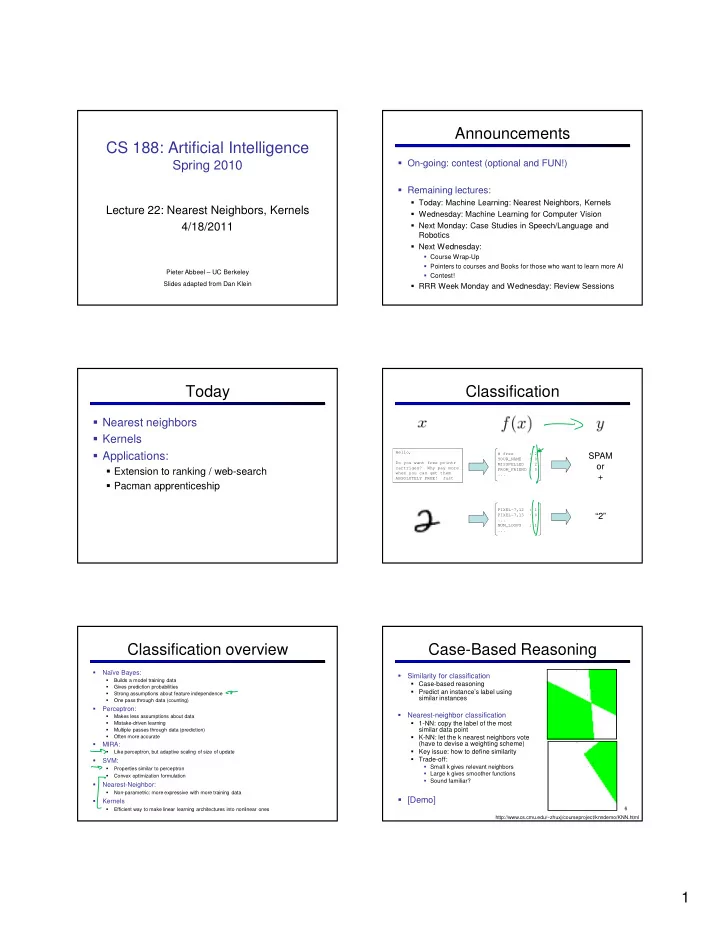

CS 188: Artificial Intelligence
Spring 2010
Lecture 22: Nearest Neighbors, Kernels
4/18/2011
Pieter Abbeel – UC Berkeley
Slides adapted from Dan Klein

Announcements
- On-going: contest (optional and FUN!)
- Remaining lectures:
  - Today: Machine Learning: Nearest Neighbors, Kernels
  - Wednesday: Machine Learning for Computer Vision
  - Next Monday: Case Studies in Speech/Language and Robotics
  - Next Wednesday:
    - Course Wrap-Up
    - Pointers to courses and books for those who want to learn more AI
    - Contest!
- RRR Week Monday and Wednesday: Review Sessions

Today
- Nearest neighbors
- Kernels
- Applications:
  - Extension to ranking / web-search
  - Pacman apprenticeship

Classification
- Spam example: “Hello, Do you want free printr cartriges? Why pay more when you can get them ABSOLUTELY FREE! Just ...”
  - Features: # free : 2, YOUR_NAME : 0, MISSPELLED : 2, FROM_FRIEND : 0, ...
  - Label: SPAM or +
- Digit example: an image of a handwritten digit
  - Features: PIXEL-7,12 : 1, PIXEL-7,13 : 0, ..., NUM_LOOPS : 1, ...
  - Label: “2”

Classification overview
- Naïve Bayes:
  - Builds a model of the training data
  - Gives prediction probabilities
  - Strong assumptions about feature independence
  - One pass through data (counting)
- Perceptron:
  - Makes fewer assumptions about data
  - Mistake-driven learning
  - Multiple passes through data (prediction)
  - Often more accurate
- MIRA:
  - Like perceptron, but adaptive scaling of size of update
- SVM:
  - Properties similar to perceptron
  - Convex optimization formulation
- Nearest-Neighbor:
  - Non-parametric: more expressive with more training data
- Kernels
  - Efficient way to make linear learning architectures into nonlinear ones

Case-Based Reasoning
- Similarity for classification
  - Case-based reasoning
  - Predict an instance’s label using similar instances
- Nearest-neighbor classification
  - 1-NN: copy the label of the most similar data point
  - K-NN: let the k nearest neighbors vote (have to devise a weighting scheme)
- Key issue: how to define similarity
- Trade-off:
  - Small k gives relevant neighbors
  - Large k gives smoother functions
  - Sound familiar?
- [Demo] http://www.cs.cmu.edu/~zhuxj/courseproject/knndemo/KNN.html
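The 1-NN and K-NN rules from the Case-Based Reasoning slide can be sketched in a few lines. This is a minimal illustration, not the course's implementation: the Euclidean distance, the unweighted majority vote, and the toy data and names (`knn_predict`, `TRAIN`) are all assumptions made for the example.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Distance between two equal-length feature vectors (an assumed choice)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train, query, k=1, distance=euclidean):
    """Label `query` by an unweighted vote among its k nearest training points.

    `train` is a list of (vector, label) pairs. With k=1 this copies the
    label of the single most similar point.
    """
    neighbors = sorted(train, key=lambda ex: distance(ex[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: three "ham" points near the origin, two "spam" near (1, 1).
TRAIN = [
    ((0.0, 0.0), "ham"),
    ((0.1, 0.2), "ham"),
    ((0.2, 0.1), "ham"),
    ((1.0, 1.0), "spam"),
    ((0.9, 1.1), "spam"),
]
```

On this toy set a query at (0.95, 1.0) is labelled "spam" for k = 1 and k = 3, but "ham" for k = 5, where every training point votes. That is the trade-off on the slide: a small k keeps the neighbors relevant, a large k smooths the decision toward the majority class.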

Parametric / Non-parametric
- Parametric models:
  - Fixed set of parameters
  - More data means better settings
- Non-parametric models:
  - Complexity of the classifier increases with data
  - Better in the limit, often worse in the non-limit
- (K)NN is non-parametric
- [Figure panels: Truth, 2 Examples, 10 Examples, 100 Examples, 10000 Examples]

Nearest-Neighbor Classification
- Nearest neighbor for digits:
  - Take new image
  - Compare to all training images
  - Assign based on closest example
- Encoding: image is vector of intensities
- What’s the similarity function?
  - Dot product of two image vectors?
  - Usually normalize vectors so ||x|| = 1
  - min = 0 (when?), max = 1 (when?)

Basic Similarity
- Many similarities based on feature dot products
- If features are just the pixels, the similarity is the dot product of the pixel vectors
- Note: not all similarities are of this form

Invariant Metrics
- Better distances use knowledge about vision
- Invariant metrics:
  - Similarities are invariant under certain transformations
  - Rotation, scaling, translation, stroke-thickness…
  - E.g.:
    - 16 x 16 = 256 pixels; a point in 256-dim space
    - Small similarity in R^256 (why?)
  - Variety of invariant metrics in literature
  - Viable alternative: transform training examples such that training set includes all variations

Rotation Invariant Metrics
- Each example is now a curve in R^256
- Rotation invariant similarity: s' = max s(r(·), r(·))
- E.g. highest similarity between images’ rotation lines

Classification overview
- Naïve Bayes
- Perceptron, MIRA
- SVM
- Nearest-Neighbor
- Kernels
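The normalized dot-product similarity and the "max over transformations" idea behind invariant metrics can be sketched as follows. This is a hedged toy example: the 1-D "images", the cyclic `shift` standing in for a rotation, and the one-sided maximum (transforming only the first image) are simplifying assumptions, not the metric used in the lecture.

```python
import math


def normalize(v):
    """Scale v to unit length so that ||v|| = 1."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero vector")
    return [x / norm for x in v]


def similarity(a, b):
    """Dot product of the two normalized intensity vectors."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def shift(v, s):
    """Cyclically shift a 1-D 'image' by s positions (stand-in for a rotation)."""
    s %= len(v)
    return list(v[-s:]) + list(v[:-s])


def invariant_similarity(a, b, shifts):
    """Highest similarity between any shifted copy of a and the image b."""
    return max(similarity(shift(a, s), b) for s in shifts)
```

With non-negative intensities the plain similarity is 0 when the two images share no lit pixel and 1 when they are identical up to scale. For example, `[1, 2, 0, 0]` and `[0, 0, 1, 2]` have plain similarity 0, while `invariant_similarity` over shifts 0..3 finds the alignment and returns a value of 1 up to floating-point rounding.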

A Tale of Two Approaches …
- Nearest neighbor-like approaches
  - Can use fancy similarity functions
  - Don’t actually get to do explicit learning
- Perceptron-like approaches
  - Explicit training to reduce empirical error
  - Can’t use fancy similarity, only linear
  - Or can they? Let’s find out!

Perceptron Weights
- What is the final value of a weight w_y of a perceptron?
  - Can it be any real vector?
  - No! It’s built by adding up inputs.
- Can reconstruct weight vectors (the primal representation) from update counts (the dual representation)

Dual Perceptron
- How to classify a new example x?
- If someone tells us the value of K for each pair of examples, never need to build the weight vectors!

Dual Perceptron
- Start with zero counts (alpha)
- Pick up training instances one by one
- Try to classify x_n
- If correct, no change!
- If wrong: lower count of wrong class (for this instance), raise score of right class (for this instance)

Kernelized Perceptron
- If we had a black box (kernel) which told us the dot product of two examples x and y:
  - Could work entirely with the dual representation
  - No need to ever take dot products (“kernel trick”)
- Like nearest neighbor – work with black-box similarities
- Downside: slow if many examples get nonzero alpha

Kernelized MIRA
- Our formula for τ (see last lecture)
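The dual perceptron procedure on these slides (zero counts, one pass per instance, lower the count for the wrong class and raise it for the right class) can be sketched as below. The slide equations did not survive extraction, so this sketch assumes the usual dual form: the score of class y on x is the sum over training instances i of alpha[y][i] * K(x_i, x). The quadratic kernel `(a·b + 1)^2`, the XOR data, and all function names are illustrative assumptions.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def quadratic_kernel(a, b):
    """An assumed kernel choice: (a . b + 1)^2."""
    return (dot(a, b) + 1) ** 2


def score(alpha_y, xs, kernel, x):
    """Dual score of one class: sum of counts times kernel values."""
    return sum(a * kernel(xi, x) for a, xi in zip(alpha_y, xs) if a != 0)


def predict(alpha, xs, kernel, labels, x):
    """Pick the class with the highest dual score (ties go to the first label)."""
    return max(labels, key=lambda y: score(alpha[y], xs, kernel, x))


def train_dual_perceptron(xs, ys, kernel, labels, passes=100):
    """Mistake-driven training that only ever touches the counts alpha."""
    alpha = {y: [0] * len(xs) for y in labels}  # start with zero counts
    for _ in range(passes):
        mistakes = 0
        for n, (x, y_true) in enumerate(zip(xs, ys)):
            y_hat = predict(alpha, xs, kernel, labels, x)
            if y_hat != y_true:
                alpha[y_hat][n] -= 1   # lower count of wrong class for this instance
                alpha[y_true][n] += 1  # raise count of right class for this instance
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: stop early
            break
    return alpha


# XOR: not linearly separable in the original two features.
XS = [(0, 0), (0, 1), (1, 0), (1, 1)]
YS = ["neg", "pos", "pos", "neg"]
LABELS = ["neg", "pos"]
```

No weight vector is ever built: training and prediction only call `kernel`. The cost noted on the slide is visible in `score`, which loops over every training instance with a nonzero count.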

Kernels: Who Cares?
- So far: a very strange way of doing a very simple calculation
- “Kernel trick”: we can substitute any* similarity function in place of the dot product
- Lets us learn new kinds of hypothesis
- * Fine print: if your kernel doesn’t satisfy certain technical requirements, lots of proofs break. E.g. convergence, mistake bounds. In practice, illegal kernels sometimes work (but not always).

Non-Linear Separators
- Data that is linearly separable (with some noise) works out great
- But what are we going to do if the dataset is just too hard?
- How about… mapping data to a higher-dimensional space (e.g. from x to x and x^2)
- This and next few slides adapted from Ray Mooney, UT

Non-Linear Separators
- General idea: the original feature space can always be mapped to some higher-dimensional feature space where the training set is separable: Φ: x → φ(x)

Some Kernels
- Kernels implicitly map original vectors to higher dimensional spaces, take the dot product there, and hand the result back
- Linear kernel: φ(x) = x
- Quadratic kernel:

Some Kernels (2)
- Polynomial kernel:
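The kernel formulas on these slides were lost in extraction, so the sketch below uses commonly cited forms as assumptions: linear `a·b`, polynomial `(a·b + 1)^d` (quadratic when d = 2), and Gaussian `exp(-||a - b||^2 / (2 sigma^2))`. The explicit map `quadratic_features` is included only to show that the quadratic kernel returns the same number as a dot product in the higher-dimensional space; all names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def linear_kernel(a, b):
    """phi(x) = x: the kernel is the ordinary dot product."""
    return dot(a, b)


def polynomial_kernel(a, b, d=2):
    """Assumed form (a . b + 1)^d; d=2 gives the quadratic kernel."""
    return (dot(a, b) + 1) ** d


def rbf_kernel(a, b, sigma=1.0):
    """Assumed Gaussian form exp(-||a - b||^2 / (2 sigma^2))."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2 * sigma ** 2))


def quadratic_features(x):
    """Explicit phi for the quadratic kernel: 1, sqrt(2)*x_i, and all x_i*x_j."""
    feats = [1.0]
    feats += [math.sqrt(2) * xi for xi in x]
    feats += [xi * xj for xi in x for xj in x]
    return feats
```

For n original features the explicit map has 1 + n + n^2 entries, while `polynomial_kernel` needs only one n-dimensional dot product and a square. For a = (1, 2) and b = (3, 4), both routes give 144 (the explicit route up to floating-point rounding).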

Some Kernels (3)
- Kernels implicitly map original vectors to higher dimensional spaces, take the dot product there, and hand the result back
- Radial Basis Function (or Gaussian) Kernel: infinite dimensional representation
- Discrete kernels: e.g. string kernels
  - Features: all possible strings up to some length
  - To compute kernel: don’t need to enumerate all substrings for each word, but only need to find strings appearing in both x and x’

Why Kernels?
- Can’t you just add these features on your own (e.g. add all pairs of features instead of using the quadratic kernel)?
  - Yes, in principle, just compute them
  - No need to modify any algorithms
  - But, number of features can get large (or infinite)
- Kernels let us compute with these features implicitly
  - Example: implicit dot product in polynomial, Gaussian and string kernel takes much less space and time per dot product
  - Of course, there’s the cost for using the pure dual algorithms: you need to compute the similarity to every training datum

Recap: Classification
- Classification systems:
  - Supervised learning
  - Make a prediction given evidence
  - We’ve seen several methods for this
  - Useful when you have labeled data

Extension: Web Search
- Information retrieval:
  - Given information needs, produce information
  - Includes, e.g. web search, question answering, and classic IR
- Web search: not exactly classification, but rather ranking
- Example query: x = “Apple Computers”

Feature-Based Ranking
- Example query: x = “Apple Computers”

Perceptron for Ranking
- Inputs
- Candidates
- Many feature vectors:
- One weight vector:
- Prediction:
- Update (if wrong):
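The Perceptron for Ranking slide lists its ingredients but its formulas were lost. A minimal sketch, assuming the standard form (one weight vector w, a feature vector f(x, y) per candidate y, prediction = the candidate with the highest w · f(x, y), and on a mistake add the correct candidate's features and subtract the predicted candidate's), might look like this. The feature function `overlap_features` and the toy query data are hypothetical.

```python
def dot(w, f):
    """Sparse dot product between a weight dict and a feature dict."""
    return sum(w.get(name, 0.0) * value for name, value in f.items())


def overlap_features(query, candidate):
    """Hypothetical f(x, y): shared-word count and an exact-phrase flag."""
    q_words = set(query.lower().split())
    c_words = set(candidate.lower().split())
    return {
        "overlap": float(len(q_words & c_words)),
        "exact_phrase": 1.0 if query.lower() in candidate.lower() else 0.0,
    }


def rank_predict(w, feats, x, candidates):
    """Return the top-scoring candidate (ties go to the earliest candidate)."""
    return max(candidates, key=lambda y: dot(w, feats(x, y)))


def train_ranking_perceptron(data, feats, passes=5):
    """`data` is a list of (query, candidates, correct_candidate) triples."""
    w = {}
    for _ in range(passes):
        for x, candidates, y_star in data:
            y_hat = rank_predict(w, feats, x, candidates)
            if y_hat != y_star:
                for name, value in feats(x, y_star).items():
                    w[name] = w.get(name, 0.0) + value  # toward the correct candidate
                for name, value in feats(x, y_hat).items():
                    w[name] = w.get(name, 0.0) - value  # away from the wrong one
    return w


CANDIDATES = ["apple pie recipe", "apple computers official site", "fruit orchard tours"]
DATA = [("apple computers", CANDIDATES, "apple computers official site")]
```

Unlike multiclass classification, there is a single weight vector shared by all candidates; what changes per candidate is the feature vector, which is why the same weights can rank candidates for a query never seen in training.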

Pacman Apprenticeship!
- Examples are states s
- Candidates are pairs (s,a)
- “Correct” actions: those taken by expert (the “correct” action a*)
- Features defined over (s,a) pairs: f(s,a)
- Score of a q-state (s,a) given by:
- How is this VERY different from reinforcement learning?
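The score formula on this slide was lost in extraction. Assuming it follows the same linear ranking setup as the perceptron for ranking, with states as inputs and actions as candidates, the score, prediction, and mistake-driven update would read as below. Here a' denotes the action the current weights prefer, a symbol introduced only for this sketch.

```latex
\begin{align*}
\text{score}(s, a) &= w \cdot f(s, a) \\
a' &= \arg\max_{a} \; w \cdot f(s, a) \\
w &\leftarrow w + f(s, a^*) - f(s, a') \quad \text{if } a' \neq a^*
\end{align*}
```

Under this reading the weights are fitted so that the expert's action a* outscores every alternative in each observed state.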
