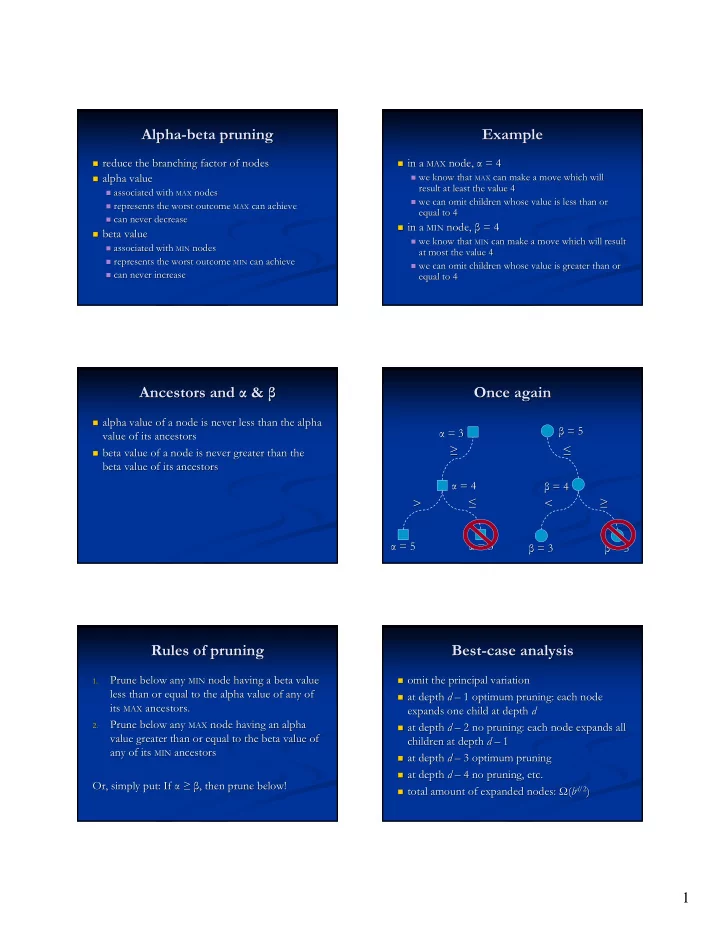

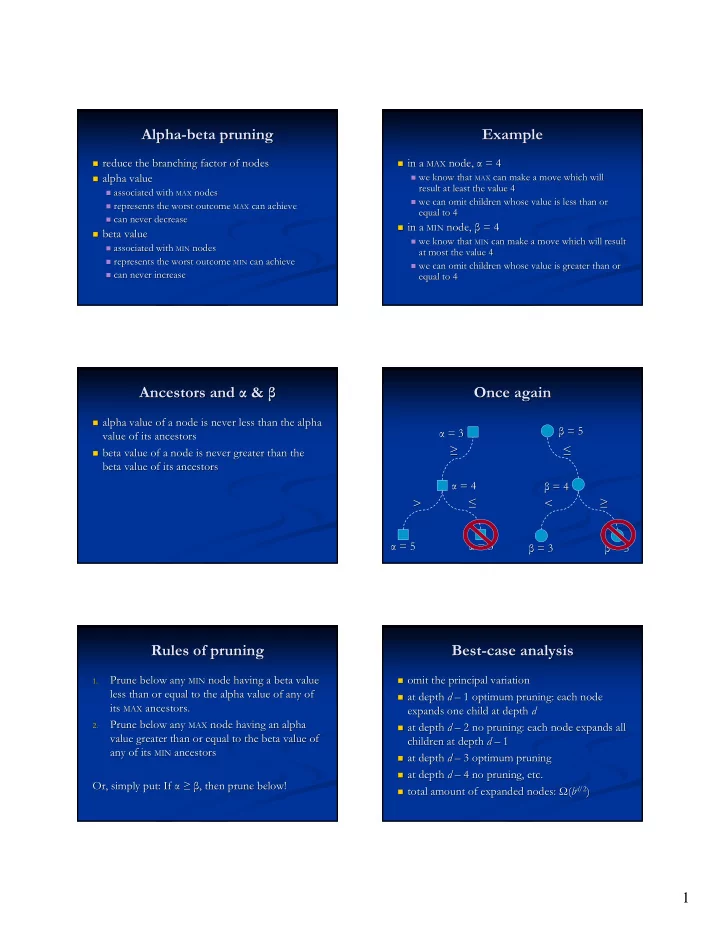

Alpha-beta pruning

- reduce the branching factor of nodes
- alpha value
  - associated with MAX nodes
  - represents the worst outcome MAX can achieve
  - can never decrease
- beta value
  - associated with MIN nodes
  - represents the worst outcome MIN can achieve
  - can never increase

Example

- in a MAX node, α = 4
  - we know that MAX can make a move which will result in at least the value 4
  - we can omit children whose value is less than or equal to 4
- in a MIN node, β = 4
  - we know that MIN can make a move which will result in at most the value 4
  - we can omit children whose value is greater than or equal to 4

Ancestors and α & β

- the alpha value of a node is never less than the alpha value of its ancestors
- the beta value of a node is never greater than the beta value of its ancestors

Once again

[Figure: two example trees with node values β = 5, α = 4, α = 5, α = 3 and α = 3, β = 4, β = 3, β = 5, compared using ≥, ≤, >, <.]

Rules of pruning

1. Prune below any MIN node having a beta value less than or equal to the alpha value of any of its MAX ancestors.
2. Prune below any MAX node having an alpha value greater than or equal to the beta value of any of its MIN ancestors.

- Or, simply put: if α ≥ β, then prune below!

Best-case analysis

- omit the principal variation
- at depth d - 1, optimum pruning: each node expands one child at depth d
- at depth d - 2, no pruning: each node expands all children at depth d - 1
- at depth d - 3, optimum pruning
- at depth d - 4, no pruning, etc.
- total amount of expanded nodes: $\Omega(b^{d/2})$
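
The rule "if α ≥ β, then prune below" can be illustrated with a minimal Python sketch. The tree encoding (a leaf is a number, an inner node is a list of children), the function name `alphabeta`, and the example tree `TREE` are assumptions made for illustration; they do not come from the slides.

```python
import math

# Hypothetical example tree: MAX root with three MIN children, each with three leaves.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]

def alphabeta(node, alpha=-math.inf, beta=math.inf, maximizing=True, visited=None):
    """Return the minimax value of `node`, pruning as soon as alpha >= beta.

    `visited`, if given, collects the leaf values that were actually evaluated.
    """
    if not isinstance(node, list):          # leaf: return its static value
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, visited))
            alpha = max(alpha, value)       # alpha can never decrease
            if alpha >= beta:               # rule 2: prune below this MAX node
                break
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, visited))
        beta = min(beta, value)             # beta can never increase
        if alpha >= beta:                   # rule 1: prune below this MIN node
            break
    return value

evaluated = []
print(alphabeta(TREE, visited=evaluated))   # minimax value of the root
print(evaluated)                            # leaves that were not pruned
```

On this tree the second MIN node is abandoned after its first leaf, because its beta value (2) is already no greater than the alpha value (3) inherited from the root.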

Principal variation search

- alpha-beta range should be small
  - limit the range artificially → aspiration search
  - if search fails, revert to the original range
- game tree node is either
  - α-node: every move has e ≤ α
  - β-node: every move has e ≥ β
  - principal variation node: one or more moves has e > α but none has e ≥ β

Principal variation search (cont'd)

- if we find a principal variation move (i.e., between α and β), assume we have found a principal variation node
  - search the rest of the nodes assuming they will not produce a good move
  - assume that the rest of the nodes have values < α
  - null window: [α, α + ε]
- if the assumption fails, re-search the node
- works well if the principal variation node is likely to get selected first
  - sort the children?
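
A minimal sketch of the null-window idea, written in the common negamax form. Several things here are assumptions rather than content from the slides: the nested-list tree encoding, the function name `pvs`, integer leaf values (so that ε = 1), and the choice to re-search with the full window [α, β] when the null-window assumption fails.

```python
import math

def pvs(node, alpha, beta, color):
    """Principal variation search in negamax form.

    Leaf values are given from MAX's point of view; `color` is +1 when MAX
    is to move and -1 when MIN is to move. Values are assumed to be integers.
    """
    if not isinstance(node, list):
        return color * node
    first = True
    for child in node:
        if first:
            # Treat the first child as the principal variation: full window.
            score = -pvs(child, -beta, -alpha, -color)
            first = False
        else:
            # Assume the remaining children are no better: null window [alpha, alpha + 1].
            score = -pvs(child, -alpha - 1, -alpha, -color)
            if alpha < score < beta:
                # The assumption failed: re-search this child with the full window.
                score = -pvs(child, -beta, -alpha, -color)
        alpha = max(alpha, score)
        if alpha >= beta:
            break                            # ordinary alpha-beta cutoff
    return alpha

# Hypothetical trees: in the second one the better move comes last, forcing a re-search.
print(pvs([[3, 12, 8], [2, 4, 6], [14, 5, 2]], -math.inf, math.inf, 1))
print(pvs([[3, 5], [6, 9]], -math.inf, math.inf, 1))
```

The re-search is the price paid for a wrong guess, which is why ordering the children so that the best move comes first matters for this method.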

Non-zero sum game: Prisoner's dilemma

- two criminals are arrested and isolated from each other
- the police suspect they have committed a crime together but don't have enough proof
- both are offered a deal: rat on the other one and get a lighter sentence
  - if one defects, he goes free whilst the other gets a long sentence
  - if both defect, both get a medium sentence
  - if neither one defects (i.e., they co-operate with each other), both get a short sentence

Prisoner's dilemma (cont'd)

- two players
- possible moves
  - co-operate
  - defect
- the dilemma: a player cannot make a good decision without knowing what the other will do

Payoffs for prisoner A

| Prisoner A's move | Prisoner B co-operates: keep silent | Prisoner B defects: rat on the other prisoner |
| --- | --- | --- |
| Co-operate: keep silent | Fairly good: 6 months | Bad: 10 years |
| Defect: rat on the other prisoner | Good: no penalty | Mediocre: 5 years |

Payoffs in Chicken

| Driver A's move | Driver B co-operates: swerve | Driver B defects: keep going |
| --- | --- | --- |
| Co-operate: swerve | Fairly good: It's a draw. | Mediocre: I'm chicken... |
| Defect: keep going | Good: I win! | Bad: Crash, boom, bang!! |
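
The payoff table for prisoner A can be checked mechanically. The sketch below encodes the sentences in months and computes A's best response to each of B's moves; the names `SENTENCE_A`, `MOVES`, and `best_response` are hypothetical and chosen only for this illustration.

```python
# Sentence for prisoner A in months, indexed by (A's move, B's move).
# Values follow the payoff table: 6 months, 10 years, no penalty, 5 years.
SENTENCE_A = {
    ("co-operate", "co-operate"): 6,
    ("co-operate", "defect"): 120,
    ("defect", "co-operate"): 0,
    ("defect", "defect"): 60,
}
MOVES = ("co-operate", "defect")

def best_response(b_move):
    """Return the move that minimises A's sentence, given B's move."""
    return min(MOVES, key=lambda a_move: SENTENCE_A[(a_move, b_move)])

for b_move in MOVES:
    print(f"If B plays {b_move!r}, A's best response is {best_response(b_move)!r}")
```

Defecting is the best response to either move, yet mutual defection (5 years each) is worse for A than mutual co-operation (6 months each), which is the tension the slides call the dilemma.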

Payoffs in Battle of Sexes

| Husband's move | Wife co-operates: boxing | Wife defects: opera |
| --- | --- | --- |
| Co-operate: opera | Wife: Very bad; Husband: Very bad | Wife: Good; Husband: Mediocre |
| Defect: boxing | Wife: Mediocre; Husband: Good | Wife: Bad; Husband: Bad |

Iterated prisoner's dilemma

- encounters are repeated
- players have memory of the previous encounters
- R. Axelrod: The Evolution of Cooperation (1984)
  - greedy strategies tend to work poorly
  - altruistic strategies work better, even if judged by self-interest only
- Nash equilibrium: always defect!
  - but sometimes rational decisions are not sensible
- Tit for Tat (A. Rapoport)
  - co-operate on the first iteration
  - do what the opponent did on the previous move
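
A small simulation can make the iterated game concrete. This is only a sketch: it assumes both prisoners face the same sentences as in the payoff table for prisoner A (in months), and the names `tit_for_tat`, `always_defect`, `MONTHS`, and `play` are hypothetical.

```python
# Sentence in months for a player, indexed by (own move, opponent's move).
# "C" = co-operate, "D" = defect; the table is assumed to be symmetric.
MONTHS = {("C", "C"): 6, ("C", "D"): 120, ("D", "C"): 0, ("D", "D"): 60}

def tit_for_tat(opponent_history):
    """Co-operate first, then repeat the opponent's previous move."""
    return "C" if not opponent_history else opponent_history[-1]

def always_defect(opponent_history):
    """Play the one-shot equilibrium move every round."""
    return "D"

def play(strategy_a, strategy_b, rounds):
    """Return the total sentences (months) of players A and B after `rounds` encounters."""
    moves_a, moves_b = [], []
    total_a = total_b = 0
    for _ in range(rounds):
        a = strategy_a(moves_b)   # each player sees only the other's past moves
        b = strategy_b(moves_a)
        moves_a.append(a)
        moves_b.append(b)
        total_a += MONTHS[(a, b)]
        total_b += MONTHS[(b, a)]
    return total_a, total_b

print(play(tit_for_tat, tit_for_tat, 10))
print(play(tit_for_tat, always_defect, 10))
print(play(always_defect, always_defect, 10))
```

Under these assumed payoffs, two Tit for Tat players accumulate far shorter sentences than two constant defectors, and Tit for Tat loses to a defector only by the cost of the first round.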
