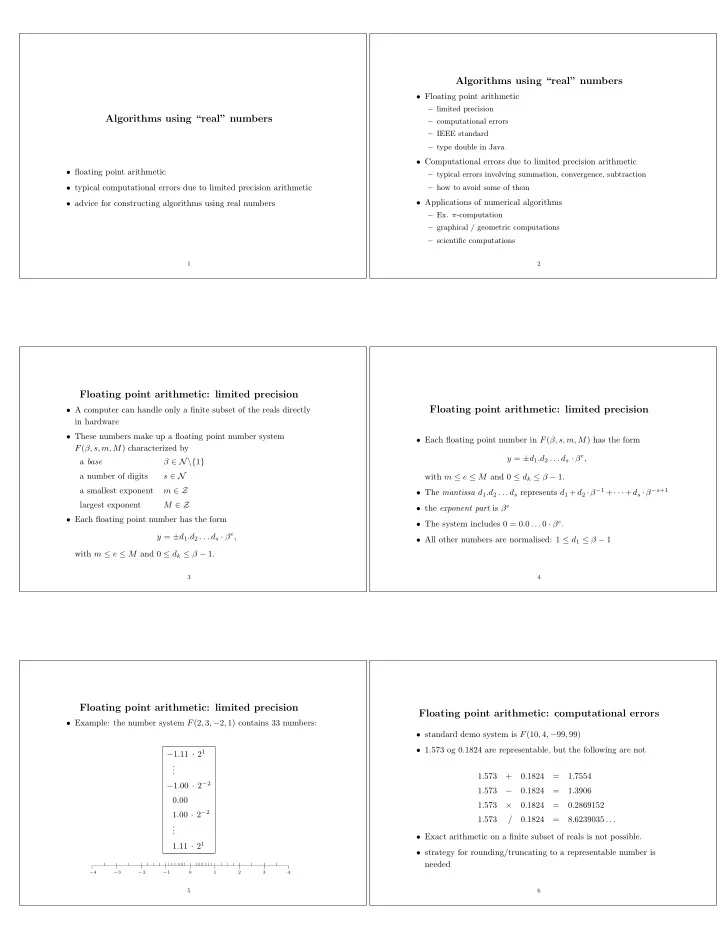

Algorithms using “real” numbers • Floating point arithmetic – limited precision Algorithms using “real” numbers – computational errors – IEEE standard – type double in Java • Computational errors due to limited precision arithmetic • floating point arithmetic – typical errors involving summation, convergence, subtraction • typical computational errors due to limited precision arithmetic – how to avoid some of them • Applications of numerical algorithms • advice for constructing algorithms using real numbers – Ex. π -computation – graphical / geometric computations – scientific computations 1 2 Floating point arithmetic: limited precision • A computer can handle only a finite subset of the reals directly Floating point arithmetic: limited precision in hardware • These numbers make up a floating point number system • Each floating point number in F ( β, s, m, M ) has the form F ( β, s, m, M ) characterized by y = ± d 1 .d 2 . . . d s · β e , β ∈ N\{ 1 } a base a number of digits s ∈ N with m ≤ e ≤ M and 0 ≤ d k ≤ β − 1. • The mantissa d 1 .d 2 . . . d s represents d 1 + d 2 · β − 1 + · · · + d s · β − s +1 a smallest exponent m ∈ Z M ∈ Z largest exponent • the exponent part is β e • Each floating point number has the form • The system includes 0 = 0 . 0 . . . 0 · β e . y = ± d 1 .d 2 . . . d s · β e , • All other numbers are normalised: 1 ≤ d 1 ≤ β − 1 with m ≤ e ≤ M and 0 ≤ d k ≤ β − 1. 3 4 Floating point arithmetic: limited precision Floating point arithmetic: computational errors • Example: the number system F (2 , 3 , − 2 , 1) contains 33 numbers: • standard demo system is F (10 , 4 , − 99 , 99) • 1.573 og 0.1824 are representable, but the following are not − 1 . 11 · 2 1 . . . 1 . 573 + 0 . 1824 = 1 . 7554 − 1 . 00 · 2 − 2 1 . 573 − 0 . 1824 = 1 . 3906 0 . 00 × 1 . 573 0 . 1824 = 0 . 2869152 1 . 00 · 2 − 2 1 . 573 / 0 . 1824 = 8 . 6239035 . . . . . . • Exact arithmetic on a finite subset of reals is not possible. 1 . 11 · 2 1 • strategy for rounding/truncating to a representable number is needed − 4 − 3 − 2 − 1 0 1 2 3 4 5 6

Floating point arithmetic: computational errors Floating point arithmetic: computational errors • Algebraic laws invalid: • rounding/truncation defined by function fl : R �→ M , where M (1 . 418 ⊕ 2937) ⊖ 2936 = 2938 ⊖ 2936 = 2 . 000 is machine numbers 1 . 418 ⊕ (2937 ⊖ 2936) = 1 . 418 ⊕ 1 . 000 = 2 . 418 • machine arithmetic operations ⊕ , ⊖ , ⊗ , ⊘ defined by 1 . 418 ⊗ (2001 ⊖ 2000) 1 . 418 ⊗ 1 . 000 = = 1 . 418 x ⊕ y = fl ( x + y ) etc. (1 . 418 ⊗ 2001) ⊖ (1 . 418 ⊗ 2000) = 2837 ⊖ 2836 = 1 . 000 • In demo system F (10 , 4 , − 99 , 99) define fl by truncation, e.g. • The usual associative and distributive laws are not valid for machine arithmetic. Sometimes: 1 . 573 0 . 1824 = fl (1 . 573 + 0 . 1824) = fl (1 . 7554) = 1 . 755 ⊕ 1 . 573 0 . 1824 = fl (1 . 573 0 . 1824) = fl (1 . 3906) = 1 . 390 ⊖ − 1 . 573 0 . 1824 = fl (1 . 573 0 . 1824) = fl (0 . 2869152) = . 2869 ⊗ × ( a ⊕ b ) ⊖ c a ⊕ ( b ⊖ c ) � = 1 . 573 0 . 1824 = fl (1 . 573 / 0 . 1824) = fl (8 . 6239035 . . . ) = 8 . 623 ⊘ a ⊗ ( b ⊖ c ) � = ( a ⊗ b ) ⊖ ( a ⊗ c ) 7 8 Floating point arithmetic in Java IEEE standard for binary floating point arithmetic • Java implementations use IEEE standard for the primitive types • IEEE standard describes two number systems, approximately float and double on most computers Single precision F (2 , 24 , − 126 , 127) • Use modifier strictfp to enforce IEEE standard independent of Double precision F (2 , 53 , − 1022 , 1023) the underlying hardware. • The IEEE systems has more numbers: • Representable values in type double are ± b 1 .b 2 . . . b 53 · 2 e , – closes the representational gap around zero with xtra numbers where b i ∈ { 0 , 1 } and − 1022 ≤ e ≤ 1023 of the form 0 .b 1 b 2 . . . b 52 2 − 1022 ±∞ and NaN – has representations for ±∞ and NaN (= Not a Number) • Java syntax for ±∞ and NaN is: Double.NEGATIVE INFINITY , • The IEEE system has detailed rules for rounding to representable Double.POSITIVE INFINITY and Double.NaN numbers, ex. • Warning: legal java double literals may not be directly – overflow: 1 / 0 or 2 1023 · 2 has result ∞ representable: literal 0.2 is represented as – underflow: 1 / ∞ or 2 − 1022 − 52 / 2 has result 0 fl (0 . 2 10 ) = fl (0 . 00110011 . . . 2 ) = – 0 / 0 or ∞ − ∞ has result NaN 1 . 1001100110011001100110011001100110011001100110011010 · 2 − 3 9 10 Sources of errors in numerical computations Limited precision arithmetic • The limited precision of arithmetic in R (10 , 4 , − 99 , 99) is illustrated by computing • Hardware errors n →∞ (1 + 1 – Pentium bug n ) n e = lim • Limited precision arithmetic – summation: adding numbers of unequal magnitude (1 ⊕ 1 ⊘ 10 k ) 10 k 1 ⊘ 10 k 1 ⊕ (1 ⊘ 10 k ) k – subtraction: catastrophic cancellation 1 1 . 000 E-1 1 . 100 2 . 591 – convergence: may cycle or diverge 2 1 . 000 E-2 1 . 010 2 . 591 – formula: mathematically equivalent formulae have different 3 1 . 000 E-3 1 . 001 2 . 591 computational properties 4 1 . 000 E-4 1 . 000 1 . 000 • Logical errors 5 1 . 000 E-5 1 . 000 1 . 000 11 12

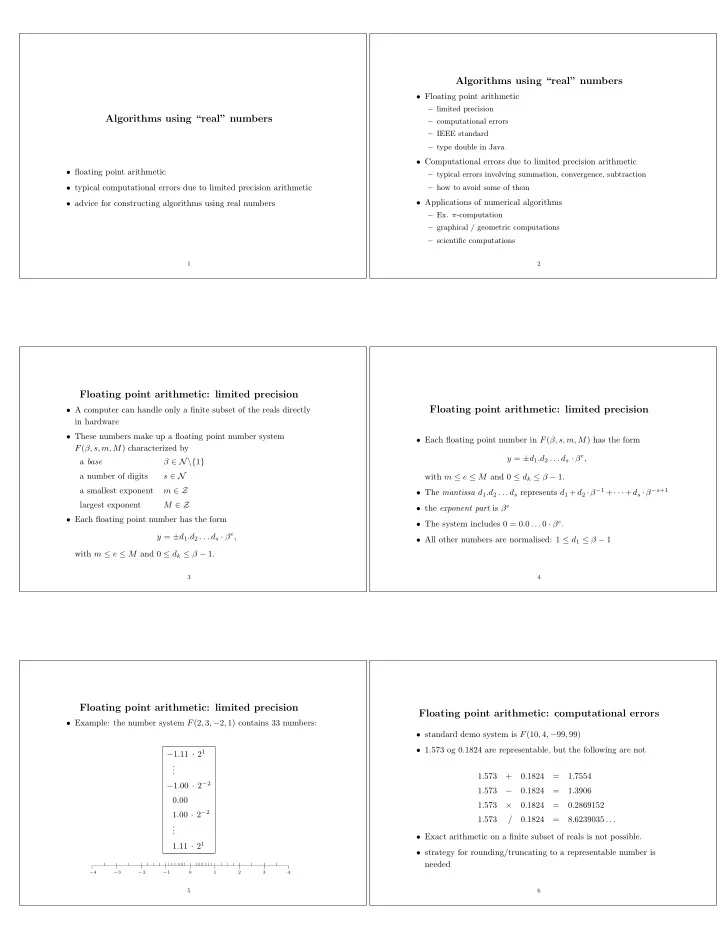

Limited precision arithmetic Limited precision arithmetic • The e -computation in R (2 , 24 , − 126 , 127): (1 ⊕ 1 ⊘ 2 k ) 2 k • The e -computation seems to give a reasonable result, if one stops 1 ⊘ 2 k 1 ⊕ (1 ⊘ 2 k ) k just before the result degenerates to 1? 1 0 . 5 1 . 5 2 . 25 • WRONG: 2 0 . 25 1 . 25 2 . 4414062 3 0 . 125 1 . 125 2 . 5657845 e 4 0 . 0625 1 . 0625 2 . 6379285 R (10 , 4 , − 99 , 99) 2 . 591 . . . . . . . . . . . . R (2 , 24 , − 126 , 127) 2.71 75977 22 2 . 3841858 E-7 1 . 0000002 2 . 7175977 R (2 , 53 , − 1022 , 1023) 2.7182818 08182473 23 1 . 1920929 E-7 1 . 0000001 2 . 7175977 exact 2.71828182845904523536 ... 24 5 . 9604645 E-8 1 . 0 1 . 0 • less than half the significant digits are correct! 25 2 . 9802322 E-8 1 . 0 1 . 0 13 14 Summation • try forward summation: Summation H n = ( · · · ((1 ⊕ 1 2 ) ⊕ 1 3 ) ⊕ · · · ⊕ 1 n ) • The n th harmonic number is defined by ⊕ , ⊖ , ⊗ , ⊘ forward n H n exact n 10 2 . 928 2 . 927 1 i = 1 + 1 2 + 1 3 + · · · + 1 � H n = n 100 5 . 187 5 . 142 i =1 1000 7 . 485 7 . 069 • Associative law is not valid. 10000 9 . 787 7 . 069 • Which order of summation is best? 100000 12 . 09 7 . 069 – from the left? 1000000 14 . 39 7 . 069 – from the right? • The computed sum is constant for n ≥ 1000 since – pairwise in binary tree? 1 • experiment in toy system R (10 , 4 , − 99 , 99) with truncation 1001 = 7 . 069 ⊕ 9 . 990 · 10 − 4 = 7 . 069 7 . 069 ⊕ 15 16 Summation Summation • try backward summation: H n = (1 ⊕ ( 1 2 ⊕ ( 1 3 ⊕ · · · ⊕ 1 • add terms pairwise in a tree structure n ) · · · )) H n = ( · · · ((1 ⊕ 1 2 ) ⊕ ( 1 3 ⊕ 1 4 ⊕ )) · · · ⊕ 1 n ) · · · )) ⊕ n H n exact ⊕ , ⊖ , ⊗ , ⊘ backward forward 10 2 . 928 2 . 928 2 . 927 ⊕ ⊕ 100 5 . 187 5 . 170 5 . 142 1000 7 . 485 7 . 284 7 . 069 ⊕ ⊕ ⊕ ⊕ 10000 9 . 787 8 . 069 7 . 069 100000 12 . 09 8 . 069 7 . 069 1 1 1 1 1 1 1 1 1000000 14 . 39 8 . 069 7 . 069 2 3 4 5 6 7 8 • By adding terms in increasing order, the error is reduced! 17 18

Summation Summation • similar experiment for java double, R (2 , 53 , − 1022 , 1023) n H n exact ⊕ , ⊖ , ⊗ , ⊘ tree-wise backward forward H 100000 error + , − , · , / exact 12 . 090146129863428 10 2 . 928 2 . 928 2 . 928 2 . 927 − 3 · 10 − 15 100 5 . 187 5 . 183 5 . 170 5 . 142 ⊕ , ⊖ , ⊗ , ⊘ “tree-wise” 12 . 0901461298634 31 20 · 10 − 15 ⊕ , ⊖ , ⊗ , ⊘ backward 12 . 0901461298634 08 1000 7 . 485 7 . 477 7 . 284 7 . 069 93 · 10 − 15 10000 9 . 787 9 . 778 8 . 069 7 . 069 ⊕ , ⊖ , ⊗ , ⊘ forward 12 . 090146129863 335 100000 12 . 09 12 . 07 8 . 069 7 . 069 • seems better than R (10 , 4 , − 99 , 99) ? 1000000 14 . 39 14 . 36 8 . 069 7 . 069 • truncation errors add up, but rounding errors may cancel out • By adding equal sized terms the error is diminished further! (though there is no guarantee!) 19 20 Convergence: monotone • Example: find root of polynomial f ( x ) using Newton iteration Summation • compute z = lim k →∞ x k for x k +1 = x k − f ( x k ) f ′ ( x k ) • Problem – compute x 1 + x 2 + · · · + x n • Warning: f ( x k ) y = f ( x ) – ⊕ not associative! • Advice: – Add terms in increasing order α z – Even better: add terms of equal size only x k +1 x k 21 22 Convergence: monotone √ Convergence: monotone • Computing 2 by Newton iteration • Example: squareroot computation, √ a R (10 , 4 , − 99 , 99) R (2 , 53 , − 1022 , 1023) • Find root of f ( x ) = x 2 − a . k x k x k 0 1 . 500 1 . 5000000000000000 • Newton iteration: compute 1 1 . 416 1 . 41 66666666666667 x k +1 = x k − x 2 k − a 2 1 . 414 1 . 41421 56862745099 2 x k 3 1 . 414 1 . 41421356237 46899 using x 0 = 1 4 1 . 414 1 . 414213562373095 1 2( a + 1) 5 1 . 414 1 . 4142135623730950 • Does it hold that 6 1 . 414 1 . 414213562373095 1 k →∞ x k = √ a lim √ exact 2 1 . 414 1 . 4142135623730950 for limited precision arithmetic? • the computation in R (2 , 53 , − 1022 , 1023) seems to be cycling? 23 24

Recommend

More recommend