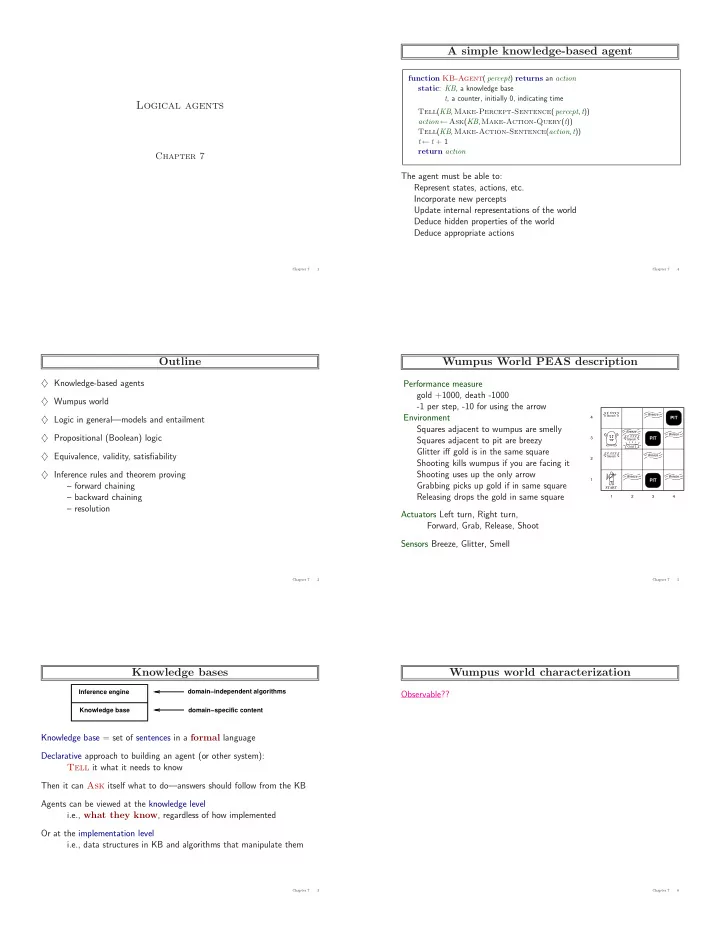

Logical agents
Chapter 7

Outline
♦ Knowledge-based agents
♦ Wumpus world
♦ Logic in general: models and entailment
♦ Propositional (Boolean) logic
♦ Equivalence, validity, satisfiability
♦ Inference rules and theorem proving
   – forward chaining
   – backward chaining
   – resolution

Knowledge bases
Inference engine: domain-independent algorithms
Knowledge base: domain-specific content

Knowledge base = set of sentences in a formal language
Declarative approach to building an agent (or other system): Tell it what it needs to know, then it can Ask itself what to do; answers should follow from the KB.
Agents can be viewed at the knowledge level, i.e., what they know, regardless of how implemented; or at the implementation level, i.e., data structures in KB and algorithms that manipulate them.

A simple knowledge-based agent
```
function KB-Agent(percept) returns an action
    static: KB, a knowledge base
            t, a counter, initially 0, indicating time

    Tell(KB, Make-Percept-Sentence(percept, t))
    action ← Ask(KB, Make-Action-Query(t))
    Tell(KB, Make-Action-Sentence(action, t))
    t ← t + 1
    return action
```
The agent must be able to:
♦ Represent states, actions, etc.
♦ Incorporate new percepts
♦ Update internal representations of the world
♦ Deduce hidden properties of the world
♦ Deduce appropriate actions

Wumpus World PEAS description
Performance measure: gold +1000, death -1000; -1 per step, -10 for using the arrow
Environment:
♦ Squares adjacent to wumpus are smelly
♦ Squares adjacent to pit are breezy
♦ Glitter iff gold is in the same square
♦ Shooting kills wumpus if you are facing it
♦ Shooting uses up the only arrow
♦ Grabbing picks up gold if in same square
♦ Releasing drops the gold in same square
[Figure: 4×4 wumpus world grid with pits (PIT), gold, and Breeze and Stench labels on adjacent squares; the agent starts in the bottom-left square (START)]
Actuators: Left turn, Right turn, Forward, Grab, Release, Shoot
Sensors: Breeze, Glitter, Smell
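The KB-Agent function above can be sketched in Python as follows. This is a minimal sketch under loudly stated assumptions: sentences are plain strings, and `ToyKB` is a hypothetical stand-in whose Ask applies one hard-coded rule (grab when the current percept is a glitter) instead of real logical inference. The helper names mirror the pseudocode but are illustrative only.

```python
from dataclasses import dataclass, field

# Hypothetical sentence constructors; a real agent would build sentences
# in a formal logical language rather than strings.
def make_percept_sentence(percept: str, t: int) -> str:
    return f"Percept({percept},{t})"

def make_action_query(t: int) -> str:
    return f"Action?({t})"

def make_action_sentence(action: str, t: int) -> str:
    return f"Action({action},{t})"

@dataclass
class ToyKB:
    """Hypothetical KB: Tell stores sentences; Ask uses one hard-coded rule."""
    sentences: list[str] = field(default_factory=list)

    def tell(self, sentence: str) -> None:
        self.sentences.append(sentence)

    def ask(self, query: str) -> str:
        t = int(query[len("Action?("):-1])  # recover the time step from the query
        # Toy rule standing in for inference: grab if glitter is perceived now.
        if make_percept_sentence("Glitter", t) in self.sentences:
            return "Grab"
        return "Forward"

class KBAgent:
    def __init__(self, kb: ToyKB) -> None:
        self.kb = kb
        self.t = 0  # counter indicating time, initially 0

    def __call__(self, percept: str) -> str:
        self.kb.tell(make_percept_sentence(percept, self.t))
        action = self.kb.ask(make_action_query(self.t))
        self.kb.tell(make_action_sentence(action, self.t))
        self.t += 1
        return action

agent = KBAgent(ToyKB())
print(agent("None"), agent("Glitter"))  # Forward Grab
```

The agent itself never inspects percepts directly; all decisions go through Tell and Ask, which is the point of the declarative approach. Swapping `ToyKB` for a real inference engine would leave `KBAgent` unchanged.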

Wumpus world characterization
♦ Observable?? No, only local perception
♦ Deterministic?? Yes, outcomes exactly specified
♦ Episodic?? No, sequential at the level of actions
♦ Static?? Yes, Wumpus and Pits do not move
♦ Discrete?? Yes
♦ Single-agent?? Yes, Wumpus is essentially a natural feature
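"Only local perception" can be made concrete with a small sketch that computes the percept in one square from the environment rules of the PEAS description: breezy next to a pit, smelly next to the wumpus, glitter on the gold square. The `World` class and the particular pit, wumpus and gold positions below are hypothetical, chosen for illustration; squares use the slides' [x,y] convention on a 4×4 grid.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class World:
    """Hypothetical 4x4 wumpus world; squares are (x, y) with 1 <= x, y <= size."""
    pits: frozenset[tuple[int, int]]
    wumpus: tuple[int, int]
    gold: tuple[int, int]
    size: int = 4

    def neighbors(self, sq: tuple[int, int]) -> list[tuple[int, int]]:
        x, y = sq
        candidates = [(x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)]
        return [(a, b) for a, b in candidates
                if 1 <= a <= self.size and 1 <= b <= self.size]

    def percept(self, sq: tuple[int, int]) -> dict[str, bool]:
        """Percept at sq depends only on sq and its adjacent squares."""
        adj = self.neighbors(sq)
        return {
            "Breeze": any(n in self.pits for n in adj),  # adjacent to a pit
            "Stench": self.wumpus in adj,                 # adjacent to the wumpus
            "Glitter": sq == self.gold,                   # gold in this square
        }

# Illustrative layout (an assumption, not read off the slide's figure).
world = World(pits=frozenset({(3, 1), (3, 3), (4, 4)}), wumpus=(1, 3), gold=(2, 3))
for sq in [(1, 1), (2, 1), (1, 2), (2, 3)]:
    print(sq, world.percept(sq))  # (2, 3) reports breeze, stench and glitter
```

Because `percept` only reads a square and its neighbors, the agent never observes where the pits or the wumpus actually are; it has to deduce those hidden properties.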

Exploring a wumpus world
[Figures: step-by-step exploration starting from [1,1]; the agent (A) marks squares OK, records breeze (B) and stench (S), flags possible pits (P?), and eventually infers a pit (P) and the wumpus (W)]

Exploring a wumpus world (continued)
[Figures: the agent continues through squares inferred OK and reaches the square with breeze, glitter and stench (BGS)]

Other tight spots
♦ Breeze in (1,2) and (2,1) ⇒ no safe actions
   Assuming pits uniformly distributed, (2,2) has a pit with probability 0.86, vs. 0.31 for (1,3) and (3,1)
♦ Smell in (1,1) ⇒ cannot move
   Can use a strategy of coercion: shoot straight ahead
   – wumpus was there ⇒ dead ⇒ safe
   – wumpus wasn't there ⇒ safe

Logic in general
♦ Logics are formal languages for representing information such that conclusions can be drawn
♦ Syntax defines the sentences in the language
♦ Semantics define the "meaning" of sentences, i.e., define truth of a sentence in a world
E.g., the language of arithmetic:
♦ x + 2 ≥ y is a sentence; x2 + y > is not a sentence
♦ x + 2 ≥ y is true iff the number x + 2 is no less than the number y
♦ x + 2 ≥ y is true in a world where x = 7, y = 1
♦ x + 2 ≥ y is false in a world where x = 0, y = 6

Entailment
Entailment means that one thing follows from another:
   KB ⊨ α
Knowledge base KB entails sentence α if and only if α is true in all worlds where KB is true
♦ E.g., the KB containing "the Giants won" and "the Reds won" entails "Either the Giants won or the Reds won"
♦ E.g., x + y = 4 entails 4 = x + y
Entailment is a relationship between sentences (i.e., syntax) that is based on semantics
Note: brains process syntax (of some sort)
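The 0.86 vs. 0.31 figures for the breeze tight spot can be checked by brute-force enumeration. A minimal sketch, assuming each unvisited square independently holds a pit with prior probability 0.2 (the slide only says "uniformly distributed"; 0.2 is an assumption that reproduces its numbers). Only the frontier squares (1,3), (2,2) and (3,1) affect the observed breezes, so all other squares sum out.

```python
from itertools import product

PRIOR = 0.2  # assumed per-square pit probability (not stated on the slide)
FRONTIER = [(1, 3), (2, 2), (3, 1)]  # unvisited squares next to the breezes

def consistent(pits: set) -> bool:
    """Breeze in (1,2) and (2,1); the visited squares themselves are pit-free."""
    breeze_12 = (1, 3) in pits or (2, 2) in pits  # neighbors of (1,2) besides (1,1)
    breeze_21 = (2, 2) in pits or (3, 1) in pits  # neighbors of (2,1) besides (1,1)
    return breeze_12 and breeze_21

def pit_probability(query) -> float:
    """P(pit in query | observed breezes), by enumerating frontier configurations."""
    total = hit = 0.0
    for bits in product([True, False], repeat=len(FRONTIER)):
        pits = {sq for sq, has_pit in zip(FRONTIER, bits) if has_pit}
        if not consistent(pits):
            continue
        weight = 1.0
        for has_pit in bits:
            weight *= PRIOR if has_pit else 1 - PRIOR
        total += weight
        if query in pits:
            hit += weight
    return hit / total

print(round(pit_probability((2, 2)), 2), round(pit_probability((1, 3)), 2))  # 0.86 0.31
```

Working it by hand: the consistent configurations have total weight 0.232, of which 0.2 has a pit in (2,2) and 0.072 has a pit in (1,3), giving 0.2/0.232 ≈ 0.86 and 0.072/0.232 ≈ 0.31; (3,1) matches (1,3) by symmetry.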

Models
Logicians typically think in terms of models, which are formally structured worlds with respect to which truth can be evaluated
We say m is a model of a sentence α if α is true in m
M(α) is the set of all models of α
Then KB ⊨ α if and only if M(KB) ⊆ M(α)
E.g., KB = Giants won and Reds won, α = Giants won
[Figure: the region M(KB) drawn inside the region M(α)]

Entailment in the wumpus world
Situation after detecting nothing in [1,1], moving right, breeze in [2,1]
[Figure: [1,1] visited (A), breeze (B) in [2,1], the unknown squares [1,2], [2,2] and [3,1] marked ?]
Consider possible models for ?s assuming only pits
3 Boolean choices ⇒ 8 possible models

Wumpus models
[Figure: the 8 possible pit configurations for the ? squares, each drawn as a 3×2 grid with the breezes it produces]
KB = wumpus-world rules + observations
[Figure: the models of KB outlined among the 8]
α1 = "[1,2] is safe", KB ⊨ α1, proved by model checking
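Model checking for α1 can be sketched by enumerating the 8 models over the three ? squares and testing that α1 holds in every model of KB, i.e., M(KB) ⊆ M(α1). A minimal sketch, assuming the only relevant rules are the pit/breeze rules for the visited squares [1,1] and [2,1]; the symbol names such as `P12` ("pit in [1,2]") are illustrative.

```python
from itertools import product

SQUARES = ["P12", "P22", "P31"]  # Pxy: "there is a pit in [x,y]" (the three ?s)

def kb(m: dict) -> bool:
    """Wumpus-world rules + observations, restricted to these squares.
    No breeze in [1,1]: no pit in [1,2] ([2,1] is visited, so already pit-free).
    Breeze in [2,1]: a pit in [2,2] or [3,1] ([1,1] is visited, so pit-free)."""
    no_breeze_11 = not m["P12"]
    breeze_21 = m["P22"] or m["P31"]
    return no_breeze_11 and breeze_21

def alpha1(m: dict) -> bool:
    return not m["P12"]  # "[1,2] is safe"

# All 2^3 = 8 assignments of True/False to the three pit symbols.
MODELS = [dict(zip(SQUARES, bits))
          for bits in product([True, False], repeat=len(SQUARES))]

def entails(kb_fn, alpha_fn) -> bool:
    """KB |= alpha iff alpha is true in every model in which KB is true."""
    return all(alpha_fn(m) for m in MODELS if kb_fn(m))

print(len(MODELS), sum(kb(m) for m in MODELS), entails(kb, alpha1))  # 8 3 True
```

Three of the eight models satisfy KB, and P12 is false in all three, so KB ⊨ α1. Enumeration is sound and complete here but grows as 2^n in the number of symbols.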
