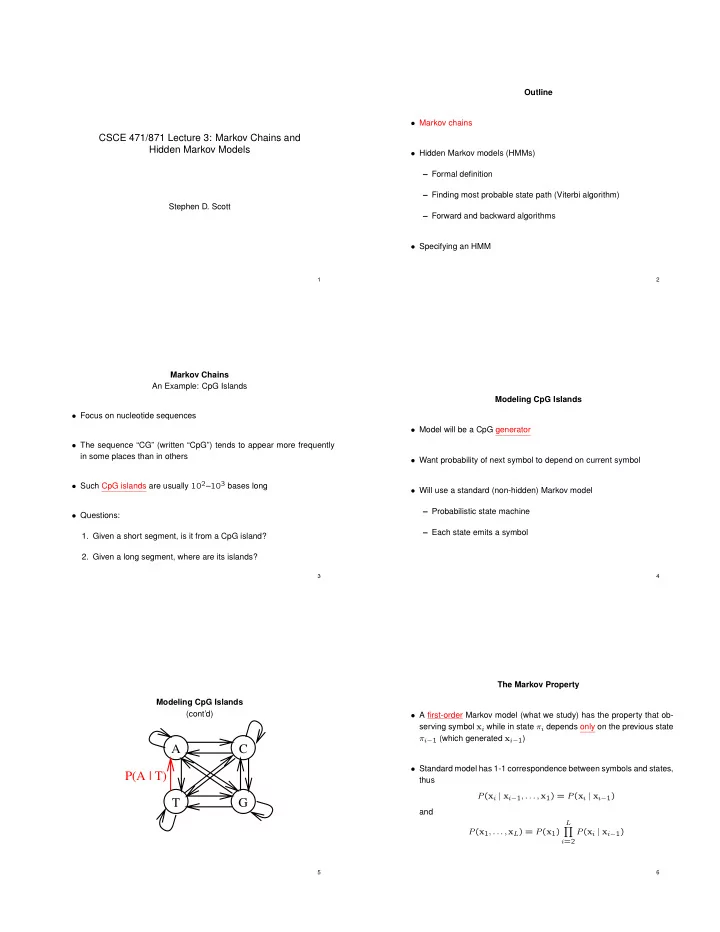

Outline • Markov chains CSCE 471/871 Lecture 3: Markov Chains and Hidden Markov Models • Hidden Markov models (HMMs) – Formal definition – Finding most probable state path (Viterbi algorithm) Stephen D. Scott – Forward and backward algorithms • Specifying an HMM 1 2 Markov Chains An Example: CpG Islands Modeling CpG Islands • Focus on nucleotide sequences • Model will be a CpG generator • The sequence “CG” (written “CpG”) tends to appear more frequently in some places than in others • Want probability of next symbol to depend on current symbol • Such CpG islands are usually 10 2 – 10 3 bases long • Will use a standard (non-hidden) Markov model – Probabilistic state machine • Questions: – Each state emits a symbol 1. Given a short segment, is it from a CpG island? 2. Given a long segment, where are its islands? 3 4 The Markov Property Modeling CpG Islands (cont’d) • A first-order Markov model (what we study) has the property that ob- serving symbol x i while in state ⇡ i depends only on the previous state ⇡ i � 1 (which generated x i � 1 ) A C • Standard model has 1-1 correspondence between symbols and states, P(A | T) thus P ( x i | x i � 1 , . . . , x 1 ) = P ( x i | x i � 1 ) T G and L Y P ( x 1 , . . . , x L ) = P ( x 1 ) P ( x i | x i � 1 ) i =2 5 6

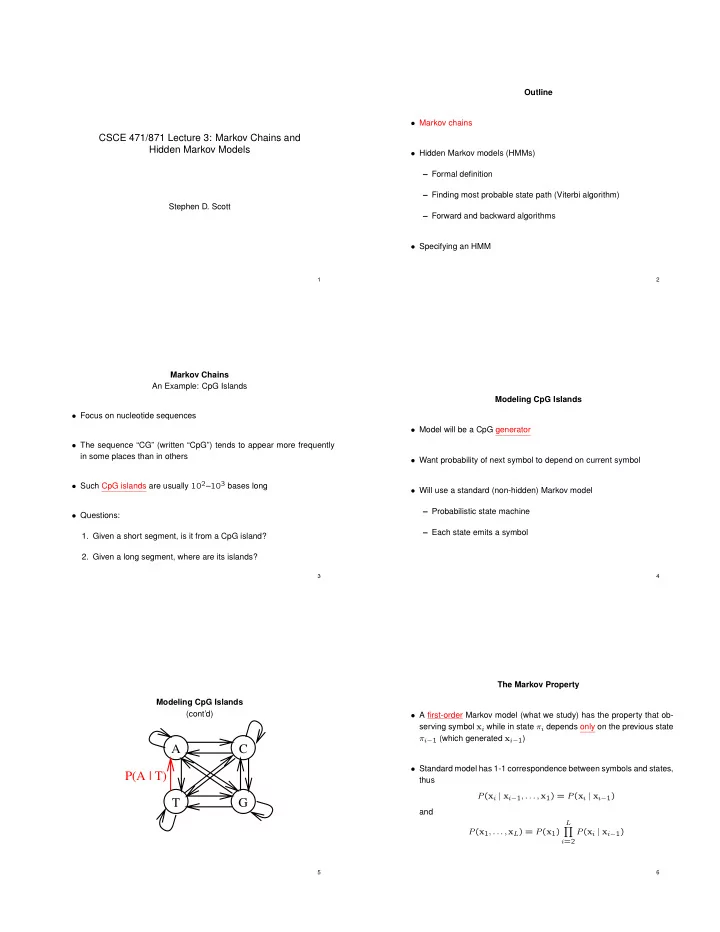

Begin and End States Markov Chains for Discrimination • For convenience, can add special “begin” ( B ) and “end” ( E ) states to • How do we use this to differentiate islands from non-islands? clarify equations and define a distribution over sequence lengths • Define two Markov models: islands (“ + ”) and non-islands (“ � ”) • Emit empty (null) symbols x 0 and x L +1 to mark ends of sequence – Each model gets 4 states (A, C, G, T) – Take training set of known islands and non-islands – Let c + st = number of times symbol t followed symbol s in an island: A C c + E B P + ( t | s ) = ˆ st t 0 c + P T G st 0 • Example probabilities in [Durbin et al., p. 51] • Now score a sequence X = h x 1 , . . . , x L i by summing the log-odds ratios: L +1 Y P ( x 1 , . . . , x L ) = P ( x i | x i � 1 ) i =1 ˆ ! L +1 ˆ ! P + ( x i | x i � 1 ) P ( X | +) X log = log ˆ ˆ P � ( x i | x i � 1 ) • Will represent both with single state named 0 P ( X | � ) i =1 7 8 Hidden Markov Models Outline • Second CpG question: Given a long sequence, where are its islands? – Could use tools just presented by passing a fixed-width window over the sequence and computing scores • Markov chains – Trouble if islands’ lengths vary – Prefer single, unified model for islands vs. non-islands • Hidden Markov models (HMMs) A C T G + + + + – Formal definition [complete connectivity between all pairs] – Finding most probable state path (Viterbi algorithm) A C T G - - - - – Forward and backward algorithms – Within the + group, transition probabilities similar to those for the • Specifying an HMM separate + model, but there is a small chance of switching to a state in the � group 9 10 What’s Hidden in an HMM? What’s Hidden in an HMM? • No longer have one-to-one correspondence between states and emit- (cont’d) ted characters – E.g. was C emitted by C + or C � ? • Must differentiate the symbol sequence X from the state sequence ⇡ = h ⇡ 1 , . . . , ⇡ L i – State transition probabilities same as before: P ( ⇡ i = ` | ⇡ i � 1 = j ) (i.e. P ( ` | j ) ) [In CpG HMM, emission probs discrete and = 0 or 1 ] – Now each state has a prob. of emitting any value: P ( x i = x | ⇡ i = j ) (i.e. P ( x | j ) ) 11 12

Example: The Occasionally Dishonest Casino The Viterbi Algorithm • Assume that a casino is typically fair, but with probability 0.05 it switches to a loaded die, and switches back with probability 0.1 • Probability of seeing symbol sequence X and state sequence ⇡ is L Fair Loaded Y P ( X, ⇡ ) = P ( ⇡ 1 | 0) P ( x i | ⇡ i ) P ( ⇡ i +1 | ⇡ i ) 1: 1/6 1: 1/10 i =1 0.05 2: 1/6 2: 1/10 3: 1/6 3: 1/10 • Can use this to find most likely path: 4: 1/6 4: 1/10 ⇡ ⇤ = argmax P ( X, ⇡ ) 5: 1/6 5: 1/10 ⇡ 0.1 6: 1/6 6: 1/2 and trace it to identify islands (paths through “ + ” states) 0.95 0.9 • There are an exponential number of paths through chain, so how do we find the most likely one? • Given a sequence of rolls, what’s hidden? 13 14 The Viterbi Algorithm The Viterbi Algorithm (cont’d) (cont’d) • Assume that we know (for all k ) v k ( i ) = probability of most likely path • Given the formula, can fill in table with dynamic programming: ending in state k with observation x i – v 0 (0) = 1 , v k (0) = 0 for k > 0 • Then – For i = 1 to L ; for ` = 1 to M (# states) v ` ( i + 1) = P ( x i +1 | ` ) max { v k ( i ) P ( ` | k ) } k ⇤ v ` ( i ) = P ( x i | ` ) max k { v k ( i � 1) P ( ` | k ) } All states at i ⇤ ptr i ( ` ) = argmax k { v k ( i � 1) P ( ` | k ) } – P ( X, ⇡ ⇤ ) = max k { v k ( L ) P (0 | k ) } State at l – ⇡ ⇤ L = argmax k { v k ( L ) P (0 | k ) } +1 i – For i = L to 1 l ⇤ ⇡ ⇤ i � 1 = ptr i ( ⇡ ⇤ i ) • To avoid underflow, use log( v ` ( i )) and add 15 16 The Forward Algorithm The Backward Algorithm • Given a sequence X , find P ( X ) = P ⇡ P ( X, ⇡ ) • Given a sequence X , find the probability that x i was emitted by state k , i.e. • Use dynamic programming like Viterbi, replacing max with sum, and P ( ⇡ i = k | X ) = P ( ⇡ i = k, X ) v k ( i ) with P ( X ) f k ( i ) = P ( x 1 , . . . , x i , ⇡ i = k ) (= prob. of observed sequence through f k ( i ) b k ( i ) x i , stopping in state k ) z }| { z }| { P ( x 1 , . . . , x i , ⇡ i = k ) P ( x i +1 , . . . , x L | ⇡ i = k ) = – f 0 (0) = 1 , f k (0) = 0 for k > 0 P ( X ) | {z } computed by forward alg – For i = 1 to L ; for ` = 1 to M (# states) ⇤ f ` ( i ) = P ( x i | ` ) P • Algorithm: k f k ( i � 1) P ( ` | k ) – P ( X ) = P k f k ( L ) P (0 | k ) – b k ( L ) = P (0 | k ) for all k – For i = L � 1 to 1 ; for k = 1 to M (# states) • To avoid underflow, can again use logs, though exactness of results ⇤ b k ( i ) = P ` P ( ` | k ) P ( x i +1 | ` ) b ` ( i + 1) compromised (Section 3.6) 17 18

Example Use of Forward/Backward Algorithm Outline • Define g ( k ) = 1 if k 2 { A + , C + , G + , T + } and 0 otherwise • Markov chains • Then G ( i | X ) = P k P ( ⇡ i = k | X ) g ( k ) = probability that x i is in an island • Hidden Markov models (HMMs) – Formal definition • For each state k , compute P ( ⇡ i = k | X ) with forward/backward algorithm – Finding most probable state path (Viterbi algorithm) – Forward and backward algorithms • Technique applicable to any HMM where set of states is partitioned into classes • Specifying an HMM – Use to label individual parts of a sequence 19 20 Specifying an HMM When State Sequence Known • Two problems: defining structure (set of states) and parameters (tran- • Estimating parameters when e.g. islands already identified in training sition and emission probabilities) set • Start with latter problem, i.e. given a training set X 1 , . . . , X N of inde- • Let A k ` = number of k ! ` transitions and E k ( b ) = number of pendently generated sequences, learn a good set of parameters ✓ emissions of b in state k 0 1 @X P ( ` | k ) = A k ` / A k ` 0 A • Goal is to maximize the (log) likelihood of seeing the training set given ` 0 that ✓ is the set of parameters for the HMM generating them: 0 1 @X E k ( b 0 ) P ( b | k ) = E k ( b ) / N A X b 0 log( P ( X j ; ✓ )) j =1 21 22 The Baum-Welch Algorithm • Used for estimating parameters when state sequence unknown • Special case of the expectation maximization (EM) algorithm When State Sequence Known • Start with arbitrary P ( ` | k ) and P ( b | k ) , and use to estimate A k ` (cont’d) and E k ( b ) as expected number of occurrences given the training set ⇤ : N L 1 X X f j k ( i ) P ( ` | k ) P ( x j i +1 | ` ) b j • Be careful if little training data available A k ` = ` ( i + 1) P ( X j ) j =1 i =1 – E.g. an unused state k will have undefined parameters (Prob. of transition from k to ` at position i of sequence j , summed over all positions of all sequences) – Workaround: Add pseudocounts r k ` to A k ` and r k ( b ) to E k ( b ) that N N 1 X X X X f j k ( i ) b j reflect prior biases about parobabilities E k ( b ) = P ( ⇡ i = k | X j ) = k ( i ) P ( X j ) j =1 i : x j j =1 i : x j i = b i = b – Increased training data decreases prior’s influence • Use these (& pseudocounts) to recompute P ( ` | k ) and P ( b | k ) – [Sj¨ olander et al. 96] • After each iteration, compute log likelihood and halt if no improvement ⇤ Superscript j corresponds to j th train example 23 24

Recommend

More recommend